This repository contains our implementation of the following paper:

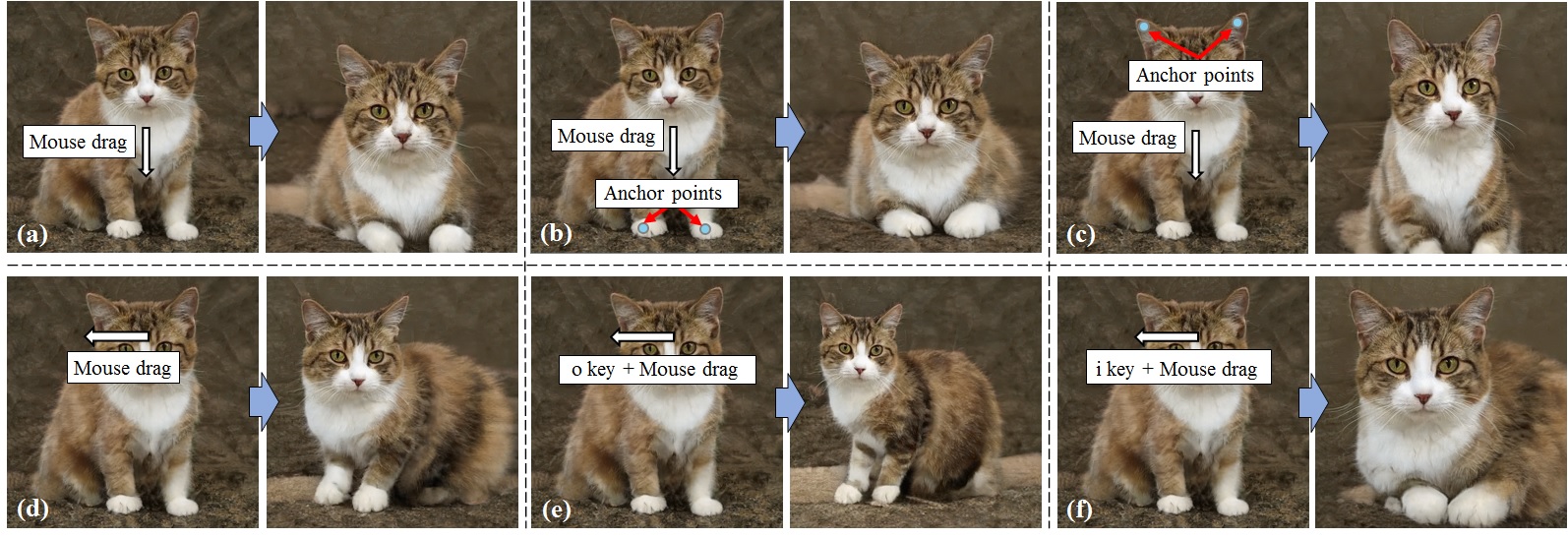

Yuki Endo: "User-Controllable Latent Transformer for StyleGAN Image Layout Editing," Computer Graphics Forum (Pacific Graphics 2022) [Project] [PDF (preprint)]

- Python 3.8

- PyTorch 1.9.0

- Flask

- Others (see env.yml)

Download and decompress our pre-trained models.
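After decompressing, the commands in this README expect the checkpoints at the paths they reference. Below is a minimal Python sketch for checking this. It assumes the archive unpacks into a `pretrained_models/` directory at the repository root. The helper `missing_models` is hypothetical and only looks for the two files named in the example commands.

```python
from pathlib import Path

# Checkpoint paths taken from the example commands in this README.
# Assumption: the pre-trained archive unpacks into pretrained_models/.
EXPECTED_FILES = [
    "pretrained_models/latent_transformer/cat.pt",
    "pretrained_models/stylegan2-cat-config-f.pt",
]


def missing_models(repo_root=".", expected=EXPECTED_FILES):
    """Return the expected checkpoint paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_models()
    if missing:
        print("Missing pre-trained models:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected pre-trained models found.")
```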

We provide an interactive interface based on Flask. It can be launched locally with:

python interface/flask_app.py --checkpoint_path=pretrained_models/latent_transformer/cat.pt

The interface can be accessed via http://localhost:8000/.
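When scripting around the interface (for example, to open a browser only once the server is up), it can help to launch it from Python and poll the URL until it responds. Below is a minimal standard-library sketch. `launch_interface` and `wait_for_server` are hypothetical helpers, and the default URL simply mirrors the address given above.

```python
import subprocess
import sys
import time
import urllib.error
import urllib.request


def launch_interface(checkpoint_path):
    """Start interface/flask_app.py in the background with the given checkpoint."""
    cmd = [sys.executable, "interface/flask_app.py",
           f"--checkpoint_path={checkpoint_path}"]
    return subprocess.Popen(cmd)


def wait_for_server(url="http://localhost:8000/", timeout=60.0, interval=0.5):
    """Poll url until it answers an HTTP request or timeout seconds pass.

    Returns True if the server responded, False otherwise.
    """
    # Bypass any HTTP proxy configured in the environment; the server is local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # Any HTTP status (even 404 or 500) means the server is listening.
            return True
        except (urllib.error.URLError, OSError):
            # Not reachable yet: wait and retry.
            time.sleep(interval)
    return False


# Usage (hypothetical):
# proc = launch_interface("pretrained_models/latent_transformer/cat.pt")
# if wait_for_server():
#     print("Interface ready at http://localhost:8000/")
```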

The latent transformer can be trained with

python scripts/train.py --exp_dir=results --stylegan_weights=pretrained_models/stylegan2-cat-config-f.pt
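To train several latent transformers (for example, one per StyleGAN2 model) from a single script, the command above can be wrapped in Python. Below is a minimal sketch. It assumes one experiment directory per model under `results/`. `build_train_command`, `train`, and that directory layout are hypothetical, and only the two flags shown above are passed.

```python
import shlex
import subprocess
import sys
from pathlib import Path


def build_train_command(stylegan_weights, exp_root="results", python=sys.executable):
    """Build the scripts/train.py command for one StyleGAN2 checkpoint.

    Assumption: each run gets its own experiment directory, named after the
    weights file (e.g. results/stylegan2-cat-config-f).
    """
    exp_dir = Path(exp_root) / Path(stylegan_weights).stem
    return [python, "scripts/train.py",
            f"--exp_dir={exp_dir}",
            f"--stylegan_weights={stylegan_weights}"]


def train(stylegan_weights, dry_run=True):
    """Print the command and, unless dry_run is False, run it to completion."""
    cmd = build_train_command(stylegan_weights)
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

With `dry_run=True` (the default), `train` only prints the command, which is a cheap way to inspect a batch of runs before launching them.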

To train on your own dataset, first train StyleGAN2 on it using rosinality's code, then run the above script with --stylegan_weights pointing to the resulting weights.
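rosinality's StyleGAN2 code saves checkpoints periodically during training, and the latest one is often a natural choice for --stylegan_weights. Below is a minimal sketch for picking it. It assumes checkpoints are named by iteration count, such as `checkpoint/010000.pt`; check this against the version of that code you use. `latest_checkpoint` is a hypothetical helper.

```python
import re
from pathlib import Path

# Assumed naming: <iteration>.pt, e.g. checkpoint/010000.pt.
_CKPT_NAME = re.compile(r"^(\d+)\.pt$")


def latest_checkpoint(ckpt_dir="checkpoint"):
    """Return the .pt file with the highest iteration number, or None."""
    best_iter, best_path = -1, None
    for path in Path(ckpt_dir).glob("*.pt"):
        match = _CKPT_NAME.match(path.name)
        # Compare as integers so padding width does not matter.
        if match and int(match.group(1)) > best_iter:
            best_iter, best_path = int(match.group(1)), path
    return best_path


# Usage (hypothetical):
# weights = latest_checkpoint("stylegan2-pytorch/checkpoint")
# then: python scripts/train.py --exp_dir=results --stylegan_weights=<weights>
```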

Gradio demo by Radamés Ajna

Please cite our paper if you find the code useful:

@Article{endoPG2022,
  Title = {User-Controllable Latent Transformer for StyleGAN Image Layout Editing},
  Author = {Yuki Endo},
  Journal = {Computer Graphics Forum},
  volume = {41},
  number = {7},
  pages = {395-406},
  doi = {10.1111/cgf.14686},
  Year = {2022}
}

This code heavily borrows from the pixel2style2pixel and expansion repositories.