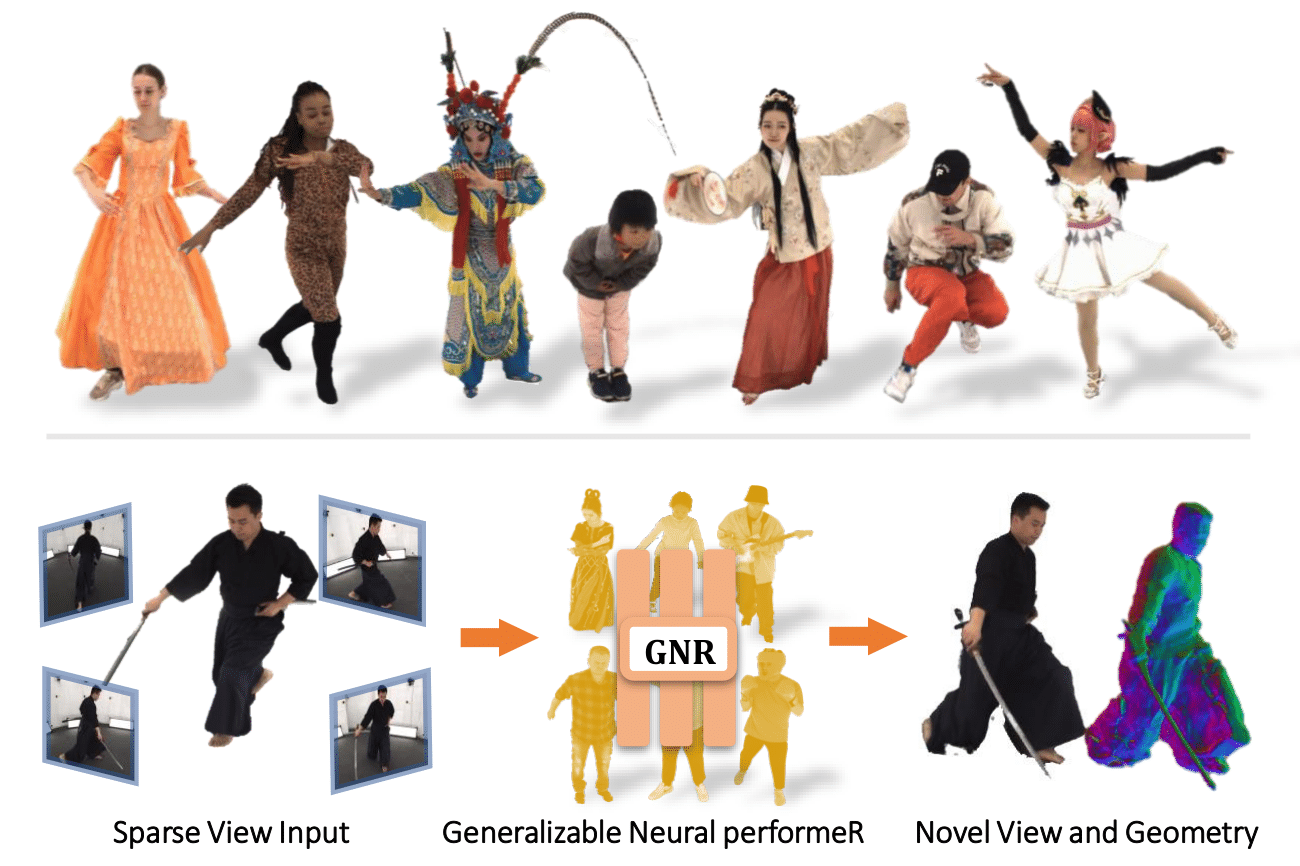

Abstract: This work aims to use a general deep learning framework to synthesize free-viewpoint images of arbitrary human performers, requiring only a sparse set of camera views as input and avoiding per-case fine-tuning. The large variations in geometry and appearance caused by articulated body poses, shapes, and clothing types are the key bottlenecks of this task. To overcome these challenges, we present a simple yet powerful framework, named Generalizable Neural Performer (GNR), that learns a generalizable and robust neural body representation over various geometries and appearances. Specifically, we compress the light fields for novel-view human rendering as conditional implicit neural radiance fields, with several designs from both the geometry and appearance aspects. We first introduce an Implicit Geometric Body Embedding strategy that enhances robustness based on both a parametric 3D human body model prior and multi-view source image hints. On top of this, we further propose a Screen-Space Occlusion-Aware Appearance Blending technique that preserves high-quality appearance by interpolating source-view appearance into the radiance fields with relaxed but approximate geometric guidance.

Wei Cheng, Su Xu, Jingtan Piao, Chen Qian, Wayne Wu, Kwan-Yee Lin, Hongsheng Li

[Demo Video] | [Project Page] | [Data] | [Paper]

- [11/07/2022] Code is released.

- [02/05/2022] GeneBody Train40 is released! Apply here!

- [29/04/2022] SMPLx fitting toolbox and benchmarks are released!

- [26/04/2022] Technical report released.

- [24/04/2022] The codebase and project page are created.

To download and use the GeneBody dataset, please read the instructions in Dataset.md.

GeneBody provides per-view, per-frame segmentation computed with BackgroundMatting-V2, as well as registered SMPLx fits produced with our enhanced multi-view smplify repo, available here.

To use the GeneBody annotations, please check Annotation.md. We provide a reference data-fetching module in genebody.

Set up the environment:

conda env create -f environment.yml

conda activate gnr

cd lib/mesh_grid && python setup.py install

Download the pre-trained model. If the script raises a gdown failure, download the zip file in your browser from Google Drive or OneDrive instead.

bash scripts/download_model.sh

To render per-view depth images of the GeneBody dataset:

bash scripts/render_smpl_depth.sh ${GENEBODY_ROOT}

To run GNR

python apps/run_genebody.py --config configs/[train, test, render].txt --dataroot ${GENEBODY_ROOT}
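The bracket notation in the command above is shorthand for choosing one of three config files. As a minimal sketch, assuming the files are named `configs/train.txt`, `configs/test.txt`, and `configs/render.txt` (inferred from the shorthand, not verified against the repository), the helper below builds the concrete command for a chosen mode. `build_gnr_command` and the placeholder path are hypothetical and not part of GNR.

```python
import os
import shlex

# Modes inferred from the "[train, test, render]" shorthand (assumption).
VALID_MODES = ("train", "test", "render")


def build_gnr_command(mode, dataroot):
    """Return the argument list for one run of apps/run_genebody.py.

    Hypothetical helper for illustration; it only assembles the command
    shown in this README and does not execute anything.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    return [
        "python", "apps/run_genebody.py",
        "--config", f"configs/{mode}.txt",
        "--dataroot", dataroot,
    ]


if __name__ == "__main__":
    # Fall back to a placeholder path when GENEBODY_ROOT is not set.
    root = os.environ.get("GENEBODY_ROOT", "/path/to/genebody")
    for mode in VALID_MODES:
        print(shlex.join(build_gnr_command(mode, root)))
```

The returned list can be passed to `subprocess.run` from the repository root if you prefer launching runs from Python rather than the shell.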

If you have multiple machines and multiple GPUs, you can train our model using distributed data parallel (DDP):

bash scripts/train_ddp.sh

We also provide benchmarks of state-of-the-art methods on the GeneBody dataset; the methods and their requirements are listed in Benchmarks.md.

To test the performance of our released pretrained models, or to train them yourself, run:

git clone --recurse-submodules https://github.com/generalizable-neural-performer/gnr.git

Then cd into benchmarks/. The released benchmarks are ready to run on GeneBody and on other datasets such as V-sense and ZJU-Mocap.

| Model | PSNR | SSIM | LPIPS | ckpts |
|---|---|---|---|---|
| NV | 19.86 | 0.774 | 0.267 | ckpts |
| NHR | 20.05 | 0.800 | 0.155 | ckpts |
| NT | 21.68 | 0.881 | 0.152 | ckpts |
| NB | 20.73 | 0.878 | 0.231 | ckpts |
| A-Nerf | 15.57 | 0.508 | 0.242 | ckpts |

(For details on why A-Nerf performs poorly here, see the issue.)

| Model | PSNR | SSIM | LPIPS | ckpts |
|---|---|---|---|---|
| PixelNeRF | 24.15 | 0.903 | 0.122 | |
| IBRNet | 23.61 | 0.836 | 0.177 | ckpts |
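
For readers comparing the tables above: higher PSNR and SSIM are better, while lower LPIPS is better. As a rough illustration of the first metric, the sketch below computes PSNR from its standard definition, 10 * log10(MAX^2 / MSE). It assumes pixel values are flattened into equal-length sequences in the range [0, 1]; the benchmark scripts may differ in detail (for example masking or color range), so this is not the evaluation code used to produce the numbers above.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences.

    Assumes values lie in [0, max_value]. Illustrative only, not the
    benchmark's evaluation code.
    """
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    rendered = [0.2, 0.5, 0.9, 0.4]
    ground_truth = [0.25, 0.5, 0.8, 0.4]
    print(f"PSNR: {psnr(rendered, ground_truth):.2f} dB")
```

Because PSNR is logarithmic in the mean squared error, a 10 dB gain corresponds to a tenfold reduction in MSE.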

@article{cheng2022generalizable,
  title={Generalizable Neural Performer: Learning Robust Radiance Fields for Human Novel View Synthesis},
  author={Cheng, Wei and Xu, Su and Piao, Jingtan and Qian, Chen and Wu, Wayne and Lin, Kwan-Yee and Li, Hongsheng},
  journal={arXiv preprint arXiv:2204.11798},
  year={2022}
}