Divergence when using fluid interface

maxaehle opened this issue · 15 comments

Summary

The fluid interface connects RANS zones in such a way that flow can freely pass through it just as if they were a single RANS zone. We (@maxaehle, @BeckettZhou) observed convergence problems in cases where the meshes had "boundary-layer-like" high anisotropy at the fluid interface. However a singlezone simulation, for which the meshes are joined together, converged.

Setup (.cfg, .su2)

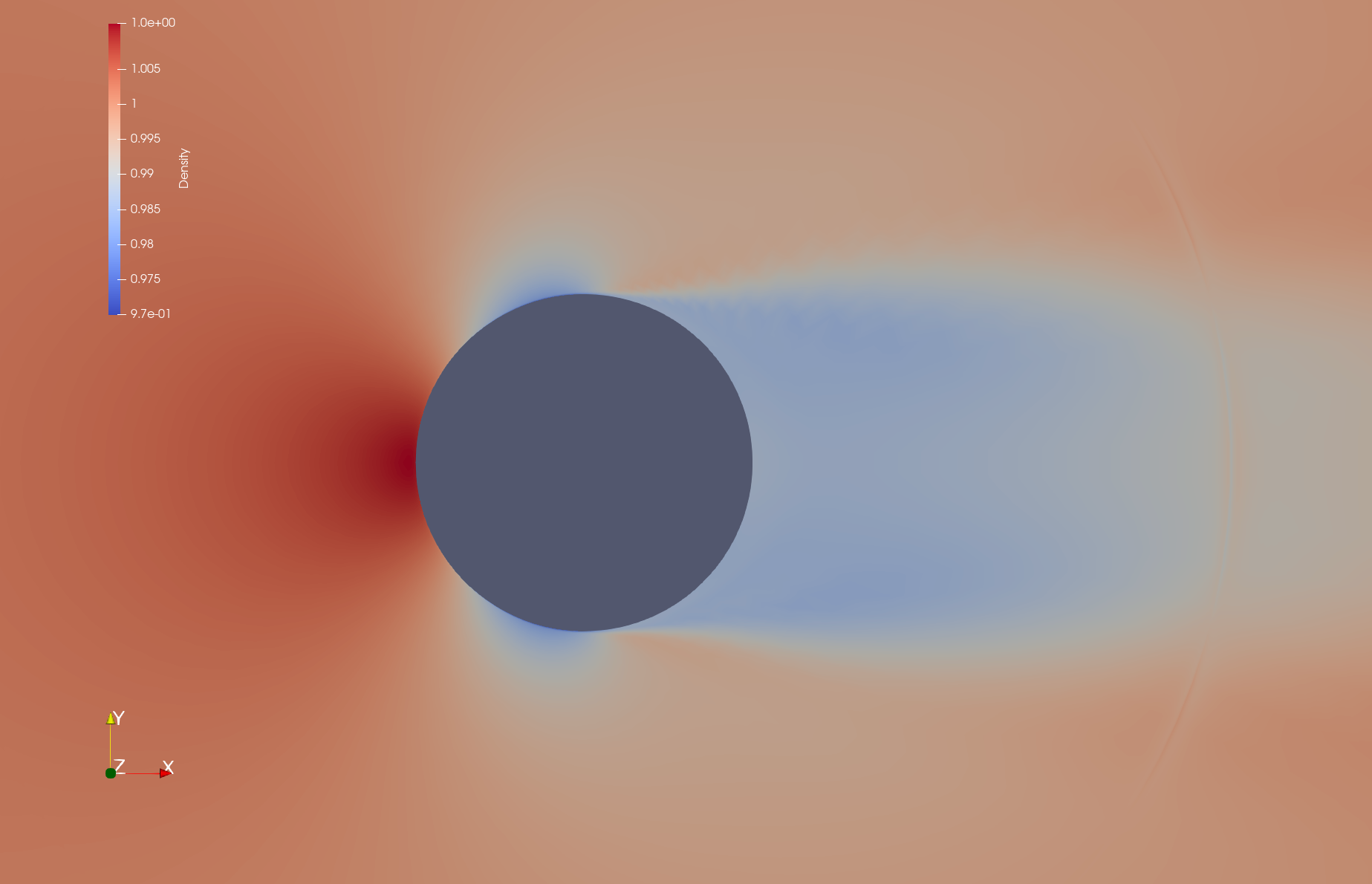

Density for singlezone run with joined meshes, converged:

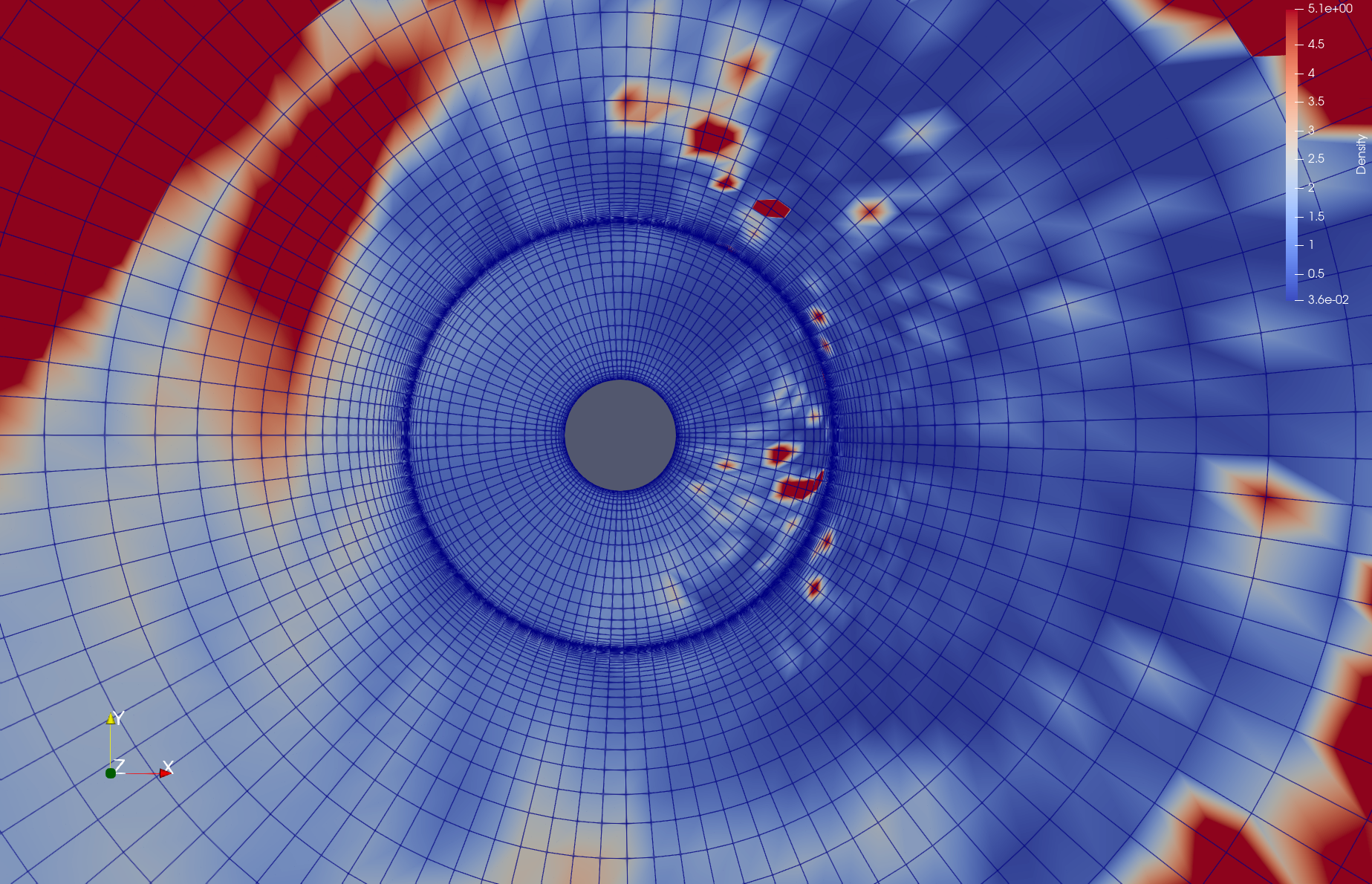

Density for multizone run with separate meshes and fluid interface, diverged:

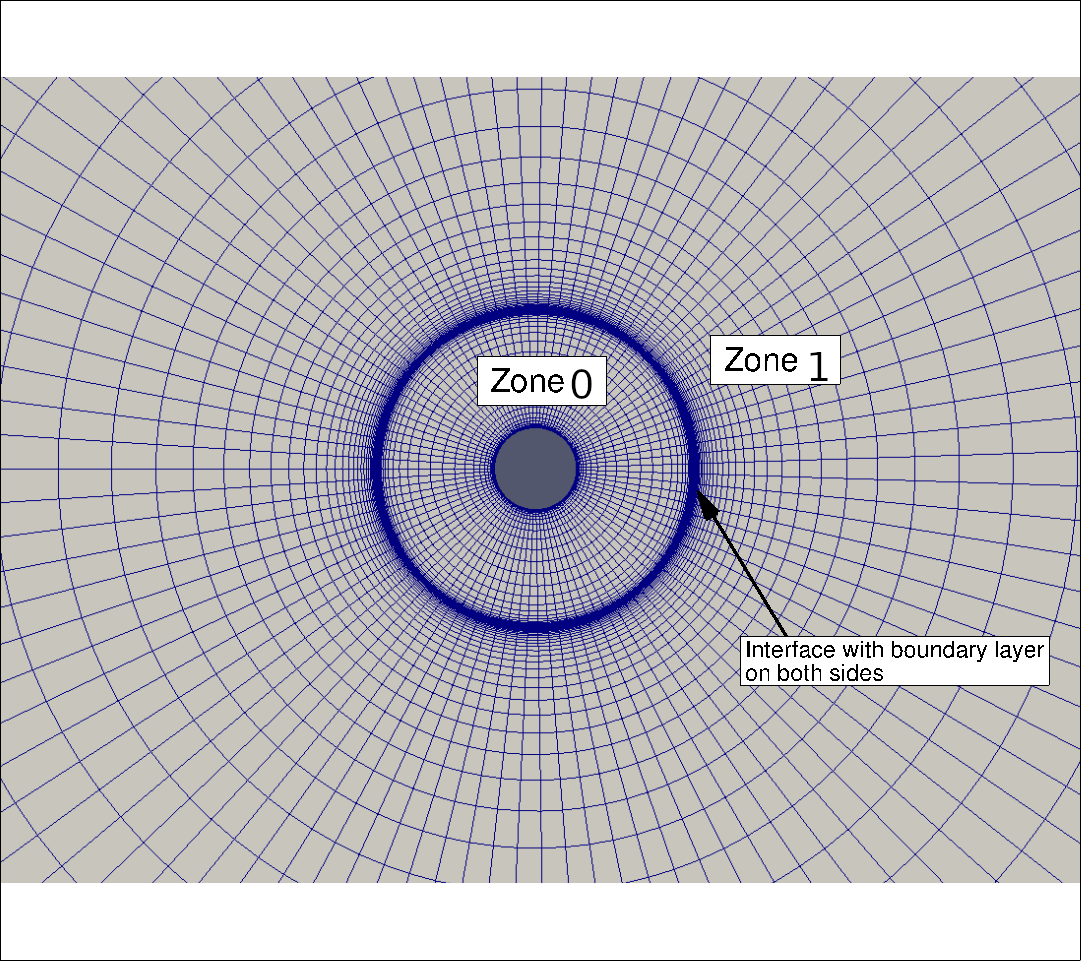

Mesh:

Download link: https://seafile.rlp.net/d/bb0fbb16eb414263b642/

In this issue I consider compressible flow around a circle at Re=1e6, Mach=0.15, using the SST turbulence model. The radial mesh has a boundary layer at the circle of radius 1 (adiabatic wall), and a "boundary-layer-like" structure around a circle of radius (approximately) 4. Having this "boundary-layer-like" structure does not make sense here, but in our project we later want to change the area 1<=radius<=4 to a "porous material" zone and this would introduce a boundary layer there.

Both the attached issue_complicated.zip, issue_simplified.zip contain subdirectories singlezone, multizone. I observed the problem best for issue_complicated.zip. With issue_simplified.zip I try to provide a minimal working example, and it reproduces the same convergence/divergence behaviour, but I'm not sure whether it really covers the complete problem. issue_simplified.zip has been created as follows:

- In the singlezone setup, the whole mesh has been exported to

singlezone.su2. Thesinglezone.cfgisTestCases/rans/naca0012/turb_NACA0012_sst.cfgwith adapted mesh filename, marker names and Reynolds number, andRESTART_SOL=NO. - In the multizone setup, the mesh has been split into two zones.

multizone-0.su2is the inner "ring" of1 <= radius <= 4between the circle and the "boundary-layer-like" structure.multizone-1.su2is the remaining part4 <= radius. I created amultizone.cfgthat refers tomultizone-0.cfg,multizone-1.cfgand joins the zones by aMARKER_FLUID_INTERFACE. The twomultizone-i.cfgs are identical to thesinglezone.cfgexcept that the mesh filename and markers we adapted again, and theITER=option was removed.

In issue_complicated.zip, filenames and cfg files are a bit different but the meshes are the same.

Observed and expected behaviour

I would expect that the two simulations give similar results. However, the singlezone setup converges while the multizone setup diverges (SU2 has diverged (NaN detected). or SU2 has diverged (Residual > 10^20 detected).). The same behaviour is observed for CFL=10 instead of 1000, and also for SA with CFL=10. I ran v7.2.0 (commit 3ec1c68) in serial (without MPI).

I reported a similar problem some time ago in the developer meeting, with a "chopped NACA 0012 airfoil" testcase. For this issue we created this more basic example which seems to reproduce the error (although I am not completely sure). Typically we would see the SU2 has diverged (Residual > 10^20 detected). error.

Differences between multizone/fluid-interface and singlezone/joined-meshes

We came up with the following differences:

-

Flux calculation across the interface: The flux between neighbor cells

i,j, one of which hasradius<4and the other one hasradius>4, is computed differently.-

In singlezone this is just an ordinary flux computation along an edge

i-j, withCSolver::Upwind_Residual(,Viscous_Residual). -

In multizone this flux between two zones is computed by

CSolver::BC_Fluid_Interface. When the method is called to compute the contribution of the interface on the residual of contol volumei,thisis the solver object of the zone of control volumei, so we can access all variables ati(like primitive, turbulent, and their spatial gradients) and give them to theCNumericsobjects. However we cannot access the variables atjdirectly! Through the "interface" mechanism, we get values of the primitive and turbulent variables atj, but not their gradients. That's why theBC_Fluid_Interfacehas lines like this:visc_numerics->SetPrimVarGradient(nodes->GetGradient_Primitive(iPoint), nodes->GetGradient_Primitive(iPoint));

I tried to fix this by transferring gradient information also, but it did not solve the problem.

-

-

Calculation of spatial gradients: Both in the Green-Gauß and the Weighted-Least-Squares calculation, in order to compute the gradient of a quantity

xat a pointionly the values ofxati, and neighbors ofiin the same zone, are taken into account. This means that the gradient at points withradius==4is based on less information, and hence is more inaccurate, in the multizone setup. -

General structure of the drivers: Maybe there are relevant differences between

CSinglezoneDriverandCMultizoneDriver, I don't know.

I will raise the question in the SU2 developer meeting tomorrow.

As we mentioned in the dev meeting where you exposed the problem, the implementation is not good for strongly coupled flows, and I would guess that it is worse for diffusion than convection (because diffusion is elliptic).

I suspect the main problem is that the linear system does not contain information from the other side of the interface, meaning the solution of the two domains is effectively decoupled.

You could try running the case at much lower CFL (below 1) even with an explicit method.

It is also possible that the current treatment could be improved, since it is an example of multiplicative Schwartz decomposition, maybe there is an "optimal" way of implementing that from a physics point of view. Just speculating here, but maybe it would help treating the interface as an outlet if flow is going out, and as an inlet if flow is coming in.

On the numerics side, you can also try hacking the MZ driver to use something more stable than block-Gauss-Seidel (e.g. some quasi-Newton thing for the interface).

But those are all band-aids IMO, if you want a robust fluid-fluid interface you need the coupling to be present in the linear system. The simplest way to do that is to have an internal boundary and treat the problem as single zone.

Thanks Pedro for hinting me at this coupling issue again, now I think I understand it!

For the record, here is what I talked about in today's developer meeting:

When I make the following changes in the issue_simplified/multizone/multizone-i.cfg :

88c88

< CFL_NUMBER= 0.1

---

> CFL_NUMBER= 1000.0

162c162

< TIME_DISCRE_FLOW= EULER_EXPLICIT

---

> TIME_DISCRE_FLOW= EULER_IMPLICIT

177c177

< TIME_DISCRE_TURB= EULER_EXPLICIT

---

> TIME_DISCRE_TURB= EULER_IMPLICIT

then the simplified multizone setup converges, albeit to a different solution:

than what the simplified singlezone setup (from above) converged to:

The same observation can be made analogously for issue_complicated:

The multizone setup with explicit Euler and CFL=0.1 (nearly) converges (actually the residual stalls at avg[bgs][0] approximately -13) to the following limit:

while the singlezone solution (with implicit Euler and CFL 1000) is (EDIT: was momentum plot, replaced by density plot)

Your last figure is for momentum magnitude, I assume that your results with explicit/implicit Euler for single zone are the same?

I assume that your results with explicit/implicit Euler for single zone are the same?

It turns out that they are not. When I applied the above modifications (CFL: 1000 -> 0.1, TIME_DISCRE_*: EULER_IMPLICIT->EULER_EXPLICIT; additionally I had to increase ITER) to the issue_simplified singlezone setup (the one with AoA=10°), I obtain the following solution:

with the following convergence history:

+------------------------------------------------------------------------------------------+

| Inner_Iter| rms[Rho]| rms[k]| rms[w]| CL| CD| LinSolRes|

+------------------------------------------------------------------------------------------+

| 0| -3.131336| -inf| -inf| 0.000000| 2.232692| -inf|

| 1| -3.281025| -inf| -inf| 0.000000| 3.198384| -inf|

...

| 9531740| -11.999999| -inf| -inf| -0.006045| 1.258662| -inf|

| 9531741| -12.000000| -inf| -inf| -0.006045| 1.258662| -inf|

| 9531742| -12.000000| -inf| -inf| -0.006045| 1.258662| -inf|

----------------------------- Solver Exit -------------------------------

All convergence criteria satisfied.

+-----------------------------------------------------------------------+

| Convergence Field | Value | Criterion | Converged |

+-----------------------------------------------------------------------+

| rms[Rho]| -12| < -12| Yes|

+-----------------------------------------------------------------------+

The density plot is

- different from the original

issue_simplifiedsinglezone solution with implicit Euler and CFL=1000. - similar to the

issue_simplifiedmultizone solution with implicit Euler and CFL=0.1.

Similarly, the TKE plots:

issue_simplifiedsinglezone implicit Euler CFL=1000

issue_simplifiedsinglezone explicit Euler CFL=0.1

(it is "red" throughout the domain, except for the wall marker)issue_simplifiedmultizone explicit Euler CFL=0.1: (similar image, "red" everywhere except wall)

Thus, the difference in solutions observed above is due to the choice of implicit vs. explicit Euler and CFL, and not due to problems regarding the interface.

Am I doing something wrong in the explicit Euler cfg file, whose diff to the SU2/TestCases/rans/naca0012/turb_NACA0012_sst.cfg is as follows?

27c27

< RESTART_SOL= NO

---

> RESTART_SOL= YES

45c45

< REYNOLDS_NUMBER= 1.0E6

---

> REYNOLDS_NUMBER= 6.0E6

70c70

< MARKER_HEATFLUX= ( circle, 0.0 )

---

> MARKER_HEATFLUX= ( airfoil, 0.0 )

76c76

< MARKER_PLOTTING= ( circle )

---

> MARKER_PLOTTING= ( airfoil )

79c79

< MARKER_MONITORING= ( circle )

---

> MARKER_MONITORING= ( airfoil )

88c88

< CFL_NUMBER= 0.1

---

> CFL_NUMBER= 1000.0

101c101

< ITER= 9999900

---

> ITER= 99999

162c162

< TIME_DISCRE_FLOW= EULER_EXPLICIT

---

> TIME_DISCRE_FLOW= EULER_IMPLICIT

177c177

< TIME_DISCRE_TURB= EULER_EXPLICIT

---

> TIME_DISCRE_TURB= EULER_IMPLICIT

203c203

< MESH_FILENAME= singlezone.su2

---

> MESH_FILENAME= n0012_225-65.su2

I noticed that

- the distinction between the time integration schemes (implicit Euler, explicit Euler, ...) is made in

CIntegration::Time_Integration: Exactly one of the functionsCSolver::ImplicitEuler_Iteration,CSolver::ExplicitEuler_Iteration,ExplicitRK_Iteration,ClassicalRK4_Iterationis called. - These are virtual functions, defined as "do nothing" in

CSolver. - Regarding the flow equations,

CFVMFlowSolverBase<>overridesImplicitEuler_Iteration, andCEulerSolver/CIncEulerSolveroverride the other three functions. - Regarding the turbulence equation,

CTurbSolveroverridesImplicitEuler_Iterationonly.

Thus probably I can use TIME_DISCRE_FLOW=EULER_EXPLICIT but not TIME_DISCRE_TURB=EULER_EXPLICIT?

Using Euler explicit for the turbulence used to result in a crash, I assumed you were using implicit with the same very low CFL, sorry about that.

We need to fix that somehow... Can you open another issue for us to keep track of this please?

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. If this is still a relevant issue please comment on it to restart the discussion. Thank you for your contributions.

After #1435 was merged, I tried using Explicit Euler for the time discretization of both the flow and turbulent solvers again. However, the divergence problems persist.

I will not continue to work on this issue.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. If this is still a relevant issue please comment on it to restart the discussion. Thank you for your contributions.

No, I gave up ;) Shall I close this issue then?

Keep it open

@maxaehle do you have these cases somewhere, so it is possible in the future to verify that the issue has been solved?

The config and mesh files can be downloaded from https://seafile.rlp.net/d/bb0fbb16eb414263b642/ and more information on them is provided above.

I ran the four cases in the current develop branch at 58cf2d4, configuring the build with --buildtype=debug.

SU2_CFD singlezone.cfg in issue_simplified/singlezone gives

+------------------------------------------------------------------------------------------+

| Inner_Iter| rms[Rho]| rms[k]| rms[w]| CL| CD| LinSolRes|

+------------------------------------------------------------------------------------------+

| 0| -3.131336| -3.358218| 2.356417| 0.021774| 45.825257| -2.955766|

...

| 12377| -11.999782| -15.033189| -10.208063| 0.003434| 0.989679| -4.378694|

| 12378| -12.000141| -15.033858| -10.208411| 0.003434| 0.989679| -4.378353|

----------------------------- Solver Exit -------------------------------

All convergence criteria satisfied.

SU2_CFD multizone.cfg in issue_simplified/multizone gives

+--------------------------------------+

| Multizone Summary |

+--------------------------------------+

| Outer_Iter| avg[bgs][0]| avg[bgs][1]|

+--------------------------------------+

| 0| -0.259741| -0.741089|

| 1| -1.224321| -2.240439|

...

| 841| -0.356891| 0.285076|

| 842| -0.124937| 0.178422|

Error in "void CSolver::SetResidual_RMS(const CGeometry*, const CConfig*)":

-------------------------------------------------------------------------

SU2 has diverged (NaN detected).

------------------------------ Error Exit -------------------------------

mpirun SU2_CFD mz-0.cfg in issue_complicated/singlezone with 24 MPI ranks (because it needs so many iterations) gives

+----------------------------------------------------------------+

| Inner_Iter| Time(sec)| rms[Rho]| CL| CD|

+----------------------------------------------------------------+

| 0| 4.5727e-02| -3.131336| -0.000000| 47.049080|

| 1| 3.9651e-02| -3.399537| -0.000000| 43.567743|

...

| 1304734| 3.6630e-02| -12.000000| -0.000000| 1.003811|

| 1304735| 3.6630e-02| -12.000003| -0.000000| 1.003811|

----------------------------- Solver Exit -------------------------------

All convergence criteria satisfied.

SU2_CFD mz-all.cfg in issue_complicated/multizone gives

+--------------------------------------+

| Multizone Summary |

+--------------------------------------+

| Outer_Iter| avg[bgs][0]| avg[bgs][1]|

+--------------------------------------+

| 0| -0.937734| -2.766626|

| 1| -2.167353| -6.482096|

...

| 6546| -0.859233| -0.793018|

| 6547| -0.865290| -0.739081|

Error in "void CSolver::SetResidual_RMS(const CGeometry*, const CConfig*)":

-------------------------------------------------------------------------

SU2 has diverged (NaN detected).

------------------------------ Error Exit -------------------------------