Update: the code for model ensemble (grid search) is available in run_ensemble_grid.py.

*Work in progress

This repo is our research summary and playground for MRC. More features are coming.

The code is based on Transformers v2.3.0 and shares its dependencies.

You can install the dependencies with pip install transformers==2.3.0

or download the requirements file directly from https://github.com/huggingface/transformers/blob/v2.3.0/requirements.txt and run pip install -r requirements.txt.

Looking for a comprehensive and comparative review of MRC? Check out our new survey paper: Machine Reading Comprehension: The Role of Contextualized Language Models and Beyond (preprint, 2020).

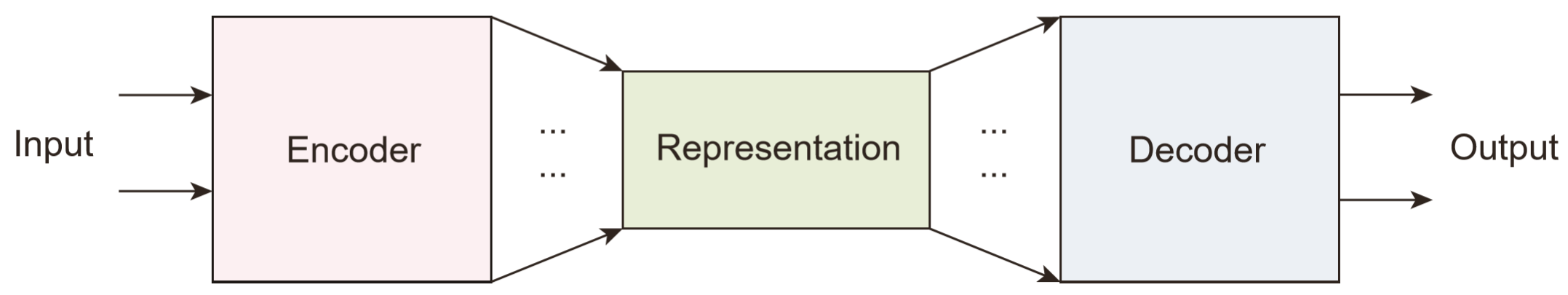

In this work, an MRC model is regarded as a two-stage encoder-decoder architecture. Our empirical analysis is shared in this repo.

- Language Units

Subword-augmented Embedding for Cloze Reading Comprehension (COLING 2018)

Effective Subword Segmentation for Text Comprehension (TASLP)

- Linguistic Knowledge

Semantics-aware BERT for language understanding (AAAI 2020)

SG-Net: Syntax-Guided Machine Reading Comprehension (AAAI 2020)

- Commonsense Injection

Multi-choice Dialogue-Based Reading Comprehension with Knowledge and Key Turns (preprint)

- Contextualized language models (CLMs) for MRC:

The implementation is based on Transformers v2.3.0.

As part of the techniques in our Retro-Reader paper:

Retrospective Reader for Machine Reading Comprehension (preprint)

1) Multitask-style verification

We evaluate different loss functions:

- cross-entropy (run_squad_av.py)
- binary cross-entropy (run_squad_av_bce.py)
- MSE regression (run_squad_avreg.py)
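To make the difference between the three objectives concrete, here is a minimal sketch in plain Python that evaluates each loss on a single example. It is not the code in the scripts above: the function names are hypothetical, and treating label 1 as "unanswerable" is an assumption made for illustration.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy over two classes (assumed: 0 = answerable, 1 = unanswerable)."""
    m = max(logits)
    # Log-sum-exp with the max subtracted for numerical stability.
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]


def binary_cross_entropy(logit, label):
    """Sigmoid cross-entropy on a single logit; label is 0.0 or 1.0."""
    # Stable form: max(x, 0) - x * y + log(1 + exp(-|x|)).
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def mse_regression(score, label):
    """Squared error between a raw score and the 0/1 target."""
    return (score - label) ** 2


if __name__ == "__main__":
    # One unanswerable example (label 1) scored by each objective.
    print(cross_entropy([0.3, 1.2], 1))
    print(binary_cross_entropy(1.2, 1.0))
    print(mse_regression(0.8, 1.0))
```

With two classes, the cross-entropy on logits [0, x] coincides with the binary cross-entropy on the single logit x, so the first two variants differ mainly in how the classification head is parameterized; the regression variant instead penalizes the squared distance to the target.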

2) External verification

Train an external verifier (run_cls.py)

Cross Attention (run_squad_seq_trm.py)

Matching Attention (run_squad_seq_sc.py)

Related Work:

Modeling Multi-turn Conversation with Deep Utterance Aggregation (COLING 2018)

DCMN+: Dual Co-Matching Network for Multi-choice Reading Comprehension (AAAI 2020)

Model answer dependency (start + seq -> end) (run_squad_dep.py)

- train a sketchy reader (sh_albert_cls.sh)
- train an intensive reader (sh_albert_av.sh)
- rear verification: merge the predictions for the final answer (run_verifier.py)
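The merge step can be sketched as follows. This is an illustrative reading of rear verification as described in the Retro-Reader paper, not the contents of run_verifier.py: the function names, the input layout, the weights beta1 and beta2, and the threshold are all assumptions. The idea is that the sketchy reader contributes an external no-answer score, the intensive reader contributes a no-answer score difference plus a candidate span, and the weighted sum decides whether to abstain.

```python
def rear_verification(score_ext, score_diff, span_text,
                      beta1=0.5, beta2=0.5, threshold=0.0):
    """Return "" (no answer) if the combined no-answer score exceeds the threshold.

    score_ext:  no-answer score from the sketchy reader (hypothetical input).
    score_diff: null score minus best span score from the intensive reader.
    """
    v = beta1 * score_diff + beta2 * score_ext
    return "" if v > threshold else span_text


def merge_predictions(sketchy_scores, intensive_preds, **kwargs):
    """Merge per-question outputs of the two readers into final answers.

    sketchy_scores:  {question_id: score_ext}
    intensive_preds: {question_id: (score_diff, span_text)}
    """
    merged = {}
    for qid, (score_diff, span_text) in intensive_preds.items():
        # Assumed fallback: a missing sketchy score counts as neutral (0.0).
        score_ext = sketchy_scores.get(qid, 0.0)
        merged[qid] = rear_verification(score_ext, score_diff, span_text, **kwargs)
    return merged


if __name__ == "__main__":
    sketchy = {"q1": 2.0, "q2": -3.0}
    intensive = {"q1": (1.0, "Paris"), "q2": (-1.0, "1999")}
    print(merge_predictions(sketchy, intensive))
```

In practice the weights and threshold would be tuned on the dev set; the defaults here are placeholders.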

SQuAD 2.0 Dev Results:

```
{
  "exact": 87.75372694348522,
  "f1": 90.91630165754992,
  "total": 11873,
  "HasAns_exact": 83.1140350877193,
  "HasAns_f1": 89.4482539777485,
  "HasAns_total": 5928,
  "NoAns_exact": 92.38015138772077,
  "NoAns_f1": 92.38015138772077,
  "NoAns_total": 5945
}
```
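As a quick sanity check on how these numbers relate, the overall scores should equal the count-weighted mean of the HasAns and NoAns subsets. The short sketch below verifies this for the figures reported above; the helper name weighted_overall is hypothetical. For unanswerable questions F1 and exact match coincide, which is why the two NoAns values are identical.

```python
results = {
    "exact": 87.75372694348522,
    "f1": 90.91630165754992,
    "total": 11873,
    "HasAns_exact": 83.1140350877193,
    "HasAns_f1": 89.4482539777485,
    "HasAns_total": 5928,
    "NoAns_exact": 92.38015138772077,
    "NoAns_f1": 92.38015138772077,
    "NoAns_total": 5945,
}


def weighted_overall(metric):
    """Recompute an overall metric from the HasAns / NoAns subsets."""
    has = results[f"HasAns_{metric}"] * results["HasAns_total"]
    no = results[f"NoAns_{metric}"] * results["NoAns_total"]
    return (has + no) / results["total"]


if __name__ == "__main__":
    for metric in ("exact", "f1"):
        # Each line prints the recomputed value next to the reported one.
        print(metric, weighted_overall(metric), results[metric])
```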

One-shot Learning for Question-Answering in Gaokao History Challenge (COLING 2018)

@article{zhang2020machine,
  title={Machine Reading Comprehension: The Role of Contextualized Language Models and Beyond},
  author={Zhang, Zhuosheng and Zhao, Hai and Wang, Rui},
  journal={arXiv preprint arXiv:2005.06249},
  year={2020}
}

@article{zhang2020retrospective,
  title={Retrospective reader for machine reading comprehension},
  author={Zhang, Zhuosheng and Yang, Junjie and Zhao, Hai},
  journal={arXiv preprint arXiv:2001.09694},
  year={2020}
}

CMRC 2017: The best single model (2017).

SQuAD 2.0: The best among all submissions (both single and ensemble settings); the first to surpass the human benchmark on both EM and F1 scores with a single model (2019).

SNLI: The best among all submissions (2019-2020).

RACE: The best among all submissions (2019).

GLUE: The 3rd best among all submissions (early 2019).

Feel free to email zhangzs [at] sjtu.edu.cn if you have any questions.