Large Language Model (LLM) powered evaluator for Retrieval Augmented Generation (RAG) pipelines.

Our project is inspired by the RAGAS project, which defines and implements 8 metrics to evaluate the inputs and outputs of a RAG pipeline, and by ideas from the ARES paper, which attempts to calibrate these LLM evaluators against human evaluators.

The project provides an LLM-based framework to evaluate the performance of RAG systems using a set of metrics that are optimized for the application domain in which the RAG system operates. We use Gemini Pro 1.0 from Google AI as the framework's LLM, and the Google AI embedding model to generate embeddings for some of the metrics.

- We re-implemented the RAGAS metrics using LangChain Expression Language (LCEL) so that we could access the outputs of intermediate steps in the metric calculations.

- We then implemented the metrics using DSPy (Declarative Self-improving Language Programs in Python) and optimized the prompts to minimize the score difference from the LCEL implementations, using a subset of examples for few-shot learning (with DSPy's Bootstrap Few Shot with Random Search optimizer).

- We evaluated the confidence of the scores produced by the LCEL and DSPy metric implementations.

- We are building a tool that allows human oversight of the LCEL outputs (including intermediate steps) for Active Learning supervision.

- We will re-optimize the DSPy metrics using scores recalculated from the updates made through the tool.

- DSPy has a steep learning curve and is still a work in progress, so some parts of it don't work as expected.

- Our project grew iteratively as our understanding of the problem space grew, so some steps had to be done sequentially, which cost us time.

- Team members from different parts of the world came together and pooled their skills towards our common goal of building a set of domain-optimized metrics.

- We gained greater insight into the RAGAS metrics once we implemented them ourselves, and additional insight when building the tool on top of the intermediate outputs.

- Our team was not familiar with DSPy at all; we learned to use it and are very impressed with its capabilities.

We notice that most of our metrics involve predictive steps, in which a binary outcome is predicted for a pair of strings. These look like variants of NLI (Natural Language Inference), which could be handled by non-LLM models. Such models are not only cheaper but also don't suffer from hallucinations, leading to more repeatable evaluations. Training them will require more data, so we have started generating synthetic data, but other dependencies must be resolved before we can offload these steps to smaller models.

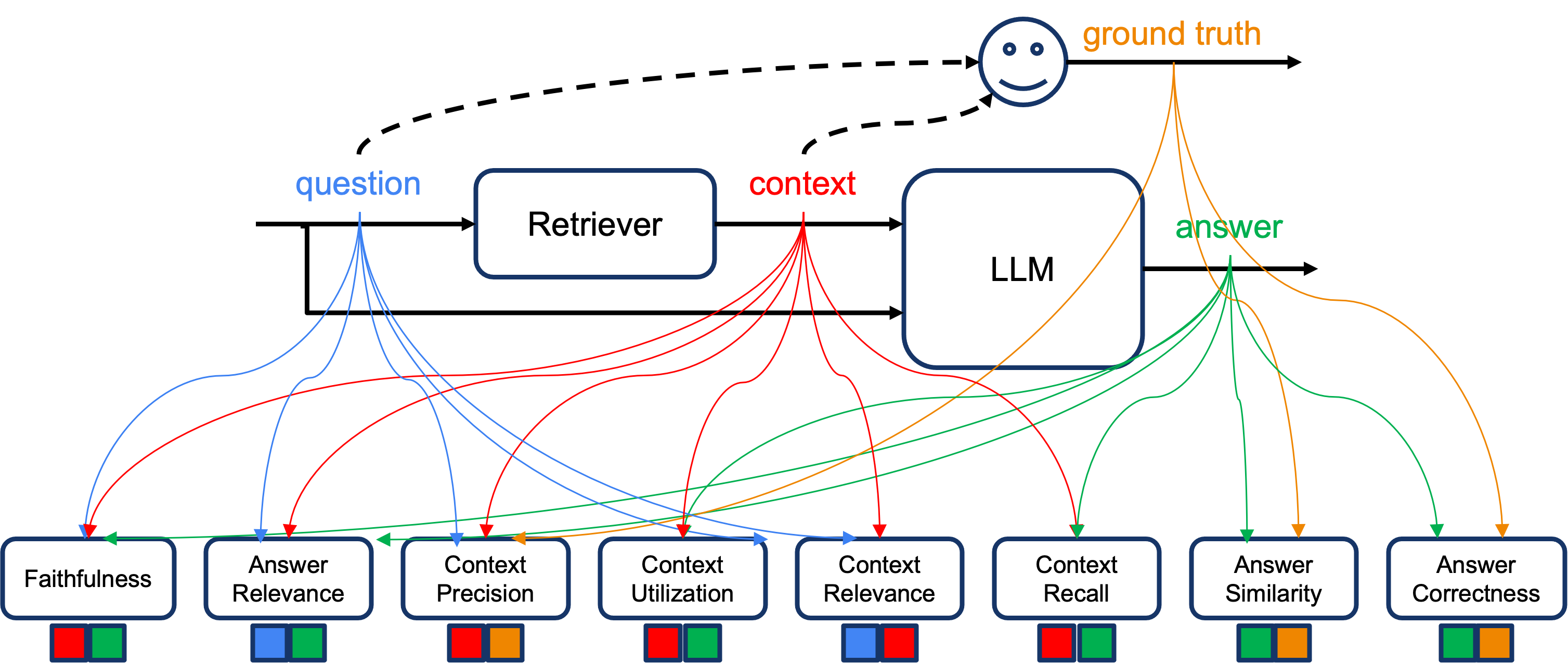

The following RAGAS metrics have been (re-)implemented in this project, both because we had trouble making them work as-is and because they are conceptually quite simple to implement.

- Faithfulness -- given question, context and answer, measures the factual consistency of the answer against the given context.

- Answer Relevance -- given question, context and answer, measures how pertinent the answer is to the question.

- Context Precision -- given question, context and ground truth, measures whether statements in the ground truth are found in the context.

- Context Utilization -- same as Context Precision, but using the predicted answer instead of the ground truth.

- Context Relevance -- given question and context, measures the relevance of the context to the question.

- Context Recall -- given context and answer, measures the extent to which the context aligns with the answer.

- Answer Similarity -- given answer and ground truth, measures the semantic similarity between them (cosine or cross-encoder similarity).

- Answer Correctness -- given answer and ground truth, measures the accuracy of the answer based on the facts in these two texts.
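Several of these metrics come down to simple arithmetic once the LLM or the embedding model has done its work. The following is a minimal sketch, not this project's code: it assumes the per-statement verdicts (from the LLM) and the embedding vectors (from the embedding model) are already available, and shows Faithfulness as the fraction of answer statements judged to be supported by the context (as RAGAS defines it) plus cosine-based Answer Similarity. The function names `faithfulness_score` and `cosine_similarity` are hypothetical.

```python
from math import sqrt
from typing import Sequence


def faithfulness_score(verdicts: Sequence[bool]) -> float:
    """Fraction of answer statements judged supported by the context.

    `verdicts` holds one True/False per statement extracted from the answer
    (in practice produced by the LLM; hard-coded in the example below).
    """
    if not verdicts:
        return float("nan")  # no statements extracted: score is undefined
    return sum(verdicts) / len(verdicts)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # degenerate (all-zero) embedding
    return dot / (norm_a * norm_b)


print(faithfulness_score([True, True, False, True]))  # 0.75
print(cosine_similarity([1.0, 0.0], [1.0, 1.0]))      # about 0.7071
```

The cross-encoder option replaces the cosine step with a model that scores the two texts jointly; the aggregation for verdict-based metrics stays the same.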

The metrics described above can be run against your dataset by calling the run_prompted_metrics.py script in the src directory with the metric name, the path to the input file (JSONL), and the path to the output file (TSV).

```
$ python3 run_prompted_metrics.py --help
usage: run_prompted_metrics.py [-h] --metric
       {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
       --input INPUT_JSONL [--output OUTPUT_TSV] [--parallel] [--cross-encoder]

options:
  -h, --help            show this help message and exit
  --metric {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
                        The metric to compute
  --input INPUT_JSONL
                        Full path to evaluation data in JSONL format
  --output OUTPUT_TSV
                        Full path to output TSV file
  --parallel            Run in parallel where possible (default false)
  --cross-encoder       Use cross-encoder similarity scoring (default false)
```

Ideally, we would generate metrics from a running RAG pipeline, but to simplify development we have isolated the evaluation functionality and feed it the input it needs via a JSONL file. Each line of the JSONL file represents a single RAG transaction. The required fields are as follows (shown pretty-printed here; in the file, each record occupies a single line).

```
{
  "id": {qid: int},
  "query": {query: str},
  "context": [
    {
      "id": {chunk_id: str},
      "chunk_text": {chunk_text: str},
      ... other relevant fields
    },
    ... more context elements
  ],
  "ideal_answer": {ground_truth: str},
  "predicted_answer": {answer: str}
}
```
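To make the input format concrete, here is a minimal sketch that builds one record with the fields listed above, serializes it as a single JSONL line, and checks the required fields when reading it back. The helpers `make_record` and `validate_line` are hypothetical (they are not part of this project's scripts), and the example transaction is made up.

```python
import json
from typing import Iterable, Tuple

# Top-level fields required by the evaluation scripts (from the schema above).
REQUIRED_FIELDS = {"id", "query", "context", "ideal_answer", "predicted_answer"}


def make_record(qid: int, query: str, chunks: Iterable[Tuple[str, str]],
                ideal_answer: str, predicted_answer: str) -> dict:
    """Build one RAG transaction; `chunks` is a list of (chunk_id, chunk_text)."""
    return {
        "id": qid,
        "query": query,
        "context": [{"id": cid, "chunk_text": text} for cid, text in chunks],
        "ideal_answer": ideal_answer,
        "predicted_answer": predicted_answer,
    }


def validate_line(line: str) -> dict:
    """Parse one JSONL line and check that the required fields are present."""
    record = json.loads(line)
    if not isinstance(record, dict):
        raise ValueError("each line must be a JSON object")
    missing = REQUIRED_FIELDS - set(record)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for chunk in record["context"]:
        if "id" not in chunk or "chunk_text" not in chunk:
            raise ValueError("each context element needs 'id' and 'chunk_text'")
    return record


# Made-up example transaction; json.dumps without `indent` keeps it on one line.
line = json.dumps(make_record(
    1,
    "What is the capital of France?",
    [("c1", "Paris is the capital and largest city of France.")],
    "Paris.",
    "The capital of France is Paris.",
))
record = validate_line(line)
print(record["context"][0]["id"])  # c1
```

Writing one such line per transaction (each followed by a newline) produces a file in the shape the scripts expect.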

We have used the AmnestyQA dataset on HuggingFace as our reference dataset. You can find a copy of that data in the format described above.

We have used DSPy to optimize our prompts for the AmnestyQA dataset. At a very high level, this involves sampling random subsets of the training data (in our case, outputs from our prompted RAG metrics) as few-shot examples and keeping the subset that produces the best-performing prompt.
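The random-search idea can be illustrated in plain Python. This is a simplified sketch, not DSPy's implementation: DSPy's BootstrapFewShotWithRandomSearch also generates ("bootstraps") candidate demonstrations by running the program on training examples, whereas here candidates are drawn directly from the training set. The function `random_search_demos` and the toy objective are hypothetical; in this project's setting the objective would measure how closely the DSPy metric's scores match the LCEL scores.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def random_search_demos(
    trainset: Sequence[T],
    evaluate: Callable[[List[T]], float],
    k: int,
    num_trials: int,
    seed: int = 0,
) -> Tuple[List[T], float]:
    """Sample `num_trials` random subsets of `k` training examples, score each
    subset with `evaluate`, and return the best subset and its score."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_demos: List[T] = []
    best_score = float("-inf")
    for _ in range(num_trials):
        demos = rng.sample(list(trainset), k)
        score = evaluate(demos)
        if score > best_score:
            best_demos, best_score = demos, score
    return best_demos, best_score


# Toy objective standing in for "agreement with the LCEL scores on a dev set",
# e.g. 1 - mean(|dspy_score - lcel_score|) for a prompt built from `demos`.
demos, score = random_search_demos([1, 2, 3, 4], lambda d: sum(d) / len(d), k=2, num_trials=200)
print(sorted(demos), score)
```

Each trial costs one full evaluation (in practice, LLM calls over the dev set), which is why the optimization is slow even though it needs no GPU.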

The DSPy implementations look for the optimized configuration in resource/configs. If they don't find it, they look for the corresponding dataset and run the optimization first. We have provided configurations for the RAGAS metric prompts optimized for AmnestyQA, but you will probably need to generate optimized versions for your own dataset. To do so, run the generate_datasets.py script, which runs the RAGAS prompts against the LLM, extracts the resulting data, and writes it out as a JSON file into data/dspy-datasets (where the DSPy optimization code expects to find it). The command to generate a dataset for optimizing the DSPy prompt of a particular metric is shown below:

```
$ python3 generate_datasets.py --help
usage: generate_datasets.py [-h] --metric
       {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
       --input INPUT --output OUTPUT [--parallel] [--debug]

options:
  -h, --help            show this help message and exit
  --metric {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
                        The metric to generate datasets for
  --input INPUT         Full path to input JSONL file
  --output OUTPUT       Full path to output directory
  --parallel            Run in parallel where possible (default false)
  --debug               Turn debugging on (default: false)
```

To re-run the optimization locally, remove the configuration file for the metric from the resources/config directory. The next time you run run_learned_metrics.py, it will re-optimize (this is a fairly lengthy process, but it doesn't require a GPU). Leave the config file in place to reuse the prompt optimized for AmnestyQA.

```
$ python3 run_learned_metrics.py --help
usage: run_learned_metrics.py [-h] --metric
       {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
       --input INPUT [--output OUTPUT] [--cross-encoder] [--model-temp MODEL_TEMP]

options:
  -h, --help            show this help message and exit
  --metric {answer_correctness,answer_relevance,answer_similarity,context_precision,context_recall,context_relevance,context_utilization,faithfulness}
                        The metric to compute
  --input INPUT         Full path to evaluation data in JSONL format
  --output OUTPUT       Full path to output TSV file
  --cross-encoder       Use cross-encoder similarity scoring (default true)
  --model-temp MODEL_TEMP
                        The temperature of the model - between 0.0 and 1.0 (default 0.0)
```
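The configuration lookup described above for run_learned_metrics.py (reuse a saved configuration, or optimize and save it when the file is missing) follows a simple caching pattern. The sketch below is hypothetical: the `load_or_optimize` helper, the JSON file format and the file name are illustrative assumptions, and the real scripts may store the optimized program differently (DSPy programs, for example, provide their own `save`/`load` methods).

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict


def load_or_optimize(config_path: Path, optimize: Callable[[], Dict]) -> Dict:
    """Reuse a saved optimized configuration if the file exists; otherwise run
    the (slow) optimization once and save the result for later runs."""
    if config_path.exists():
        return json.loads(config_path.read_text())
    config = optimize()
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config))
    return config


# Demonstration with a stand-in optimizer that records how often it runs.
calls = []


def fake_optimize() -> Dict:
    calls.append(1)
    return {"demos": []}


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "faithfulness.json"   # hypothetical file name
    load_or_optimize(path, fake_optimize)    # no file yet: optimizes and saves
    load_or_optimize(path, fake_optimize)    # file exists: reuses it
    print(len(calls))                        # 1
    path.unlink()                            # like deleting the config to force re-optimization
    load_or_optimize(path, fake_optimize)
    print(len(calls))                        # 2
```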

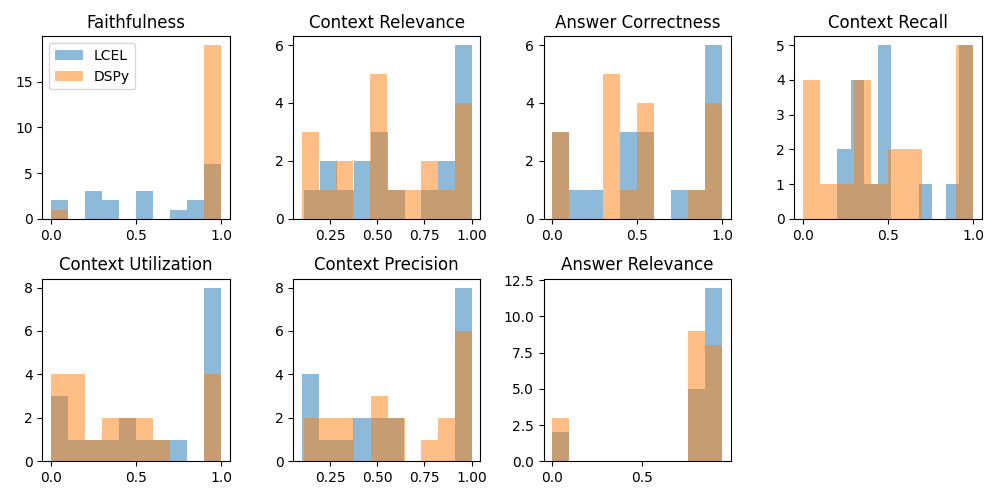

After computing each metric with both the "prompted" approach (LCEL) and the "optimized from data" approach (DSPy), we plot the resulting score distributions.

Visually, at least for some of the metrics, the DSPy scores appear more tightly concentrated near 0 and 1. To quantify this intuition, we measure how far each score deviates from 0.5 (on either the upper or the lower side), then compute the standard deviation of these deviations for the LCEL and DSPy scores of each metric. Lower values indicate that the scores are more consistently distant from 0.5, which we read as greater confidence. The results are summarized below.
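A minimal sketch of this spread measure, assuming "deviation" means the absolute distance |s - 0.5| and using the population standard deviation (the text does not say which variant was used). The function name `deviation_spread` is hypothetical.

```python
from statistics import pstdev
from typing import Iterable


def deviation_spread(scores: Iterable[float]) -> float:
    """Population standard deviation of |score - 0.5|.

    A value of 0 means every score is equally far from 0.5 (for example, all
    scores are exactly 0 or 1); NaN scores are skipped.
    """
    deviations = [abs(s - 0.5) for s in scores if s == s]  # NaN != NaN
    return pstdev(deviations)


print(deviation_spread([0.0, 1.0, 1.0, 0.0]))  # 0.0
print(deviation_spread([0.5, 1.0]))            # 0.25
```

Note that a spread of 0 only says the scores are equidistant from 0.5, so it is best read together with the histograms.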

| Metric | LCEL (std. dev.) | DSPy (std. dev.) |
|---|---|---|
| Faithfulness | 0.162 | 0.000 |
| Context Relevance | 0.191 | 0.184 |
| Answer Correctness | 0.194 | 0.193 |
| Context Recall | 0.191 | 0.183 |
| Context Utilization | 0.186 | 0.189 |
| Context Precision | 0.191 | 0.178 |
| Answer Relevance | 0.049 | 0.064 |

| Answer Relevance | 0.049 | 0.064 |

For five of the seven metrics (all except Context Utilization and Answer Relevance), the optimized DSPy prompts produce lower values, i.e. more confident scores. In many cases the difference is quite small, which may be attributed to the relatively small number of examples we are working with.