Miran Heo*, Sukjun Hwang*, Seoung Wug Oh, Joon-Young Lee, Seon Joo Kim (*equal contribution)

- Jan 20, 2023: Our new online VIS method "GenVIS" is available here!
- Sep 14, 2022: VITA is accepted to NeurIPS 2022!
- Aug 15, 2022: Code and pretrained weights are now available! Thanks for your patience :)

See installation instructions.

We provide a script, `train_net_vita.py`, to train all the configs provided in VITA.

To train a model with `train_net_vita.py` on VIS, first set up the corresponding datasets following Preparing Datasets for VITA.

Then run with COCO pretrained weights in the Model Zoo:

```bash
python train_net_vita.py --num-gpus 8 \
  --config-file configs/youtubevis_2019/vita_R50_bs8.yaml \
  MODEL.WEIGHTS vita_r50_coco.pth
```
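Like other Detectron2-based training scripts, `train_net_vita.py` appears to accept trailing `KEY VALUE` pairs (here `MODEL.WEIGHTS vita_r50_coco.pth`) that override entries loaded from the YAML config file. In Detectron2 this is handled by yacs' `CfgNode.merge_from_list`; the sketch below is a simplified, hypothetical stand-in for that mechanism. The `merge_overrides` function and the toy config dict are illustrative assumptions, not VITA code.

```python
import ast
from typing import Any, Dict, List


def merge_overrides(cfg: Dict[str, Any], opts: List[str]) -> Dict[str, Any]:
    """Apply trailing KEY VALUE pairs (e.g. MODEL.WEIGHTS foo.pth) to a nested dict.

    Hypothetical, simplified stand-in for yacs/Detectron2 `merge_from_list`.
    """
    if len(opts) % 2 != 0:
        raise ValueError(f"overrides must come in KEY VALUE pairs, got {opts}")
    for key, raw in zip(opts[0::2], opts[1::2]):
        # Parse numbers, booleans, lists, etc.; anything else (e.g. a path) stays a string.
        try:
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            value = raw
        node = cfg
        *groups, leaf = key.split(".")
        for group in groups:
            if not isinstance(node.get(group), dict):
                raise KeyError(f"unknown config group in {key!r}")
            node = node[group]
        # Reject typos instead of silently creating new keys.
        if leaf not in node:
            raise KeyError(f"unknown config key {key!r}")
        node[leaf] = value
    return cfg


if __name__ == "__main__":
    # Illustrative config; real VITA configs contain many more keys.
    cfg = {"MODEL": {"WEIGHTS": ""}, "SOLVER": {"MAX_ITER": 1000}}
    merge_overrides(cfg, ["MODEL.WEIGHTS", "vita_r50_coco.pth", "SOLVER.MAX_ITER", "500"])
    print(cfg)  # {'MODEL': {'WEIGHTS': 'vita_r50_coco.pth'}, 'SOLVER': {'MAX_ITER': 500}}
```

Because values go through `ast.literal_eval` when possible, `500` becomes an integer while a checkpoint path stays a string; unknown keys raise an error, roughly mirroring how yacs refuses overrides for keys that do not exist in the config.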

To evaluate a model's performance, use

```bash
python train_net_vita.py \
  --config-file configs/youtubevis_2019/vita_R50_bs8.yaml \
  --eval-only MODEL.WEIGHTS /path/to/checkpoint_file
```
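When several checkpoints need to be evaluated, the evaluation command above can be generated from a small script. This is a sketch under stated assumptions: `build_eval_cmd` and `evaluate_all` are hypothetical helpers, and the extra `OUTPUT_DIR` override assumes the standard Detectron2 config key so that each run writes its results to a separate folder. By default it only prints the commands (dry run).

```python
import shlex
import subprocess
from pathlib import PurePath
from typing import List


def build_eval_cmd(config_file: str, checkpoint: str, output_dir: str) -> List[str]:
    """Hypothetical helper: the evaluation command as an argv list."""
    return [
        "python", "train_net_vita.py",
        "--config-file", config_file,
        "--eval-only",
        "MODEL.WEIGHTS", checkpoint,
        # Assumption: Detectron2's standard OUTPUT_DIR key, one folder per checkpoint.
        "OUTPUT_DIR", output_dir,
    ]


def evaluate_all(config_file: str, checkpoints: List[str], dry_run: bool = True) -> List[str]:
    """Build (and, if dry_run is False, run) one evaluation per checkpoint."""
    commands = []
    for ckpt in checkpoints:
        output_dir = f"output/eval_{PurePath(ckpt).stem}"
        cmd = build_eval_cmd(config_file, ckpt, output_dir)
        commands.append(shlex.join(cmd))  # shell-quoted string for logging
        if not dry_run:
            # check=True raises CalledProcessError on the first failing evaluation.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    for line in evaluate_all(
        "configs/youtubevis_2019/vita_R50_bs8.yaml",
        ["/path/to/checkpoint_file"],
    ):
        print(line)
```

Passing the command as a list to `subprocess.run` avoids shell-quoting issues with unusual checkpoint paths; `shlex.join` is only used to produce a copy-pasteable string.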

| Name | R-50 | R-101 | Swin-L |
|---|---|---|---|
| VITA | model | model | model |

| Name | Backbone | AP | AP50 | AP75 | AR1 | AR10 | Download |
|---|---|---|---|---|---|---|---|
| VITA | R-50 | 49.8 | 72.6 | 54.5 | 49.4 | 61.0 | model |
| VITA | Swin-L | 63.0 | 86.9 | 67.9 | 56.3 | 68.1 | model |

| Name | Backbone | AP | AP50 | AP75 | AR1 | AR10 | Download |
|---|---|---|---|---|---|---|---|
| VITA | R-50 | 45.7 | 67.4 | 49.5 | 40.9 | 53.6 | model |
| VITA | Swin-L | 57.5 | 80.6 | 61.0 | 47.7 | 62.6 | model |

| Name | Backbone | AP | AP50 | AP75 | AR1 | AR10 | Download |
|---|---|---|---|---|---|---|---|
| VITA | R-50 | 19.6 | 41.2 | 17.4 | 11.7 | 26.0 | model |
| VITA | Swin-L | 27.7 | 51.9 | 24.9 | 14.9 | 33.0 | model |
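The columns in these tables follow the YouTube-VIS evaluation protocol (also commonly used for OVIS): AP averages precision over IoU thresholds from 0.50 to 0.95 in steps of 0.05, AP50 and AP75 use single thresholds of 0.50 and 0.75, and AR1/AR10 are average recall given at most 1 or 10 predictions per video. The IoU is computed over a whole video track: intersections and unions are summed across all frames before dividing. The pure-Python sketch below illustrates that video-level IoU only; the nested-list mask representation and the function names are assumptions for illustration, not the evaluation code VITA uses.

```python
from typing import List, Sequence

# One frame is an H x W grid of 0/1 values; a track is a sequence of frames.
# Assumption: all frames of both tracks share the same H x W.
Frame = Sequence[Sequence[int]]

# IoU thresholds behind the AP column: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS: List[float] = [t / 100 for t in range(50, 100, 5)]


def video_iou(pred: Sequence[Frame], gt: Sequence[Frame]) -> float:
    """Spatio-temporal IoU: sum intersections and unions over all frames, then divide."""
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must cover the same frames")
    inter = union = 0
    for pred_frame, gt_frame in zip(pred, gt):
        for pred_row, gt_row in zip(pred_frame, gt_frame):
            for p, g in zip(pred_row, gt_row):
                inter += int(bool(p) and bool(g))
                union += int(bool(p) or bool(g))
    # Both tracks empty in every frame: return 0 to avoid division by zero.
    return inter / union if union else 0.0


def thresholds_passed(iou: float) -> List[float]:
    """IoU thresholds this prediction clears (matching against other predictions is ignored)."""
    return [t for t in IOU_THRESHOLDS if iou >= t]


if __name__ == "__main__":
    gt = [[[1, 1], [0, 0]], [[1, 0], [0, 0]]]    # 2 frames of 2x2 masks
    pred = [[[1, 0], [0, 0]], [[1, 1], [0, 0]]]
    iou = video_iou(pred, gt)
    print(iou)                     # 0.5
    print(thresholds_passed(iou))  # [0.5]
```

Since unions are accumulated over every frame, a predicted track that drops the object in some frames is penalized: those frames still add ground-truth pixels to the union without contributing to the intersection.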

The majority of VITA is licensed under the Apache-2.0 License. However, portions of the project are available under separate license terms: Detectron2 (Apache-2.0 License), IFC (Apache-2.0 License), Mask2Former (MIT License), and Deformable-DETR (Apache-2.0 License).

If you use VITA in your research or wish to refer to the baseline results published in the Model Zoo, please use the following BibTeX entry.

@inproceedings{VITA,

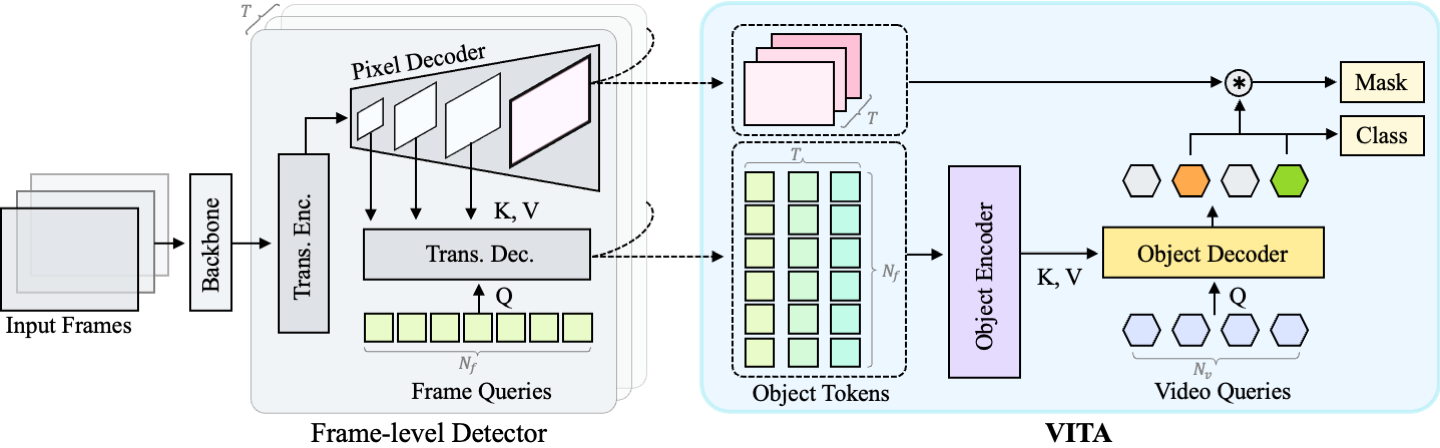

title={VITA: Video Instance Segmentation via Object Token Association},

author={Heo, Miran and Hwang, Sukjun and Oh, Seoung Wug and Lee, Joon-Young and Kim, Seon Joo},

booktitle={Advances in Neural Information Processing Systems},

year={2022}

}Our code is largely based on Detectron2, IFC, Mask2Former, and Deformable DETR. We are truly grateful for their excellent work.