Efficient Multimodal Transformer with Dual-Level Feature Restoration for Robust Multimodal Sentiment Analysis (TAC 2023)

[arXiv], [IEEE]

Licai Sun, Zheng Lian, Bin Liu, and Jianhua Tao

University of Chinese Academy of Sciences & Institute of Automation, Chinese Academy of Sciences & Tsinghua University

[2023.11.16] We upload the poster of EMT-DLFR for CCAC 2023.

In this paper, we aims to tackle two major challenges in robust multimodal sentiment analysis (MSA):

- inefficiency when modeling cross-modal interactions in unaligned multimodal data.

- vulnerability to random modality feature missing which typically occurs in realistic settings.

To this end, we propose Efficient Multimodal Transformer with Dual-Level Feature Restoration (EMT-DLFR):

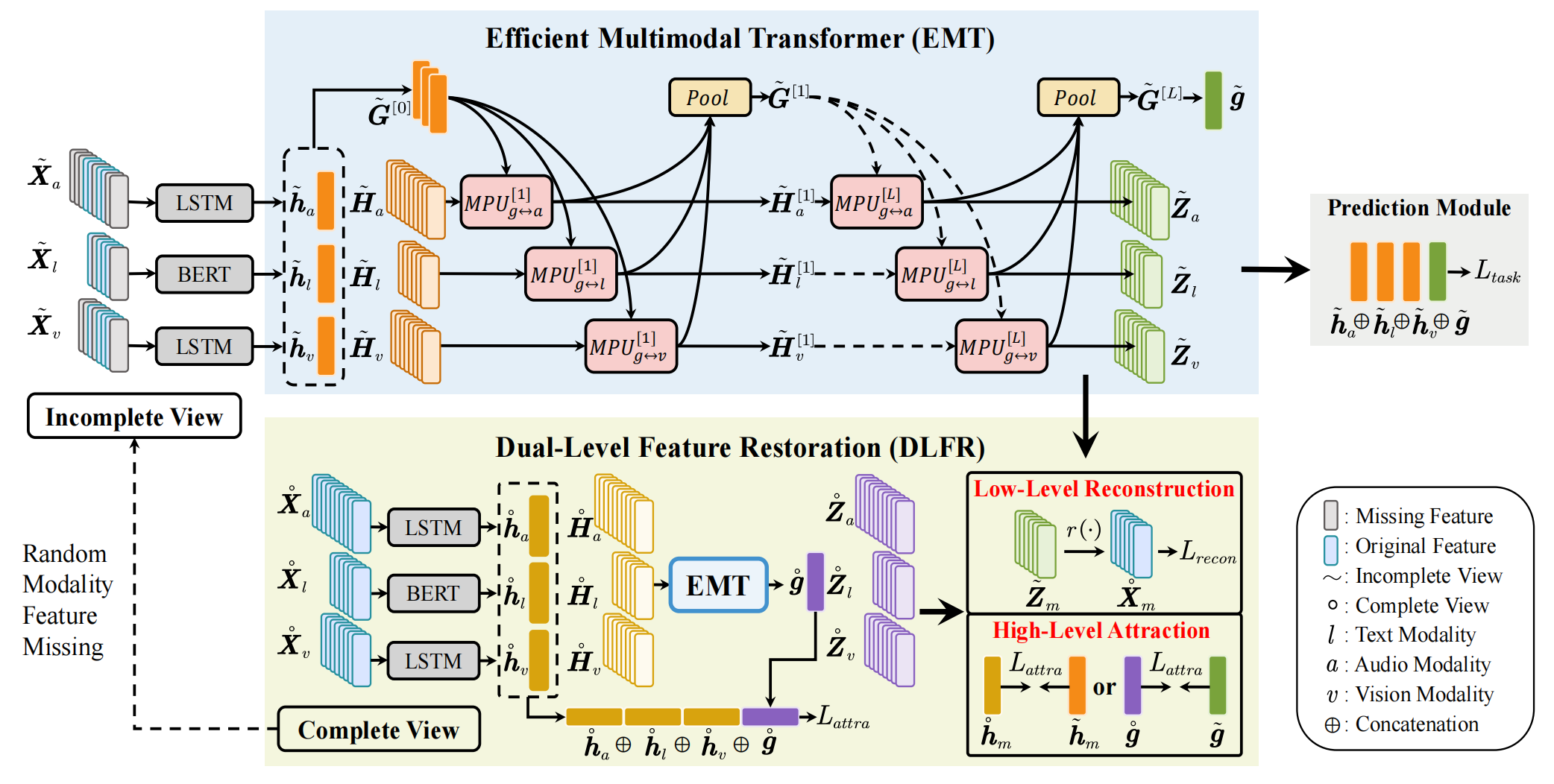

- Efficient Multimodal Transformer (EMT). EMT introduces the global multimodal context and employs it to interact with local unimodal features to enable efficient global-local cross-modal interaction, which not only avoids the quadratic scaling cost of previous local-local interaction methods (e.g., MulT, TFR-Net, and PMR) but also leads to performance gains.

- Dual-Level Feature Restoration (DLFR). Unlike the standalone implicit low-level feature reconstruction in TFR-Net, DLFR combines both implicit low-level feature reconstruction and explicit high-level feature attraction to more effectively guide EMT to achieve robust representation learning from incomplete multimodal data.

Python 3.8PyTorch 1.7.1transformers==4.29.0einops==0.6.0scikit-learn=1.1.3pandas==1.5.1numpy=1.23.4

Please refer to environment.yml for more details.

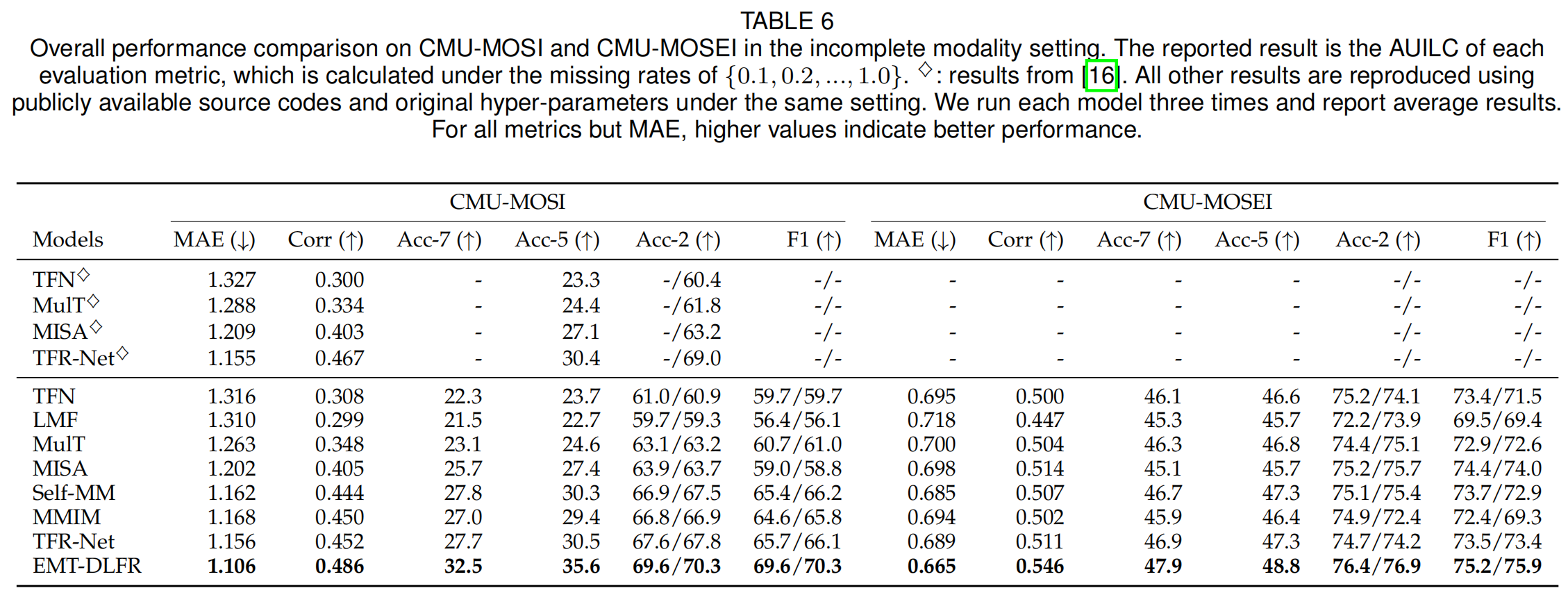

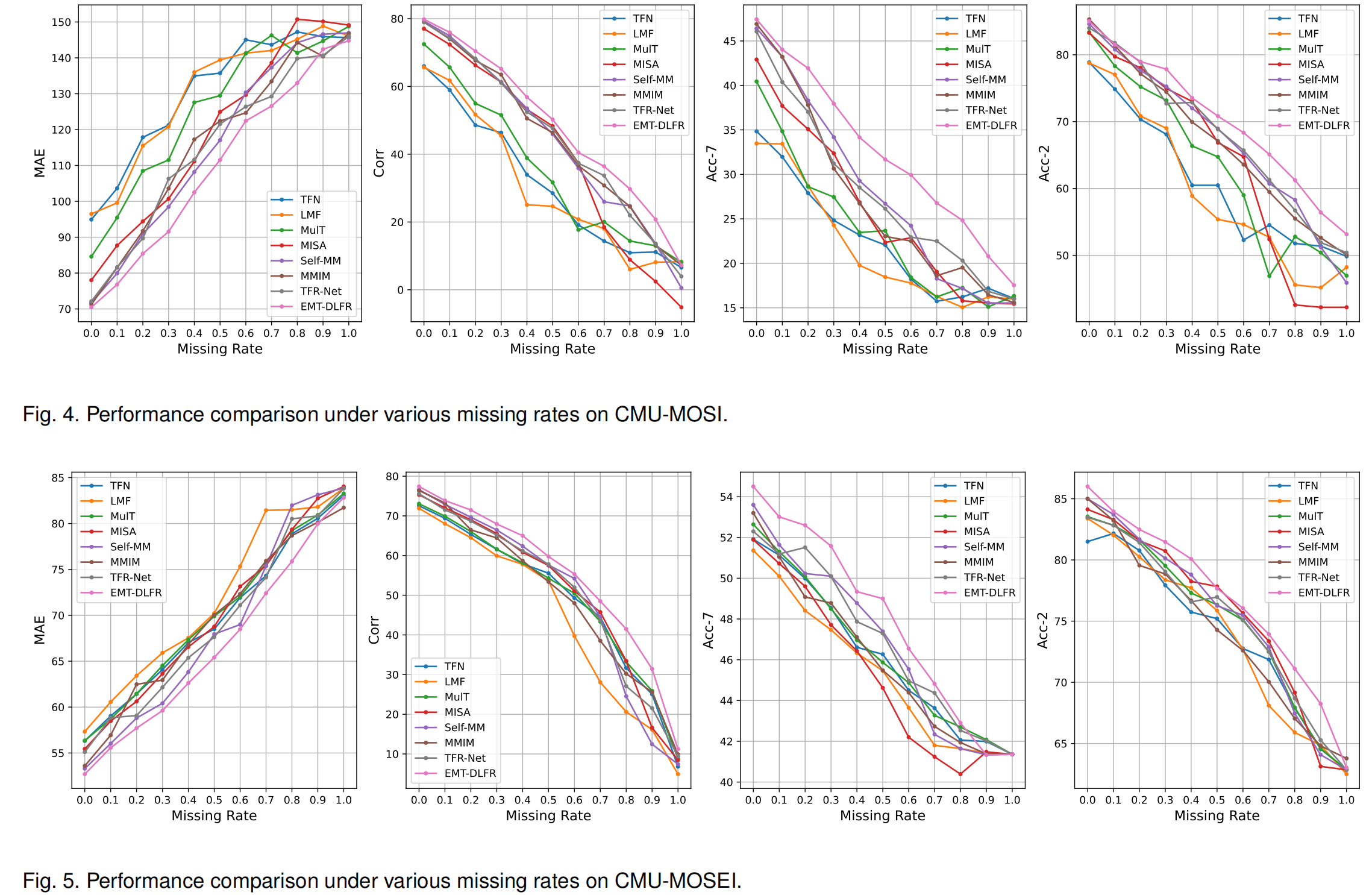

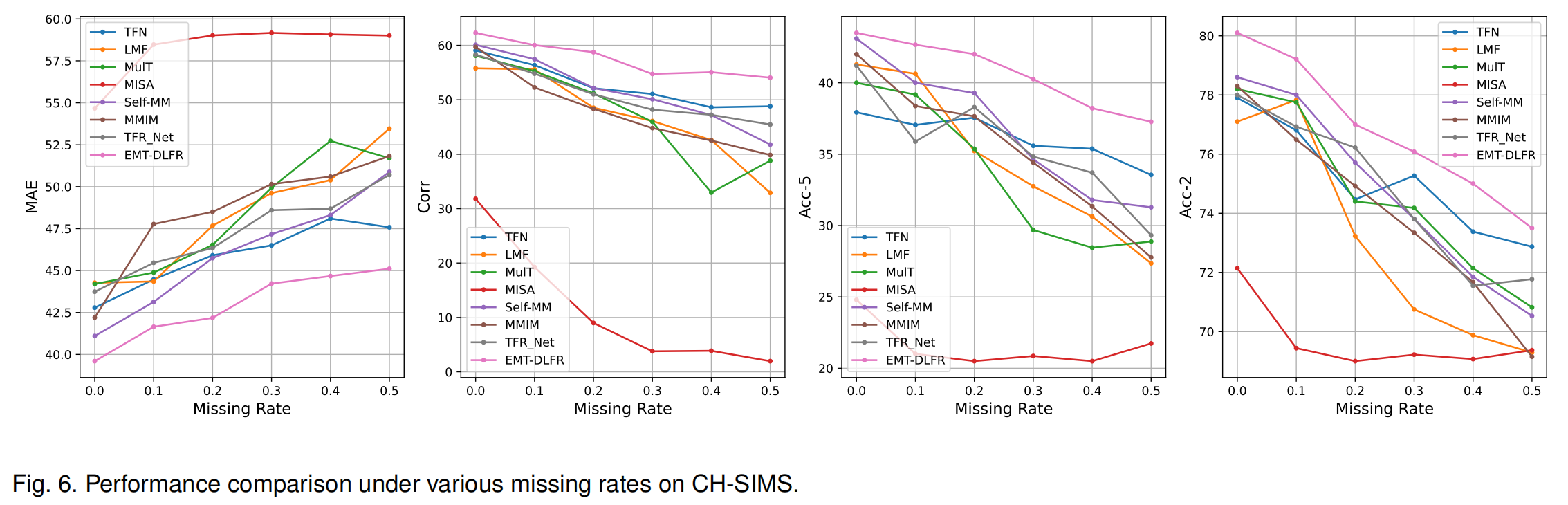

- We conduct experiments on three popular MSA datasets, including CMU-MOSI, CMU-MOSEI, and CH-SIMS.

- Download pre-pocessed dataset features and pre-trained bert checkpoints from Baidu Cloud Drive (code:

ctgs) or Google Cloud Drive (Credits: Self-MM). Assume your data structure to be like this (usingsha1sumcommand to verify SHA-1 hash value in the parenthesis):

|MMSA

|MOSI

|Processed

|unaligned_50.pkl (5da0b8440fc5a7c3a457859af27458beb993e088)

|MOSEI

|Processed

|unaligned_50.pkl (db3e2cff4d706a88ee156981c2100975513d4610)

|SIMS

|Processed

|unaligned_39.pkl (a00c73e92f66896403c09dbad63e242d5af756f8)

-

Go to config/get_data_root.py and change the data root to your own path.

-

For SIMS, you need to use the following script to generate the normalized version (only normalize audio and visual features).

python preprocess/normalize_sims.py

- CMU-MOSI

sh scripts/mosi/run_once.sh 0

or

sh scripts/mosi/run.sh 0

'run_once.sh': for running across all missing rates (i.e., 0.0, 0.1, ..., 1.0).

'run.sh': only run a single specified missing rate (e.g., 0.5).

- CMU-MOSEI

sh scripts/mosei/run_once.sh 0

- CMU-MOSEI

sh scripts/sims/run_once.sh 0

- CMU-MOSI & CMU-MOSEI

- CH-SIMS

- Note: since these datasets are relatively small and there exists the randomness caused by different software & hardware settings, the reproduced results might slightly better or worse than those reports in the paper. You can run multiple times and tweak the hyperparameters to obtain more better results.

Our conversational version for incomplete multimodal learning is open-sourced at GCNet.

This project is built upon TFR-Net and MMSA. Thanks for the nice codebases.

If you think this project is helpful, please feel free to leave a star and cite our paper:

@article{sun2023efficient,

title={Efficient multimodal transformer with dual-level feature restoration for robust multimodal sentiment analysis},

author={Sun, Licai and Lian, Zheng and Liu, Bin and Tao, Jianhua},

journal={IEEE Transactions on Affective Computing},

year={2023},

publisher={IEEE}

}

If you have any questions, feel free to contact us.

Licai Sun: sunlicai2019@ia.ac.cn, or Zheng Lian: lianzheng2016@ia.ac.cn