- A collection of resources on attention-based graph neural networks (GitHub, Paper).

- Pull requests that add more awesome papers are welcome.

- [x] [journal] [model] paper_title [[paper]](link) [[code]](link)

- Surveys

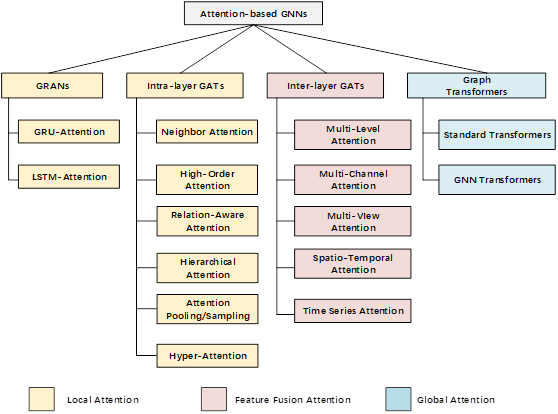

- GRANs (Graph Recurrent Attention Networks)

- GATs (Graph Attention Networks: Intra-layer, Inter-layer)

- Graph Transformers

- [Artif Intell Rev2023] [survey] Attention-based Graph Neural Networks: A Survey [paper]

- [TKDD2019] [survey] Attention Models in Graphs: A Survey [paper]

- [arXiv2022] [survey] Transformer for Graphs: An Overview from Architecture Perspective [paper]

- [TIST2022] [] Graph neural networks: Taxonomy, advances, and trends [paper]

- [2020] [survey] A Comprehensive Survey on Graph Neural Networks [paper]

- [2021] [survey] Graph Deep Learning: State of the Art and Challenges [paper]

- [2022] [survey] Graph Neural Networks for Natural Language Processing: A Survey [paper]

- [2022] [] Graph neural networks for recommender system [paper]

- [2021] [survey] Graph Neural Network for Traffic Forecasting: A Survey [paper]

- [2022] [survey] Graph Neural Networks in IoT: A Survey [paper]

- [2023] [] Multimodal learning with graphs [paper]

- [TKDE2022] [survey] A General Survey on Attention Mechanisms in Deep Learning [paper]

- [2021] [survey] Neural Attention Models in Deep Learning: Survey and Taxonomy [paper]

- [2021] [survey] An Attentive Survey of Attention Models [paper]

- [2021] [survey] Attention in Natural Language Processing [paper]

- [2018] [survey] An Introductory Survey on Attention Mechanisms in NLP Problems [paper]

- [2022] [survey] Visual Attention Methods in Deep Learning: An In-Depth Survey [paper]

- [2022] [survey] Visual Attention Network [paper]

- [2021] [survey] Attention Mechanisms in Computer Vision: A Survey [paper]

- [2021] [survey] Attention mechanisms and deep learning for machine vision: A survey of the state of the art [paper]

- [2016] [survey] Survey on the attention based RNN model and its applications in computer vision [paper]

- [2022] [survey] Efficient Transformers: A Survey [paper]

- [2021] [survey] A Survey of Transformers [paper]

- [TPAMI2022] [survey] A Survey on Vision Transformer [paper]

- [2022] [survey] Transformers in Vision: A Survey [paper]

- [2022] [survey] Transformers Meet Visual Learning Understanding: A Comprehensive Review [paper]

- [2022] [survey] Transformers in computational visual media: A survey [paper]

- [2021] [survey] A Survey of Visual Transformers [paper]

- [2020] [survey] A Survey on Visual Transformer [paper]

- [2022] [survey] Transformers in Time Series: A Survey [paper]

- [ICLR2016] [GGNN] Gated Graph Sequence Neural Networks [paper] [code]

- [UAI2018] [GaAN] GaAN: Gated Attention Networks for Learning on Large and Spatiotemporal Graphs [paper] [code]

- [ICML2018] [GraphRNN] GraphRNN: Generating Realistic Graphs with Deep Auto-regressive Models [paper] [code]

- [NeurIPS2019] [GRAN] Efficient Graph Generation with Graph Recurrent Attention Networks [paper] [code]

- [T-SP2020] [GRNN] Gated Graph Recurrent Neural Networks [paper]

- [2021] [GR-GAT] Gated Relational Graph Attention Networks [paper]

- [NeurIPS2017] [GraphSage] Inductive representation learning on large graphs [paper] [code]

- [ICML2018] [JK-Net] Representation Learning on Graphs with Jumping Knowledge Networks [paper]

- [KDD2018] [GAM] Graph Classification using Structural Attention [paper] [code]

- [AAAI2019] [GeniePath] GeniePath: Graph Neural Networks with Adaptive Receptive Paths [paper]

- [ICLR2018] [GAT] Graph Attention Networks [paper] [code]

- [NeurIPS2019] [C-GAT] Improving Graph Attention Networks with Large Margin-based Constraints [paper]

- [IJCAI2020] [CPA] Improving Attention Mechanism in Graph Neural Networks via Cardinality Preservation [paper] [code]

- [ICLR2022] [GATv2] How Attentive are Graph Attention Networks? [paper] [code]

- [ICLR2022] [PPRGAT] Personalized PageRank Meets Graph Attention Networks [paper]

- [KDD2021] [Simple-HGN] Are we really making much progress? Revisiting, benchmarking and refining heterogeneous graph neural networks [paper] [code]

- [ICLR2021] [SuperGAT] How to Find Your Friendly Neighborhood: Graph Attention Design with Self-Supervision [paper] [code]

- [NeurIPS2021] [DMP] Diverse Message Passing for Attribute with Heterophily [paper]

- [KDD2019] [GANet] Graph Representation Learning via Hard and Channel-Wise Attention Networks [paper]

- [NeurIPS2021] [CAT] Learning Conjoint Attentions for Graph Neural Nets [paper] [code]

- [T-NNLS2021] [MSNA] Neighborhood Attention Networks With Adversarial Learning for Link Prediction [paper]

- [arXiv2018] [GGAT] Deeply learning molecular structure-property relationships using attention- and gate-augmented graph convolutional network [paper]

- [NeurIPS2019] [HGCN] Hyperbolic Graph Convolutional Neural Networks [paper]

- [TBD2021] [HAT] Hyperbolic graph attention network [paper]

- [IJCAI2020] [Hype-HAN] Hype-HAN: Hyperbolic Hierarchical Attention Network for Semantic Embedding [paper]

- [ICLR2019] [] Hyperbolic Attention Networks [paper]

- [ICLR2018] [AGNN] Attention-based Graph Neural Network for Semi-supervised Learning [paper]

- [KDD2018] [HTNE] Embedding Temporal Network via Neighborhood Formation [paper] [code]

- [CVPR2020] [DKGAT] Distilling Knowledge from Graph Convolutional Networks [paper] [code]

- [ICCV2021] [DAGL] Dynamic Attentive Graph Learning for Image Restoration [paper] [code]

- [AAAI2021] [SCGA] Structured Co-reference Graph Attention for Video-grounded Dialogue [paper]

- [AAAI2021] [Co-GAT] Co-GAT: A Co-Interactive Graph Attention Network for Joint Dialog Act Recognition and Sentiment Classification [paper]

- [ACL2020] [ED-GAT] Entity-Aware Dependency-Based Deep Graph Attention Network for Comparative Preference Classification [paper]

- [EMNLP2019] [TD-GAT] Syntax-Aware Aspect Level Sentiment Classification with Graph Attention Networks [paper]

- [WWW2020] [GATON] Graph Attention Topic Modeling Network [paper]

- [KDD2017] [GRAM] GRAM: Graph-based attention model for healthcare representation learning [paper] [code]

- [IJCAI2019] [SPAGAN] SPAGAN: Shortest Path Graph Attention Network [paper]

- [PKDD2021] [PaGNN] Inductive Link Prediction with Interactive Structure Learning on Attributed Graph [paper]

- [arXiv2019] [DeepLinker] Link Prediction via Graph Attention Network [paper]

- [IJCNN2020] [CGAT] Heterogeneous Information Network Embedding with Convolutional Graph Attention Networks [paper]

- [ICLR2020] [ADSF] Adaptive Structural Fingerprints for Graph Attention Networks [paper]

- [KDD2021] [T-GAP] Learning to Walk across Time for Interpretable Temporal Knowledge Graph Completion [paper] [code]

- [NeurIPS2018] [MAF] Modeling Attention Flow on Graphs [paper]

- [IJCAI2021] [MAGNA] Multi-hop Attention Graph Neural Network [paper] [code]

- [AAAI2020] [SNEA] Learning Signed Network Embedding via Graph Attention [paper]

- [ICANN2019] [SiGAT] Signed Graph Attention Networks [paper]

- [ICLR2019] [RGAT] Relational Graph Attention Networks [paper] [code]

- [arXiv2018] [EAGCN] Edge attention-based multi-relational graph convolutional networks [paper] [code]

- [KDD2021] [WRGNN] Breaking the Limit of Graph Neural Networks by Improving the Assortativity of Graphs with Local Mixing Patterns [paper] [code]

- [AAAI2020] [HetSANN] An Attention-based Graph Neural Network for Heterogeneous Structural Learning [paper] [code]

- [AAAI2020] [TALP] Type-Aware Anchor Link Prediction across Heterogeneous Networks Based on Graph Attention Network [paper]

- [KDD2019] [KGAT] KGAT: Knowledge Graph Attention Network for Recommendation [paper] [code]

- [KDD2019] [GATNE] Representation Learning for Attributed Multiplex Heterogeneous Network [paper] [code]

- [AAAI2021] [RelGNN] Relation-aware Graph Attention Model With Adaptive Self-adversarial Training [paper]

- [KDD2020] [CGAT] Graph Attention Networks over Edge Content-Based Channels [paper]

- [ACL2019] [AFE] Learning Attention-based Embeddings for Relation Prediction in Knowledge Graphs [paper] [code]

- [CIKM2021] [DisenKGAT] DisenKGAT: Knowledge Graph Embedding with Disentangled Graph Attention Network [paper]

- [AAAI2021] [GTAN] Graph-Based Tri-Attention Network for Answer Ranking in CQA [paper]

- [ACL2020] [R-GAT] Relational Graph Attention Network for Aspect-based Sentiment Analysis [paper] [code]

- [ICCV2019] [ReGAT] Relation-Aware Graph Attention Network for Visual Question Answering [paper] [code]

- [AAAI2021] [AD-GAT] Modeling the Momentum Spillover Effect for Stock Prediction via Attribute-Driven Graph Attention Networks [paper]

- [NeurIPS2018] [GAW] Watch your step: learning node embeddings via graph attention [paper]

- [TPAMI2021] [NLGAT] Non-Local Graph Neural Networks [paper]

- [NeurIPS2019] [ChebyGIN] Understanding Attention and Generalization in Graph Neural Networks [paper] [code]

- [ICML2019] [SAGPool] Self-Attention Graph Pooling [paper] [code]

- [ICCV2019] [Attpool] Attpool: Towards hierarchical feature representation in graph convolutional networks via attention mechanism [paper]

- [WWW2019] [HAN] Heterogeneous Graph Attention Network [paper] [code]

- [NC2022] [PSHGAN] Heterogeneous graph embedding by aggregating meta-path and meta-structure through attention mechanism [paper]

- [IJCAI2017] [PRML] Link prediction via ranking metric dual-level attention network learning [paper]

- [WSDM2022] [GraphHAM] Graph Embedding with Hierarchical Attentive Membership [paper]

- [AAAI2020] [RGHAT] Relational Graph Neural Network with Hierarchical Attention for Knowledge Graph Completion [paper]

- [AAAI2019] [LAN] Logic Attention Based Neighborhood Aggregation for Inductive Knowledge Graph Embedding [paper] [code]

- [2022] [EFEGAT] Learning to Solve an Order Fulfillment Problem in Milliseconds with Edge-Feature-Embedded Graph Attention [paper]

- [WWW2019] [DANSER] Dual Graph Attention Networks for Deep Latent Representation of Multifaceted Social Effects in Recommender Systems [paper] [code]

- [WWW2019] [UVCAN] User-Video Co-Attention Network for Personalized Micro-video Recommendation [paper]

- [KBS2020] [HAGERec] HAGERec: Hierarchical Attention Graph Convolutional Network Incorporating Knowledge Graph for Explainable Recommendation [paper]

- [Bio2021] [GCATSL] Graph contextualized attention network for predicting synthetic lethality in human cancers [paper] [code]

- [ICMR2020] [DAGC] DAGC: Employing Dual Attention and Graph Convolution for Point Cloud based Place Recognition [paper] [code]

- [AAAI2020] [AGCN] Graph Attention Based Proposal 3D ConvNets for Action Detection [paper]

- [EMNLP2019] [HGAT] HGAT: Heterogeneous Graph Attention Networks for Semi-supervised Short Text Classification [paper] [code]

- [ICLR2020] [Hyper-SAGNN] Hyper-SAGNN: A Self-Attention based Graph Neural Network for Hypergraphs [paper] [code]

- [CIKM2021] [HHGR] Double-Scale Self-Supervised Hypergraph Learning for Group Recommendation [paper] [code]

- [ICDM2021] [HyperTeNet] HyperTeNet: Hypergraph and Transformer-based Neural Network for Personalized List Continuation [paper] [code]

- [PR2020] [Hyper-GAT] Hypergraph Convolution and Hypergraph Attention [paper] [code]

- [CVPR2020] [] Hypergraph attention networks for multimodal learning [paper]

- [KDD2020] [DAGNN] Towards Deeper Graph Neural Networks [paper] [code]

- [T-NNLS2020] [AP-GCN] Adaptive propagation graph convolutional network [paper] [code]

- [CIKM2021] [TDGNN] Tree Decomposed Graph Neural Network [paper] [code]

- [arXiv2020] [GMLP] GMLP: Building Scalable and Flexible Graph Neural Networks with Feature-Message Passing [paper]

- [KDD2022] [GAMLP] Graph Attention Multi-layer Perceptron [paper] [code]

- [AAAI2021] [FAGCN] Beyond Low-frequency Information in Graph Convolutional Networks [paper] [code]

- [arXiv2021] [ACM] Is Heterophily A Real Nightmare For Graph Neural Networks To Do Node Classification? [paper]

- [KDD2020] [AM-GCN] AM-GCN: Adaptive Multi-channel Graph Convolutional Networks [paper] [code]

- [AAAI2021] [UAG] Uncertainty-aware Attention Graph Neural Network for Defending Adversarial Attacks [paper]

- [CIKM2021] [MV-GNN] Semi-Supervised and Self-Supervised Classification with Multi-View Graph Neural Networks [paper]

- [KSEM2021] [GENet] Graph Ensemble Networks for Semi-supervised Embedding Learning [paper]

- [NN2020] [MGAT] MGAT: Multi-view Graph Attention Networks [paper]

- [CIKM2017] [MVE] An Attention-based Collaboration Framework for Multi-View Network Representation Learning [paper]

- [NC2021] [EAGCN] Multi-view spectral graph convolution with consistent edge attention for molecular modeling [paper]

- [SIGIR2020] [GCE-GNN] Global Context Enhanced Graph Neural Networks for Session-based Recommendation [paper]

- [ICLR2019] [DySAT] Dynamic Graph Representation Learning via Self-Attention Networks [paper] [code]

- [PAKDD2020] [TemporalGAT] TemporalGAT: Attention-Based Dynamic Graph Representation Learning [paper]

- [IJCAI2021] [GAEN] GAEN: Graph Attention Evolving Networks [paper] [code]

- [CIKM2019] [MMDNE] Temporal Network Embedding with Micro- and Macro-dynamics [paper] [code]

- [ICLR2020] [TGAT] Inductive Representation Learning on Temporal Graphs [paper] [code]

- [2022] [TR-GAT] Time-Aware Relational Graph Attention Network for Temporal Knowledge Graph Embeddings [paper]

- [CVPR2022] [T-GNN] Adaptive Trajectory Prediction via Transferable GNN [paper]

- [AAAI2018] [ST-GCN] Spatial Temporal Graph Convolutional Networks for Skeleton-Based Action Recognition [paper] [code]

- [AAAI2020] [GMAN] GMAN: A Graph Multi-Attention Network for Traffic Prediction [paper]

- [AAAI2019] [ASTGCN] Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting [paper] [code]

- [KDD2020] [ConSTGAT] ConSTGAT: Contextual Spatial-Temporal Graph Attention Network for Travel Time Estimation at Baidu Maps [paper]

- [ICLR2022] [RainDrop] Graph-Guided Network for Irregularly Sampled Multivariate Time Series [paper]

- [ICLR2021] [mTAND] Multi-Time Attention Networks for Irregularly Sampled Time Series [paper] [code]

- [ICDM2020] [MTAD-GAT] Multivariate Time-series Anomaly Detection via Graph Attention Network [paper]

- [WWW2020] [GACNN] Towards Fine-grained Flow Forecasting: A Graph Attention Approach for Bike Sharing Systems [paper]

- [IJCAI2018] [GeoMAN] GeoMAN: Multi-level Attention Networks for Geo-sensory Time Series Prediction [paper]

- [arXiv2019] [GTR] Graph transformer [paper]

- [arXiv2020] [U2GNN] Universal Self-Attention Network for Graph Classification [paper] [code]

- [WWW2022] [UGformer] Universal Graph Transformer Self-Attention Networks [paper] [code]

- [ICLR2021] [GMT] Accurate learning of graph representations with graph multiset pooling [paper] [code]

- [KDDCup2021] [Graphormer] Do Transformers Really Perform Bad for Graph Representation? [paper] [code]

- [NeurIPS2021] [Graphormer] Do Transformers Really Perform Bad for Graph Representation? [paper] [code]

- [NeurIPS2021] [HOT] Transformers Generalize DeepSets and Can be Extended to Graphs and Hypergraphs [paper] [code]

- [NeurIPS2020] [GROVER] Self-Supervised Graph Transformer on Large-Scale Molecular Data [paper] [code]

- [ICML(Workshop)2019] [PAGAT] Path-augmented graph transformer network [paper] [code]

- [AAAI2021] [GTA] GTA: Graph Truncated Attention for Retrosynthesis [paper]

- [AAAI2021] [GT] A generalization of transformer networks to graphs [paper] [code]

- [NeurIPS2021] [SAN] Rethinking graph transformers with spectral attention [paper] [code]

- [2020] [GraphBert] Graph-BERT: Only Attention is Needed for Learning Graph Representations [paper] [code]

- [ICML2021] [] Lipschitz Normalization for Self-Attention Layers with Application to Graph Neural Networks [paper]

- [IJCAI2021] [UniMP] Masked Label Prediction: Unified Message Passing Model for Semi-Supervised Classification [paper] [code]

- [NeurIPS2019] [GTN] Graph Transformer Networks [paper] [code]

- [KDD2020] [TagGen] A Data-Driven Graph Generative Model for Temporal Interaction Networks [paper]

- [NeurIPS2021] [GraphFormers] GraphFormers: GNN-nested Transformers for Representation Learning on Textual Graph [paper]

- [WWW2020] [HGT] Heterogeneous Graph Transformer [paper]

- [AAAI2020] [GTOS] Graph transformer for graph-to-sequence learning [paper] [code]

- [NAACL2019] [GraphWriter] Text Generation from Knowledge Graphs with Graph Transformers [paper] [code]

- [AAAI2021] [KHGT] Knowledge-Enhanced Hierarchical Graph Transformer Network for Multi-Behavior Recommendation [paper] [code]

- [AAAI2021] [GATE] GATE: Graph Attention Transformer Encoder for Cross-lingual Relation and Event Extraction [paper]

- [NeurIPS2021] [STAGIN] Learning Dynamic Graph Representation of Brain Connectome with Spatio-Temporal Attention [paper] [code]

Sun, C., Li, C., Lin, X. et al. Attention-based graph neural networks: a survey. Artif Intell Rev (2023). https://doi.org/10.1007/s10462-023-10577-2

@article{ WOS:001051847200001,

Author = {Sun, Chengcheng and Li, Chenhao and Lin, Xiang and Zheng, Tianji and Meng, Fanrong and Rui, Xiaobin and Wang, Zhixiao},

Title = {Attention-based graph neural networks: a survey},

Journal = {ARTIFICIAL INTELLIGENCE REVIEW},

Year = {2023},

Month = {2023 AUG 21},

DOI = {10.1007/s10462-023-10577-2},

EarlyAccessDate = {AUG 2023},

ISSN = {0269-2821},

EISSN = {1573-7462},

Unique-ID = {WOS:001051847200001},

}