Created by Yuxin Sun, Dong Lao, Ganesh Sundaramoorthi and Anthony Yezzi from Georgia Tech, UCLA and Raytheon Technologies.

This repository contains source code for the NeurIPS 2022 paper "Surprising Instabilities in Training Deep Networks and a Theoretical Analysis". We provide the code for producing experimental data, analyzing results and generating figures.

Please follow the installation instructions below.

Our codebase uses PyTorch. The code was tested with Python 3.7.9 and torch 1.8.0 on Ubuntu 18.04, and should also work with later versions.

We provide a demo (heat.py) that illustrates instability when discretizing the heat equation.

It plots the variable u at different times.

It generates a plot of stable evolution when dt = 0.4.

It generates a plot of unstable evolution when dt = 0.8.
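heat.py itself is not reproduced here. The following is a minimal sketch that assumes a 1D explicit scheme (forward Euler in time, central differences in space) for u_t = u_xx with grid spacing dx = 1. That grid spacing is an assumption; it is consistent with the two dt values quoted above but is not confirmed from heat.py. For this scheme the classic stability condition is dt / dx² ≤ 1/2. This is why dt = 0.4 stays bounded while dt = 0.8 lets high-frequency modes grow every step. The function name `simulate_heat` and its parameters are hypothetical.

```python
import numpy as np

def simulate_heat(dt, dx=1.0, n_points=50, n_steps=200, seed=0):
    """Forward-Euler / central-difference scheme for u_t = u_xx on a 1D grid,
    with u = 0 held fixed at both ends. Returns the initial and final u."""
    rng = np.random.default_rng(seed)
    u0 = rng.standard_normal(n_points)
    u0[0] = u0[-1] = 0.0              # Dirichlet boundary values
    r = dt / dx**2                    # mesh ratio; the explicit scheme is stable iff r <= 1/2
    u = u0.copy()
    for _ in range(n_steps):
        lap = np.zeros_like(u)
        lap[1:-1] = u[2:] - 2.0 * u[1:-1] + u[:-2]   # discrete Laplacian times dx^2
        u = u + r * lap
    return u0, u

u0, u_stable = simulate_heat(dt=0.4)     # r = 0.4: max |u| can only shrink
_, u_unstable = simulate_heat(dt=0.8)    # r = 0.8: the highest mode grows by about 2.2x per step
print(np.abs(u0).max(), np.abs(u_stable).max(), np.abs(u_unstable).max())
```

With r ≤ 1/2 each update is a convex combination of neighbouring values, so the maximum cannot grow. With r = 0.8 the amplification factor 1 - 4r sin²(θ/2) reaches about -2.2 for the highest-frequency mode.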

The folder ./instabillity contains code providing empirical evidence of restrained instabilities in current deep-learning training practice.

We provide code that reports the final test accuracy over different seeds (batch selections) and different floating-point perturbations (rows) for ResNet56 trained on CIFAR-10. It generates the data for Table 1 (left) in Section 4.2.

We provide ResNet56 with two activation functions, ReLU and Swish. Run:

./instabillity/main6.py

A different seed can be chosen by changing r in the arguments.
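How main6.py injects its floating-point perturbations is not described here. The sketch below illustrates two generic facts behind perturbations of this size. First, float32 addition is not associative, so merely reordering operations changes results. Second, a weight can be nudged by exactly one unit in the last place (ulp) with `np.nextafter`. The helper `perturb_one_ulp` is hypothetical and is not taken from the repository.

```python
import numpy as np

def perturb_one_ulp(weights):
    """Move every float32 entry to the next representable value toward +inf.

    Hypothetical helper: one way to create a floating-point-sized
    perturbation; not necessarily what main6.py does.
    """
    w = np.asarray(weights, dtype=np.float32)
    return np.nextafter(w, np.full_like(w, np.inf))

# Rounding alone already makes float32 arithmetic order-dependent:
a, b, c = np.float32(1e8), np.float32(-1e8), np.float32(1.0)
left = (a + b) + c    # 0 + 1 = 1
right = a + (b + c)   # -1e8 + 1 rounds back to -1e8 (spacing there is 8), so the sum is 0
print(left, right)

w = np.array([0.1, 1.0, 3.5], dtype=np.float32)
print(perturb_one_ulp(w) - w)   # one ulp per entry
```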

We also provide data-analysis code for this experiment in ./scripts/mean_variance.py. It computes the mean final accuracy and its standard deviation for the input data.
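The summary statistic can be sketched in a few lines. This assumes the final accuracies arrive as a plain list of numbers, one per run. Both the input format and the choice between sample (ddof=1) and population (ddof=0) standard deviation are assumptions; mean_variance.py may differ. The function name `accuracy_summary` is hypothetical.

```python
import numpy as np

def accuracy_summary(final_accuracies, ddof=1):
    """Mean and standard deviation of final test accuracies across runs.

    ddof=1 gives the sample standard deviation; use ddof=0 for the
    population version. Which one mean_variance.py uses is not stated.
    """
    acc = np.asarray(final_accuracies, dtype=np.float64)
    return float(acc.mean()), float(acc.std(ddof=ddof))
```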

We provide code showing the divergence phenomenon in network weights between the original SGD weights and the perturbed SGD weights.

It generates the data for two plots in Figure 2. Run:

./instabillity/main2.py

A different learning-rate divisor can be chosen by changing n in the arguments.
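main2.py works on ResNet weights. The following is only a one-parameter sketch of the same measurement: it runs gradient descent from w0 and from w0 + eps side by side and records their gap after every step. Several pieces are assumptions:

- The toy loss is f(w) = sqrt(1 + w²), which has curvature 1 at its minimum, so the linearized bound is lr < 2.
- Training is plain full-batch gradient descent.
- n simply divides a base learning rate.

The names `gd_divergence` and `base_lr` are hypothetical.

```python
import numpy as np

def gd_divergence(grad, w0, lr, n_steps=100, eps=1e-8):
    """Run gradient descent from w0 and from w0 + eps side by side and
    return |w_k - w'_k| after every step."""
    w, w_pert = float(w0), float(w0) + eps
    gaps = []
    for _ in range(n_steps):
        w = w - lr * grad(w)
        w_pert = w_pert - lr * grad(w_pert)
        gaps.append(abs(w - w_pert))
    return np.array(gaps)

def grad(w):
    # Gradient of the toy loss f(w) = sqrt(1 + w^2); its magnitude never exceeds 1.
    return w / np.sqrt(1.0 + w * w)

base_lr = 3.0                      # above the linearized bound of 2
for n in (1, 2):                   # hypothetical learning-rate divisor
    gaps = gd_divergence(grad, w0=0.0, lr=base_lr / n)
    print(n, gaps[0], gaps[-1])
```

With n = 1 the gap roughly doubles each step until it saturates at an O(1) value. It does not blow up, because the bounded gradient pulls the iterates back. This is a restrained instability in miniature. With n = 2 the step is below the bound and the gap decays.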

We also provide code for producing Figure 2(right) in ./instability/compute_iteration.m.

In folder ./single_layer, we include the code for experiments on a single layer network.

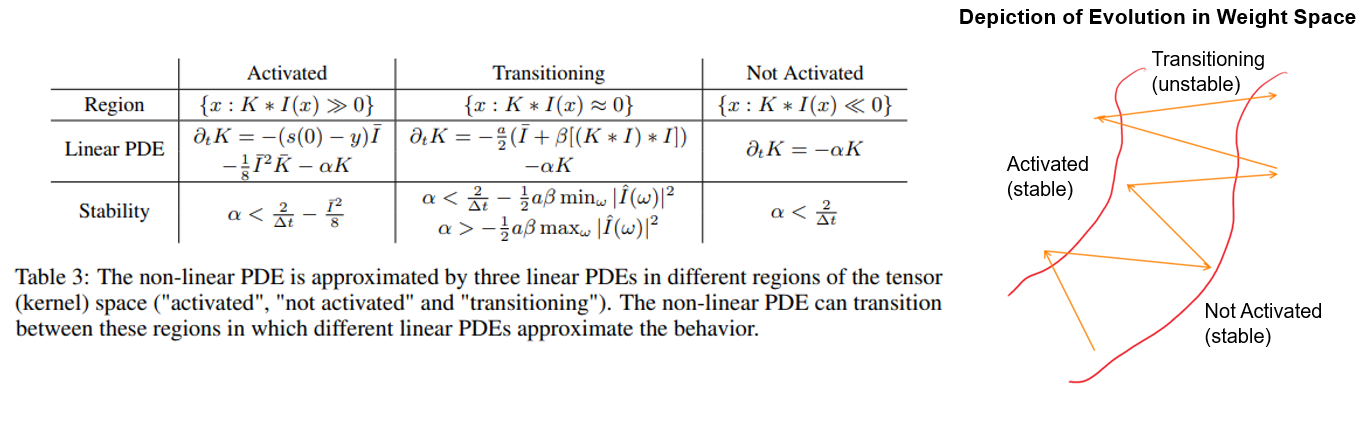

We provide code to validate the two stability bounds for the linearized PDE (9). It generates the loss plots for the discretization of the linearized PDE (9). Run:

./single_layer/linear.py

A different learning rate can be chosen by changing learning_rate in the parameters.
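The two bounds that linear.py validates are defined in the paper and not restated here. As a generic illustration of where a step-size bound for a linear gradient-descent PDE comes from, consider a least-squares linear model. This model is a hypothetical stand-in, not necessarily the paper's PDE (9). Each eigen-component of the error is multiplied by (1 - lr·λ) per step, so gradient descent converges only if lr < 2/λ_max of the Hessian. The names `max_stable_lr` and `run_gd` are hypothetical.

```python
import numpy as np

def max_stable_lr(X):
    """Step-size bound for gradient descent on L(w) = ||X w - y||^2 / (2N).

    The GD error obeys e_{k+1} = (I - lr * H) e_k with H = X^T X / N, so each
    eigen-component shrinks by |1 - lr * lam| per step; assuming H is positive
    definite, GD converges iff 0 < lr < 2 / lambda_max(H).
    """
    n_samples = X.shape[0]
    H = X.T @ X / n_samples
    return 2.0 / np.linalg.eigvalsh(H)[-1]   # eigvalsh returns ascending eigenvalues

def run_gd(X, y, lr, n_steps=200):
    """Plain full-batch gradient descent on the same least-squares loss."""
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        w -= lr * X.T @ (X @ w - y) / X.shape[0]
    return w
```

Running `run_gd` just below and just above `max_stable_lr(X)` reproduces, in this toy setting, the convergent versus divergent loss behaviour that such a bound predicts.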

We also provide code for producing Figure 3 in ./scripts/loss_plot.py.

We provide code showing that restrained instabilities are present in the (non-linear) gradient descent PDE of the one-layer CNN.

It generates the loss plots for the non-linear PDE (8) for various choices of learning rates (dt). Run:

./single_layer/PySun.py

A different learning rate can be chosen by changing learning_rate in the parameters.
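PySun.py operates on a one-layer CNN. The sketch below is only a one-parameter stand-in, an assumption rather than the paper's PDE (8). It shows how a bounded gradient turns a linearly unstable step size into a restrained instability. On the toy loss f(w) = log(cosh(w)), f''(0) = 1, so the linearized bound is dt < 2. The gradient tanh(w) never exceeds 1 in magnitude, so iterates cannot run away. The name `loss_history` is hypothetical.

```python
import numpy as np

def loss_history(dt, w0=0.5, n_steps=50):
    """Gradient descent on the toy loss f(w) = log(cosh(w)); returns f at each iterate."""
    w = w0
    losses = [np.log(np.cosh(w))]
    for _ in range(n_steps):
        w = w - dt * np.tanh(w)          # f'(w) = tanh(w), bounded by 1
        losses.append(np.log(np.cosh(w)))
    return np.array(losses)

for dt in (1.0, 3.0):                    # below and above the linearized bound of 2
    losses = loss_history(dt)
    print(dt, losses[-1], losses.max())
```

With dt = 1 the loss drops to zero. With dt = 3 the iterate settles into an oscillation between two points on either side of the minimum, so the loss neither diverges nor converges. This is the qualitative signature the loss plots for large dt are meant to expose.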

We also provide code for producing Figure 4 (left) in ./scripts/oscillation_plot.py.

We provide code showing that error amplification occurs in the (non-linear) gradient descent PDE of the one-layer CNN.

It generates the data for Figure 4(right). Run:

./single_layer/PySun2.py

A different learning rate can be chosen by changing learning_rate in the parameters.

We also provide code for producing Figure 4 (right) in ./instability/compute_onelayer.m.

This research was supported in part by Army Research Labs (ARL) W911NF-22-1-0267 and Raytheon Technologies Research Center.

If you find our work useful in your research, please cite our paper:

@inproceedings{NEURIPS2022_7b97adea,
  author = {Sun, Yuxin and LAO, DONG and Sundaramoorthi, Ganesh and Yezzi, Anthony},
  booktitle = {Advances in Neural Information Processing Systems},
  editor = {S. Koyejo and S. Mohamed and A. Agarwal and D. Belgrave and K. Cho and A. Oh},
  pages = {19567--19578},
  publisher = {Curran Associates, Inc.},
  title = {Surprising Instabilities in Training Deep Networks and a Theoretical Analysis},
  url = {https://proceedings.neurips.cc/paper_files/paper/2022/file/7b97adeafa1c51cf65263459ca9d0d7c-Paper-Conference.pdf},
  volume = {35},
  year = {2022}
}

See LICENSE file.