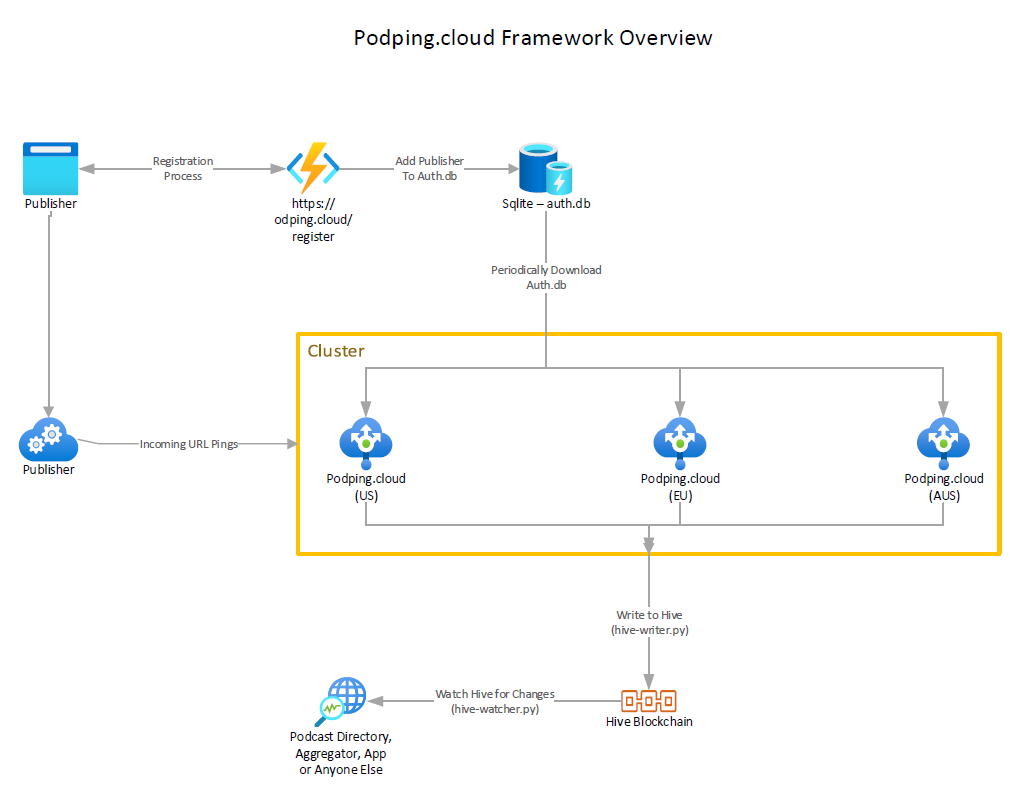

Podping.cloud is the hosted front-end to the Podping notification system. It stands in front of the back-end writer(s) to provide a friendlier, HTTP-based API.

There are two main components of a podping.cloud node. The first is a web HTTP front-end, simply called `podping`, that accepts GET requests like so:

```
GET https://podping.cloud/?url=https://feeds.example.org/podcast/rss
```

You can also append two additional parameters (`reason` and/or `medium`):

```
GET https://podping.cloud/?url=https://feeds.example.org/livestream/rss&reason=live&medium=music
```

If `reason` is not present, the default is "update". If `medium` is not present, the default is "podcast". A full explanation of these options and what they mean is here.
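The following is a minimal Python sketch that builds a ping request with the standard library but does not send it. It rests on two assumptions. First, the `Authorization` header carries the bare publisher token. Second, the front-end accepts a percent-encoded `url` parameter. `build_ping_request` and `YOUR_TOKEN` are hypothetical names and are not part of podping.cloud.

```python
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request

# Public endpoint from the examples above.
PODPING_ENDPOINT = "https://podping.cloud/"


def build_ping_request(feed_url: str, token: str,
                       reason: Optional[str] = None,
                       medium: Optional[str] = None) -> Request:
    """Build (but do not send) a GET request announcing an updated feed."""
    params = {"url": feed_url}
    # Leave reason/medium out when unset so the server-side defaults
    # ("update" and "podcast") apply.
    if reason is not None:
        params["reason"] = reason
    if medium is not None:
        params["medium"] = medium
    # Percent-encode the feed URL so any "?" or "&" inside it cannot be
    # mistaken for podping's own query parameters.
    query = urlencode(params)
    # Assumption: the Authorization header carries the bare token.
    return Request(f"{PODPING_ENDPOINT}?{query}",
                   headers={"Authorization": token},
                   method="GET")


if __name__ == "__main__":
    req = build_ping_request("https://feeds.example.org/livestream/rss",
                             "YOUR_TOKEN", reason="live", medium="music")
    print(req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

When `reason` and `medium` are omitted, the query string carries only `url`, and the node falls back to its documented defaults.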

The next component is one or more back-end writers that connect to the front-end over a ZMQ socket. Currently, the only back-end writer is the `hive-writer`, a Python script that listens on localhost port 9999 for incoming events. When it receives an event, it attempts to write that event as a custom JSON notification message to the Hive blockchain.

The front-end accepts GET requests and does a few things:

- Ensures that the sending publisher has included a valid `Authorization` header token.
- Validates that the token exists in the `auth.db` sqlite db.
- Validates that the format of the given podcast feed url looks sane.
- Saves the url into the `queue.db` sqlite database in the `queue` table.
- Returns `200` to the sending publisher.
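The request-handling steps above might look roughly like the Python sketch below. It is not the actual front-end, which is a separate compiled executable. The table layouts, the `looks_sane` check, and the 401/400 failure codes are all assumptions for illustration. The description above only specifies the `200` success response.

```python
import sqlite3
from typing import Optional
from urllib.parse import urlparse


def create_demo_schema(auth: sqlite3.Connection, queue: sqlite3.Connection) -> None:
    """Hypothetical minimal tables, for experimenting only."""
    auth.execute("CREATE TABLE IF NOT EXISTS publishers (name TEXT, token TEXT)")
    queue.execute(
        "CREATE TABLE IF NOT EXISTS queue ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, url TEXT, reason TEXT, medium TEXT)")


def looks_sane(feed_url: str) -> bool:
    """Illustrative check: an absolute http(s) URL with a host part."""
    parts = urlparse(feed_url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def handle_ping(auth: sqlite3.Connection, queue: sqlite3.Connection,
                auth_header: Optional[str], feed_url: str,
                reason: str = "update", medium: str = "podcast") -> int:
    """Return the HTTP status the front-end would send back."""
    # 1. A token must be present in the Authorization header.
    if not auth_header:
        return 401  # assumed status code
    # 2. The token must exist in auth.db.
    found = auth.execute("SELECT 1 FROM publishers WHERE token = ?",
                         (auth_header,)).fetchone()
    if found is None:
        return 401  # assumed status code
    # 3. The feed URL must look sane.
    if not looks_sane(feed_url):
        return 400  # assumed status code
    # 4. Queue it; `with queue` commits the insert.
    with queue:
        queue.execute("INSERT INTO queue (url, reason, medium) VALUES (?, ?, ?)",
                      (feed_url, reason, medium))
    # 5. Success.
    return 200
```

Keeping the feed in a local SQLite queue lets the front-end answer the publisher immediately, without waiting for the blockchain write.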

A separate thread runs in a loop as a queue checker and does the following:

- Checks the `queue.db` database and fetches up to 1000 feeds at a time in FIFO order (the "live" reason is prioritized).
- Checks the ZeroMQ TCP socket to the `hive-writer` listener on port 9999.
- Constructs one or more `Ping` objects as protocol buffer messages to send over the socket to the writer(s).
- Sends the `Ping` objects to the `hive-writer` socket for processing and waits for success or error to be returned.
- If success is returned from the writer, the url is removed from `queue.db`.
- If an error is returned or an exception is raised, another attempt is made after 180 seconds.
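The Python sketch below gives a simplified model of the queue checker loop. All names in it are hypothetical. `send_to_writer` stands in for building the protocol buffer `Ping` objects and exchanging them with `hive-writer` over ZeroMQ. The `queue` columns and the idle pause between passes are also assumptions. The batch size of 1000, the "live" priority, and the 180-second retry come from the list above.

```python
import sqlite3
import time
from typing import Callable, List, Tuple

BATCH_SIZE = 1000        # feeds fetched per pass
RETRY_DELAY_SECS = 180   # wait after a writer error
IDLE_DELAY_SECS = 3      # hypothetical pause between normal passes

QUEUE_SCHEMA = ("CREATE TABLE IF NOT EXISTS queue ("
                "id INTEGER PRIMARY KEY AUTOINCREMENT, url TEXT, reason TEXT, medium TEXT)")

Row = Tuple[int, str, str, str]
Writer = Callable[[List[Row]], bool]


def fetch_batch(queue: sqlite3.Connection, limit: int = BATCH_SIZE) -> List[Row]:
    """Oldest rows first, except that reason='live' rows jump ahead."""
    return queue.execute(
        "SELECT id, url, reason, medium FROM queue "
        "ORDER BY (reason = 'live') DESC, id ASC LIMIT ?", (limit,)).fetchall()


def process_once(queue: sqlite3.Connection, send_to_writer: Writer) -> bool:
    """One pass: send a batch and delete it only if the writer reports success."""
    batch = fetch_batch(queue)
    if not batch:
        return True
    try:
        ok = bool(send_to_writer(batch))  # stand-in for protobuf Ping + ZeroMQ
    except Exception:
        ok = False  # an exception counts as a failure; rows stay queued
    if ok:
        with queue:
            queue.executemany("DELETE FROM queue WHERE id = ?",
                              [(row[0],) for row in batch])
    return ok


def run_forever(queue: sqlite3.Connection, send_to_writer: Writer) -> None:
    """Loop forever, backing off for RETRY_DELAY_SECS after any failure."""
    while True:
        ok = process_once(queue, send_to_writer)
        time.sleep(IDLE_DELAY_SECS if ok else RETRY_DELAY_SECS)
```

Rows are deleted only after the writer confirms success, so a crash or writer outage leaves them in `queue.db` for the next attempt.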

There is a dummy auth token in `auth.db` that is ready to use for testing. The token value is:

```
Blahblah^^12345678
```

To avoid running as the root user, please set the `PODPING_RUNAS_USER` environment variable to the non-root user you want the front-end executable to run as. Something like this:

```
PODPING_RUNAS_USER="podping" ./target/release/podping
```

- Podping-hivewriter: Accepts events from the podping.cloud front-end or from the command line and writes them to the Hive blockchain.

The best way to run a podping.cloud node is with Docker Compose. There is a [docker] folder for this. Just clone this repo, switch to the docker folder and run `docker compose up`. The database files are expected to live in a directory called `/data`. If this directory doesn't exist, you will need to create it.

Initially, `auth.db` and `queue.db` will be blank. You will need to populate the "publishers" table in the `auth.db` file to have a functional system. See the example files in the `databases` directory in this repo for an example of the format for publisher token records.
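To script that step, here is a minimal sketch using Python's `sqlite3` module. The `name` and `token` column names are assumptions, so compare them against the example files in the `databases` directory before relying on this. `add_publisher` is a hypothetical helper, not part of the repo.

```python
import secrets
import sqlite3
from typing import Optional


def add_publisher(auth: sqlite3.Connection, name: str,
                  token: Optional[str] = None) -> str:
    """Insert a publisher record and return its token.

    Assumes a `publishers` table with `name` and `token` columns; the real
    layout may differ, so compare with the example files first.
    """
    # Generate a random URL-safe token when none is supplied.
    token = token or secrets.token_urlsafe(32)
    with auth:  # commits on success, rolls back on error
        auth.execute("INSERT INTO publishers (name, token) VALUES (?, ?)",
                     (name, token))
    return token
```

For example, `add_publisher(sqlite3.connect("/data/auth.db"), "Example Host")` would insert one record with a freshly generated token and return that token for you to hand to the publisher.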