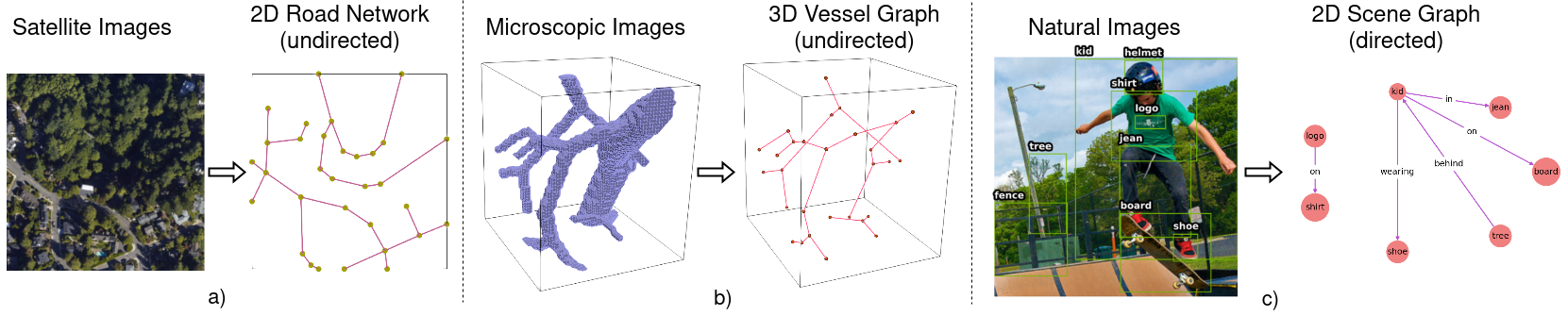

What is image-to-graph? Image-to-graph is a general class of problems that appears in many forms in computer vision and medical imaging. The task is to recover the structural or semantic graph representation underlying an image. A few examples are shown above.

In spatio-structural tasks, such as road network extraction (Fig. a), nodes represent road-junctions or significant turns, while edges correspond to structural connections, i.e., the road itself. Similarly, in 3D blood vessel-graph extraction (Fig. b), nodes represent branching points or substantial curves, and edges correspond to structural connections, i.e., arteries, veins, and capillaries.

In spatio-semantic graph generation, e.g., scene graph generation from natural images (Fig. c), the objects are the nodes and the semantic relations between them are the edges.

Note that the 2D road network extraction and 3D vessel graph extraction tasks have undirected relations while the scene graph generation task has directed relations.
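To make the node/edge description above concrete, here is a minimal Python sketch of how such a graph could be held in memory. The `ImageGraph` class, its fields, and the example coordinates and labels are hypothetical and chosen only for illustration; they are not the data format used in this repository.

```python
from dataclasses import dataclass, field


@dataclass
class ImageGraph:
    """Hypothetical container: nodes carry positions or labels, edges index into nodes."""
    nodes: list                      # e.g. (x, y) / (x, y, z) coordinates, or object names
    directed: bool = False
    edges: set = field(default_factory=set)

    def add_edge(self, i, j, label=None):
        # Undirected relations are stored in canonical order so (i, j) and (j, i) coincide.
        if not self.directed and i > j:
            i, j = j, i
        self.edges.add((i, j, label))


# Road network (undirected): junctions joined by road segments.
road = ImageGraph(nodes=[(0.1, 0.2), (0.5, 0.2), (0.5, 0.9)])
road.add_edge(1, 0)
road.add_edge(0, 1)   # same segment as above, stored only once
road.add_edge(1, 2)

# Scene graph (directed): objects joined by labelled relations.
scene = ImageGraph(nodes=["person", "horse"], directed=True)
scene.add_edge(0, 1, "riding")   # person -> horse, not horse -> person
```

The only difference between the two cases is whether edge order matters, which is why the undirected tasks can treat `(i, j)` and `(j, i)` as one relation while scene graphs cannot.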

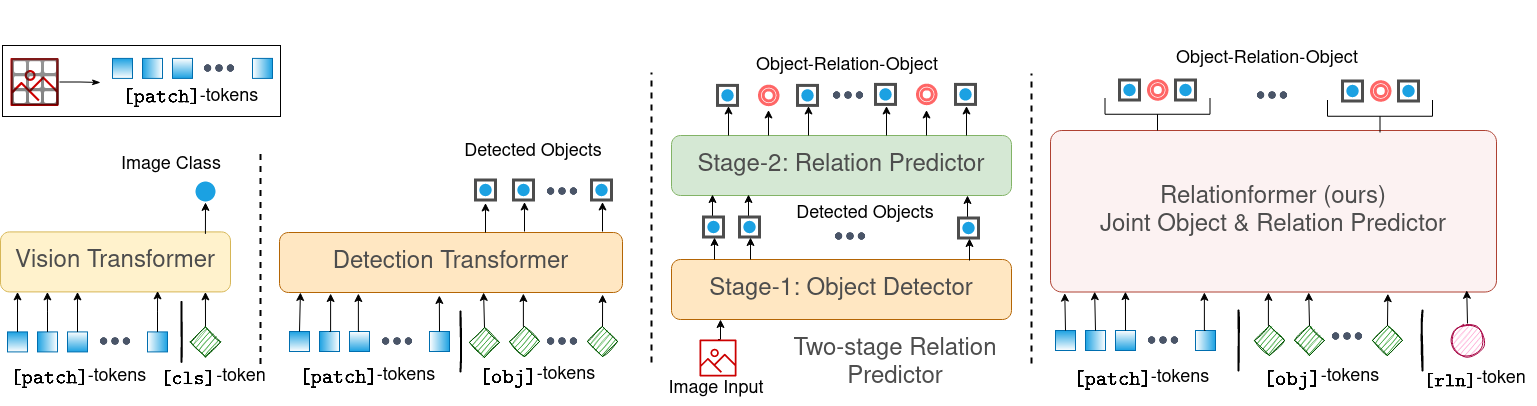

What is Relationformer? Relationformer is a unified one-stage transformer-based framework that jointly predicts objects and their relations. We leverage direct set-based object prediction and incorporate the interaction among the objects to learn an object-relation representation simultaneously. In addition to the existing [obj]-tokens, we propose a novel learnable token, namely the [rln]-token. Together with the [obj]-tokens, the [rln]-token exploits local and global semantic reasoning in an image through a series of mutual associations. In combination with the pair-wise [obj]-tokens, the [rln]-token contributes to a computationally efficient relation prediction.
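The pairing of [obj]-tokens with a single shared [rln]-token can be sketched as follows. This is an illustrative toy in plain Python, not the repository's implementation: the token values are random stand-ins for decoder outputs, the sizes are arbitrary, and the hypothetical `relation_logits` function uses one random linear layer where a trained relation head would sit.

```python
import random
from itertools import permutations

random.seed(0)
NUM_OBJ, DIM, NUM_REL = 4, 8, 3   # illustrative sizes, not taken from the paper


def rand_vec(n):
    return [random.gauss(0.0, 1.0) for _ in range(n)]


obj_tokens = [rand_vec(DIM) for _ in range(NUM_OBJ)]  # stand-ins for [obj]-token outputs
rln_token = rand_vec(DIM)                             # stand-in for the single [rln]-token output
weights = [rand_vec(3 * DIM) for _ in range(NUM_REL)] # hypothetical linear relation head


def relation_logits(obj_i, obj_j, rln):
    # Pair feature: concatenation [obj_i, obj_j, rln]; the same rln vector is reused for every pair.
    feat = obj_i + obj_j + rln
    return [sum(w * x for w, x in zip(row, feat)) for row in weights]


# Score every ordered pair of distinct objects.
pair_logits = {
    (i, j): relation_logits(obj_tokens[i], obj_tokens[j], rln_token)
    for i, j in permutations(range(NUM_OBJ), 2)
}

# Predicted relation class per pair (argmax over the logits).
pair_pred = {pair: max(range(NUM_REL), key=lambda c: logits[c])
             for pair, logits in pair_logits.items()}
```

In this sketch the global context enters each pair through one shared vector, so no separate per-pair context has to be computed; for undirected tasks one would score only pairs with `i < j`.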

The figure above illustrates the general architectural evolution of transformers in computer vision and how Relationformer takes the concept of a task-specific learnable token one step further. It also compares the proposed Relationformer with the conventional two-stage relation predictor. Merging the two separate stages not only simplifies the architectural pipeline but also lets the two tasks reinforce each other.

About the code. We provide the source code of Relationformer along with instructions and scripts for training and evaluation on the individual datasets. Please follow the procedure below.

Please navigate to the respective branch for each specific application:

    relationformer
    ├── master              # overview
    |   └──
    |   └──
    ├── vessel_graph        # 3D vessel graph dataset
    |   └──
    |   └──
    ├── road_network        # 2D binary road network dataset
    |   └──
    |   └──
    ├── road_network_rgb    # 2D RGB road network dataset
    |   └──
    |   └──
    └── scene_graph         # 2D scene graph dataset
        └──
        └──

Instructions for each dataset are provided in its respective branch.

If you find our repository useful in your research, please cite the following:

    @article{shit2022relationformer,
      title={Relationformer: A Unified Framework for Image-to-Graph Generation},
      author={Shit, Suprosanna and Koner, Rajat and Wittmann, Bastian and others},
      journal={arXiv preprint arXiv:2203.10202},
      year={2022}
    }

Relationformer code is released under the Apache 2.0 license. Please see the LICENSE file for more information.

We acknowledge the following repository, from which we have inherited code snippets: