Adapted from Ha and Schmidhuber, "World Models", 2018. Refer to the original project page.

We extend the model-based World Models RL algorithm with the following changes:

- Use a vanilla autoencoder for the vision (V) model. The original authors use a variational autoencoder, which constrains the sampled state latents towards a Gaussian distribution. We hypothesise that removing this Gaussian constraint might work better, since the state latents are then not restricted to a particular distribution.

- Use the PEPG (Parameter-Exploring Policy Gradients) evolutionary algorithm to search for the global maximum. A particular weakness of CMA-ES is that it discards the majority of the solutions in each generation and keeps only the top n%. Weaker solutions might also carry information that helps convergence. Refer to this blog for a concise explanation.

Training scripts:

- To train the vanilla autoencoder, run trainae.py. To train the variational autoencoder, run trainvae.py.

- To train the MDN-RNN on autoencoder latents, run trainmdrnn_ae.py. To train the MDN-RNN on variational autoencoder latents, run trainmdrnn.py.

- To train the controller network using CMA-ES or PEPG with VAE or AE forward passes, run the corresponding script: traincontroller_cmaes_ae.py, traincontroller_cmaes_vae.py, traincontroller_pepg_ae.py, or traincontroller_pepg_vae.py.
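The difference between the two vision models comes down to one term in the training objective. The sketch below is illustrative only and is not taken from trainae.py or trainvae.py: it assumes a squared-error reconstruction term, a diagonal Gaussian encoder, and a hypothetical `kl_weight` parameter. The function names `ae_loss` and `vae_loss` are likewise made up for this example.

```python
import numpy as np

def ae_loss(x, x_recon):
    """Vanilla autoencoder objective: reconstruction error only."""
    return float(np.mean(np.sum((x - x_recon) ** 2, axis=1)))

def vae_loss(x, x_recon, mu, logvar, kl_weight=1.0):
    """VAE objective: reconstruction error plus KL(q(z|x) || N(0, I)).

    mu and logvar are the encoder outputs for each sample (assumed
    diagonal Gaussian posterior). kl_weight is a hypothetical knob.
    """
    recon = np.mean(np.sum((x - x_recon) ** 2, axis=1))
    # Closed-form KL between a diagonal Gaussian and the standard normal.
    kl = -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar), axis=1)
    return float(recon + kl_weight * np.mean(kl))

# Toy batch: 4 flattened "frames" of 8 values, 2 latent dimensions.
rng = np.random.default_rng(0)
x = rng.random((4, 8))
x_recon = x + 0.1                      # pretend reconstruction
mu0 = np.zeros((4, 2))                 # posterior equal to the prior
logvar0 = np.zeros((4, 2))

print("AE loss:", ae_loss(x, x_recon))
print("VAE loss, posterior at prior:", vae_loss(x, x_recon, mu0, logvar0))
print("VAE loss, shifted posterior:", vae_loss(x, x_recon, mu0 + 1.0, logvar0))
```

When the encoder posterior already matches the standard normal, the KL term vanishes and both objectives agree. Any latent code that drifts away from the prior is penalised only in the VAE case, which is the constraint the vanilla autoencoder variant drops.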
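To make the PEPG idea concrete, here is a minimal sketch of a simplified PEPG update with symmetric sampling on a toy quadratic objective. It is not the code in traincontroller_pepg_ae.py or traincontroller_pepg_vae.py. The function `pepg_maximize`, its hyperparameters, the toy `target`, and the choice to average rather than sum over samples are all assumptions made for illustration, and the reward normalisation used in some PEPG variants is omitted.

```python
import numpy as np

def pepg_maximize(fitness, dim, iterations=300, pairs=32,
                  lr_mu=0.2, lr_sigma=0.05, sigma_init=0.5,
                  sigma_min=1e-3, seed=0):
    """Simplified PEPG with symmetric sampling (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    mu = np.zeros(dim)                    # mean of the search distribution
    sigma = np.full(dim, sigma_init)      # per-parameter standard deviation
    for _ in range(iterations):
        # Draw perturbations and evaluate mirrored candidates mu +/- eps.
        eps = rng.standard_normal((pairs, dim)) * sigma
        r_plus = np.array([fitness(mu + e) for e in eps])
        r_minus = np.array([fitness(mu - e) for e in eps])

        # Mean update: every pair contributes, weighted by its reward
        # difference. No candidate is discarded, unlike top-n% selection.
        diff = (r_plus - r_minus) / 2.0
        mu = mu + lr_mu * (eps * diff[:, None]).mean(axis=0)

        # Sigma update: compare each pair's average reward to a baseline
        # (here the population mean) and grow or shrink the spread.
        avg = (r_plus + r_minus) / 2.0
        advantage = avg - avg.mean()
        s_grad = ((eps ** 2 - sigma ** 2) / sigma * advantage[:, None]).mean(axis=0)
        sigma = np.maximum(sigma + lr_sigma * s_grad, sigma_min)
    return mu, sigma

# Toy stand-in for a controller rollout: reward peaks at `target`.
target = np.array([0.5, -0.3, 0.8, 0.1, -0.6])

def toy_fitness(theta):
    return -float(np.sum((theta - target) ** 2))

best_mu, final_sigma = pepg_maximize(toy_fitness, dim=target.size)
print("distance to optimum:", np.linalg.norm(best_mu - target))
```

In the real setting, `toy_fitness` would be replaced by the cumulative reward of a rollout with the controller parameters set to `theta`. Because the update averages over all sampled pairs, low-reward candidates still shape the gradient estimate, which is the property the bullet on PEPG appeals to.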

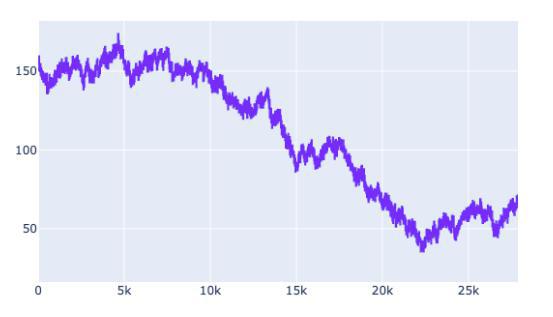

VAE Training Loss:

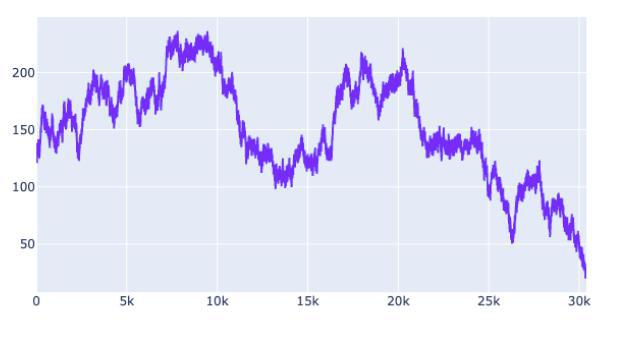

AE Training Loss:

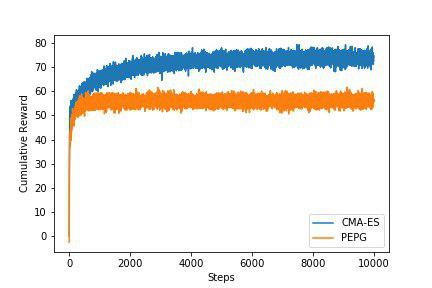

Cumulative sum reward with VAE latents:

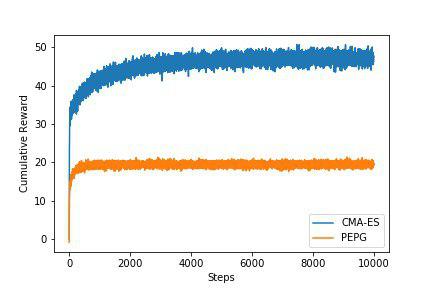

Cumulative sum reward with AE latents:

| Encoder Model / Parameter Search Method | Covariance Matrix Adaptation Evolution Strategy (CMA-ES) | Parameter-Exploring Policy Gradients (PEPG) |
|---|---|---|
| Variational Autoencoder | 74.67 +/- 10.12 | 60.94 +/- 6.17 |
| Vanilla Autoencoder | 47.34 +/- 6.37 | 20.36 +/- 3.80 |

Note: Code adapted from here