DA-AIM: Exploiting Instance-based Mixed Sampling via Auxiliary Source Domain Supervision for Domain-adaptive Action Detection

by Yifan Lu, Gurkirt Singh, Suman Saha and Luc Van Gool

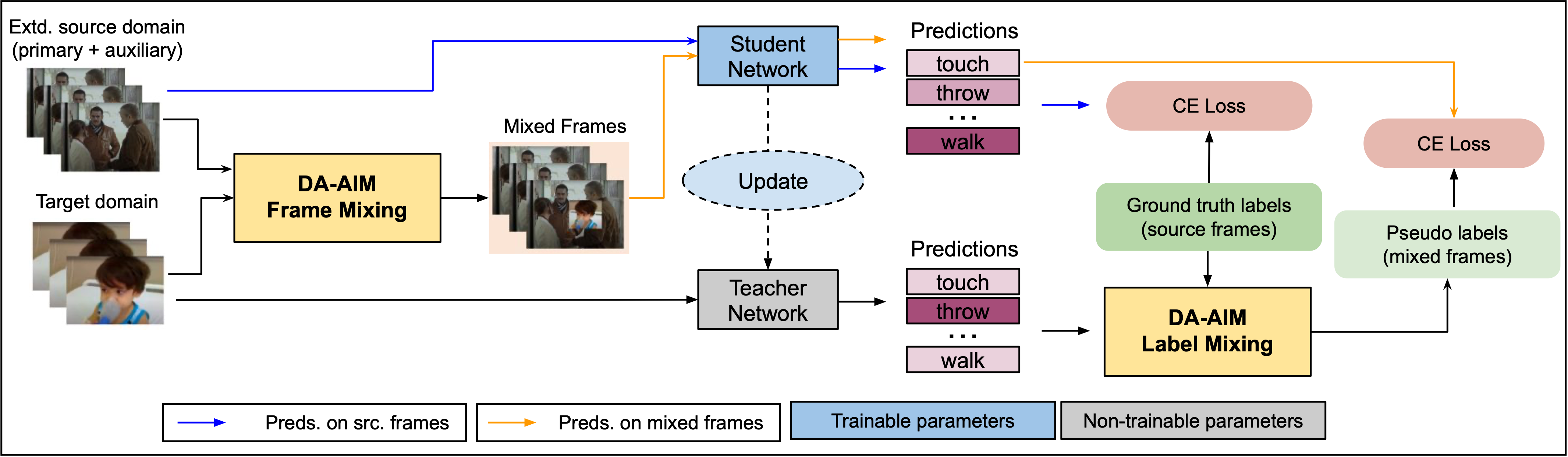

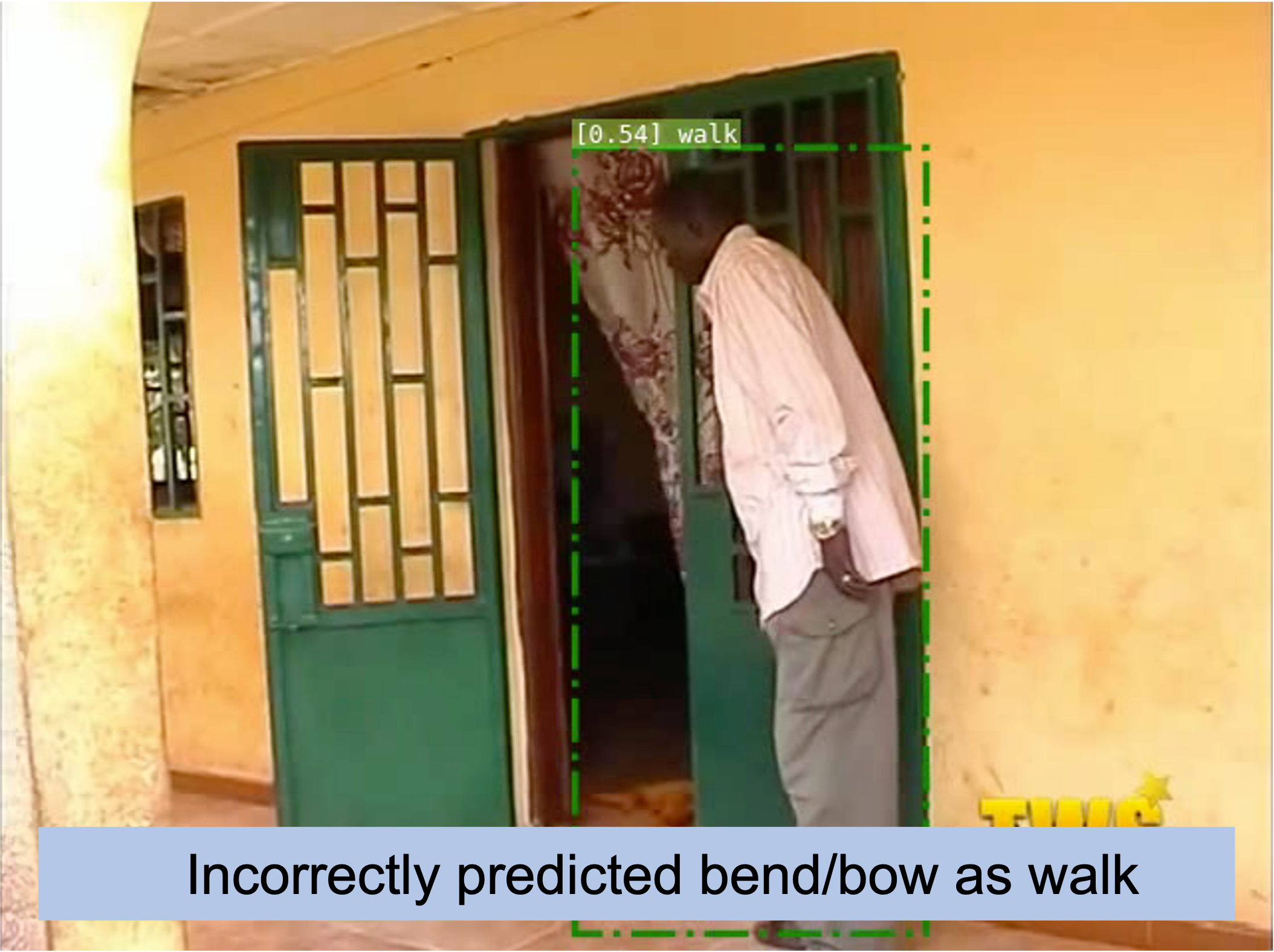

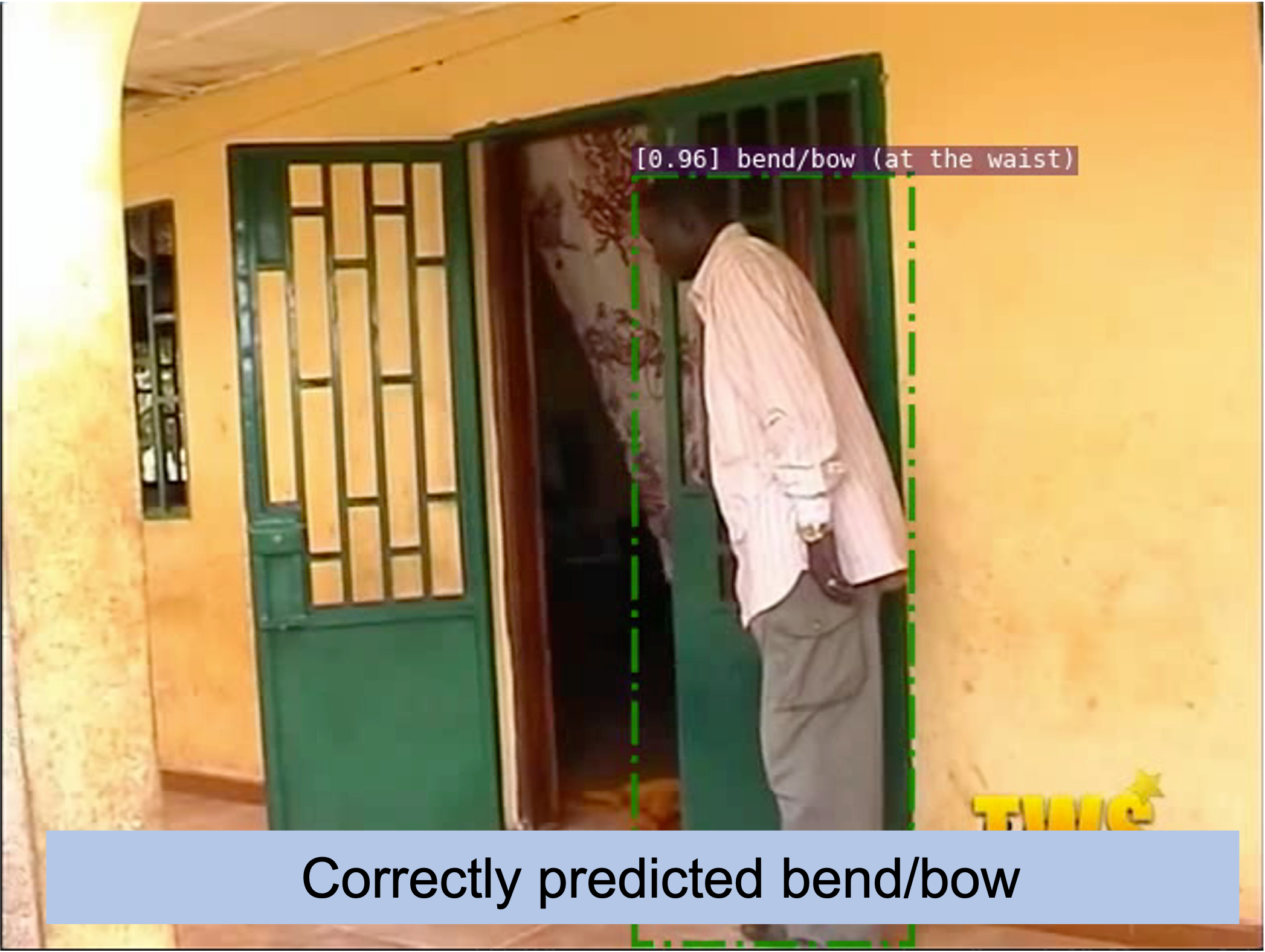

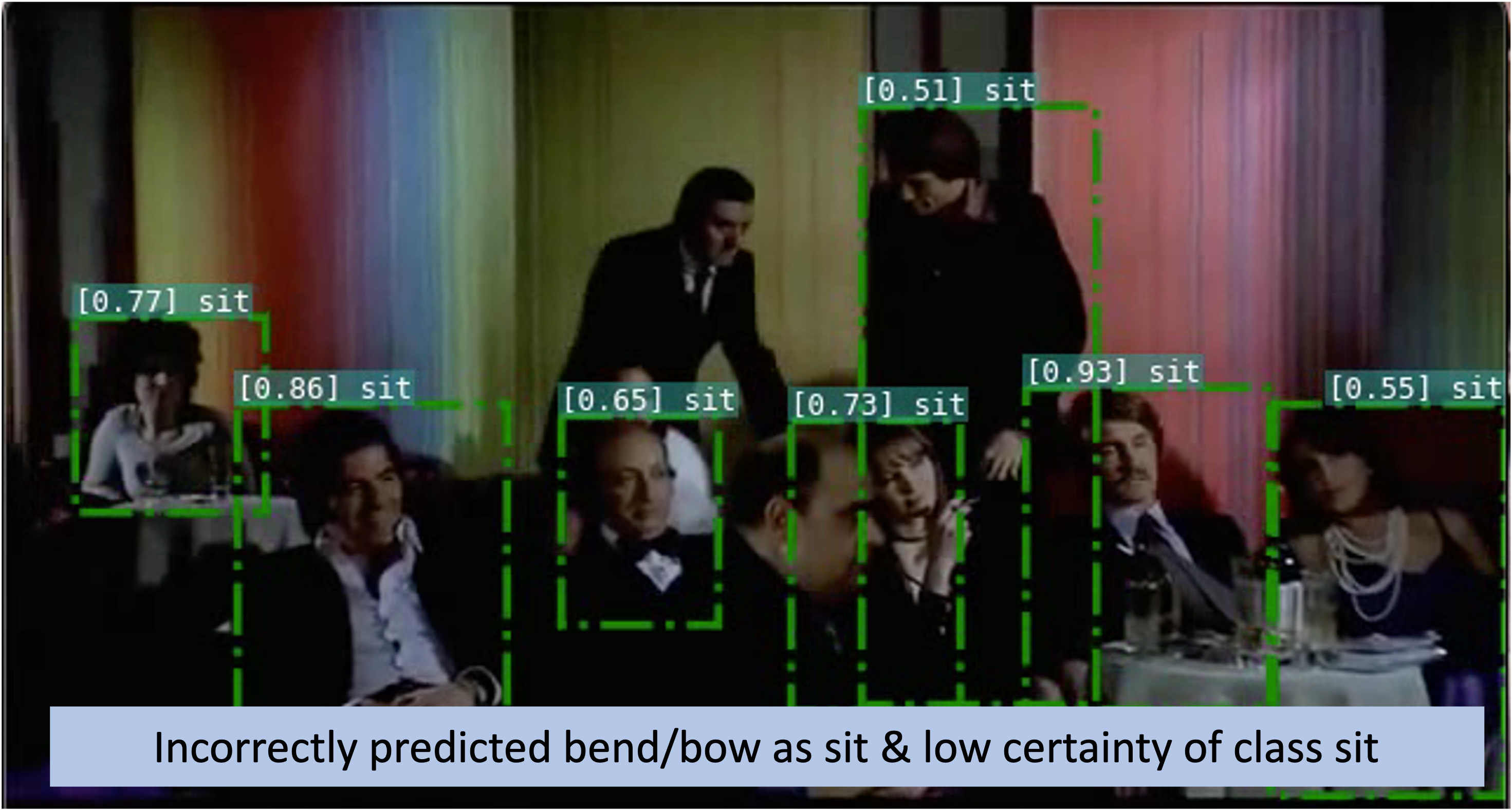

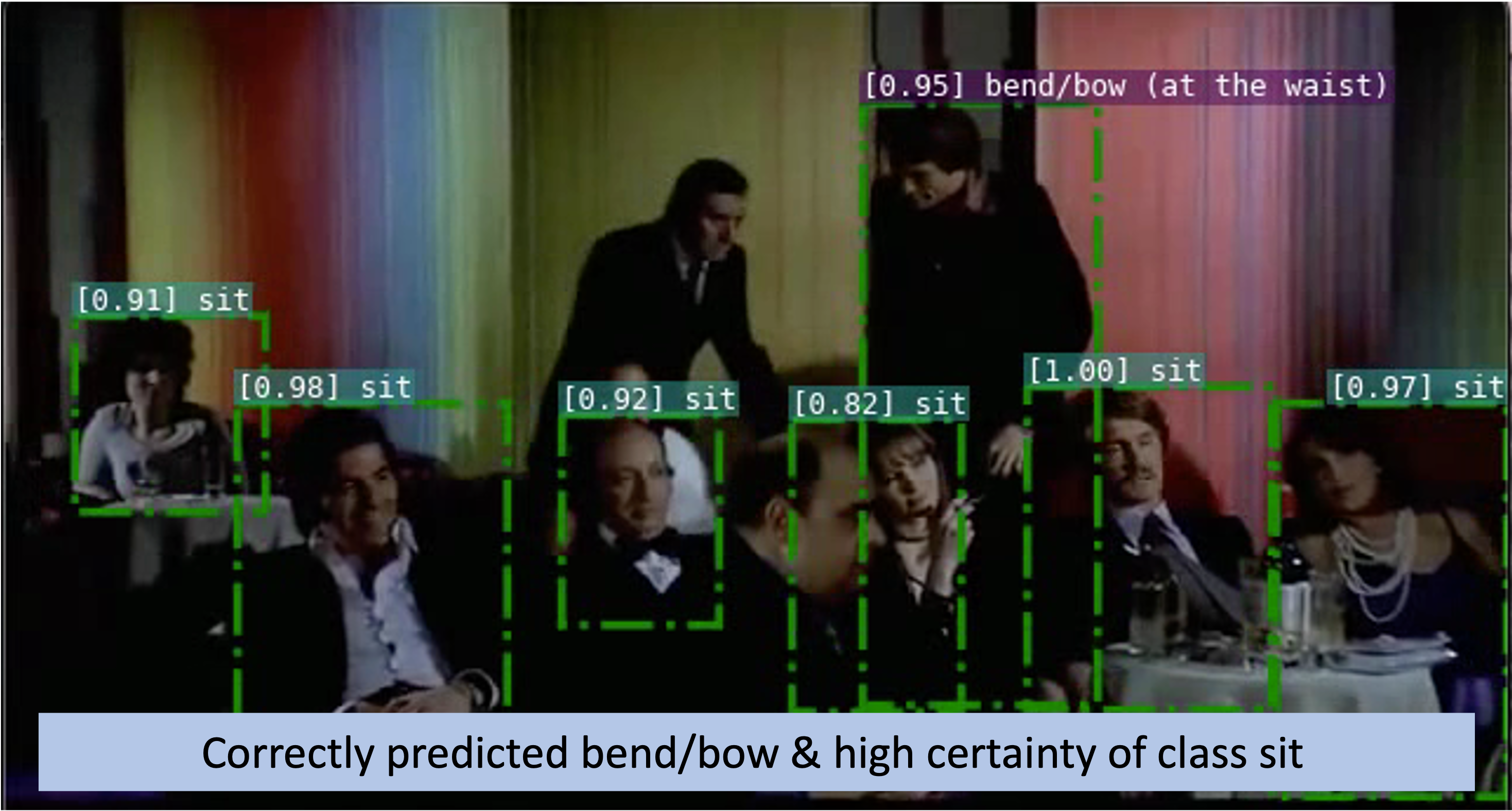

We propose a novel domain-adaptive action detection approach and a new adaptation protocol that leverage recent advances in image-level unsupervised domain adaptation (UDA) and handle the vagaries of instance-level video data. Self-training combined with cross-domain mixed sampling has shown remarkable performance gains for semantic segmentation in the UDA context. Motivated by this, we propose an approach for human action detection in videos that transfers knowledge from the source domain (annotated dataset) to the target domain (unannotated dataset) using mixed sampling and pseudo-label-based self-training. Existing UDA techniques follow the ClassMix algorithm for semantic segmentation. However, simply adopting ClassMix for action detection does not work, mainly because these are two entirely different problems, i.e. pixel-label classification vs. instance-label detection. To tackle this, we propose a novel action instance mixed sampling technique that combines information across domains based on action instances instead of action classes. Moreover, we propose a new UDA training protocol that addresses the long-tail sample distribution and domain shift problem by using supervision from an auxiliary source domain (ASD). For ASD, we propose a new action detection dataset with dense frame-level annotations. We name our proposed framework domain-adaptive action instance mixing (DA-AIM). We demonstrate that DA-AIM consistently outperforms prior works on challenging domain adaptation benchmarks.
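
The instance-based mixing idea can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `mix_instances`, the `(T, H, W, C)` clip layout, the pixel-coordinate box format and the rule of pasting a random half of the source instances are all assumptions made for illustration. In a self-training setup the target labels would be pseudo-labels predicted by the model.

```python
import numpy as np


def mix_instances(src_clip, src_boxes, src_labels, tgt_clip, tgt_boxes, tgt_labels, rng):
    """Paste a random subset of source action instances onto a target clip.

    Clips are arrays of shape (T, H, W, C); boxes are (x1, y1, x2, y2) in pixels.
    All names and the sampling rule are hypothetical.
    """
    n_src = len(src_boxes)
    if n_src == 0:
        # Nothing to paste: return the target clip unchanged.
        empty_mask = np.zeros(tgt_clip.shape[1:3], dtype=bool)
        return tgt_clip.copy(), list(tgt_boxes), list(tgt_labels), empty_mask

    # Assumption: pick half of the source instances (at least one) at random.
    n_pick = max(1, n_src // 2)
    picked = rng.choice(n_src, size=n_pick, replace=False)

    # Binary mask over the frame: True inside the picked source boxes.
    _, height, width, _ = src_clip.shape
    mask = np.zeros((height, width), dtype=bool)
    for i in picked:
        x1, y1, x2, y2 = (int(v) for v in src_boxes[i])
        mask[y1:y2, x1:x2] = True

    # The same spatial mask is applied to every frame and channel of the clip.
    mixed_clip = np.where(mask[None, :, :, None], src_clip, tgt_clip)

    # Labels follow the instances: picked source boxes plus all target boxes.
    mixed_boxes = [src_boxes[i] for i in picked] + list(tgt_boxes)
    mixed_labels = [src_labels[i] for i in picked] + list(tgt_labels)
    return mixed_clip, mixed_boxes, mixed_labels, mask


# Toy example: an all-ones source clip mixed into an all-zeros target clip.
rng = np.random.default_rng(0)
src = np.ones((4, 8, 8, 3), dtype=np.float32)
tgt = np.zeros((4, 8, 8, 3), dtype=np.float32)
clip, boxes, labels, mask = mix_instances(
    src, [(0, 0, 4, 4), (4, 4, 8, 8)], [1, 2], tgt, [(2, 2, 6, 6)], [3], rng
)
```

The sketch mixes whole person boxes instead of class-wise pixel masks, which is the distinction from ClassMix drawn above. It ignores details a real pipeline would need, such as target boxes that end up occluded by pasted instances.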

AVA-Kinetics → AVA: columns bend/bow through mAP (→ AVA). AVA-Kinetics → IhD-2: columns touch through mAP (→ IhD-2).

| Method | bend/bow | lie/sleep | run/jog | sit | stand | walk | mAP (→ AVA) | touch | throw | take a photo | mAP (→ IhD-2) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline (Source) | 33.66 | 54.82 | 56.82 | 73.70 | 80.56 | 75.18 | 62.46 | 34.12 | 32.91 | 27.42 | 31.48 |
| Rotation | 25.53 | 58.86 | 55.05 | 72.42 | 79.84 | 68.49 | 60.03 | 30.12 | 34.58 | 25.39 | 30.03 |
| Clip-order | 28.24 | 57.38 | 56.90 | 69.54 | 77.10 | 74.68 | 60.64 | 28.28 | 32.30 | 29.93 | 30.17 |
| GRL | 24.99 | 48.41 | 59.89 | 68.68 | 78.79 | 71.38 | 58.69 | 25.79 | 39.71 | 28.90 | 31.46 |
| DA-AIM | 33.79 | 59.27 | 62.16 | 71.67 | 79.90 | 75.13 | 63.65 | 34.38 | 35.65 | 39.84 | 36.62 |
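
The mAP values in the table are consistent with an unweighted mean of the per-class AP values in the same row. The short check below uses numbers copied from the table; the helper name `mean_ap` is ours and is not part of the repository.

```python
def mean_ap(per_class_ap):
    """Unweighted mean of per-class average precision, rounded to 2 decimals."""
    return round(sum(per_class_ap) / len(per_class_ap), 2)


# Per-class AP copied from the results table.
baseline_ava = [33.66, 54.82, 56.82, 73.70, 80.56, 75.18]
da_aim_ava = [33.79, 59.27, 62.16, 71.67, 79.90, 75.13]
baseline_ihd2 = [34.12, 32.91, 27.42]
da_aim_ihd2 = [34.38, 35.65, 39.84]

print(mean_ap(baseline_ava), mean_ap(da_aim_ava))    # 62.46 63.65
print(mean_ap(baseline_ihd2), mean_ap(da_aim_ihd2))  # 31.48 36.62
```

Read this way, DA-AIM improves on the source-only baseline by 1.19 mAP on AVA and by 5.14 mAP on IhD-2.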

The paper can be found here: [Arxiv]

In case of interest, please consider citing:

@InProceedings{

}

Python 3.9.5 is used for this project. We recommend setting up a new virtual environment:

python -m venv ~/venv/daaim

source ~/venv/daaim/bin/activate

Clone the DA-AIM repository, then add it to $PYTHONPATH:

git clone https://github.com/wwwfan628/DA-AIM.git

export PYTHONPATH=/path/to/DA-AIM/slowfast:$PYTHONPATH

Build the required environment with:

cd DA-AIM

python setup.py build develop

Before training or evaluation, please download the MiT weights and a pretrained DA-AIM checkpoint using the following commands, or download them directly here:

wget https:// # MiT weights

wget https:// # DA-AIM checkpoint

Large-scale datasets are reduced for three reasons:

- action classes need to be matched to the target domain

- for fair comparison with smaller datasets

- to limit time and resource consumption

We provide annotations and the corresponding video frames of the reduced datasets. The numbers in a dataset name give the number of action classes, followed by the maximum number of training samples and of validation samples per action class. For example, ava_6_5000_all has 6 action classes, at most 5000 training samples per action class, and all validation samples from the original dataset. Please download the video frames and annotations from here and extract them to Datasets/Frames and Datasets/Annotations, respectively. Contact author Suman Saha for more details on downloading.
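
The naming scheme can be decoded mechanically. The sketch below assumes the pattern `<source>_<classes>_<train>_<val>` inferred from the example `ava_6_5000_all`; the names `ReducedDatasetName` and `parse_reduced_name` are hypothetical and not part of the repository.

```python
from typing import NamedTuple, Optional


class ReducedDatasetName(NamedTuple):
    source: str
    num_classes: int
    max_train_per_class: Optional[int]  # None means "all samples kept"
    max_val_per_class: Optional[int]    # None means "all samples kept"


def parse_reduced_name(name: str) -> ReducedDatasetName:
    """Split a reduced-dataset name into its four assumed components."""
    # Split from the right so a source name containing "_" stays intact.
    source, classes, train, val = name.rsplit("_", 3)

    def limit(token: str) -> Optional[int]:
        return None if token == "all" else int(token)

    return ReducedDatasetName(source, int(classes), limit(train), limit(val))


info = parse_reduced_name("ava_6_5000_all")
print(info)
```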

ava_6_5000_all: reduced from the original AVA dataset. We selected up to 5000 training samples for each of the classes bend/bow, lie/sleep, run/jog, sit, stand and walk, and kept all validation samples from those classes.

kinetics_6_5000_all: reduced from the original AVA-Kinetics dataset. We selected up to 5000 training samples for each of the classes bend/bow, lie/sleep, run/jog, sit, stand and walk, and kept all validation samples from those classes.
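
A reduction of this kind could be sketched as follows. This is an illustration only, not the script used to build the released datasets: the `(sample_id, class_name)` input format, the function name `cap_per_class` and the use of seeded random sampling are assumptions.

```python
import random
from collections import defaultdict


def cap_per_class(samples, keep_classes, max_per_class, seed=0):
    """Keep only `keep_classes` and at most `max_per_class` samples of each.

    `samples` is a list of (sample_id, class_name) pairs.
    """
    by_class = defaultdict(list)
    for sample_id, class_name in samples:
        if class_name in keep_classes:
            by_class[class_name].append(sample_id)

    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    reduced = []
    for class_name in sorted(by_class):
        ids = by_class[class_name]
        if len(ids) > max_per_class:
            ids = rng.sample(ids, max_per_class)
        reduced.extend((sample_id, class_name) for sample_id in ids)
    return reduced


# Toy data: a frequent class, a rare class, and a class that is dropped.
samples = (
    [(i, "stand") for i in range(10)]
    + [(i, "sit") for i in range(10, 13)]
    + [(13, "dance")]
)
reduced = cap_per_class(samples, {"stand", "sit"}, max_per_class=5)
```

Capping frequent classes while keeping rare ones whole also narrows the gap between head and tail classes in the training set.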

You can also use commands to download and extract datasets:

wget https://data.vision.ee.ethz.ch/susaha/wacv2023_datasets # download datasets

tar -C Datasets/Frames -xvf xxx.tar # extract frames to Datasets/Frames directory

The final folder structure should look like this:

Datasets

├── Annotations

│ ├── ava_6_5000_all

│ │ ├── annotations

│ │ │ ├── ava_action_list_v2.2.pbtxt

│ │ │ ├── ava_included_timestamps_v2.2.txt

│ │ │ ├── ava_train_excluded_timestamps_v2.2.csv

│ │ │ ├── ava_val_excluded_timestamps_v2.2.csv

│ │ │ ├── ava_train_v2.2.csv

│ │ │ ├── ava_val_v2.2.csv

│ │ ├── frame_lists

│ │ │ ├── train.csv

│ │ │ ├── val.csv

│ ├── kinetics_6_5000_all

│ │ ├── annotations

│ │ │ ├── kinetics_action_list_v2.2.pbtxt

│ │ │ ├── kinetics_val_excluded_timestamps.csv

│ │ │ ├── kinetics_train.csv

│ │ │ ├── kinetics_val.csv

│ │ ├── frame_lists

│ │ │ ├── train.csv

│ │ │ ├── val.csv

│ ├── ...

├── Frames

│ ├── ava_6_5000_all

│ │ ├── IMG1000

│ │ │ ├──

│ │ │ ├── ...

│ │ ├── IMG1000

│ │ │ ├──

│ │ │ ├── ...

│ │ ├── ...

│ ├── kinetics_6_5000_all

│ │ ├── IMG1000

│ │ │ ├──

│ │ │ ├── ...

│ │ ├── IMG1000

│ │ │ ├──

│ │ │ ├── ...

│ ├── ...

├── ...
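
Before launching an experiment, it can help to check that the expected files are in place. The sketch below only tests for the ava_6_5000_all paths named in the tree above; the helper `missing_paths` and the list `AVA_EXPECTED` are hypothetical and not part of the repository.

```python
from pathlib import Path

# Relative paths copied from the folder tree for ava_6_5000_all.
AVA_EXPECTED = [
    "Annotations/ava_6_5000_all/annotations/ava_action_list_v2.2.pbtxt",
    "Annotations/ava_6_5000_all/annotations/ava_included_timestamps_v2.2.txt",
    "Annotations/ava_6_5000_all/annotations/ava_train_excluded_timestamps_v2.2.csv",
    "Annotations/ava_6_5000_all/annotations/ava_val_excluded_timestamps_v2.2.csv",
    "Annotations/ava_6_5000_all/annotations/ava_train_v2.2.csv",
    "Annotations/ava_6_5000_all/annotations/ava_val_v2.2.csv",
    "Annotations/ava_6_5000_all/frame_lists/train.csv",
    "Annotations/ava_6_5000_all/frame_lists/val.csv",
    "Frames/ava_6_5000_all",
]


def missing_paths(root, expected=AVA_EXPECTED):
    """Return the expected relative paths that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]
```

Calling `missing_paths("Datasets")` from the repository root returns an empty list when everything listed is present.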

1) Setting configuration yaml files

Configuration yaml files are located under ./configs/DA-AIM/. Modify the settings according to your requirements. Most of the time, this step can be skipped and the necessary settings can be modified in the experiment shell files. For more details and explanations of the configuration settings, please refer to ./slowfast/config/defaults.py.

2) Run experiments

Experiments can be executed with the following command:

python tools/run_net.py --cfg "configs/DA-AIM/KIN2AVA/SLOWFAST_32x2_R50_DA_AIM.yaml" \

OUTPUT_DIR "/PATH/TO/OUTPUT/DIR" \

AVA.FRAME_DIR "Datasets/Frames/kinetics_6_5000_all" \

AVA.FRAME_LIST_DIR "Datasets/Annotations/kinetics_6_5000_all/frame_lists/" \

AVA.ANNOTATION_DIR "Datasets/Annotations/kinetics_6_5000_all/annotations/" \

AUX.FRAME_DIR "Datasets/Frames/ava_6_5000_all" \

AUX.FRAME_LIST_DIR "Datasets/Annotations/ava_6_5000_all/frame_lists/" \

AUX.ANNOTATION_DIR "Datasets/Annotations/ava_6_5000_all/annotations/" \

TRAIN.CHECKPOINT_FILE_PATH "/PATH/TO/CKP/FILE"

If you skip the first step, remember to set the paths to the dataset frames and annotations, as well as the path to the pretrained checkpoint, in this command. Example shell scripts are provided under ./experiments.
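
Because the three path overrides per dataset follow the same pattern, the argument list can be generated instead of typed by hand. The sketch below uses only the config keys shown in the training command above; the helper name `build_train_command` and its parameters are hypothetical, and it only builds the command without running it.

```python
import shlex


def build_train_command(cfg, output_dir, main_dataset, aux_dataset, checkpoint,
                        datasets_root="Datasets"):
    """Build the run_net.py argument list for a training run."""

    def dataset_overrides(prefix, name):
        # FRAME_DIR, FRAME_LIST_DIR and ANNOTATION_DIR follow the folder layout
        # described in the dataset section.
        return [
            f"{prefix}.FRAME_DIR", f"{datasets_root}/Frames/{name}",
            f"{prefix}.FRAME_LIST_DIR", f"{datasets_root}/Annotations/{name}/frame_lists/",
            f"{prefix}.ANNOTATION_DIR", f"{datasets_root}/Annotations/{name}/annotations/",
        ]

    return (
        ["python", "tools/run_net.py", "--cfg", cfg, "OUTPUT_DIR", output_dir]
        + dataset_overrides("AVA", main_dataset)
        + dataset_overrides("AUX", aux_dataset)
        + ["TRAIN.CHECKPOINT_FILE_PATH", checkpoint]
    )


cmd = build_train_command(
    cfg="configs/DA-AIM/KIN2AVA/SLOWFAST_32x2_R50_DA_AIM.yaml",
    output_dir="/PATH/TO/OUTPUT/DIR",
    main_dataset="kinetics_6_5000_all",
    aux_dataset="ava_6_5000_all",
    checkpoint="/PATH/TO/CKP/FILE",
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root once the placeholder paths are replaced.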

1) Setting configuration yaml files

As in training, the configuration settings need to be modified according to your requirements. Configuration yaml files are located under ./configs/DA-AIM/. Note that TRAIN.ENABLE should be set to False in order to invoke evaluation. As previously mentioned, this step can be skipped and the necessary settings can be modified in the shell files.

2) Perform evaluation

To perform evaluation, please use the following command:

python tools/run_net.py --cfg "configs/DA-AIM/AVA/SLOWFAST_32x2_R50.yaml" \

TRAIN.ENABLE False \

TRAIN.AUTO_RESUME True \

TENSORBOARD.ENABLE False \

OUTPUT_DIR "/PATH/TO/OUTPUT/DIR" \

AVA.FRAME_DIR "Datasets/Frames/ava_6_5000_all" \

AVA.FRAME_LIST_DIR "Datasets/Annotations/ava_6_5000_all/frame_lists/" \

AVA.ANNOTATION_DIR "Datasets/Annotations/ava_6_5000_all/annotations/"

Here too, make sure the paths to the dataset frames and annotations are correct if the first step was skipped.

PySlowFast offers a range of visualization tools. More information is available at PySlowFast Visualization Tools. For additional visualization tools, such as plotting mixed samples and confusion matrices, please refer to DA-AIM Visualization Tools.

This project is based on the following open-source projects. We would like to thank their contributors for implementing and maintaining their work. We also thank our labmates Jizhao Xu, Luca Sieber and Rishabh Singh, whose previous work helped at the beginning of this project. Finally, the filming of the IhD-1 dataset is credited to Carlos Eduardo Porto de Oliveira's great photography.