This project aims to build a method for predicting equation of state parameters directly from molecular structures. We use PC-SAFT as the equation of state in this case study.

This work was published in the Chemical Engineering Journal.

The preprint can be found here.

This project uses Kedro 0.18.0, a package for managing data science projects. You can read more about Kedro in its documentation, but step-by-step instructions for this project are given below.

First, clone the repository:

git clone https://github.com/sustainable-processes/dl4thermo.git

We're using git LFS for large data files, so make sure to set that up. Run the following to get the large files:

git lfs fetch origin main

Then, make sure to unzip the directories in data/01_raw.

We use poetry for dependency management. First, install poetry and then run:

poetry install --with=dev

poe install-pyg

This will download all the dependencies.

Notes for Mac users:

- If you run on macOS, you'll need Ventura (13.1 or higher).

- First run this set of commands:

arch -arm64 brew install llvm@11

brew install hdf5

HDF5_DIR=/opt/homebrew/opt/hdf5 PIP_NO_BINARY="h5py" LLVM_CONFIG="/opt/homebrew/Cellar/llvm@11/11.1.0_3/bin/llvm-config" arch -arm64 poetry install

If this command fails, check which version of LLVM was actually installed (i.e., run ls /opt/homebrew/Cellar/llvm@11/) and replace 11.1.0_3 in the third line above with the correct version.

Use the make_predictions.py command-line script to predict PCP-SAFT parameters. The script takes a path to a text file with one SMILES string per line.

python make_predictions.py smiles.txt

where smiles.txt might be

C/C=C(/C)CC

CC(C)CC(C)N

CCCCCCN

CC(C)CC(=O)O
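If your molecules live in a Python list rather than a file, a small helper like the one below can write the input file. This is only a sketch: write_smiles_file is a hypothetical name that is not part of this repository, and dropping blank entries and duplicates is an assumption about what is convenient, not something make_predictions.py is known to require. It does not check that the SMILES strings are chemically valid.

```python
import tempfile
from pathlib import Path


def write_smiles_file(smiles, path):
    """Write one SMILES string per line, dropping blank entries and duplicates."""
    cleaned = []
    for entry in smiles:
        entry = entry.strip()
        if entry and entry not in cleaned:
            cleaned.append(entry)
    path = Path(path)
    path.write_text("\n".join(cleaned) + "\n", encoding="utf-8")
    return path


# Written to a temporary directory here; in practice, point this at the file
# you pass to make_predictions.py.
out_dir = Path(tempfile.mkdtemp())
smiles_path = write_smiles_file(
    ["C/C=C(/C)CC", "CC(C)CC(C)N", "", "CCCCCCN", "CC(C)CC(=O)O", "CCCCCCN"],
    out_dir / "smiles.txt",
)
print(smiles_path.read_text(encoding="utf-8"))
```

The resulting file has the same four lines as the example above and can be passed to the script as its only argument.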

Kedro is centered around pipelines. Pipelines are a series of functions (called nodes) that transform data.
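To make the idea of nodes concrete, here is a plain-Python sketch of a pipeline as a list of functions wired together by named inputs and outputs. It deliberately does not use Kedro's API: Node, run_pipeline, and the two toy functions are hypothetical names made up for illustration and are unrelated to the real pipelines in this repository.

```python
from typing import Callable, Dict, List, NamedTuple


class Node(NamedTuple):
    """One pipeline step: a function plus the names of its inputs and output."""
    func: Callable
    inputs: List[str]
    output: str


def run_pipeline(nodes: List[Node], data: Dict[str, object]) -> Dict[str, object]:
    """Run each node as soon as all of its inputs are available in `data`."""
    data = dict(data)  # do not mutate the caller's dictionary
    pending = list(nodes)
    while pending:
        ready = [n for n in pending if all(name in data for name in n.inputs)]
        if not ready:
            raise ValueError("Some nodes have inputs that are never produced")
        for n in ready:
            data[n.output] = n.func(*(data[name] for name in n.inputs))
            pending.remove(n)
    return data


# Hypothetical node functions for illustration only.
def drop_empty(lines):
    return [line.strip() for line in lines if line.strip()]


def count_items(items):
    return len(items)


# Nodes are listed out of order on purpose: execution order follows the data.
toy_pipeline = [
    Node(count_items, ["clean_smiles"], "n_molecules"),
    Node(drop_empty, ["raw_smiles"], "clean_smiles"),
]

results = run_pipeline(toy_pipeline, {"raw_smiles": ["CCCCCCN", "", "CC(C)CC(=O)O\n"]})
print(results["clean_smiles"], results["n_molecules"])
```

The point of the sketch is that the order of execution is derived from which node produces which named dataset, which is why a dependency graph is a natural way to view a pipeline.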

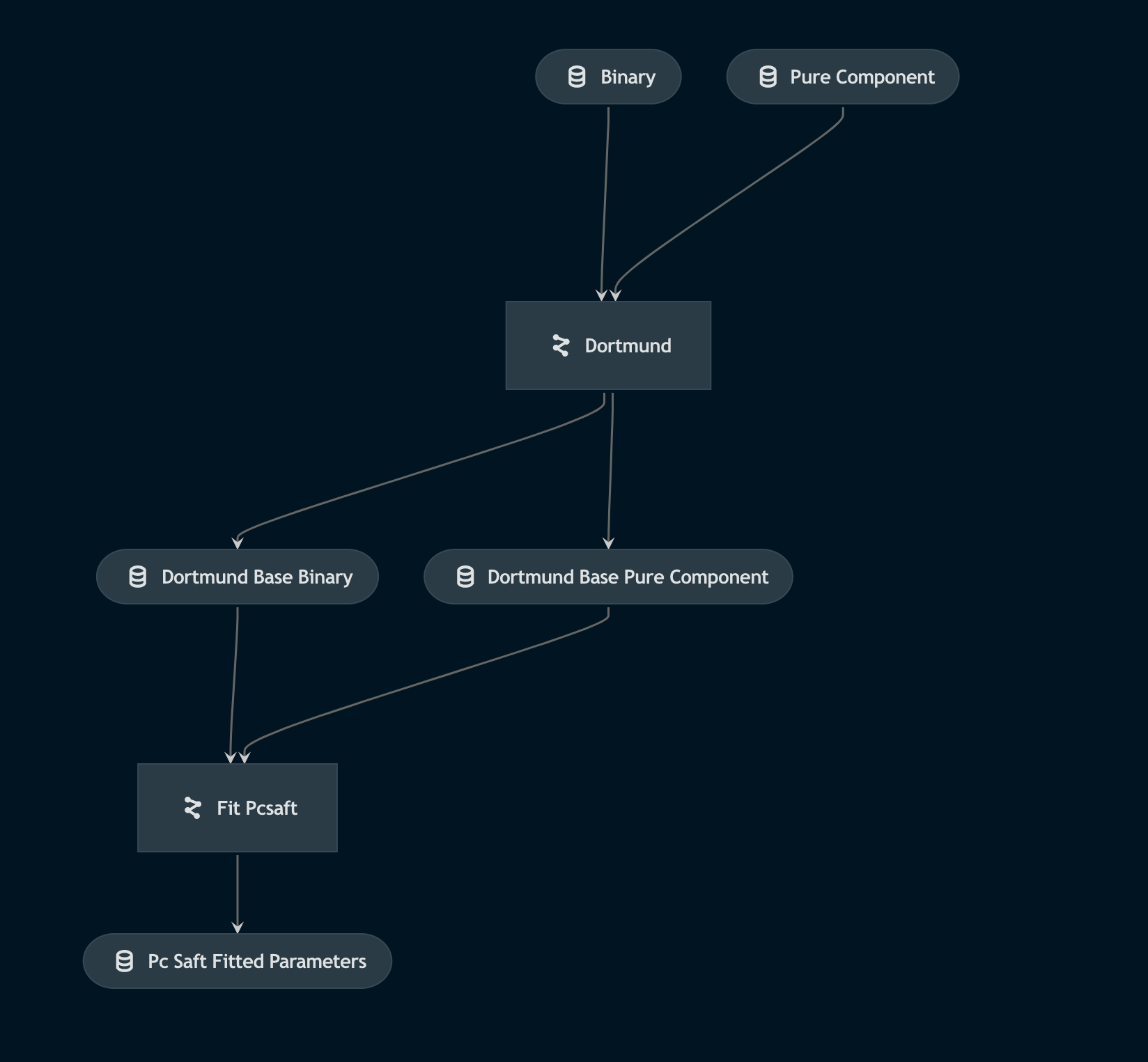

The easiest way to understand pipelines in this repository is using kedro-viz. kedro-viz is a web app that visualizes all pipelines via a dependency graph, as shown in the example below.

You can run kedro-viz using the following command:

kedro viz --autoreload

The --autoreload flag makes the visualization refresh automatically on code changes.

You can run all Kedro pipelines in the project using:

kedro run

However, running all pipelines is usually unnecessary since many of the data processing outcomes are cached via git LFS. Instead, you can run a specific pipeline. For example, here is how to run only the Dortmund database processing pipeline:

kedro run --pipeline ddb
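If you want to run a handful of specific pipelines one after another, a short wrapper around the same command can help. The following is a sketch, not part of this repository: run_pipelines and kedro_run_command are hypothetical helper names, and the only CLI usage assumed is the kedro run --pipeline form shown above. With dry_run=True (the default) it only prints the commands, so you can check the order before running anything.

```python
import subprocess
from typing import List


def kedro_run_command(pipeline: str) -> List[str]:
    """Build the argument list for `kedro run --pipeline <pipeline>`."""
    return ["kedro", "run", "--pipeline", pipeline]


def run_pipelines(pipelines: List[str], dry_run: bool = True) -> List[List[str]]:
    """Run the given pipelines in order, stopping at the first failure."""
    commands = [kedro_run_command(name) for name in pipelines]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError if a pipeline fails,
            # so later pipelines are not run on incomplete outputs.
            subprocess.run(cmd, check=True)
    return commands


# Dry run: prints the two commands without executing them.
commands = run_pipelines(["ddb", "ddb_model_prep"])
```

Call run_pipelines([...], dry_run=False) from the project root to actually execute the pipelines.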

Kedro relies on a configuration system that can be a bit unintuitive. All configuration is stored inside conf. Inside conf, you will see the following directories:

- base: Used for configuration stored in git

- local: Used for configuration only stored on your machine

Each of the above directories has two important configuration files:

- catalog.yml: Describes the Data Catalog, a flexible way of importing and exporting data to/from pipelines. I strongly recommend skimming the examples in the documentation to get a feel for how the Data Catalog works.

- parameters/**.yml: The parameters files for each pipeline contain parameters that can be referenced inside the pipeline. Note that parameters are needed for any node input that does not come from the Data Catalog or a previous node. To see how to reference parameters, look at the Dortmund data processing pipeline.
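To see at a glance which of these files exist in each configuration directory, you can list them with a few lines of Python. This is a sketch under assumptions: list_config_files is a hypothetical helper, it simply looks for catalog.yml and any .yml file under parameters/, and it is not how Kedro itself loads configuration. The demo builds a throwaway directory so it can run anywhere; in the repository you would pass "conf" instead.

```python
import tempfile
from pathlib import Path


def list_config_files(conf_dir, env):
    """Return catalog.yml and any .yml file under parameters/ in conf_dir/env."""
    conf_dir = Path(conf_dir)
    env_dir = conf_dir / env
    found = []
    catalog = env_dir / "catalog.yml"
    if catalog.is_file():
        found.append(catalog)
    found.extend(sorted(env_dir.glob("parameters/**/*.yml")))
    return [path.relative_to(conf_dir).as_posix() for path in found]


# Demo on a throwaway directory that mimics the layout described above.
conf = Path(tempfile.mkdtemp()) / "conf"
(conf / "base" / "parameters").mkdir(parents=True)
(conf / "local").mkdir()
(conf / "base" / "catalog.yml").write_text("")
(conf / "base" / "parameters" / "train_models.yml").write_text("")

for env in ("base", "local"):
    print(env, list_config_files(conf, env))
```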

- (Optional) Process the Dortmund data. This includes resolving SMILES strings, filtering data, and generating conformers. Note, these pipelines will not work without manually downloading the data from Dortmund databank, which is commercial.

kedro run --pipeline ddb

kedro run --pipeline ddb_model_prep

- (Optional) Generate COSMO-RS pretraining data.

kedro run --pipeline cosmo

- Train the model for predicting dipole moments.

kedro run --pipeline train_spk_mu_model

- (Optional) Fit PC-SAFT parameters. This requires the Dortmund databank since it uses the output of the Dortmund data processing step above.

# Regression on Dortmund data

kedro run --pipeline pcsaft_regression

# Generate LaTeX table with stats about fitting

kedro run --pipeline pcp_saft_fitting_results_table

# (Optional) Run regression on COSMO-RS data

kedro run --pipeline pcsaft_cosmo_regression

kedro run --pipeline pcp_saft_cosmo_fitting_results_table

- Train models. This can be run using the input files in data/05_model_input.

# Preprocess data

kedro run --pipeline prepare_regressed_split

# Train feed-forward network

kedro run --pipeline train_ffn_regressed_model

# Train random forest

kedro run --pipeline train_sklearn_regressed_model

# Train D-MPNN model

kedro run --pipeline train_chemprop_regressed_model

# Train MPNN model

kedro run --pipeline train_pyg_regressed_model

# Pretrain on COSMO-RS and fine-tune on Dortmund

# Make sure to update the checkpoint_artifact_id under

# pyg_regressed_pcp_saft_model.train_args in

# conf/base/parameters/train_models.yml

# to the one from the pretrain run on wandb

kedro run --pipeline train_pyg_pretrain_cosmo_model

kedro run --pipeline train_pyg_regressed_model

- Evaluate models. Make sure to update results_table in conf/base/parameters/results_analysis.yml with the correct wandb files.

kedro run --pipeline results_table

To use JupyterLab, run:

kedro jupyter lab

And if you want to run an IPython session:

kedro ipython

For both, you will need to run the following line magics to get the Kedro global variables.

%load_ext kedro.extras.extensions.ipython

%reload_kedro

You can then load data like this:

df = catalog.load("imported_thermoml")