Please open a pull request if you find that a venue is missing.

- Search-oriented conversational AI @ ICTIR 2017

- Conversational Approaches to IR @ SIGIR 2017

- Talking with Conversational Agents in Collaborative Action @ CSCW 2017

- 1st Conversational AI: Today's Practice and Tomorrow's Potential @ NeurIPS 2017

- Search-oriented conversational AI @ EMNLP 2018

- 1st Workshop of Knowledge-aware and Conversational Recommender Systems @ RecSys 2018

- Conversational Approaches to IR @ SIGIR 2018

- 2nd Conversational AI: Today's Practice and Tomorrow's Potential @ NeurIPS 2018

- NLP for Conversational AI @ ACL 2019

- Search-Oriented Conversational AI @ WWW 2019

- Search-Oriented Conversational AI @ IJCAI 2019

- Conversational Interaction Systems @ SIGIR 2019

- Conversational Agents: Acting on the Wave of Research and Development @ CHI 2019

- 1st International Conference on Conversational User Interfaces 2019

- Dagstuhl Seminar on Conversational Search 2019

- 3rd Conversational AI: Today's Practice and Tomorrow's Potential @ NeurIPS 2019

- user2agent 2019: 1st Workshop on User-Aware Conversational Agents @ IUI 2019

- Conversational Systems for E-Commerce Recommendations and Search @ WSDM 2020

- 3rd International Workshop on Conversational Approaches to Information Retrieval (CAIR) @ CHIIR 2020

- Search-Oriented Conversational AI @ EMNLP 2020

- user2agent 2020: 2nd Workshop on User-Aware Conversational Agents @ IUI 2020

- 2nd International Conference on Conversational User Interfaces 2020

- KDD Converse 2020: Workshop on Conversational Systems Towards Mainstream Adoption

- CUI@CSCW2020: Collaborating through Conversational User Interfaces

- Multimodal Conversational AI workshop @ ACM Multimedia 2020

- Workshop on Mixed-Initiative ConveRsatiOnal Systems (MICROS) @ ECIR 2021

- CUI@IUI2021 workshop: Theoretical and Methodological Challenges in Intelligent Conversational User Interface Interactions

- Conversational User Interfaces Conference (CUI 2021)

- Future Conversations 2021 workshop @ CHIIR 2021

- Let's Talk About CUIs: Putting Conversational User Interface Design Into Practice @ CHI 2021

- SCAI QReCC 21 Conversational Question Answering Challenge as part of the 6th SCAI workshop

- 3rd NLP for Conversational AI Workshop @ EMNLP 2021

- When Creative AI Meets Conversational AI @ COLING 2022 (no website yet)

- 7th SCAI workshop @ SIGIR 2022

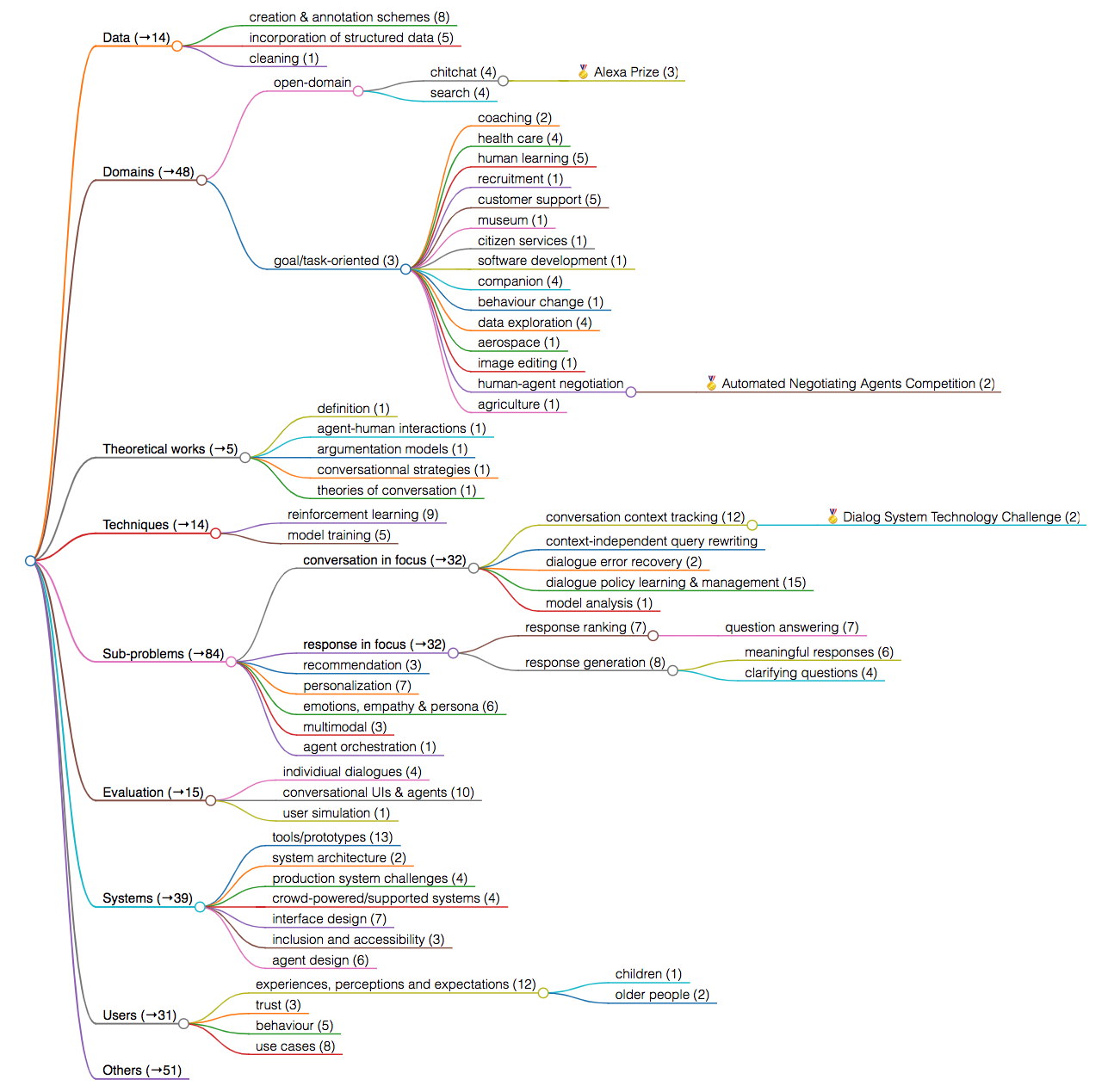

This categorization was created bottom-up from the 300+ papers accepted at the workshops and conferences listed above (up to and including the MICROS 2021 workshop). Each paper was assigned to exactly one category or sub-category. The result is of course subjective: ask 10 different researchers to come up with categories and you will get 10 different results.

The bracketed numbers starting with → indicate the total number of papers in that branch of the tree; e.g. a total of 48 papers fall into some node inside the Domains category. The bracketed numbers after a sub-category without → indicate the number of papers I assigned to that particular node. For instance, chitchat (4) means that 4 papers fell into this category, while Alexa Prize (3) means that three different papers fell into this more specific sub-category.
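To make the relationship between the two kinds of numbers concrete, here is a minimal Python sketch: a branch's → total is the node's own count plus the totals of everything below it. It assumes, based on the wording above, that Alexa Prize sits directly below chitchat and has no further children (so chitchat would show → 7 under that assumption). The `Node` class and its `total` method are hypothetical helpers for illustration, not part of markmap or the original markdown file.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    own: int  # papers assigned directly to this node (the number without →)
    children: list[Node] = field(default_factory=list)

    def total(self) -> int:
        # The → number: this node's own papers plus all papers in every node below it
        return self.own + sum(child.total() for child in self.children)


# Hypothetical structure: Alexa Prize assumed to be the only child of chitchat
alexa = Node("Alexa Prize", 3)
chitchat = Node("chitchat", 4, [alexa])
print(chitchat.total())  # 7
```

Because each paper sits in exactly one node, summing own counts over a subtree never double-counts a paper.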

The mindmap was created with markmap, a neat markdown-to-mindmap tool. The SVG of the mindmap is also available: open it in your favourite browser to see an unpixelated version. If you want to reuse, alter or update the categories, take the markdown file as a starting point and then head over to markmap!

Interestingly, if you check out the report on the Dagstuhl Conversational Search seminar held in late 2019 (where many researchers came together to define and work on a roadmap), you will find that the themes chosen there are not well covered by the research lines above. Dagstuhl themes:

- Defining conversational search

- Evaluating conversational search

- Modeling in conversational search

- Argumentation and explanation

- Scenarios that invite conversational search

- Conversational search for learning technologies

- Common conversational community prototype

- 🚴 XOR-TyDi QA

- "XOR-TyDi QA brings together for the first time information-seeking questions, open-retrieval QA, and multilingual QA to create a multilingual open-retrieval QA dataset that enables cross-lingual answer retrieval. It consists of questions written by information-seeking native speakers in 7 typologically diverse languages and answer annotations that are retrieved from multilingual document collections."

- 🚴 Leaderboard for multi-turn response selection

- "Multi-turn response selection in retrieval-based chatbots is a task which aims to select the best-matched response from a set of candidates, given the context of a conversation. This task is attracting more and more attention in academia and industry. However, no one has maintained a leaderboard and a collection of popular papers and datasets yet. The main objective of this repository is to provide the reader with a quick overview of benchmark datasets and the state-of-the-art studies on this task, which serves as a stepping stone for further research."

- 🚴 Papers with code leaderboard for conversational response selection

- 🗂 MANtIS: a Multi-Domain Information Seeking Dialogues Dataset.

- "Unlike previous information-seeking dialogue datasets that focus on only one domain, MANtIS has more than 80K diverse conversations from 14 different Stack Exchange sites, such as physics, travel and worldbuilding. Additionally, all dialogues have a url, providing grounding to the conversations. It can be used for the following tasks: conversation response ranking/generation and user intent prediction. We provide manually annotated user intent labels for more than 1300 dialogues, resulting in a total of 6701 labeled utterances."

- 🗂 CANARD

- "CANARD is a dataset for question-in-context rewriting that consists of questions each given in a dialog context together with a context-independent rewriting of the question."

- "CANARD is constructed by crowdsourcing question rewritings using Amazon Mechanical Turk. We apply several automatic and manual quality controls to ensure the quality of the data collection process. The dataset consists of 40,527 questions with different context lengths."

- 🗂 QReCC

- "We introduce QReCC (Question Rewriting in Conversational Context), an end-to-end open-domain question answering dataset comprising of 14K conversations with 81K question-answer pairs. The goal of this dataset is to provide a challenging benchmark for end-to-end conversational question answering that includes the individual subtasks of question rewriting, passage retrieval and reading comprehension."

- 🗂 CAsT-19: A Dataset for Conversational Information Seeking

- "The corpus is 38,426,252 passages from the TREC Complex Answer Retrieval (CAR) and Microsoft MAchine Reading COmprehension (MARCO) datasets. Eighty information seeking dialogues (30 train, 50 test) are on average 9 to 10 questions long. A dialogue may explore a topic broadly or drill down into subtopics." (source)

- 🗂 FIRE 2020 task: Retrieval From Conversational Dialogues (RCD-2020)

- "Task 1: Given an excerpt of a dialogue act, output the span of text indicating a potential piece of information need (requiring contextualization)."

- "Task 2: Given an excerpt of a dialogue act, return a ranked list of passages containing information on the topic of the information need (requiring contextualization)."

- "The participants shall be provided with a manually annotated sample of dialogue spans extracted from four movie scripts along with entire movie scripts. The collection from which passages are to be retrieved for contextualization is the Wikipedia collection (dump from 2019). Each document in the Wikipedia collection is composed of explicitly marked-up passages (in the form of the paragraph tags). The retrievable units in our task are the passages (instead of whole documents)."

- 🗂 MIMICS

- "A Large-Scale Data Collection for Search Clarification"

- "MIMICS-Click includes over 400k unique queries, their associated clarification panes, and the corresponding aggregated user interaction signals (i.e., clicks)."

- "MIMICS-ClickExplore is an exploration data that includes aggregated user interaction signals for over 60k unique queries, each with multiple clarification panes."

- "MIMICS-Manual includes over 2k unique real search queries. Each query-clarification pair in this dataset has been manually labeled by at least three trained annotators."

- 🗂 ClariQ challenge

- "we have crowdsourced a new dataset to study clarifying questions"

- "We have extended the Qulac dataset and base the competition mostly on the training data that Qulac provides. In addition, we have added some new topics, questions, and answers in the training set."

- 🗂 PolyAI

- "This repository provides tools to create reproducible datasets for training and evaluating models of conversational response. This includes: Reddit (3.7 billion comments), OpenSubtitles (400 million lines from movie and television subtitles) and Amazon QA (3.6 million question-response pairs in the context of Amazon products)"

- 🗂 🚴 Natural Questions: Google's latest question answering dataset.

- "Natural Questions contains 307K training examples, 8K examples for development, and a further 8K examples for testing."

- "NQ is the first dataset to use naturally occurring queries and focus on finding answers by reading an entire page, rather than extracting answers from a short paragraph. To create NQ, we started with real, anonymized, aggregated queries that users have posed to Google's search engine. We then ask annotators to find answers by reading through an entire Wikipedia page as they would if the question had been theirs. Annotators look for both long answers that cover all of the information required to infer the answer, and short answers that answer the question succinctly with the names of one or more entities. The quality of the annotations in the NQ corpus has been measured at 90% accuracy."

- Paper

- 🗂 🚴 QuAC: Question Answering in Context

- "A dataset for modeling, understanding, and participating in information seeking dialog. Data instances consist of an interactive dialog between two crowd workers: (1) a student who poses a sequence of freeform questions to learn as much as possible about a hidden Wikipedia text, and (2) a teacher who answers the questions by providing short excerpts (spans) from the text."

- 🗂 🚴 CoQA: A Conversational Question Answering Challenge

- "CoQA contains 127,000+ questions with answers collected from 8000+ conversations. Each conversation is collected by pairing two crowdworkers to chat about a passage in the form of questions and answers."

- 🗂 🚴 HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering

- "HotpotQA is a question answering dataset featuring natural, multi-hop questions, with strong supervision for supporting facts."

- 🗂 🚴 QANTA: Question Answering is Not a Trivial Activity

- "A question answering dataset composed of questions from Quizbowl - a trivia game that is challenging for both humans and machines. Each question contains 4-5 pyramidally arranged clues: obscure ones at the beginning and obvious ones at the end."

- 🗂 MSDialog

- "The MSDialog dataset is a labeled dialog dataset of question answering (QA) interactions between information seekers and answer providers from an online forum on Microsoft products."

- "The annotated dataset contains 2,199 multi-turn dialogs with 10,020 utterances."

- 🗂 ShARC: Shaping Answers with Rules through Conversation

- "Most work in machine reading focuses on question answering problems where the answer is directly expressed in the text to read. However, many real-world question answering problems require the reading of text not because it contains the literal answer, but because it contains a recipe to derive an answer together with the reader's background knowledge. We formalise this task and develop a crowd-sourcing strategy to collect 37k task instances."

- 🗂 Training Millions of Personalized Dialogue Agents

- 5 million personas and 700 million persona-based dialogues

- 🗂 Ubuntu Dialogue Corpus

- "A dataset containing almost 1 million multi-turn dialogues, with a total of over 7 million utterances and 100 million words."

- 🗂 MultiWOZ: Multi-domain Wizard-of-Oz dataset

- "Multi-Domain Wizard-of-Oz dataset (MultiWOZ), a fully-labeled collection of human-human written conversations spanning over multiple domains and topics."

- 🗂 Frames: Complex conversations and decision-making

- "The 1369 dialogues in Frames were collected in a Wizard-of-Oz fashion. Two humans talked to each other via a chat interface. One was playing the role of the user and the other one was playing the role of the conversational agent. We call the latter a wizard as a reference to the Wizard of Oz, the man behind the curtain. The wizards had access to a database of 250+ packages, each composed of a hotel and round-trip flights. We gave users a few constraints for each dialogue and we asked them to find the best deal."

- 🗂 Wizard of Wikipedia: 22,311 conversations grounded with Wikipedia knowledge

- "We consider the following general open-domain dialogue setting: two participants engage in chit-chat, with one of the participants selecting a beginning topic, and during the conversation the topic is allowed to naturally change. The two participants, however, are not quite symmetric: one will play the role of a knowledgeable expert (which we refer to as the wizard) while the other is a curious learner (the apprentice)."

- "... first crowd-sourcing 1307 diverse discussion topics and then conversations involving 201,999 utterances about them"

- Available at http://www.parl.ai/.

- 🗂 Qulac: 10,277 single-turn conversations consisting of clarifying questions and their answers on multi-faceted and ambiguous queries from the TREC Web Track 2009-2012.

- "Qulac presents the first dataset and offline evaluation framework for studying clarifying questions in open-domain information-seeking conversational search systems."

- "... we collected Qulac following a four-step strategy. In the first step, we define the topics and their corresponding subtopics. In the second step, we collected several candidates clarifying questions for each query through crowdsourcing. Then, in the third step, we assessed the relevance of the questions to each facet and collected new questions for those facets that require more specific questions. Finally, in the last step, we collected the answers for every query-facet-question triplet."

- 🗂 TREC 2019 Conversational Search task

- 🗂 🚴 MS MARCO: question answering and passage re-ranking

- This is not a conversational dataset, but it has been used in the past for some sub-tasks of conversational IR (e.g. question rewriting).

- 🗂 🚴 CoSQL: a corpus for building cross-domain conversational text-to-SQL systems.

- "It is the dialogue version of the Spider and SParC tasks. CoSQL consists of 30k+ turns plus 10k+ annotated SQL queries, obtained from a Wizard-of-Oz collection of 3k dialogues querying 200 complex databases spanning 138 domains."

- ORCAS: Open Resource for Click Analysis in Search

- NLP Progress (many tasks and resources)

- Papers with code

- arXiv Sanity Preserver