This is an unofficial TensorFlow implementation of VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera.

For the Caffe model required by this repository, please contact the author of the paper.

- Python 3

- tensorflow v2 (2.0.0+)

- pycaffe

- matplotlib 3.0.0 or 3.0.2 (v3.0.2 occasionally shuts down for an unknown reason)

Fedora 29

pip3 install -r requirements.txt --user

sudo dnf install protobuf-devel leveldb-devel snappy-devel opencv-devel boost-devel hdf5-devel glog-devel gflags-devel lmdb-devel atlas-devel python-lxml boost-python3-devel

git clone https://github.com/BVLC/caffe.git

cd caffe

sudo make all

sudo make runtest

sudo make pycaffe

sudo make distribute

sudo cp -a .build_release/lib/. /usr/lib64/

sudo cp -a distribute/python/caffe/ /usr/lib/python3.7/site-packages/

- Drop the Caffe model into `models/caffe_model`.

- Run `init_weights.py` to generate the TensorFlow model.

- (Deprecated) `benchmark.py` is a class implementation containing all the elements needed to run the model.

- `run_estimator.py` is a script for running with a video stream.

- (Recommended) `run_estimator_ps.py` is a multiprocessing version of the script. If the 3D plotting function shuts down in `run_estimator.py`, try this one.

- `run_estimator_robot.py` additionally provides a ROS network and/or serial connection for communication in robot control.

- [NOTE] To run the video-stream-based scripts mentioned above:

  i) click the left mouse button to confirm the simple static bounding box generated by the HOG method;

  ii) press any key to exit while the network is running.

- `run_pic.py` is a script for running on a single picture: the outputs are 4×21 heatmaps and 2D results.
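To illustrate how 2D results can be read out of 4×21 output maps, here is a minimal sketch. It follows the VNect paper's formulation, in which each of the 21 joints has one 2D heatmap plus three location maps (X, Y, Z). The function name `extract_joints`, the `(H, W, J)` array layout, and the toy data are assumptions for illustration; this is not the code in `run_pic.py`.

```python
import numpy as np


def extract_joints(heatmaps, x_maps, y_maps, z_maps):
    """Return per-joint 2D peak positions and 3D coordinates.

    All four inputs are assumed to have shape (H, W, J), with J joints.
    """
    num_joints = heatmaps.shape[-1]
    joints_2d = np.zeros((num_joints, 2), dtype=np.int64)
    joints_3d = np.zeros((num_joints, 3), dtype=np.float32)
    for j in range(num_joints):
        # The 2D joint position is the heatmap peak (row, col).
        row, col = np.unravel_index(np.argmax(heatmaps[..., j]), heatmaps.shape[:2])
        joints_2d[j] = (row, col)
        # The 3D coordinate is read from the location maps at that same peak.
        joints_3d[j] = (x_maps[row, col, j], y_maps[row, col, j], z_maps[row, col, j])
    return joints_2d, joints_3d


# Toy data (hypothetical): 8x8 maps for 21 joints, with a known peak for joint 0.
H, W, J = 8, 8, 21
heatmaps = np.zeros((H, W, J), dtype=np.float32)
heatmaps[3, 5, 0] = 1.0
x_maps = np.full((H, W, J), 0.1, dtype=np.float32)
y_maps = np.full((H, W, J), 0.2, dtype=np.float32)
z_maps = np.full((H, W, J), 0.3, dtype=np.float32)

joints_2d, joints_3d = extract_joints(heatmaps, x_maps, y_maps, z_maps)
```

Because the argmax always returns some location, a joint that is not visible still yields a coordinate, which is one reason results can be wrong when a joint is missing from the input.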

- I don't know why the 3D plotting function (from matplotlib) shuts down in the script in some cases. It may be caused by differences between programming environments. A pull request that fixes this would be greatly appreciated.

- The input image in this implementation is in BGR color format (cv2.imread()) and the pixel values are normalized to the range [-0.4, 0.6).

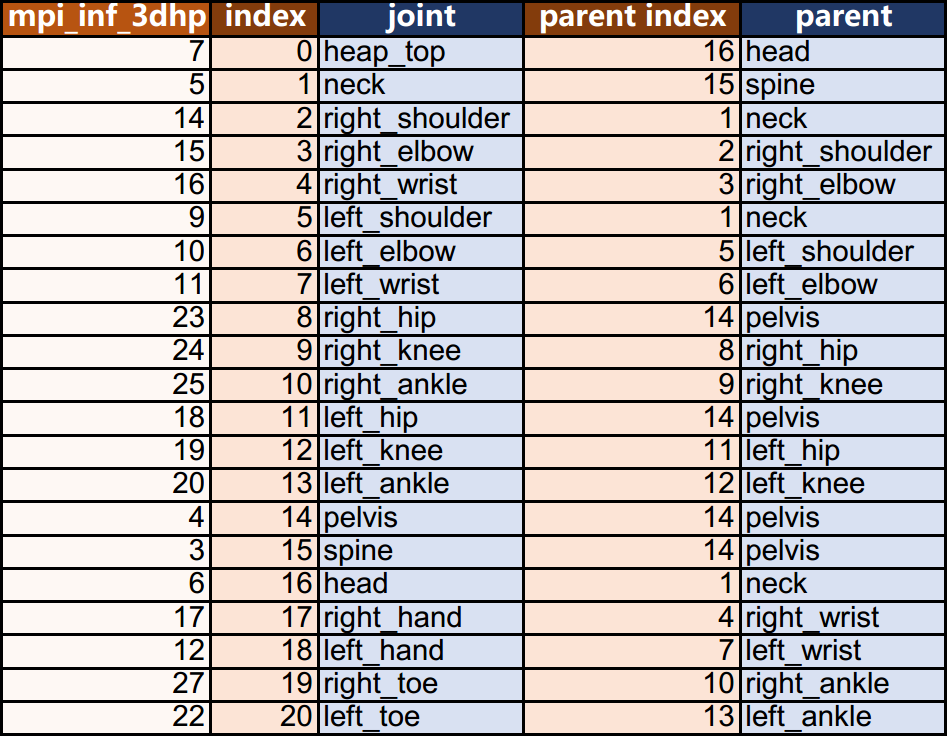

- The joint-parent map (detailed information in `materials/joint_index.xlsx`):

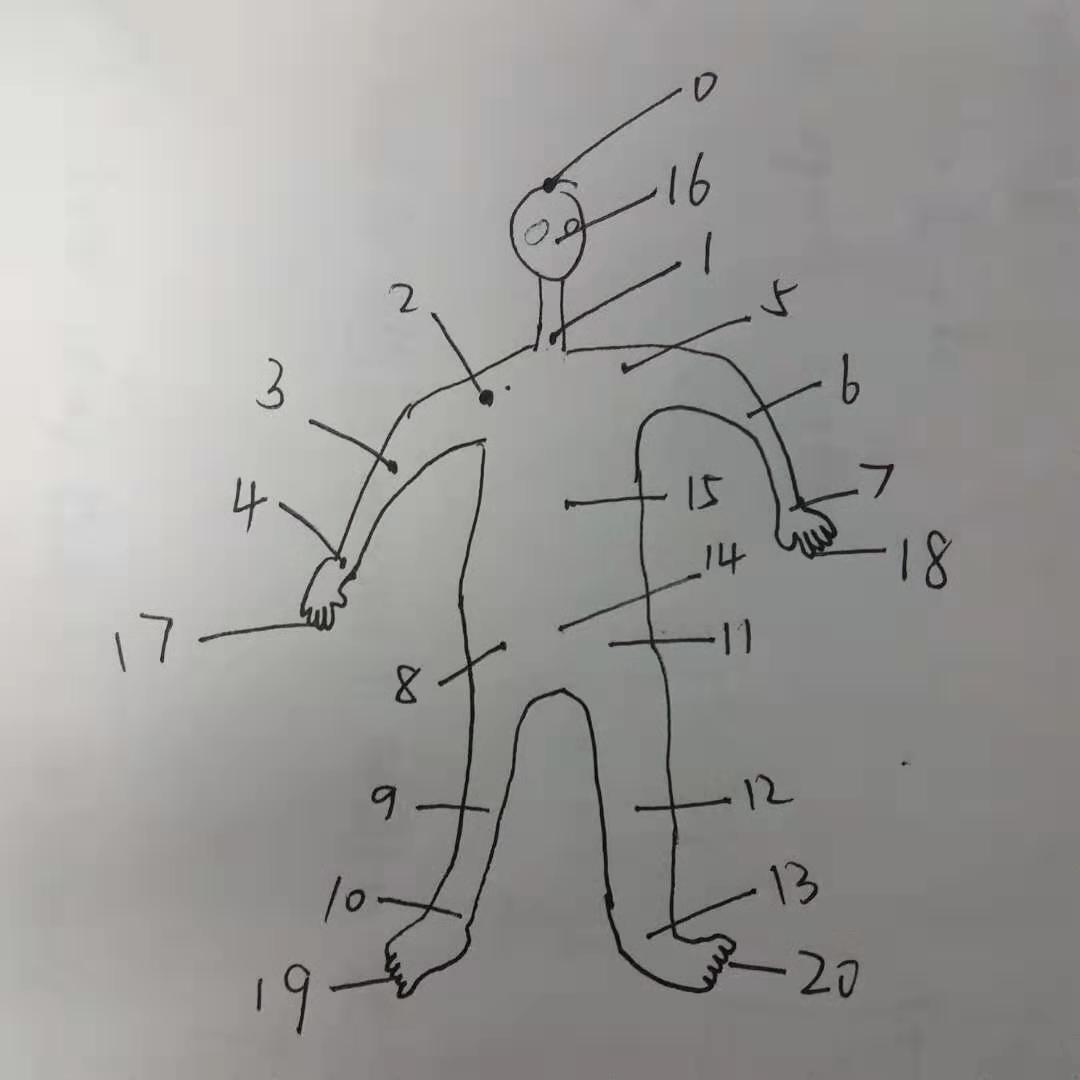

- I drew a diagram to show the joint positions (don't laugh):

- Every input image is assumed to contain all 21 joints, so the model tends to produce wrong results when a joint is actually not in the input.

- In some cases the estimation results are not as good as those shown in the paper author's promotional video.

- UPDATE: the running speed is now faster thanks to some coordinate extraction optimization!

- The training script `train.py` is not complete yet (I failed to reconstruct the model :( ), so do not use it. Maybe some day I will try to fix it. Pull requests are also welcome.
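The input convention noted above (BGR channel order, pixel values mapped to [-0.4, 0.6)) can be sketched as follows. The helper name `normalize_bgr` and the divisor of 256 are assumptions, chosen so that the upper bound stays open as the stated range implies; the repository's own scripts may scale differently.

```python
import numpy as np


def normalize_bgr(image_bgr: np.ndarray) -> np.ndarray:
    """Map uint8 BGR pixels (0..255) to float32 values in [-0.4, 0.6)."""
    # Assumption: dividing by 256 keeps the maximum strictly below 0.6.
    return image_bgr.astype(np.float32) / 256.0 - 0.4


# Stand-in for a frame returned by cv2.imread(), which yields uint8 BGR arrays.
frame = np.array([[[0, 128, 255]]], dtype=np.uint8)
normalized = normalize_bgr(frame)
```

Note that no channel reordering happens here: an image loaded with another library in RGB order would need its channels reversed first.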

- Implement a better bounding box strategy.

- Implement the training script.

For the MPI-INF-3DHP dataset, refer to my other repository.

- original MATLAB implementation provided by the paper author.

- timctho/VNect-tensorflow

- EJShim/vnect_estimator