This repository is cloned from chenjun2hao/DDRNet.pytorch and modified for research.

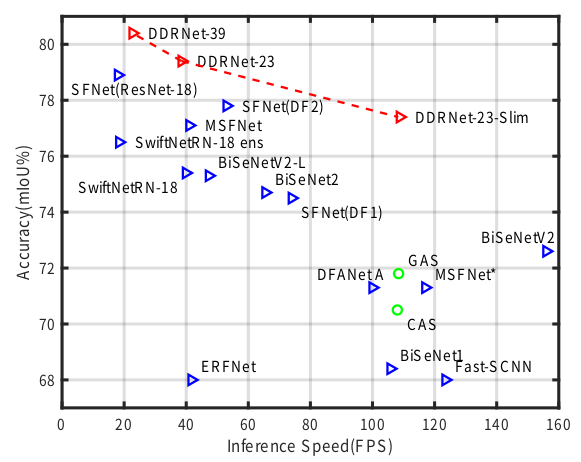

This is the unofficial code of Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes, which achieve a state-of-the-art trade-off between accuracy and speed on Cityscapes and CamVid without using inference acceleration or extra data. On a single 2080Ti GPU, DDRNet-23-slim yields 77.4% mIoU at 109 FPS on the Cityscapes test set and 74.4% mIoU at 230 FPS on the CamVid test set.

The code mainly borrows from HRNet-Semantic-Segmentation OCR and the official repository; thanks for their work.

A comparison of the speed-accuracy trade-off on the Cityscapes test set.

Requirements:

- torch>=1.7.0
- cudatoolkit>=10.2

- Download the two files below from Cityscapes to `${CITYSCAPES_ROOT}`:

  - leftImg8bit_trainvaltest.zip
  - gtFine_trainvaltest.zip

- Unzip them.

- Rename the folders as below:

  ```
  └── cityscapes
      ├── leftImg8bit
      │   ├── test
      │   ├── train
      │   └── val
      └── gtFine
          ├── test
          ├── train
          └── val
  ```

- Update some properties in `${REPO_ROOT}/experiments/cityscapes/${MODEL_YAML}` as below:

  ```yaml
  DATASET:
    DATASET: cityscapes
    ROOT: ${CITYSCAPES_ROOT}
    TEST_SET: 'cityscapes/list/test.lst'
    TRAIN_SET: 'cityscapes/list/train.lst'
    ...
  ```
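Once the steps above are done, a quick check that the folders match the expected tree can catch a mis-renamed directory before training or evaluation fails. The following is a minimal sketch, not part of this repository: `missing_cityscapes_dirs` is a hypothetical helper, and it takes the directory shown as `cityscapes` in the tree (the one that contains `leftImg8bit` and `gtFine`).

```python
import os

# Sub-folders expected inside the `cityscapes` directory, taken from the tree above.
EXPECTED_DIRS = [
    os.path.join(top, split)
    for top in ("leftImg8bit", "gtFine")
    for split in ("train", "val", "test")
]


def missing_cityscapes_dirs(cityscapes_dir):
    """Return the expected sub-folders that do not exist under `cityscapes_dir`.

    Hypothetical helper for illustration only; it is not part of this repository.
    """
    return [
        d for d in EXPECTED_DIRS
        if not os.path.isdir(os.path.join(cityscapes_dir, d))
    ]
```

For example, calling `missing_cityscapes_dirs("/path/to/cityscapes")` should return an empty list once the layout is correct; any entry it returns names a folder that still needs to be created or renamed.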

- The official repository provides pretrained models for Cityscapes.

- Download the pretrained model to `${MODEL_DIR}`.

- Update `MODEL.PRETRAINED` and `TEST.MODEL_FILE` in `${REPO_ROOT}/experiments/cityscapes/${MODEL_YAML}` as below:

  ```yaml
  ...
  MODEL:
    ...
    PRETRAINED: "${MODEL_DIR}/${MODEL_NAME}.pth"
    ALIGN_CORNERS: false
    ...
  TEST:
    ...
    MODEL_FILE: "${MODEL_DIR}/${MODEL_NAME}.pth"
    ...
  ```

- Execute the command below to evaluate the model on Cityscapes-val:

  ```bash
  cd ${REPO_ROOT}
  python tools/eval.py --cfg experiments/cityscapes/ddrnet23_slim.yaml
  ```
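The `${...}` values in the YAML snippets above are placeholders to be replaced with real paths. Editing the file by hand is enough; if you script the setup instead, Python's standard `string.Template` happens to use the same `${NAME}` syntax. The sketch below is an illustration only: `fill_placeholders` is a hypothetical helper, and the fragment mirrors just the fields from the step above rather than a full config.

```python
from string import Template

# Config fragment with the placeholders used in the pretrained-model step.
CONFIG_TEMPLATE = """\
MODEL:
  PRETRAINED: "${MODEL_DIR}/${MODEL_NAME}.pth"
  ALIGN_CORNERS: false
TEST:
  MODEL_FILE: "${MODEL_DIR}/${MODEL_NAME}.pth"
"""


def fill_placeholders(text, **values):
    """Substitute ${NAME} placeholders; names without a value are left untouched."""
    return Template(text).safe_substitute(**values)


# Hypothetical example values; point them at your own download folder and checkpoint.
print(fill_placeholders(CONFIG_TEMPLATE, MODEL_DIR="/data/models", MODEL_NAME="my_checkpoint"))
```

`safe_substitute` is used instead of `substitute` so that any other `${...}` placeholder you have not filled yet stays in place instead of raising a `KeyError`.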

| model | OHEM | Multi-scale | Flip | mIoU | FPS | E2E Latency (s) | Link |
|---|---|---|---|---|---|---|---|
| DDRNet23_slim | Yes | No | No | 77.83 | 91.31 | 0.062 | official |
| DDRNet23_slim | Yes | No | Yes | 78.42 | TBD | TBD | official |
| DDRNet23 | Yes | No | No | 79.51 | TBD | TBD | official |
| DDRNet23 | Yes | No | Yes | 79.98 | TBD | TBD | official |

mIoU denotes the mIoU on the Cityscapes validation set.
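For reference, mIoU is the mean over classes of the intersection-over-union IoU_c = TP_c / (TP_c + FP_c + FN_c). The sketch below computes it from a confusion matrix. It is not the repository's evaluation code: it assumes ignored pixels were already excluded when the matrix was built, and it skips classes that appear in neither ground truth nor prediction, which the repo's own code may handle differently.

```python
def mean_iou(confusion):
    """Compute per-class IoU and mIoU from a square confusion matrix.

    confusion[i][j] counts pixels whose ground-truth class is i and
    predicted class is j. Classes with TP + FP + FN == 0 get IoU None
    and are left out of the mean.
    """
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # row total minus TP
        fp = sum(confusion[r][c] for r in range(n)) - tp  # column total minus TP
        denom = tp + fp + fn
        ious.append(tp / denom if denom > 0 else None)
    valid = [v for v in ious if v is not None]
    return ious, sum(valid) / len(valid)
```

For a two-class matrix `[[3, 1], [2, 4]]`, class 0 has IoU 3 / (3 + 2 + 1) = 0.5 and class 1 has IoU 4 / (4 + 1 + 2) = 4/7, so the mIoU is their average, about 0.536.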

FPS is measured following the test code provided by SwiftNet (see `speed_test` in `lib/utils/utils.py` for details).

E2E Latency denotes the end-to-end latency, including pre- and post-processing.

FPS and latency are measured with batch size 1 on an RTX 2080Ti GPU and a Threadripper 2950X CPU.
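FPS and E2E latency time different things: FPS covers only repeated forward passes, while latency covers one frame from raw input to final output. Below is a minimal, framework-agnostic sketch of both. It follows the general SwiftNet-style protocol (warm-up runs, then timing with synchronization) but is not the repo's `speed_test`; `measure_fps`, `measure_latency` and their parameters are hypothetical names.

```python
import time


def measure_fps(run_once, warmup=10, iters=100, sync=None):
    """Throughput of `run_once` (one forward pass at batch size 1).

    Warm-up calls are excluded from timing. `sync` is an optional callable
    that blocks until queued GPU work is finished (e.g. torch.cuda.synchronize).
    """
    for _ in range(warmup):
        run_once()
    if sync is not None:
        sync()  # make sure warm-up work is done before starting the clock
    start = time.perf_counter()
    for _ in range(iters):
        run_once()
    if sync is not None:
        sync()  # wait for the last pass to finish before stopping the clock
    elapsed = time.perf_counter() - start
    return iters / elapsed


def measure_latency(preprocess, infer, postprocess, frame, sync=None):
    """End-to-end latency in seconds for one frame, plus the final output."""
    start = time.perf_counter()
    out = postprocess(infer(preprocess(frame)))
    if sync is not None:
        sync()
    return time.perf_counter() - start, out
```

With PyTorch, `run_once` could be `lambda: model(x)` called under `torch.no_grad()`, with the model in eval mode on the GPU and `x` a batch-size-1 input tensor, and `sync=torch.cuda.synchronize`. Synchronizing matters because CUDA kernels are launched asynchronously, so without it the timer can stop before the GPU has finished.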

Note

- Setting `ALIGN_CORNERS: false` in `***.yaml` reaches higher accuracy.
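`ALIGN_CORNERS` changes which input coordinates bilinear upsampling samples. The sketch below reproduces, for one axis, the source-coordinate convention PyTorch uses for linear interpolation modes; it is an illustration, not code from this repository. Because the two settings sample slightly shifted locations, one plausible (unverified) explanation for the accuracy difference is that inference works best with the same setting the weights were trained with.

```python
def source_coord(dst, in_size, out_size, align_corners):
    """Input coordinate sampled for output pixel `dst` along one axis
    when resizing bilinearly from `in_size` to `out_size` pixels."""
    if align_corners:
        # The centers of the corner pixels of input and output coincide exactly.
        if out_size == 1:
            return 0.0
        return dst * (in_size - 1) / (out_size - 1)
    # Pixels are treated as unit areas; the half-pixel offsets align the grids.
    src = (dst + 0.5) * in_size / out_size - 0.5
    return max(src, 0.0)  # linear modes clamp negative coordinates to 0
```

For example, when upsampling a 4-pixel row to 8 pixels, output pixel 1 samples input position 3/7 (about 0.43) with `align_corners=True`, but 0.25 with `align_corners=False`.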

Download the ImageNet-pretrained model, then train the model with 2 NVIDIA RTX 3080 GPUs:

```bash
cd ${PROJECT}
python -m torch.distributed.launch --nproc_per_node=2 tools/train.py --cfg experiments/cityscapes/ddrnet23_slim.yaml
```

Self-trained models (coming soon):

| model | Train Set | Test Set | OHEM | Multi-scale | Flip | mIoU | Link |
|---|---|---|---|---|---|---|---|
| DDRNet23_slim | train | eval | Yes | No | Yes | 77.77 | Baidu/password:it2s |
| DDRNet23_slim | train | eval | Yes | Yes | Yes | 79.57 | Baidu/password:it2s |
| DDRNet23 | train | eval | Yes | No | Yes | ~ | None |
| DDRNet39 | train | eval | Yes | No | Yes | ~ | None |

Note

- Set `ALIGN_CORNERS: true` in `***.yaml`, because I use the default setting of HRNet-Semantic-Segmentation OCR.
- Multi-scale testing uses the scales 0.5, 0.75, 1.0, 1.25, 1.5 and 1.75; it runs very slowly.
- As suggested by ydhongHIT, changing to `align_corners=True` can give better performance; the default option is `False`.
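A back-of-the-envelope estimate shows why multi-scale testing is slow. Assuming compute grows roughly with the number of input pixels (a simplification: the actual inference code may crop or tile large inputs), the six scales process the sum of s² = 8.6875 times the pixels of one single-scale pass, and flip testing doubles that to about 17.4 times. The snippet below just does this arithmetic; `relative_cost` is a hypothetical helper.

```python
# Scales listed in the note above; Cityscapes images are 2048 x 1024 pixels.
SCALES = [0.5, 0.75, 1.0, 1.25, 1.5, 1.75]
BASE_W, BASE_H = 2048, 1024


def relative_cost(scales, flip):
    """Pixels processed relative to one single-scale pass.

    Assumes cost is proportional to pixel count; flip testing runs every
    scale twice (original and horizontally flipped input).
    """
    cost = sum(s * s for s in scales)
    return cost * (2 if flip else 1)


for s in SCALES:
    print(f"scale {s}: {int(BASE_W * s)} x {int(BASE_H * s)} pixels")
print("relative cost with flip:", relative_cost(SCALES, flip=True))
```

Under this rough model, the multi-scale + flip row of the table costs on the order of 17 single-scale passes per image, compared with 2 passes for flip alone.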