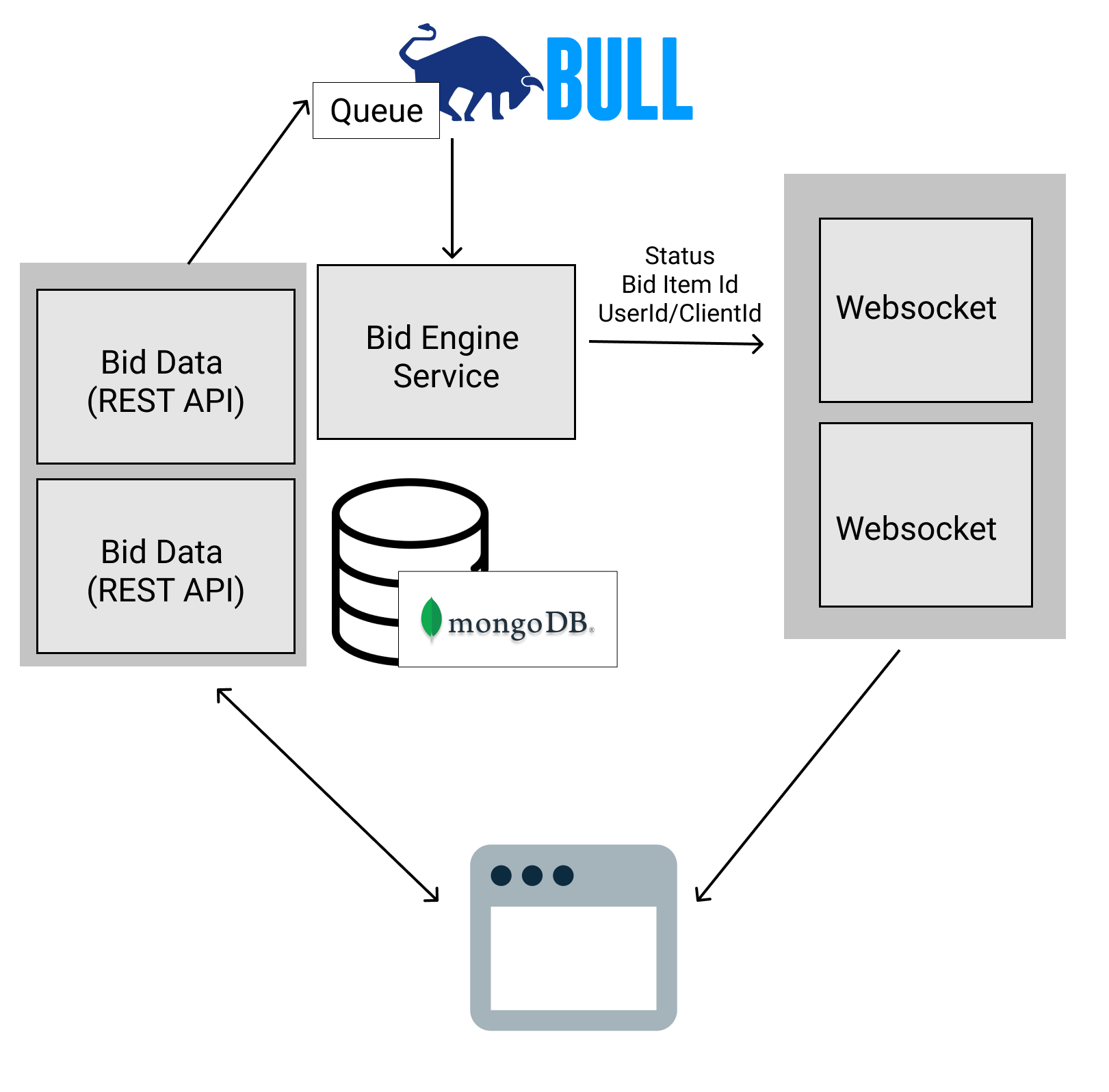

Scalable Bidding System With Microservices Architecture

An example of a frontend application that uses this service is bid-it-app.

All three main applications live in a single NestJS monorepo:

- REST API Source code

- Bid Engine Source code

- WebSocket server Source code

There are two ways to run this application:

- Docker (recommended)

- Manual

docker-compose --compatibility up -d

This starts 3 REST API services and 3 WebSocket servers, with Nginx as a load balancer in front of them.

You can access the REST API Swagger UI at http://localhost:3000/api

You can shut down the services with the command:

docker-compose down

- MongoDB

- Redis

- Install dependencies.

  yarn install

- Add a .env file at the root of the project with the following content:

  DEALS_DB_URL=mongodb://localhost:27017/deal
  USERS_DB_URL=mongodb://localhost:27017/user
  REPORTING_DB_URL=mongodb://localhost:27017/report
  REDIS_URL=redis://localhost:6379

- Start all the services.

  yarn start

- You can access the REST API Swagger UI at http://localhost:3000/api
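The four variables from the .env file can be validated once at startup, so that a missing value fails fast instead of surfacing later as a connection error. The following is a minimal sketch, not the project's actual configuration code: the `AppConfig` type and the `loadConfig` function are hypothetical names introduced for illustration.

```typescript
// Hypothetical config shape; field names are illustrative, not from the project.
type AppConfig = {
  dealsDbUrl: string;
  usersDbUrl: string;
  reportingDbUrl: string;
  redisUrl: string;
};

// Variable names as listed in the .env file.
const REQUIRED_KEYS = [
  "DEALS_DB_URL",
  "USERS_DB_URL",
  "REPORTING_DB_URL",
  "REDIS_URL",
] as const;

// Reads the required variables from an env-like object and throws
// if any of them is missing or empty.
function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    dealsDbUrl: env.DEALS_DB_URL as string,
    usersDbUrl: env.USERS_DB_URL as string,
    reportingDbUrl: env.REPORTING_DB_URL as string,
    redisUrl: env.REDIS_URL as string,
  };
}
```

In a Node.js process this would typically be called as `loadConfig(process.env)`, assuming the .env file has already been loaded into the environment.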

A reporting server (source) listens to Redis events and persists them to MongoDB.

Reports can then be generated with generate-report.ts.
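To illustrate the kind of aggregation a report step might perform over the persisted events, here is a small in-memory sketch. It does not reproduce generate-report.ts: the `BidEvent` and `DealReport` shapes and the `summarize` function are assumptions made for this example, and a plain array stands in for the MongoDB collection.

```typescript
// Hypothetical event shape; the real events may carry different fields.
type BidEvent = {
  dealId: string;
  userId: string;
  amount: number;
  accepted: boolean;
};

// Hypothetical per-deal summary.
type DealReport = {
  dealId: string;
  totalBids: number;
  acceptedBids: number;
  highestAccepted: number | null;
};

// Groups events by deal and counts total bids, accepted bids,
// and the highest accepted amount for each deal.
function summarize(events: BidEvent[]): DealReport[] {
  const byDeal = new Map<string, DealReport>();
  for (const event of events) {
    let report = byDeal.get(event.dealId);
    if (!report) {
      report = {
        dealId: event.dealId,
        totalBids: 0,
        acceptedBids: 0,
        highestAccepted: null,
      };
      byDeal.set(event.dealId, report);
    }
    report.totalBids += 1;
    if (event.accepted) {
      report.acceptedBids += 1;
      report.highestAccepted =
        report.highestAccepted === null
          ? event.amount
          : Math.max(report.highestAccepted, event.amount);
    }
  }
  return Array.from(byDeal.values());
}
```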

- Start all the services:

  docker-compose --compatibility up -d

- Generate test data, simulate 200 concurrent clients placing bids, and print the report to the console.

  ./run-simulation.sh

- Start all the services:

  yarn start

- (First time only) Generate all the deals:

  yarn setup

- Simulate many clients performing bidding:

  yarn simulate

- Generate reports:

  yarn report

- Both the REST API and WebSocket servers can easily be scaled horizontally.

- At the moment, a single queue is used to process all bids. This singleton design is intentional to avoid race conditions between bids.

- The single-queue design may hurt performance under very high load. One option to explore is to distribute bid processing across multiple queues, ensuring that all bids for a given deal always go to the same queue.
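The multi-queue idea in the last point can be sketched as follows. This is an exploratory, in-memory illustration only, not how the Bid Engine is implemented: the `Bid` type, `hashDealId`, `queueIndexFor`, and `PartitionedBidQueues` are hypothetical names, and promise chains stand in for real queues. Each deal ID is hashed to a fixed queue index, so bids for the same deal are still processed one at a time and in order, while bids for different deals can proceed in parallel.

```typescript
// Hypothetical bid shape used only for this sketch.
type Bid = { dealId: string; userId: string; amount: number };

// Deterministic FNV-1a-style hash over the UTF-16 code units of the deal ID.
function hashDealId(dealId: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < dealId.length; i++) {
    hash ^= dealId.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep as unsigned 32-bit
  }
  return hash;
}

// The same deal ID always maps to the same queue index.
function queueIndexFor(dealId: string, queueCount: number): number {
  return hashDealId(dealId) % queueCount;
}

class PartitionedBidQueues {
  private readonly queueCount: number;
  private readonly handler: (bid: Bid) => Promise<void>;
  // One promise chain per queue; each chain runs its bids serially.
  private readonly tails: Promise<void>[];

  constructor(queueCount: number, handler: (bid: Bid) => Promise<void>) {
    this.queueCount = queueCount;
    this.handler = handler;
    this.tails = Array.from({ length: queueCount }, () => Promise.resolve());
  }

  // Appends the bid to the chain selected by its deal ID.
  enqueue(bid: Bid): Promise<void> {
    const index = queueIndexFor(bid.dealId, this.queueCount);
    const result = this.tails[index].then(() => this.handler(bid));
    // Swallow errors on the chain itself so one failed bid
    // does not block later bids in the same queue.
    this.tails[index] = result.catch(() => undefined);
    return result;
  }
}
```

In a multi-process deployment the same routing function could pick among separately named queues in the queue backend. The trade-off is that a single very popular deal is still limited to the throughput of one queue.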