PyTorch-RL-IL (rlil): A PyTorch Library for Building Reinforcement Learning and Imitation Learning Agents

rlil is a library for reinforcement learning (RL) and imitation learning (IL) research.

rlil is derived from the Autonomous Learning Library (ALL).

Some modules, such as Approximation, Agent, and presets, are almost the same as in ALL.

For their basic concepts, see the original documentation: https://autonomous-learning-library.readthedocs.io/en/stable/.

Unlike ALL, rlil uses a distributed sampling method, like rlpyt and machina, which makes it easy to switch between offline and online learning.

rlil also uses the replay buffer library cpprb.
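A rough sketch of the distributed sampling idea, not rlil's actual implementation: several workers each run whole episodes with the current policy and hand the transitions back to a learner, which stores them in one buffer. `ToyEnv`, `collect_episode`, and `sample_in_parallel` are hypothetical names, and threads stand in for the separate processes a real system would use.

```python
import random
from concurrent.futures import ThreadPoolExecutor


class ToyEnv:
    """Hypothetical 1-D environment: each episode ends after `horizon` steps."""

    def __init__(self, horizon=5, seed=0):
        self.horizon = horizon
        self.rng = random.Random(seed)

    def reset(self):
        self.t = 0
        return 0.0

    def step(self, action):
        self.t += 1
        obs = self.rng.random()
        reward = -abs(action)
        done = self.t >= self.horizon
        return obs, reward, done


def collect_episode(policy, seed):
    """Run one full episode and return its (obs, act, rew, next_obs, done) tuples."""
    env = ToyEnv(seed=seed)
    obs, done, transitions = env.reset(), False, []
    while not done:
        action = policy(obs)
        next_obs, reward, done = env.step(action)
        transitions.append((obs, action, reward, next_obs, done))
        obs = next_obs
    return transitions


def sample_in_parallel(policy, num_workers):
    """Each worker collects one episode; the learner gathers them into one buffer."""
    buffer = []
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        episodes = pool.map(lambda seed: collect_episode(policy, seed), range(num_workers))
        for episode in episodes:
            buffer.extend(episode)
    return buffer


buffer = sample_in_parallel(policy=lambda obs: 0.5 * obs, num_workers=4)
print(len(buffer))  # 4 workers x 5 steps = 20
```

Because the learner only consumes a list of transitions, the same update loop could just as well read a fixed dataset, which is one way to see why switching between online and offline learning becomes easy with this design.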

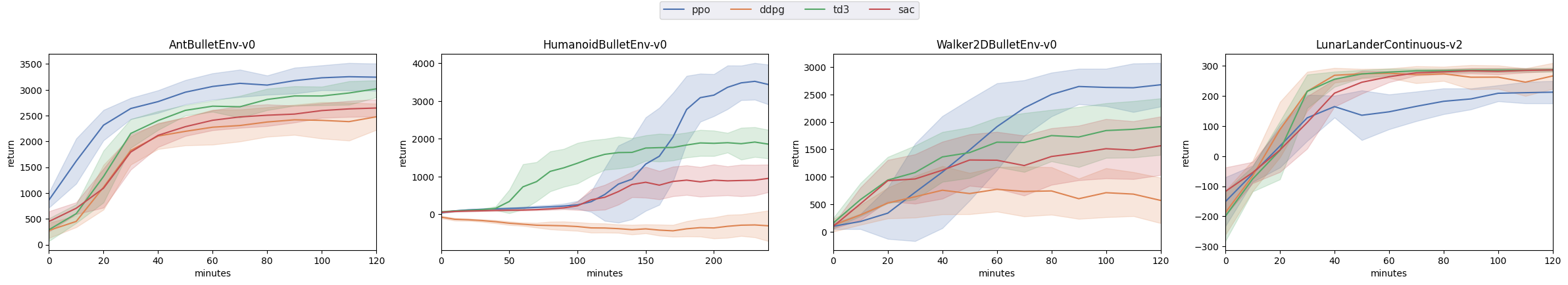

The following online RL algorithms do not require demonstrations.

You can test them by:

```bash
python scripts/continuous/online.py [env] [agent] [options]
```

- Vanilla Actor Critic (VAC), code
- Deep DPG (DDPG), code
- Twin Delayed DDPG (TD3), code
- Soft Actor Critic (SAC), code
- Proximal Policy Optimization (PPO), code
- Trust Region Policy Optimization (TRPO)
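To make one of these concrete, the TD3 critic target (clipped double-Q learning plus target policy smoothing, as described in the TD3 paper) can be written for a single transition as below. The scalar "networks" are hypothetical stand-in functions, and the default noise values follow the paper; they are not necessarily rlil's preset defaults.

```python
import random


def td3_target(reward, next_obs, done, actor_targ, q1_targ, q2_targ,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               act_low=-1.0, act_high=1.0, rng=random):
    """Critic target y for one transition, following the TD3 paper."""
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = max(act_low, min(act_high, actor_targ(next_obs) + noise))
    # Clipped double-Q: use the smaller of the two target critics.
    q_next = min(q1_targ(next_obs, next_action), q2_targ(next_obs, next_action))
    # No bootstrapping past a terminal state.
    return reward + gamma * (1.0 - float(done)) * q_next


# Hypothetical target networks replaced by simple functions for illustration.
y = td3_target(
    reward=1.0, next_obs=0.3, done=False,
    actor_targ=lambda s: 2.0 * s,
    q1_targ=lambda s, a: s + a,
    q2_targ=lambda s, a: s + a - 0.1,
    noise_std=0.0,  # disable smoothing noise so the result is deterministic
)
print(y)
```

Taking the minimum of two critics counters the overestimation bias that DDPG's single critic is prone to.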

The following model-based RL algorithm can be tested in the same way:

```bash
python scripts/continuous/online.py [env] [agent] [options]
```

- Neural Network Dynamics for Model-Based Deep Reinforcement Learning with Model-Free Fine-Tuning, code
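In that paper, the learned dynamics model is used inside a model predictive controller based on random shooting: sample many random action sequences, roll each one out through the model, and execute only the first action of the best sequence. The sketch below shows that planning step with a hypothetical hand-written model and reward in place of the neural network; it is not rlil's implementation.

```python
import random


def random_shooting_mpc(state, dynamics, reward_fn, horizon=10,
                        num_candidates=200, act_low=-1.0, act_high=1.0,
                        rng=random):
    """Return the first action of the best of `num_candidates` random sequences."""
    best_return, best_first_action = float("-inf"), None
    for _ in range(num_candidates):
        actions = [rng.uniform(act_low, act_high) for _ in range(horizon)]
        s, total = state, 0.0
        for a in actions:
            # Roll the candidate sequence out through the (learned) model.
            next_s = dynamics(s, a)
            total += reward_fn(s, a, next_s)
            s = next_s
        if total > best_return:
            best_return, best_first_action = total, actions[0]
    return best_first_action


# Hypothetical model: the action pushes a 1-D state; reward for being near 1.0.
action = random_shooting_mpc(
    state=0.0,
    dynamics=lambda s, a: s + 0.1 * a,
    reward_fn=lambda s, a, s2: -abs(s2 - 1.0),
    rng=random.Random(0),
)
print(action)
```

Replanning from scratch at every step keeps the controller robust to model errors, at the cost of many model evaluations per action.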

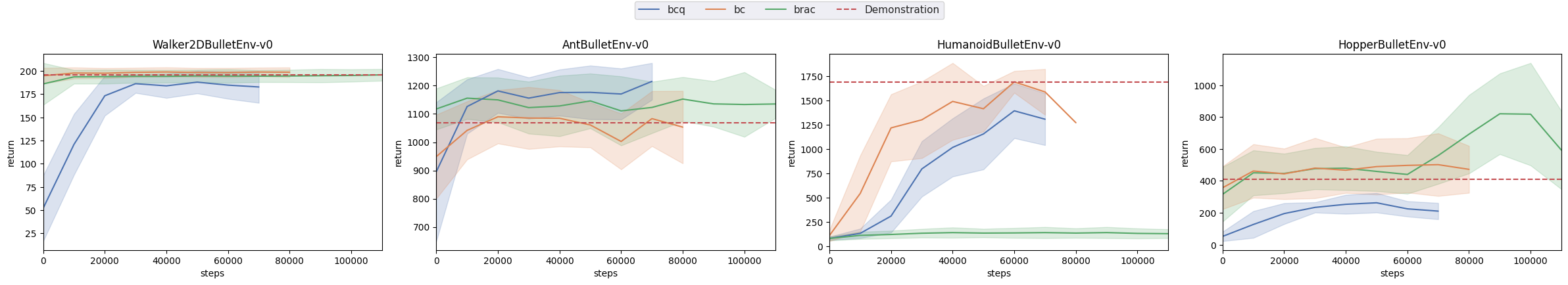

The following offline IL and RL algorithms train an agent from demonstrations alone and do not require any interaction with the environment.

You can test them by:

```bash
python scripts/continuous/online.py [env] [agent] [path to the directory which includes transitions.pkl]
```

These offline RL implementations may be incorrect, and their results do not match those reported in the original papers. Any contributions and suggestions are welcome.

- Batch-Constrained Q-learning (BCQ), code
- Bootstrapping Error Accumulation Reduction (BEAR), code
- Behavior Regularized Actor Critic (BRAC), code
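BEAR, as described in its paper, keeps the learned policy close to the data by penalizing the maximum mean discrepancy (MMD) between actions sampled from the policy and actions from the dataset. Below is a minimal sketch of the biased squared-MMD estimate on 1-D actions; the Gaussian kernel, the bandwidth `sigma`, and the sample values are illustrative choices, and this is not rlil's code.

```python
import math


def gaussian_kernel(x, y, sigma):
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))


def mmd_squared(xs, ys, sigma=1.0):
    """Biased estimate of MMD^2 between two samples of 1-D actions."""
    def mean_kernel(a, b):
        return sum(gaussian_kernel(p, q, sigma) for p in a for q in b) / (len(a) * len(b))
    # MMD^2 = E[k(x, x')] - 2 E[k(x, y)] + E[k(y, y')]
    return mean_kernel(xs, xs) - 2.0 * mean_kernel(xs, ys) + mean_kernel(ys, ys)


dataset_actions = [0.1, 0.2, 0.15, 0.05]
close_policy = [0.12, 0.18, 0.1, 0.07]
far_policy = [0.9, 0.8, 0.95, 0.85]
print(mmd_squared(close_policy, dataset_actions) < mmd_squared(far_policy, dataset_actions))  # True
```

In an actor update, this quantity would be added to the loss (or used as a constraint) so that the policy stays within the support of the behavior data.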

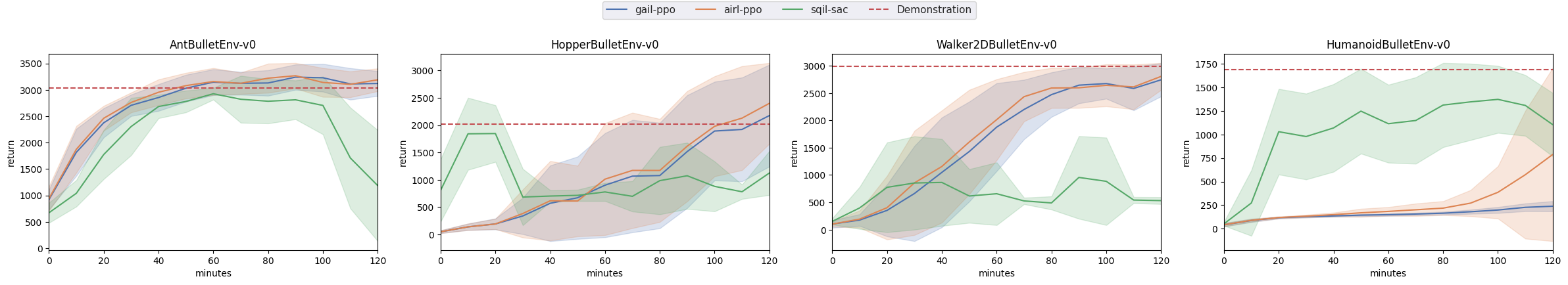

The following online IL algorithms train an agent using both demonstrations and interactions with the environment.

You can test them by:

```bash
python scripts/continuous/online_il.py [env] [agent (e.g. gail)] [base_agent (e.g. ppo)] [path to the directory which includes transitions.pkl]
```

- Generative Adversarial Imitation Learning (GAIL), code
- Learning Robust Rewards with Adversarial Inverse Reinforcement Learning (AIRL), code
- Soft Q Imitation Learning (SQIL), code
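The core of SQIL, as described in its paper, fits in a few lines: demonstration transitions are relabeled with reward 1, the agent's own transitions with reward 0, and each mini-batch is drawn half from each source before an ordinary off-policy update by the base agent. The sketch covers only the batch construction; the tuple layout and the `sqil_batch` helper are hypothetical.

```python
import random


def sqil_batch(demo_transitions, agent_transitions, batch_size, rng=random):
    """Build a balanced SQIL mini-batch with constant rewards.

    Transitions are (obs, action, reward, next_obs, done) tuples; their
    original rewards are ignored.
    """
    half = batch_size // 2
    demo = rng.sample(demo_transitions, half)
    agent = rng.sample(agent_transitions, batch_size - half)
    batch = [(o, a, 1.0, o2, d) for (o, a, _, o2, d) in demo]    # expert: r = 1
    batch += [(o, a, 0.0, o2, d) for (o, a, _, o2, d) in agent]  # agent: r = 0
    rng.shuffle(batch)
    return batch


demos = [(i, 0.5, 123.0, i + 1, False) for i in range(10)]
own = [(i, -0.5, -7.0, i + 1, False) for i in range(10)]
batch = sqil_batch(demos, own, batch_size=8, rng=random.Random(0))
print(sum(r for _, _, r, _, _ in batch))  # 4.0: half of the batch comes from demos
```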

Currently, DDPG, TD3, and SAC can be combined with M-step, PER, and Ape-X. To enable them, pass the n_step, prioritized, or use_apex argument to the preset. For example:

```python
from rlil.presets.continuous import ddpg

# DDPG with 5-step returns and prioritized experience replay
ddpg(n_step=5, prioritized=True)

# DDPG with Ape-X
ddpg(use_apex=True)
```
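With n_step, the critic learns from multi-step targets. The sketch below assumes the usual definition (the discounted sum of up to n rewards, cut off at the end of the episode, plus a bootstrapped value discounted by γ raised to the number of steps used); `n_step_target` is a hypothetical helper, not rlil's internal code.

```python
def n_step_target(rewards, dones, values, t, n, gamma):
    """Multi-step TD target for the transition starting at step t.

    rewards[i], dones[i]: reward and terminal flag of step i.
    values[i]: current value estimate of state i (len(rewards) + 1 entries).
    """
    ret, discount = 0.0, 1.0
    for k in range(n):
        i = t + k
        if i >= len(rewards):
            break
        ret += discount * rewards[i]
        discount *= gamma
        if dones[i]:
            return ret  # episode ended: no bootstrapping
    # Here discount == gamma ** steps, and the bootstrap state is t + steps.
    steps = min(n, len(rewards) - t)
    return ret + discount * values[t + steps]


rewards = [1.0, 2.0, 3.0]
dones = [False, False, True]
values = [10.0, 20.0, 30.0, 0.0]
print(n_step_target(rewards, dones, values, t=0, n=2, gamma=0.5))  # 1 + 0.5*2 + 0.25*30 = 9.5
```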

The Ape-X implementation differs from the original paper because rlil uses episodic training.

The implemented Ape-X is unstable and sensitive to the mini-batch size. See this issue.

- Noisy Networks for Exploration, code
- Prioritized Experience Replay (PER), code
- Multi-step learning (M-step), code
- Ape-X, code
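For reference, the proportional variant of prioritized experience replay samples transition i with probability P(i) = p_i^α / Σ_k p_k^α and corrects the resulting bias with importance-sampling weights w_i = (N · P(i))^(-β). In the plain-Python sketch below, the weights are normalized by the largest weight in the batch (a common implementation choice) and the α and β values are illustrative. rlil relies on cpprb for its replay buffers, so this is not its implementation.

```python
import random


def per_probabilities(priorities, alpha=0.6):
    """P(i) = p_i^alpha / sum_k p_k^alpha."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def per_sample(priorities, batch_size, alpha=0.6, beta=0.4, rng=random):
    """Sample indices proportionally to priority and return normalized IS weights."""
    probs = per_probabilities(priorities, alpha)
    n = len(priorities)
    indices = rng.choices(range(n), weights=probs, k=batch_size)
    weights = [(n * probs[i]) ** (-beta) for i in indices]
    max_w = max(weights)
    return indices, [w / max_w for w in weights]


print(per_probabilities([1.0, 1.0, 2.0, 4.0], alpha=1.0))  # [0.125, 0.125, 0.25, 0.5]
indices, weights = per_sample([1.0, 1.0, 2.0, 4.0], batch_size=4, rng=random.Random(0))
```

After each update, the sampled transitions' priorities would be set from their new TD errors, so surprising transitions are replayed more often.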

Some popular imitation learning algorithms, such as BC and GAIL, assume that the expert demonstrations come from the same MDP as the imitator. This assumption does not hold in many real-world scenarios, where discrepancies between the expert's and the imitator's MDPs are common. Therefore, rlil provides several PyBullet environments with modified dynamics for such settings. For example, the following code creates a PyBullet Ant environment whose front legs are half their usual length.

```python
import gym

from rlil.environments import ENVS

# Ant whose front legs are half their usual length
env = gym.make(ENVS["half_front_legs_ant"])
```

See rlil/environments/__init__.py for the available environments.

You can install from source:

```bash
git clone git@github.com:syuntoku14/pytorch-rl-il.git
cd pytorch-rl-il
pip install -e .
```

Follow the installation instructions above, and then get started with the scripts folder.

The scripts/continuous folder includes the training scripts for continuous environments. See the Algorithms section above for instructions on how to run them.

Example:

The following command uses PPO to train an agent for 60 minutes.

The --num_workers option specifies the number of workers for distributed sampling.

The --exp_info option is used to organize the results directory; it should be a one-line description of the experiment.

The results are saved in the directory runs/[exp_info]/[env]/[agent with ID].

```bash
cd pytorch-rl-il
python scripts/continuous/online.py ant ppo --train_minutes 60 --num_workers 5 --exp_info example_training
```
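Since every run lands in runs/[exp_info]/[env]/[agent with ID], finding the directory to pass to the scripts below can be scripted. This is a hypothetical helper, not part of rlil: it assumes only the directory layout described above, lists the run subdirectories newest first by modification time, and makes no assumption about how the IDs are formatted.

```python
from pathlib import Path


def list_runs(exp_info, env, root="runs"):
    """Return the agent run directories under root/exp_info/env, newest first."""
    env_dir = Path(root) / exp_info / env
    if not env_dir.is_dir():
        return []
    runs = [p for p in env_dir.iterdir() if p.is_dir()]
    return sorted(runs, key=lambda p: p.stat().st_mtime, reverse=True)


for run in list_runs("example_training", "AntBulletEnv-v0"):
    print(run)  # a path that can be passed to scripts/plot.py
```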

You can check the training progress using:

```bash
tensorboard --logdir runs/[exp_info]
```

and opening your browser to http://localhost:6006. TensorBoard records not only the learning curve but also the preset's parameters and settings related to the experiment, such as git diffs.

After training, you can plot the learning curve with scripts/plot.py:

```bash
python scripts/plot.py runs/[exp_info]/[env]/[agent with ID]
```

The figure of the learning curve will be saved as runs/[exp_info]/[env]/[agent with ID]/result.png.

As in ALL, you can watch the trained agent using:

```bash
python scripts/continuous/watch_continuous.py runs/[exp_info]/[env]/[agent with ID]
```

You can run the trained agent and save its trajectory with scripts/record_trajectory.py:

```bash
python scripts/record_trajectory.py runs/[exp_info]/[env]/[agent with ID]
```

A transitions.pkl file, which is a pickled object generated by cpprb.ReplayBuffer.get_all_transitions(), is then saved in runs/[exp_info]/[env]/[agent with ID].
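Because cpprb's get_all_transitions() returns a dict that maps each stored field to an array, the file can be inspected with the standard pickle module. A minimal sketch: the field names in the fake dataset below are assumptions (the real ones depend on how rlil configures its buffer), and loading a real file also requires NumPy, since it holds NumPy arrays.

```python
import os
import pickle
import tempfile


def load_transitions(path):
    """Load the dict of arrays saved as transitions.pkl."""
    with open(path, "rb") as f:
        return pickle.load(f)


def summarize(transitions):
    """Map each field name to its number of stored transitions."""
    return {key: len(value) for key, value in transitions.items()}


# Round trip with a fake, hypothetical dataset (real files hold NumPy arrays).
fake = {"obs": [[0.0], [1.0]], "act": [[0.1], [0.2]], "rew": [[1.0], [0.5]],
        "next_obs": [[1.0], [2.0]], "done": [[0.0], [1.0]]}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "transitions.pkl")
    with open(path, "wb") as f:
        pickle.dump(fake, f)
    loaded = load_transitions(path)
print(summarize(loaded))  # {'obs': 2, 'act': 2, 'rew': 2, 'next_obs': 2, 'done': 2}
```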

You can train an agent with imitation learning using the transitions.pkl.

Example:

The following commands use the agent trained in the previous PPO example.

```bash
# record trajectory
python scripts/record_trajectory.py runs/example_training/AntBulletEnv-v0/[ppo_ID]

# start SQIL training with SAC as the base agent
python scripts/continuous/online_il.py ant sqil sac runs/example_training/AntBulletEnv-v0/[ppo_ID] --exp_info example_sqil

# plot the result
python scripts/plot.py runs/example_sqil/AntBulletEnv-v0/[sqil-sac_ID]
```