This is the official PyTorch implementation of the CVPR 2024 paper "LAFS: Landmark-based Facial Self-supervised Learning for Face Recognition".

Our code is partly borrowed from DINO (https://github.com/facebookresearch/dino) and InsightFace (https://github.com/deepinsight/insightface).

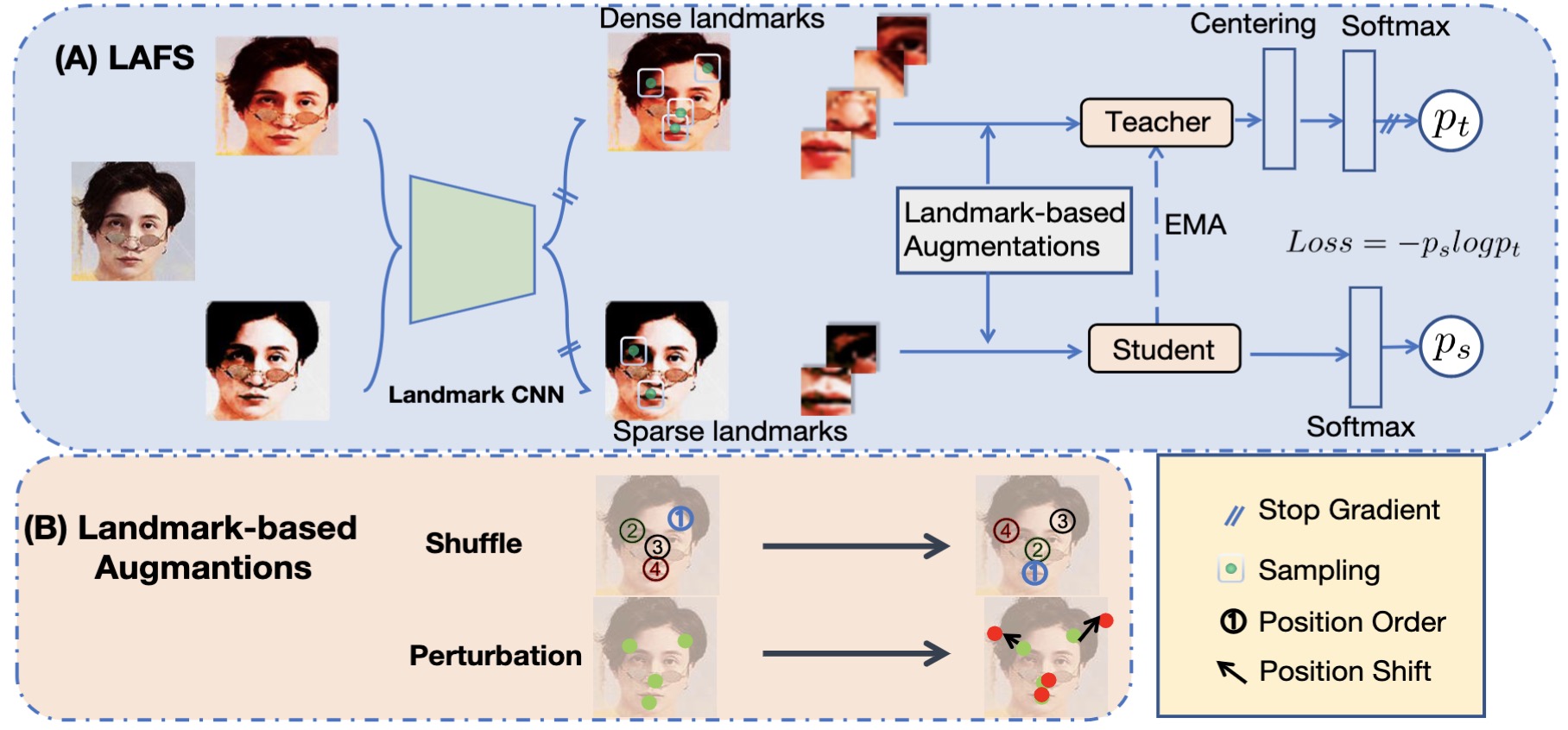

In this work, we focus on learning facial representations that can be adapted to train effective face recognition models, particularly in the absence of labels. First, compared with existing labelled face datasets, a vastly larger number of unlabelled faces exists in the real world. We explore how to learn from these unlabelled facial images through self-supervised pretraining in order to transfer generalized face recognition performance. Moreover, motivated by a recent finding that the salient facial areas are critical for face recognition, we construct the augmentations for pretraining from patches localized by extracted facial landmarks, rather than from randomly cropped image blocks. This enables our method, Landmark-based Facial Self-supervised learning (LAFS), to learn key representations that are more critical for face recognition. We also incorporate two landmark-specific augmentations that introduce more diversity of landmark information to further regularize the learning. With the learned landmark-based facial representations, we further adapt the representation for face recognition with a regularization that mitigates variations in landmark positions. Our method achieves significant improvements over the state of the art on multiple face recognition benchmarks, especially in the more challenging few-shot scenarios.
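To make the contrast with random crops concrete, here is a minimal NumPy sketch that cuts one fixed-size patch centred on each facial landmark. It is not the repository's implementation: the function name `landmark_patches`, the zero padding at the borders, and the optional Gaussian `jitter_std` (a hypothetical stand-in for a landmark-specific augmentation, not necessarily one of the two used in LAFS) are assumptions for illustration only.

```python
import numpy as np


def landmark_patches(image, landmarks, patch_size=16, jitter_std=0.0, rng=None):
    """Crop one square patch centred on each landmark (illustrative sketch).

    image:      H x W x C array.
    landmarks:  N x 2 array-like of (x, y) pixel coordinates.
    jitter_std: std (in pixels) of Gaussian noise added to the landmark
                positions; a hypothetical augmentation, off by default.
    Returns an array of shape N x patch_size x patch_size x C.
    """
    rng = rng if rng is not None else np.random.default_rng()
    h, w = image.shape[:2]
    half = patch_size // 2
    pts = np.asarray(landmarks, dtype=np.float64)
    if jitter_std > 0:
        pts = pts + rng.normal(0.0, jitter_std, size=pts.shape)
    # Zero-pad the borders so patches near the image edge keep a fixed size.
    padded = np.pad(image, ((half, half), (half, half), (0, 0)))
    patches = []
    for x, y in np.rint(pts).astype(int):
        # Clamp to the image. The window's top-left corner is (x - half, y - half)
        # in original coordinates, which is simply (x, y) in padded coordinates.
        x = int(np.clip(x, 0, w - 1))
        y = int(np.clip(y, 0, h - 1))
        patches.append(padded[y:y + patch_size, x:x + patch_size])
    return np.stack(patches)
```

Unlike a random crop, every patch here is tied to a specific facial location, so the same landmark index always refers to the same facial part across images.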

@InProceedings{Sun_2024_CVPR,
author = {Sun, Zhonglin and Feng, Chen and Patras, Ioannis and Tzimiropoulos, Georgios},
title = {LAFS: Landmark-based Facial Self-supervised Learning for Face Recognition},
booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
month = {June},
year = {2024}
}

Please consider citing our paper and starring the repo if you find it useful.

- LAFS Pretraining scripts

- DINO-face Pretraining scripts

- Checkpoints

- Finetuning scripts

- IJB datasets evaluation code

Please stay tuned for more updates.

- PyTorch Version

torch==1.8.1+cu111; torchvision==0.9.1+cu111

- Dataset

- MS1MV3 -- Please download it from InsightFace (https://github.com/deepinsight/insightface/tree/master/recognition/_datasets_). Note that MS1MV3 uses BGR channel order, while WebFace4M uses RGB order.

- WebFace4M -- I cannot release the .rec file of the whole dataset due to licensing restrictions. Please obtain the dataset from the official website (https://www.face-benchmark.org/download.html) and convert it into a .rec file using ima2rec.py.

- Few-shot WebFace4M -- To ensure that the experiments are reproducible, the few-shot WebFace4M subsets I filtered are released as follows:

| 1-shot | 2-shot | 4-shot | 10-shot | full |
|---|---|---|---|---|
| link | link | link | link | please use the original WebFace4M |

Please specify the data ratio for the experiment via the partition argument of the FaceDataset function. For example, for the 1%, 1-shot setting, set partition in FaceDataset to 0.01.
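This README does not spell out how partition is applied inside FaceDataset, so the following is only a hypothetical sketch of how a k-shot subset could be drawn reproducibly. It assumes partition is the fraction of identities to keep and samples `shots` images per kept identity with a fixed seed; `few_shot_subset` is an illustrative name, and the released subsets were not necessarily built this way.

```python
import random
from collections import defaultdict


def few_shot_subset(samples, shots, partition=1.0, seed=0):
    """Keep `shots` images for each identity in a `partition` fraction of identities.

    samples: list of (image_path, identity_label) pairs.
    Assumption: `partition` is a fraction of identities, not of images.
    """
    by_id = defaultdict(list)
    for path, label in samples:
        by_id[label].append(path)
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    ids = sorted(by_id)
    n_keep = min(len(ids), max(1, int(round(partition * len(ids)))))
    kept_ids = sorted(rng.sample(ids, n_keep))
    subset = []
    for label in kept_ids:
        paths = sorted(by_id[label])  # sort first so sampling does not depend on input order
        chosen = rng.sample(paths, min(shots, len(paths)))
        subset.extend((p, label) for p in chosen)
    return subset
```

Sorting identities and paths before sampling keeps the result identical across runs for the same seed, regardless of how the input list was ordered.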

- SSL Pretraining Command:

Before you start self-supervised pretraining, please obtain the landmark model weights trained on MS1MV3 or WebFace4M and specify their path via --landmark_path:

- Part-fViT trained on MS1MV3 (IJB-C TAR@FAR=1e-4: 97.29)

- Part-fViT trained on WebFace4M (IJB-C TAR@FAR=1e-4: 97.40)

python -m torch.distributed.launch --nproc_per_node=2 lafs_train.py

Note: on 2 × A100 GPUs (40 GB), the total pretraining time is around 2-3 days.
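Since the pretraining code is partly borrowed from DINO, the following NumPy sketch shows the standard DINO self-distillation loss for a single student/teacher view pair: the teacher output is centred and sharpened with a low temperature, and the student is trained with cross-entropy against it. Whether LAFS modifies this objective is not stated here, so treat it as background only. The temperatures (0.1 student, 0.04 teacher) and centre momentum (0.9) are DINO's defaults; the function names are illustrative.

```python
import numpy as np


def log_softmax(logits, temperature):
    """Numerically stable log-softmax of logits / temperature along the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def dino_loss(student_logits, teacher_logits, center,
              student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between the centred, sharpened teacher and the student.

    student_logits, teacher_logits: B x K projection-head outputs for two
    different views of the same images; center: length-K vector.
    """
    teacher_probs = np.exp(log_softmax(teacher_logits - center, teacher_temp))
    student_log_probs = log_softmax(student_logits, student_temp)
    return float(-(teacher_probs * student_log_probs).sum(axis=-1).mean())


def update_center(center, teacher_logits, momentum=0.9):
    """EMA of the mean teacher output; centring helps prevent collapse."""
    return momentum * center + (1.0 - momentum) * teacher_logits.mean(axis=0)
```

In full DINO training, the loss is averaged over all pairs of teacher global views and student views (skipping identical views), the teacher receives no gradient, and its weights follow the student's through an exponential moving average.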

- Supervised finetuning Command:

The difference in training settings between MS1MV3 and WebFace4M is that MS1MV3 uses a stronger mixup probability of 0.2 and RandAugment with a magnitude of 2, while WebFace4M uses 0.1 for both the mixup probability and the RandAugment magnitude.
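As a hedged illustration of what the mixup probability controls, here is a generic batch-level mixup in NumPy that fires with probability `mixup_prob`. The dictionary only transcribes the mixup probabilities stated in this README (0.2 for MS1MV3, 0.1 for WebFace4M); its keys, the Beta parameter `alpha=0.2`, and the function name `maybe_mixup` are assumptions, not options of train_largescale.py.

```python
import numpy as np

# Mixup probabilities stated in the README; the key names are hypothetical.
MIXUP_PROB = {"MS1MV3": 0.2, "WebFace4M": 0.1}


def maybe_mixup(images, labels, mixup_prob, alpha=0.2, rng=None):
    """With probability `mixup_prob`, blend the batch with a shuffled copy of itself.

    images: B x ... float array; labels: length-B integer identity labels.
    Returns (images, labels_a, labels_b, lam).
    """
    rng = rng if rng is not None else np.random.default_rng()
    if rng.random() >= mixup_prob:
        # No mixup for this batch: both label sets are the originals, lam = 1.
        return images, labels, labels, 1.0
    lam = float(rng.beta(alpha, alpha))
    perm = rng.permutation(len(images))
    mixed = lam * images + (1.0 - lam) * images[perm]
    return mixed, labels, labels[perm], lam
```

The training loss is then `lam * loss(out, labels_a) + (1 - lam) * loss(out, labels_b)`. Passing `mixup_prob=0.0` always returns the batch unchanged, which corresponds to the flip-only setting described below.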

Please also note the colour channel order of these datasets: MS1MV3 uses BGR order.
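If you need to bring MS1MV3 (BGR) and WebFace4M (RGB) images into the same order yourself, reversing the channel axis is enough. This is a minimal NumPy sketch with a hypothetical helper name, `to_rgb`; whether the repository's data loader already performs this conversion is not stated here.

```python
import numpy as np


def to_rgb(image, source_order):
    """Return an H x W x 3 image in RGB order.

    source_order: "BGR" (MS1MV3) or "RGB" (WebFace4M).
    """
    if source_order == "BGR":
        # Reverse the channel axis; .copy() yields a contiguous array, which
        # some downstream code (e.g. torch.from_numpy) requires.
        return image[..., ::-1].copy()
    if source_order == "RGB":
        return image
    raise ValueError(f"unknown channel order: {source_order!r}")
```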

Before you run the following command, please set the dataset path (--dataset_path), the SSL-pretrained model (--model_dir), and the model from stage 1 (--pretrain_path).

If you want to train the model with flip augmentation only, please disable mixup and the other augmentations by setting random_resizecrop and rand_au to False in the FaceDataset function and setting mixup-prob to 0.0.

python -m torch.distributed.launch --nproc_per_node=2 --nnodes=1 --node_rank=0 --master_port 47771 train_largescale.py

Please let me know if you run into any problems using this code, as the cleaned-up code has not been tested. I will keep updating the usage instructions. Thank you!

python IJB_evaluation.py

Please specify model_path and image_path in the file. You can download the IJB data from InsightFace.
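The IJB-C numbers quoted in this README are TAR@FAR=1e-4: the fraction of genuine pairs accepted at the score threshold where only 0.01% of impostor pairs are accepted. The sketch below computes this metric from pair similarity scores with a simple threshold rule. It is a simplified stand-in, and results can differ slightly from the ROC-curve-based computation in InsightFace's IJB evaluation (for example, when scores are tied). The function name `tar_at_far` is illustrative.

```python
import numpy as np


def tar_at_far(scores, labels, far=1e-4):
    """True accept rate at a target false accept rate.

    scores: similarity per pair (higher means more similar).
    labels: 1 for genuine pairs, 0 for impostor pairs.
    A pair is accepted when its score is strictly above the threshold.
    """
    scores = np.asarray(scores, dtype=np.float64)
    labels = np.asarray(labels).astype(bool)
    genuine, impostor = scores[labels], scores[~labels]
    impostor_desc = np.sort(impostor)[::-1]
    # At most k impostors may lie above the threshold, so pick the (k+1)-th highest.
    k = int(np.floor(far * len(impostor)))
    threshold = impostor_desc[k] if k < len(impostor) else -np.inf
    return float((genuine > threshold).mean())
```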

| Training set | Pretrained | Model | IJB-B (TAR@FAR=1e-4) | IJB-C (TAR@FAR=1e-4) |
|---|---|---|---|---|
| WebFace4M | No | Part fViT | 96.05 | 97.40 |
| WebFace4M | LAFS | Part fViT | 96.28 | 97.58 |

| Pretraining Set | SSL Method | Model |
|---|---|---|
| 1-shot WebFace4M | DINO | fViT |
| 1-shot WebFace4M | LAFS | Part fViT |
| MS1MV3 | LAFS | --- |

This project is licensed under the terms of the MIT license.