- NOTE

- Introduction

- Technical overview:
  - architecture
  - setup instructions
  - testing
  - debugging

- How to use your install!

- Contributing and communications

As of September 2021, work on the project is suspended. I came to the conclusion that other people's alternative search projects may have better chances of succeeding, and that my own time is (at least for now) better spent elsewhere. That being said, I learned a lot working on this, and it was very satisfying.

Do contact me if you have questions about running the whole thing or its internal workings!

ActualScan is a smart (analytic) Web search engine with infinitely sortable results. It focuses on informative and niche websites, online discussions and articles. It's good for exploring what people have to say about any topic. Read more on the visions for social indexing and analytic results on the blog.

ActualScan is free software (AGPL v3): you can run your own instance and review and modify the code, as long as you also make the source available to users.

Currently indexing should work decently on many WordPress sites, some media and forum sites, and Reddit (optional: you have to use your own Reddit API key).

You can follow the blog with the email newsletter or RSS. Also see the contributing info at the end.

Under the hood ActualScan is a bunch of services written in Python, Java and some Common Lisp. The whole stack is provided with two orchestration schemes, Docker Compose and Ansible.

Docker Compose is intended for testing and developing ActualScan code on your local machine. We try to include as much production-like network security (TLS etc.) as possible, to test that everything works, but overall this version is not intended for serious production use.

The Ansible version, on the other hand, should offer a relatively plug-and-play experience where you provide your servers and get a search engine. Due to some recent serious changes in the stack, and apparently changes in Red Hat's CentOS support guarantees, it is currently not up to date. It is possible to go fully Ansible in the future, but then we would require using virtual machines for local development.

Important note: for sites' search pages only, the crawler ignores robots.txt (the unofficial standard for telling crawlers what to crawl). This is done because website owners are unlikely to have our use case in mind when they block robots specifically from their search pages. (Search pages are useless for most existing crawlers and search engines.) If sites (also) block their content pages from robots, we won't save or index them anyway. This is unlikely to get you into any trouble if you play with ActualScan casually on a local machine, but consider yourself warned. In the future there will be a config option to disable this.
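To make the policy above concrete, here is a minimal Python sketch of the decision it describes. It is an illustration only, not the crawler's actual code (the real crawler is the StormCrawler topology written in Java). The `may_fetch` function, the `is_search_page` flag and the `example-crawler` user agent are all made up for this example.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-crawler"  # hypothetical user agent name


def may_fetch(url: str, robots_txt: str, is_search_page: bool) -> bool:
    """Return True if the policy described above would allow fetching `url`."""
    if is_search_page:
        # Search pages: robots.txt is deliberately not consulted.
        return True
    # Content pages: respect whatever robots.txt says.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(USER_AGENT, url)


robots = """User-agent: *
Disallow: /search
Disallow: /private/
"""

# The search page is fetched even though /search is disallowed.
print(may_fetch("https://example.com/search?q=twenty+cats", robots, is_search_page=True))
# A blocked content page is skipped, an unblocked one is fetched.
print(may_fetch("https://example.com/private/post-1", robots, is_search_page=False))
print(may_fetch("https://example.com/articles/post-2", robots, is_search_page=False))
```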

Here is a guide to the code, if you want to explore it. The list goes by the Docker Compose container names.

- Website: a Django project, at `python_modules/ascan`. The `scan` package (app) contains the main search interface and classes for controlling the crawling/scanning process. The `manager` package contains the user interface for managing sites, tags and other entities. The `bg` package is for everything else, like user profiles, autocomplete etc. This partitioning scheme isn't ideal, but a replacement would have to be clearly better and for the long term.

- Maintenance daemon: a constant worker supervising scans (starting, finishing...), sending WebSocket updates etc. The code is in `python_modules/ascan/maintenance.py`.

- Speechtractor: a Lisp HTTP service used by the crawler. Gets raw HTML, extracts parsed text, URLs, author info and such. The code is in `lisp/speechtractor`. The continued use of Common Lisp is not certain. It has already been abandoned for Omnivore; the reasoning with pros and cons may appear on the blog at some point.

- Omnivore2: a Python service used by the crawler and working on the index in the background. It gets the articles, parses them with spaCy, splits them into chunks of a couple of sentences, loosely called "periods", and applies various text analytics. The texts are then annotated with this info in Solr and it can be used freely for sorting results. The code lives in `python_modules/omnivore2`.

- A StormCrawler-based crawler (the zookeeper, nimbus and other Storm cluster members): this does the actual crawling and fetching pages with reasonable politeness. The process is tightly controlled and monitorable from the website through objects stored in PostgreSQL. The relevant Storm topology code lives in `java/generalcrawl`. There used to exist a convoluted Scrapy spider for this which became unworkable. Not all functionality has been ported, which is a big priority now.

- Redditscrape: a PRAW-based script, working continuously in parallel to the regular Web crawler. It uses the Reddit API instead of HTML scraping. The code is in `python_modules/ascan/reddit_scraper.py`.

- The Selenium instance. This is needed for parsing pages relying on JavaScript, especially search pages. Currently not used because the relevant crawler code needs to be ported from the old Scrapy spider.

- The databases: Solr for storing and searching web pages, Redis for some ephemeral flags and data used by Python code, PostgreSQL for everything else.
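To give a feel for what splitting an article into "periods" means, here is a rough Python sketch. It is not the Omnivore2 code: the real service gets its sentences from spaCy, while this example assumes the sentences are already split. The function name `split_into_periods` and the chunk size of 3 are assumptions made for the illustration.

```python
from typing import List


def split_into_periods(sentences: List[str], size: int = 3) -> List[str]:
    """Group consecutive sentences into chunks ("periods") of at most `size`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    # Step through the sentence list `size` items at a time and join each slice.
    return [
        " ".join(sentences[i:i + size])
        for i in range(0, len(sentences), size)
    ]


article = [
    "Cats sleep a lot.",
    "Twenty cats sleep even more.",
    "Nobody has counted the hours.",
    "Dogs disagree.",
]
for period in split_into_periods(article):
    print(period)
```

Each such chunk would then be the unit that gets analyzed and annotated, so results can be sorted at a finer grain than the whole article.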

Sorry that this is a longish, somewhat manual process. If you're not sure what you're doing, don't! The experience should be gradually improved when the project matures.

- These instructions are written for Linux, but everything we use is cross-platform. Install Docker and Docker Compose. You will also need OpenSSL, JDK 11+ and Apache Maven installed.

- Clone this repository. Make a copy of the `.env.sample` file and name it `.env`.

- Open that file and follow the instructions, setting up passwords, secrets etc. for the services. Never reuse those in production!

- Create the self-signed SSL certificates for communications between services. (These are different from public-facing certs you would use when putting ActualScan on the Web.) In each case use the same passphrase that you set as the `KEYSTORE_PASSWORD` variable in your `.env` file. From the `certs/dev` directory run:

      openssl req -x509 -newkey rsa:4096 -keyout ascan_dev_internal.pem -out ascan_dev_internal.pem \
        -config openssl.cnf -days 9999
      # Make a copy of the key without the passphrase:
      openssl rsa -in ascan_dev_internal.pem -out ascan_dev_internal_key.key
      openssl pkcs12 -export -in ascan_dev_internal.pem -inkey ascan_dev_internal.pem \
        -out ascan_dev_ssl_internal.keystore.p12 -name "ascan-solr"

- Compile the Java package with the crawler topology. From `java/generalcrawl` run:

      mvn compile
      mvn package

- Now you can build the services with `docker-compose build` in the main repo directory.

- Before starting the website, perform the necessary database migrations like so. The last command will walk you through creating the admin account.

      docker-compose run website python manage.py makemigrations scan
      docker-compose run website python manage.py makemigrations manager
      docker-compose run website python manage.py makemigrations bg
      docker-compose run website python manage.py makemigrations
      docker-compose run website python manage.py migrate
      docker-compose run website python manage.py createsuperuser

- Finally, you can issue the `docker-compose up` command. Your local ActualScan instance is now up and running!

Troubleshooting

- If you paste the first OpenSSL command and it does nothing (says `req: Use -help for summary.`), try retyping it manually. See here for a similar problem.

- If you use and enforce SELinux, the containers may have problems with reading from disk inside them. The usual symptom is Python complaining about unimportable modules. You can change the rules appropriately by running `chcon -Rt svirt_sandbox_file_t .` (probably as sudo) in the project directory.

- Storm and the `nimbus` container can crash after restarting if you don't run `docker-compose down` after stopping everything. You probably have to run `docker-compose down` before running `docker-compose up` again.
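If the setup fails early, it may be worth confirming that the tools named in the setup instructions are actually on your `PATH`. The small Python script below is a convenience sketch and not part of the repository. The executable names are assumptions based on the commands used in this README, and the script does not check versions (for example that the JDK is 11 or newer).

```python
import shutil
from typing import Dict, Iterable, List, Optional

# Executable names assumed from the commands used in the setup instructions.
REQUIRED_TOOLS = ("docker", "docker-compose", "openssl", "java", "mvn")


def find_tools(names: Iterable[str] = REQUIRED_TOOLS) -> Dict[str, Optional[str]]:
    """Map each tool name to its full path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(found: Dict[str, Optional[str]]) -> List[str]:
    """Return the names of the tools that could not be located."""
    return [name for name, path in found.items() if path is None]


if __name__ == "__main__":
    found = find_tools()
    for name, path in found.items():
        print(f"{name}: {path or 'NOT FOUND'}")
    missing = missing_tools(found)
    if missing:
        print("Missing tools:", ", ".join(missing))
```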

You can run all existing tests in the containers with `bash run-tests-docker-compose.sh`. The containers need to be up. Python services use pytest; Common Lisp tests are scripted with the fiasco package.

- If you want to dig into some failing tests with

pdbin one container,docker-compose exec website pytest -x --pdbcan be useful (in this case for thewebsiteservice). - To plug directly into the PostgreSQL console, use

docker-compose exec postgres psql -U <your-postgres-username>. - To get more logs from the

redditscrapeservice, change-L INFOin its entry indocker-compose.ymlto-L DEBUG. Foromnivore2this is controlled by the--log-level=debug(you may replacedebugwithinfoetc.) parameter. - You sometimes can also see more logs from a service with

docker-compose logs <container_name>. - Use the Storm UI available at

http://localhost:8888. There you inspect the configuration, thecrawltopology, change its log level and so on. - To directly inspect the Storm topology (crawler) logs, modify this snippet. The exact directory changes depending on the timestamp when the crawler topology started.

docker-compose exec storm_supervisor ls /logs/workers-artifacts

docker-compose exec storm_supervisor cat /logs/workers-artifacts/crawl-1-<THIS PART CHANGES>/6700/worker.logAfter following the setup instructions you can navigate to the website address (localhost:8000 for the

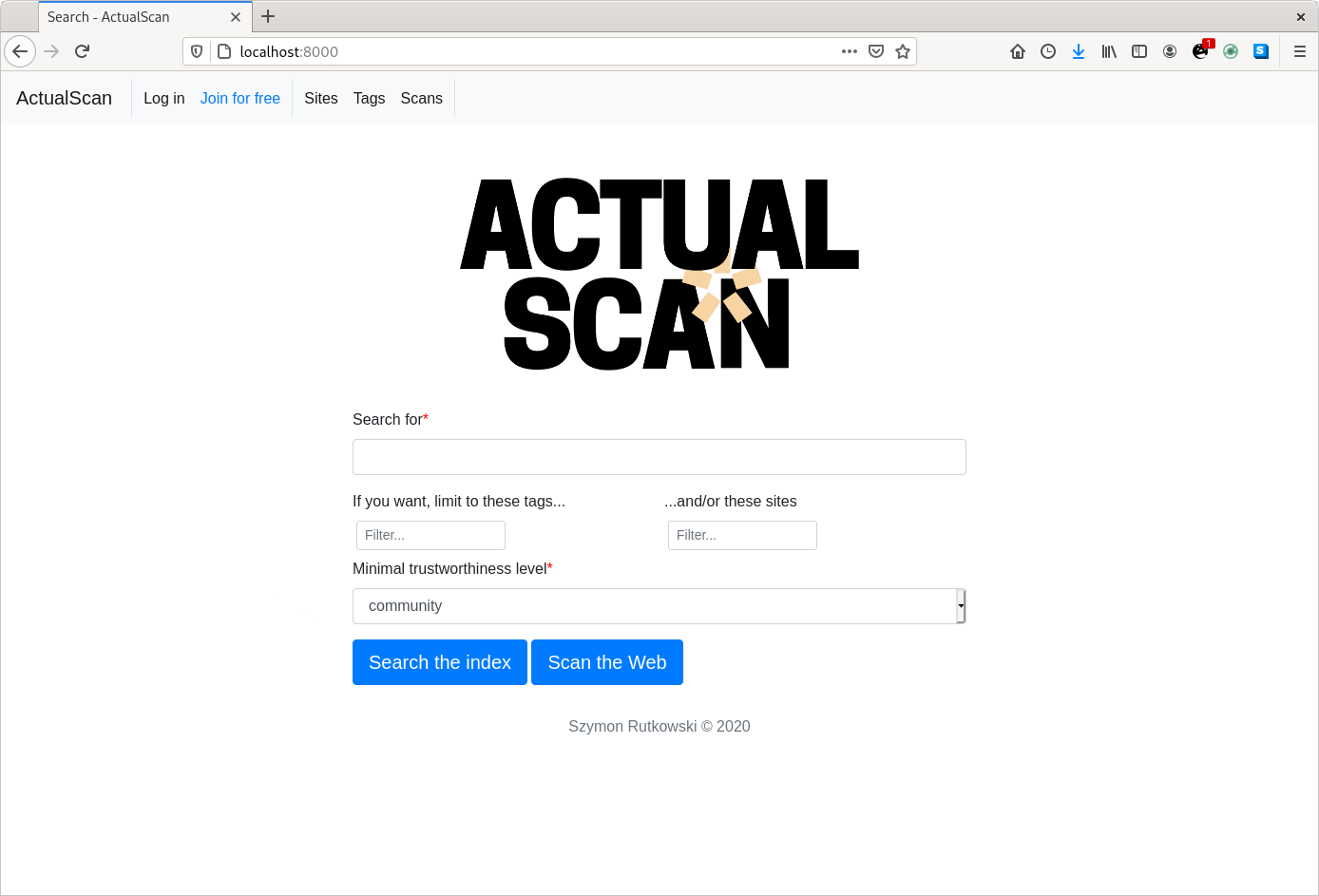

Docker Compose version) in your browser. You will see something like this:

The Search the index button searches the already indexed pages. The Scan the Web button scans the selected sites (and/or sites with selected tags) for the query phrase. But for now, you have no tags or sites in your database. Let's change that.

Log in to the admin account that you created (you may also register a new user, if you configured email properly). Go to the Tags tab on the website. Create some tags that you want to classify sites with: for example technology, news etc.

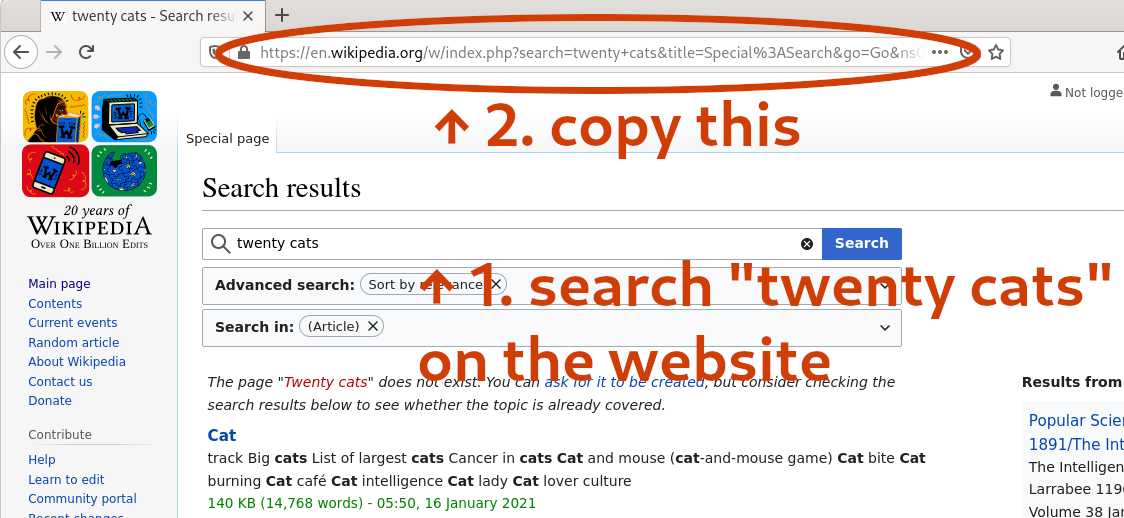

Now go to the Sites tab and click Add a new site. ActualScan does selective crawling by making use of the sites' own search function. (Crawling them traditionally is also meant to be possible; this will be ported soon.) You normally add a site by typing twenty cats into its search box, then clicking Search or an equivalent button on the website. Then copy the URL from your browser into the Add site form in the ActualScan interface.

Because ActualScan performs smart text extraction and analysis, not all websites will be successfully crawled. Their number will increase with improvements in the crawler and Speechtractor. For now, you can try these websites:

- https://www.cnet.com/search/?query=twenty+cats
- https://www.newscientist.com/search/?q=twenty+cats&search=&sort=relevance
- https://www.reuters.com/search/news?blob=twenty+cats
- https://www.tomshardware.com/search?searchTerm=twenty+cats
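The "twenty cats" phrase works as a placeholder that marks where the query goes in the site's search URL. The Python sketch below shows how such a sample URL could be turned into a search URL for another query. This is only our illustration of the idea: the function `build_search_url` and the set of recognized placeholder encodings are assumptions, and the actual crawler may handle this differently.

```python
from urllib.parse import quote, quote_plus

# Assumed encodings of the "twenty cats" placeholder, each paired with
# the matching function for encoding the real query.
PLACEHOLDER_ENCODERS = {
    "twenty+cats": quote_plus,  # spaces encoded as "+"
    "twenty%20cats": quote,     # spaces encoded as "%20"
}


def build_search_url(sample_url: str, query: str) -> str:
    """Replace the 'twenty cats' placeholder in `sample_url` with `query`."""
    for placeholder, encode in PLACEHOLDER_ENCODERS.items():
        if placeholder in sample_url:
            return sample_url.replace(placeholder, encode(query))
    raise ValueError("sample URL does not contain the 'twenty cats' placeholder")


print(build_search_url("https://www.cnet.com/search/?query=twenty+cats", "curiosity rover"))
```

Using a distinctive two-word phrase makes the placeholder easy to find in the URL and also reveals how the site encodes spaces.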

You can also add subreddits (if you configured Reddit API in .env).

The interface should be able to guess the site's homepage address for you. Select one of the content types (blog, forums, media, social) -- this is used by Speechtractor to guide the text and metadata extraction. Select at least one tag for the website. Click Add the site.

After adding the sites you can return to the ActualScan homepage. Type your query (for example curiosity) into the search box and click the Scan the Web button. This will create a new scan and take you to its monitoring page. You can wait for the scan to finish, or click Search the index now even while it's still working.

Once you have more than 10 results for a query, custom ranking comes into play. Use the sliders to the right to change the scoring rules in real time. For example, mood refers to positive/negative sentiment detected in the text. You can set a minimum and a maximum value for a parameter, and adjust its weight (if you set a higher weight, text fragments with higher values of the parameter will come to the top).

Click on Add rule at the bottom of the slider column to name and add the rule permanently to your ActualScan installation. You can also edit, copy, paste and share the rule strings next to that button, such as `sl,*,*,2;wl,9,*,5;awtf,*,0.4,2;neg,*,0.35,0;pos,*,0.45,0`.
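If you want to inspect or generate rule strings outside the interface, a small parser can help. The Python sketch below rests on a guess about the format that this README does not spell out: each `;`-separated part is read as `name,minimum,maximum,weight`, with `*` meaning "no limit". The `Rule` class and `parse_rules` function are hypothetical names, not part of ActualScan.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    name: str
    minimum: Optional[float]  # None means no lower limit ("*")
    maximum: Optional[float]  # None means no upper limit ("*")
    weight: float


def _bound(text: str) -> Optional[float]:
    """Convert a bound field to a float, treating "*" as unbounded."""
    return None if text == "*" else float(text)


def parse_rules(rule_string: str) -> List[Rule]:
    """Parse a rule string, ASSUMING the field order name,min,max,weight."""
    rules = []
    for part in rule_string.strip().split(";"):
        fields = part.split(",")
        if len(fields) != 4:
            raise ValueError(f"expected 4 comma-separated fields, got: {part!r}")
        name, minimum, maximum, weight = fields
        rules.append(Rule(name, _bound(minimum), _bound(maximum), float(weight)))
    return rules


for rule in parse_rules("sl,*,*,2;wl,9,*,5;awtf,*,0.4,2;neg,*,0.35,0;pos,*,0.45,0"):
    print(rule)
```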

- Main needs and plans for the project are communicated with GitHub issues.

- Currently the main channel for suggestions is email (szymon atsign szymonrutkowski.pl) or GitHub issues.