Point Cloud Registration and Change Detection in Urban Environment Using an Onboard Lidar Sensor and MLS Reference Data

Supplementary material to our paper in the International Journal of Applied Earth Observation and Geoinformation, 2022

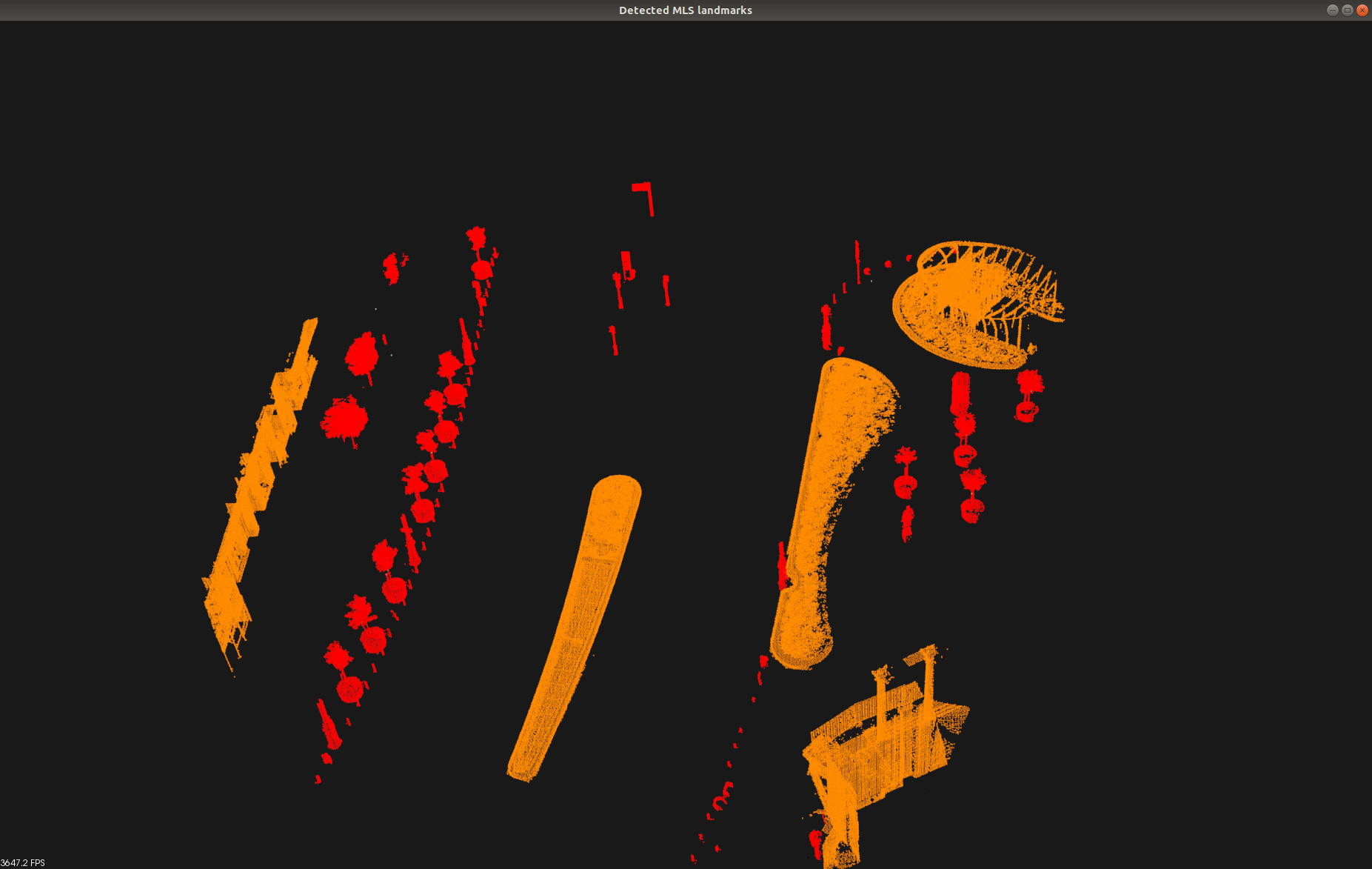

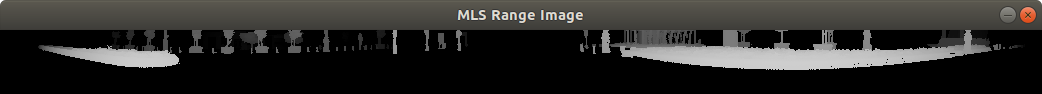

Left: MLS point cloud obtained in Kálvin square, Budapest, Hungary.

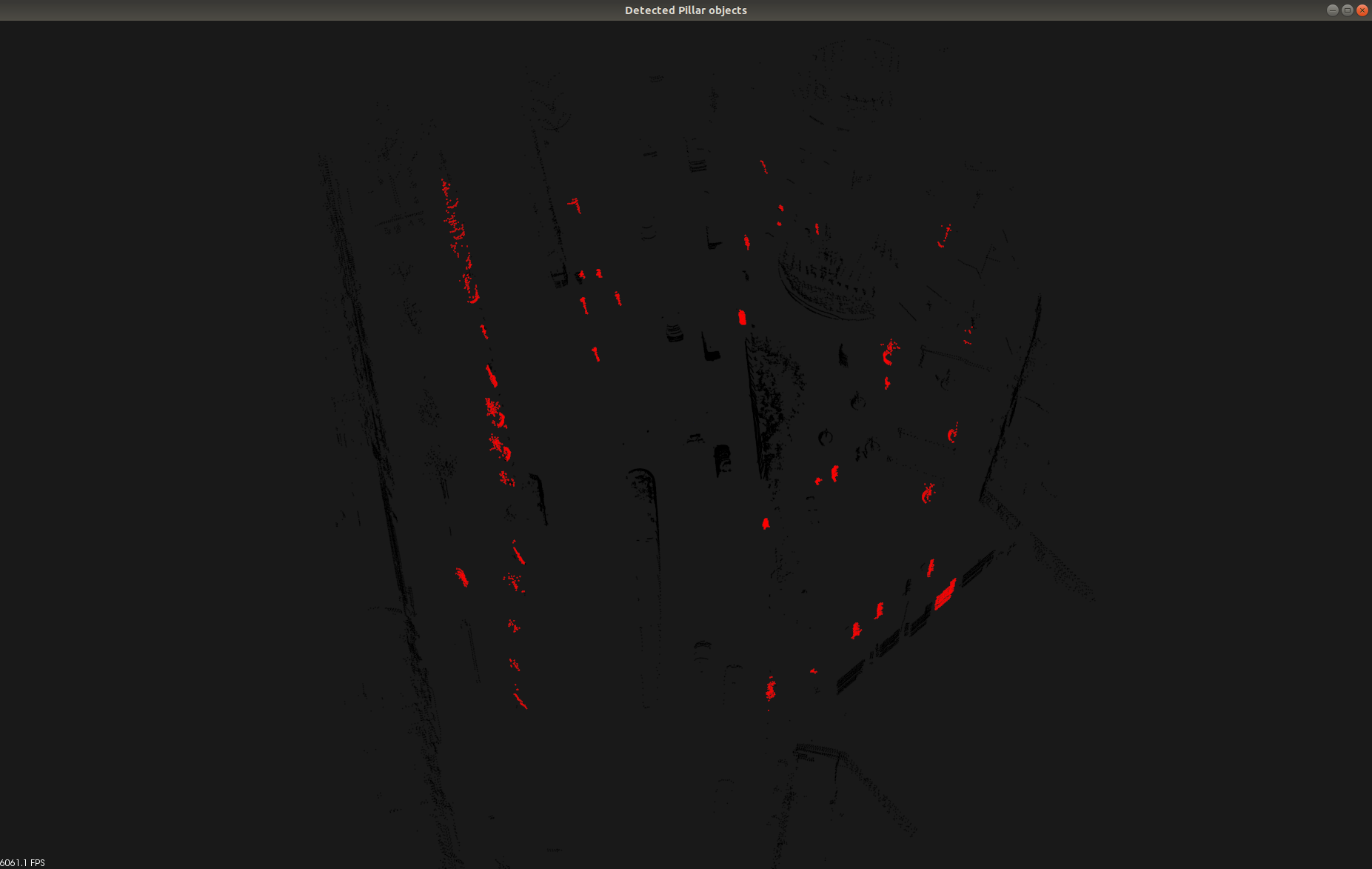

Right: Point cloud frame captured by a Velodyne HDL64E sensor in Kálvin square, Budapest, Hungary.

The full dataset used in the paper can be downloaded from this link.

The description of the dataset and its usage is available here.

If you find this work helpful for your research, or use part of the code, please cite our paper:

@article{lidar-scu,
  title = {Point cloud registration and change detection in urban environment using an onboard Lidar sensor and MLS reference data},
  journal = {International Journal of Applied Earth Observation and Geoinformation},
  volume = {110},
  pages = {102767},
  year = {2022},
  issn = {1569-8432},
  doi = {https://doi.org/10.1016/j.jag.2022.102767},
  url = {https://www.sciencedirect.com/science/article/pii/S0303243422000939},
  author = {Örkény Zováthi and Balázs Nagy and Csaba Benedek},
}

The code was written in C++ and tested on a desktop computer with Ubuntu 18.04. Please carefully follow our installation guide.

- PCL-1.8.1
- Eigen3
- OpenCV
- CMake

$ sudo apt install libeigen3-dev libpcl-dev

Please follow the instructions of this tutorial.

- Clone this repository

- Build the project:

$ mkdir build && cd build

$ cmake ..

$ make -j10

- Run the project on sample demo data:

$ ./Lidar-SCU

|--project_root:

|--Data:

|--Samples:

|--Velo1.pcd

|--MLS1.pcd

|--Velo2.pcd

|--MLS2.pcd

|--Velo3.pcd

|--MLS3.pcd

|--Velo4.pcd

|--MLS4.pcd

|--Velo5.pcd

|--MLS5.pcd

|--Output:
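Both inputs are PCD files, so a quick header check can catch a wrong path or a corrupted file before the pipeline starts. The following is only a minimal sketch in plain C++ without PCL: `PcdHeaderInfo` and `readPcdHeader` are hypothetical names that are not part of this repository, and the code assumes the standard PCD header layout in which `FIELDS`, `POINTS` and `DATA` entries appear and `DATA` is the last header line.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>

// Hypothetical helper type (not part of this repository): the header
// entries this sketch cares about.
struct PcdHeaderInfo {
    std::size_t points = 0;  // value of the POINTS entry
    std::string fields;      // raw FIELDS entry, e.g. "x y z intensity"
    std::string data;        // value of the DATA entry, e.g. "ascii"
    bool valid = false;      // true once FIELDS, POINTS and DATA were all found
};

// Reads header lines from `in` until the DATA entry, which ends a PCD header.
// Comment lines (starting with '#') and unknown entries are skipped.
PcdHeaderInfo readPcdHeader(std::istream& in) {
    PcdHeaderInfo info;
    bool hasPoints = false;
    bool hasFields = false;
    std::string line;
    while (std::getline(in, line)) {
        // Tolerate Windows line endings.
        if (!line.empty() && line.back() == '\r') line.pop_back();
        if (line.empty() || line[0] == '#') continue;

        std::istringstream ls(line);
        std::string key;
        ls >> key;
        if (key == "FIELDS") {
            std::getline(ls >> std::ws, info.fields);
            hasFields = !info.fields.empty();
        } else if (key == "POINTS") {
            hasPoints = static_cast<bool>(ls >> info.points);
        } else if (key == "DATA") {
            ls >> info.data;
            break;  // point data follows; the header is complete
        }
    }
    info.valid = hasPoints && hasFields && !info.data.empty();
    return info;
}
```

To use it, open each input with `std::ifstream` (from `<fstream>`), pass the stream to `readPcdHeader`, and stop early if `valid` is false or `points` is zero.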

Run the algorithms on your own data pair:

$ ./Lidar-SCU path_to_rmb_lidar_frame.pcd path_to_mls_data.pcd

By default, the execution follows the steps below. At each step, you should see output similar to the figures shown here. Close each figure to proceed to the next step.

Color code: red = pillar-like columns, orange = other static objects

Color code: red = pillar-like object candidates

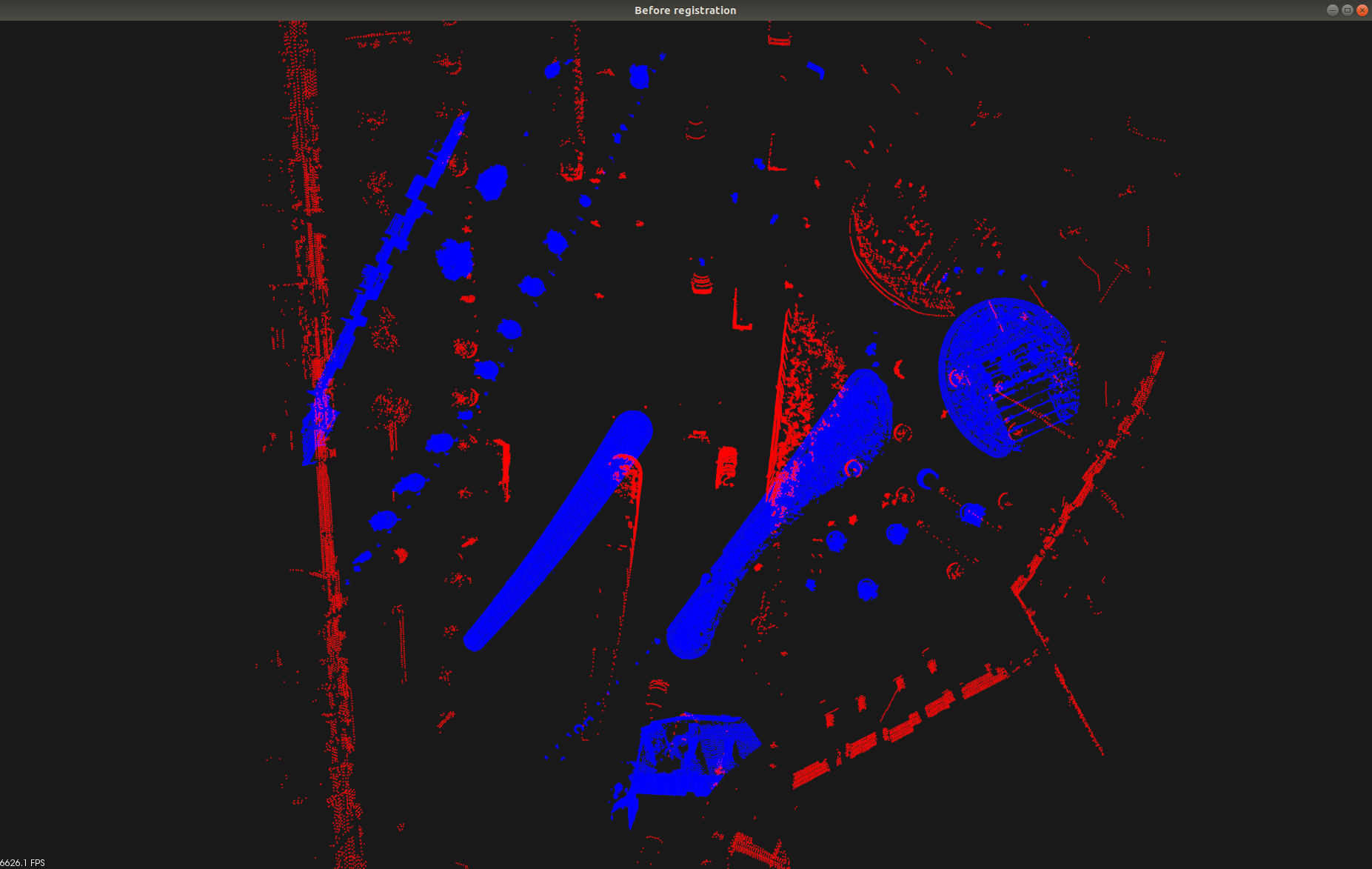

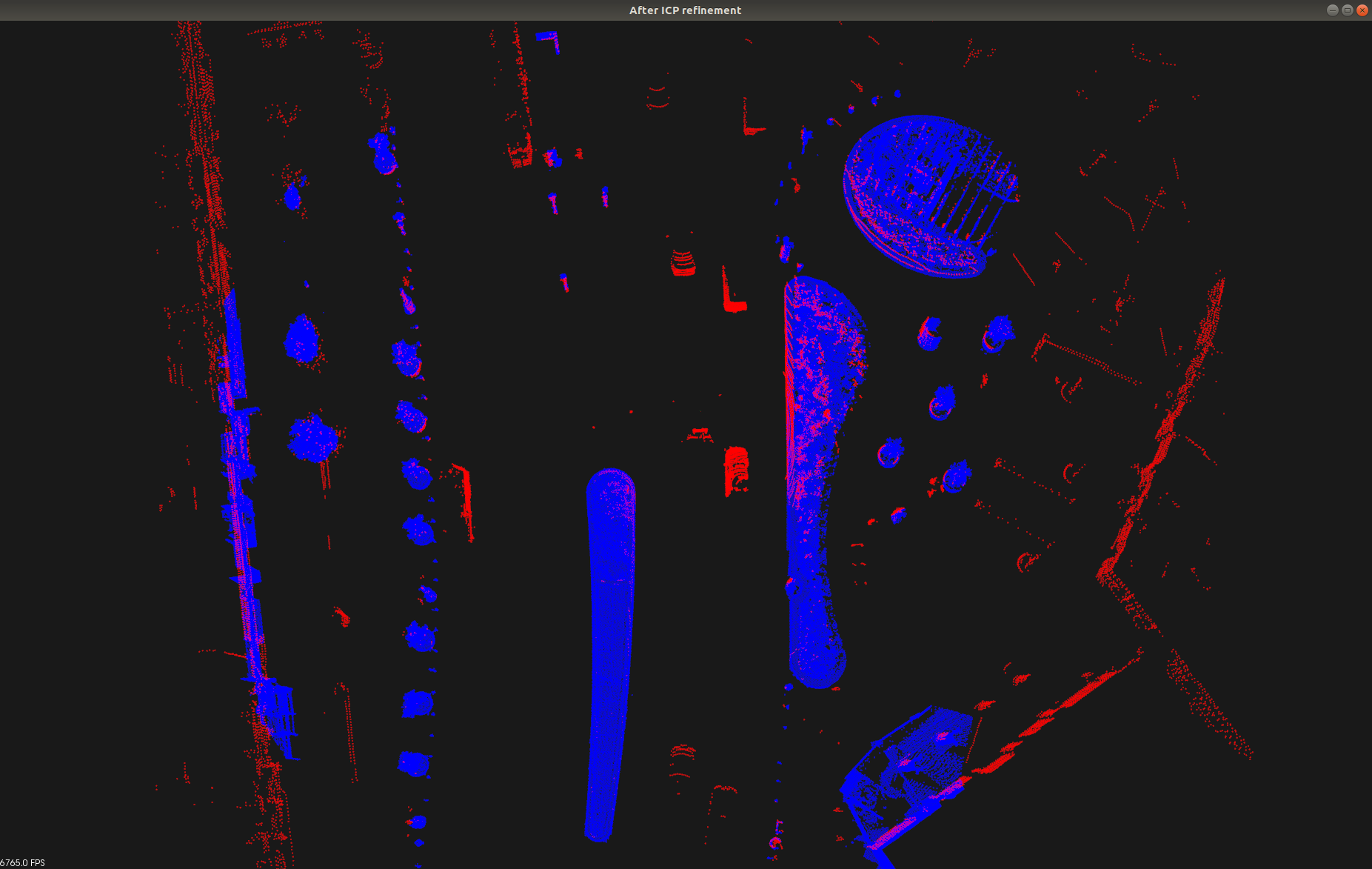

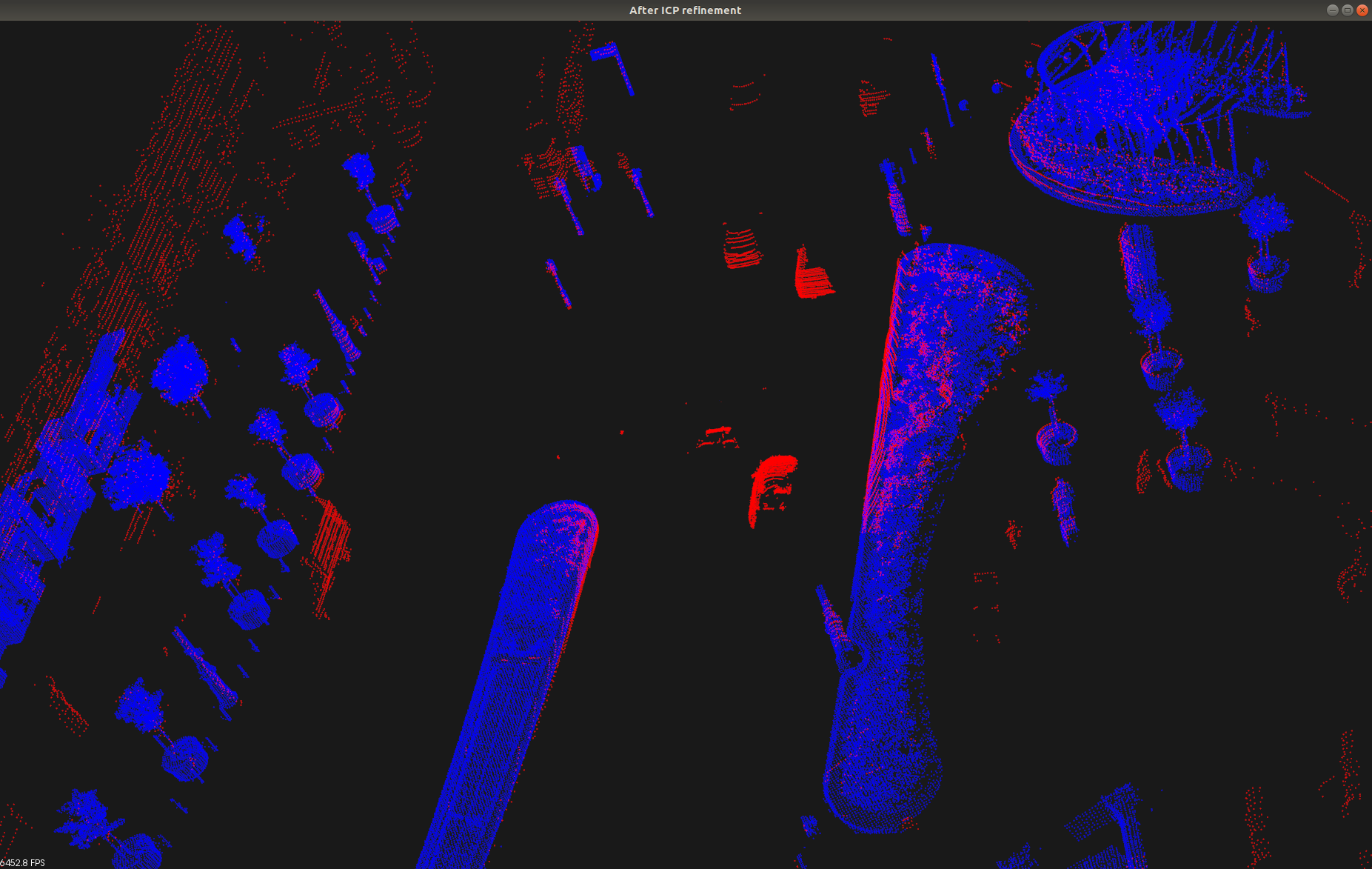

Color code: red = RMB Lidar, blue = MLS map

Left: before alignment

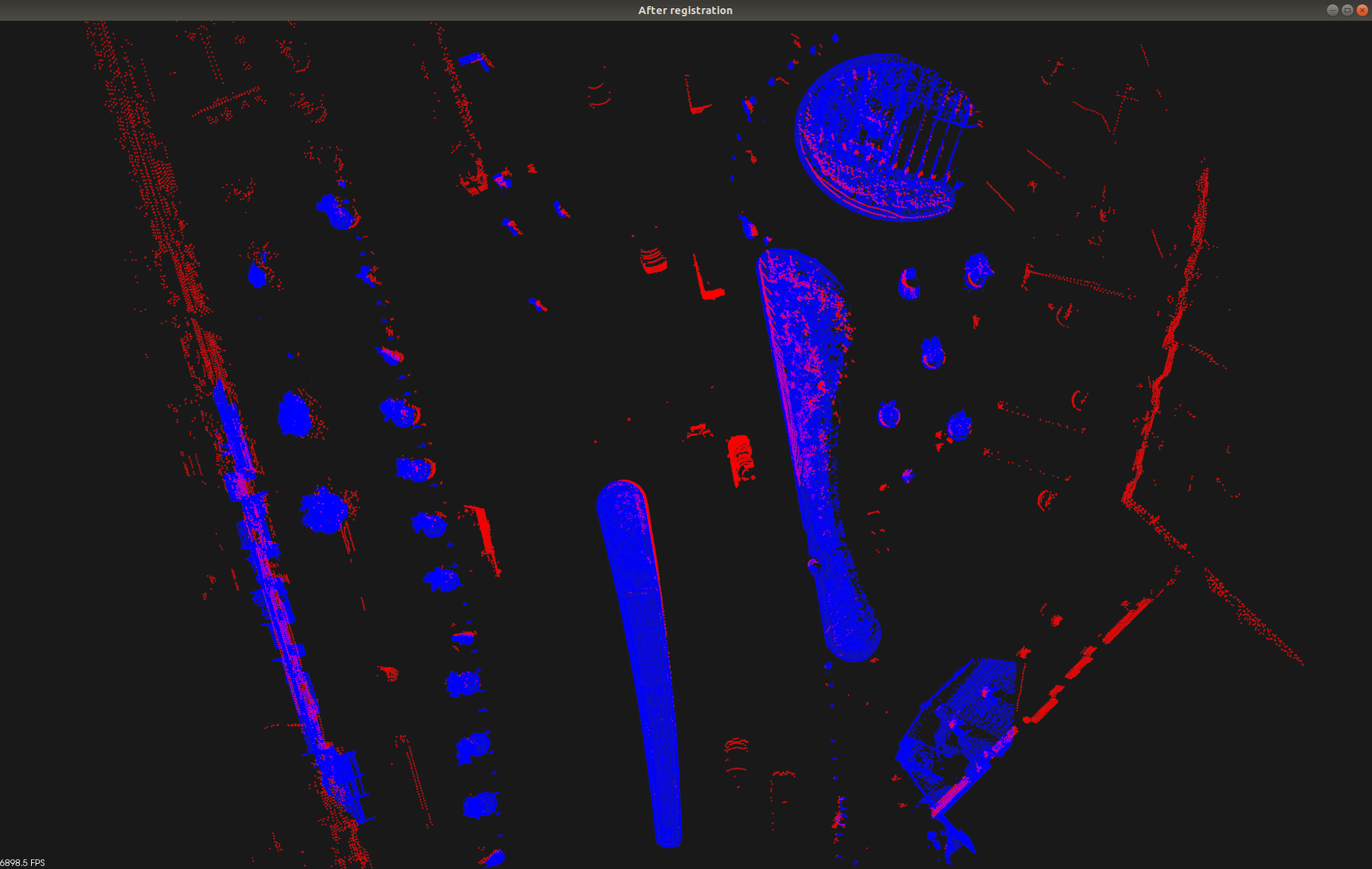

Right: after alignment

Color code: red = RMB Lidar, blue = MLS map
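The alignment step brings the RMB Lidar frame into the coordinate system of the MLS map. As a rough illustration only, and not the registration method of the paper or of this repository, the sketch below estimates a planar rigid transform (yaw rotation plus translation) in closed form from landmark positions, for example centers of pillar-like objects, that are assumed to be already matched pairwise. The types `Point2` and `Rigid2` and the functions `estimateRigid2` and `applyRigid2` are hypothetical names introduced for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 { double x, y; };

// Planar rigid transform: rotate by `yaw` (radians), then translate by (tx, ty).
struct Rigid2 { double yaw, tx, ty; };

// Applies the transform to a single point.
Point2 applyRigid2(const Rigid2& t, const Point2& p) {
    const double c = std::cos(t.yaw);
    const double s = std::sin(t.yaw);
    return Point2{c * p.x - s * p.y + t.tx, s * p.x + c * p.y + t.ty};
}

// Least-squares rigid transform mapping src[i] onto dst[i].
// Assumption: src and dst have the same size and src[i] corresponds to dst[i].
Rigid2 estimateRigid2(const std::vector<Point2>& src,
                      const std::vector<Point2>& dst) {
    assert(src.size() == dst.size() && !src.empty());
    const double n = static_cast<double>(src.size());

    // Centroids of both landmark sets.
    Point2 cs{0.0, 0.0};
    Point2 cd{0.0, 0.0};
    for (std::size_t i = 0; i < src.size(); ++i) {
        cs.x += src[i].x / n;  cs.y += src[i].y / n;
        cd.x += dst[i].x / n;  cd.y += dst[i].y / n;
    }

    // Accumulate dot and cross products of the centered pairs; their ratio
    // gives the rotation angle.
    double cosSum = 0.0;
    double sinSum = 0.0;
    for (std::size_t i = 0; i < src.size(); ++i) {
        const double ax = src[i].x - cs.x, ay = src[i].y - cs.y;
        const double bx = dst[i].x - cd.x, by = dst[i].y - cd.y;
        cosSum += ax * bx + ay * by;
        sinSum += ax * by - ay * bx;
    }
    const double yaw = std::atan2(sinSum, cosSum);

    // Translation that maps the rotated source centroid onto the target centroid.
    const double c = std::cos(yaw);
    const double s = std::sin(yaw);
    return Rigid2{yaw, cd.x - (c * cs.x - s * cs.y), cd.y - (s * cs.x + c * cs.y)};
}
```

A planar model is used here only to keep the example short; a full 3D registration would also have to handle height offsets and small roll and pitch differences.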

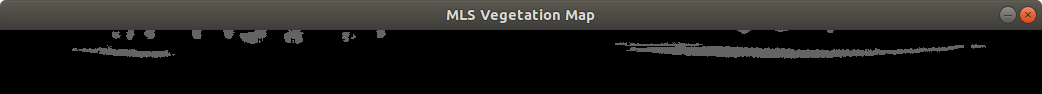

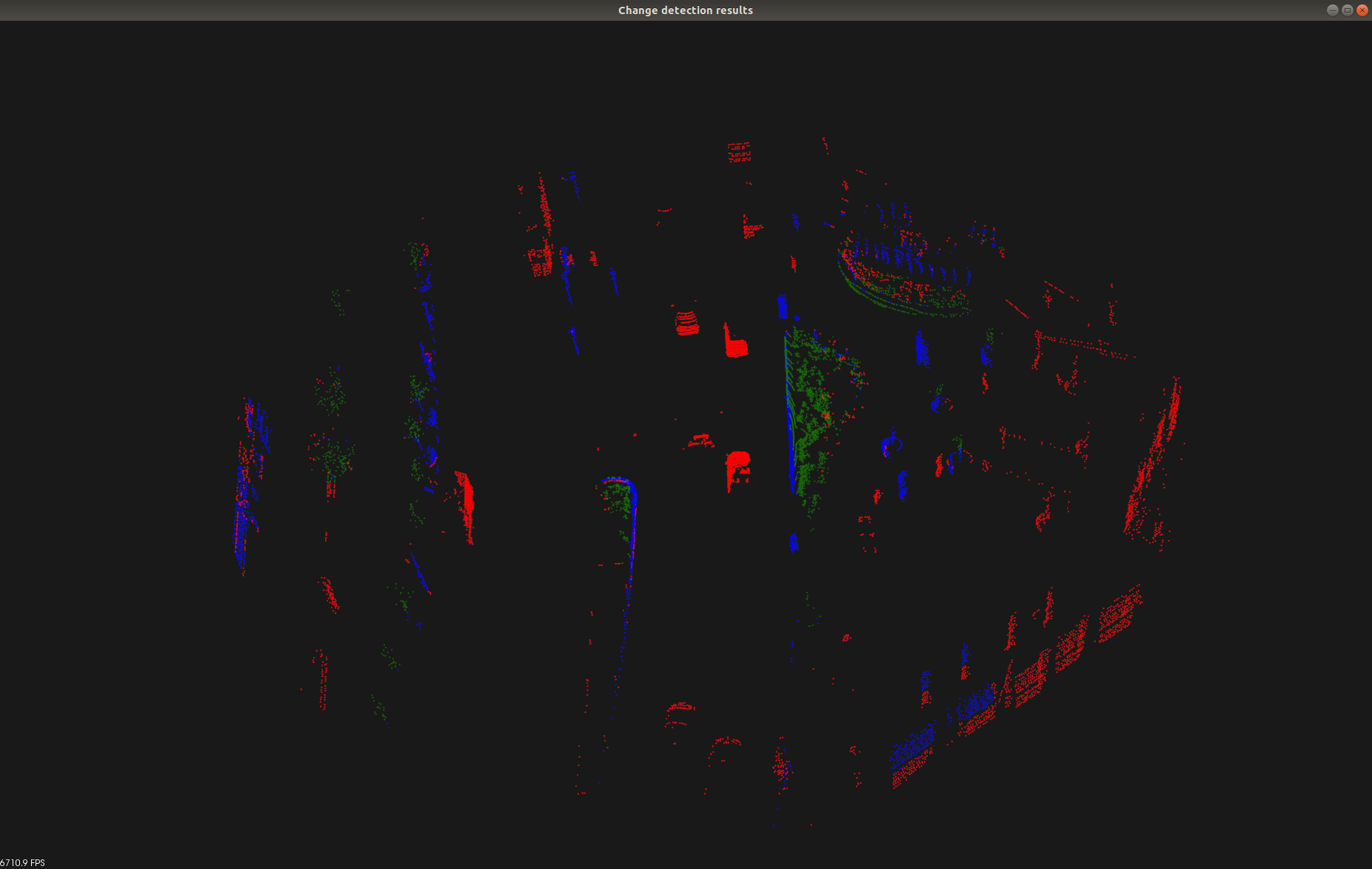

Color code: blue = static, red = dynamic change, blue = vegetation change
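To give an intuition for the change labels, the sketch below marks a point of the aligned Lidar frame as changed when the reference map has no point within a given radius. This is a deliberately simple nearest-neighbor test under stated assumptions, not the change detection method of the paper: both clouds are assumed to be already registered, the search is brute force, and the names `Point3` and `flagChangedPoints` as well as the `radius` parameter are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { double x, y, z; };

// Returns one flag per frame point: true if no reference point lies within
// `radius` of it (candidate change), false otherwise (static).
// Brute force, O(frame.size() * reference.size()); a k-d tree would be the
// usual choice for real point clouds.
std::vector<bool> flagChangedPoints(const std::vector<Point3>& frame,
                                    const std::vector<Point3>& reference,
                                    double radius) {
    assert(radius >= 0.0);
    const double r2 = radius * radius;
    std::vector<bool> changed(frame.size(), true);
    for (std::size_t i = 0; i < frame.size(); ++i) {
        for (const Point3& q : reference) {
            const double dx = frame[i].x - q.x;
            const double dy = frame[i].y - q.y;
            const double dz = frame[i].z - q.z;
            if (dx * dx + dy * dy + dz * dz <= r2) {
                changed[i] = false;  // supported by the reference map
                break;
            }
        }
    }
    return changed;
}
```

The radius trades off sensitivity against robustness to residual registration error and to the density difference between the sparse onboard scan and the dense MLS map.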

The code of this repository was implemented in the Machine Perception Research Laboratory, Institute of Computer Science and Control (SZTAKI), Budapest.