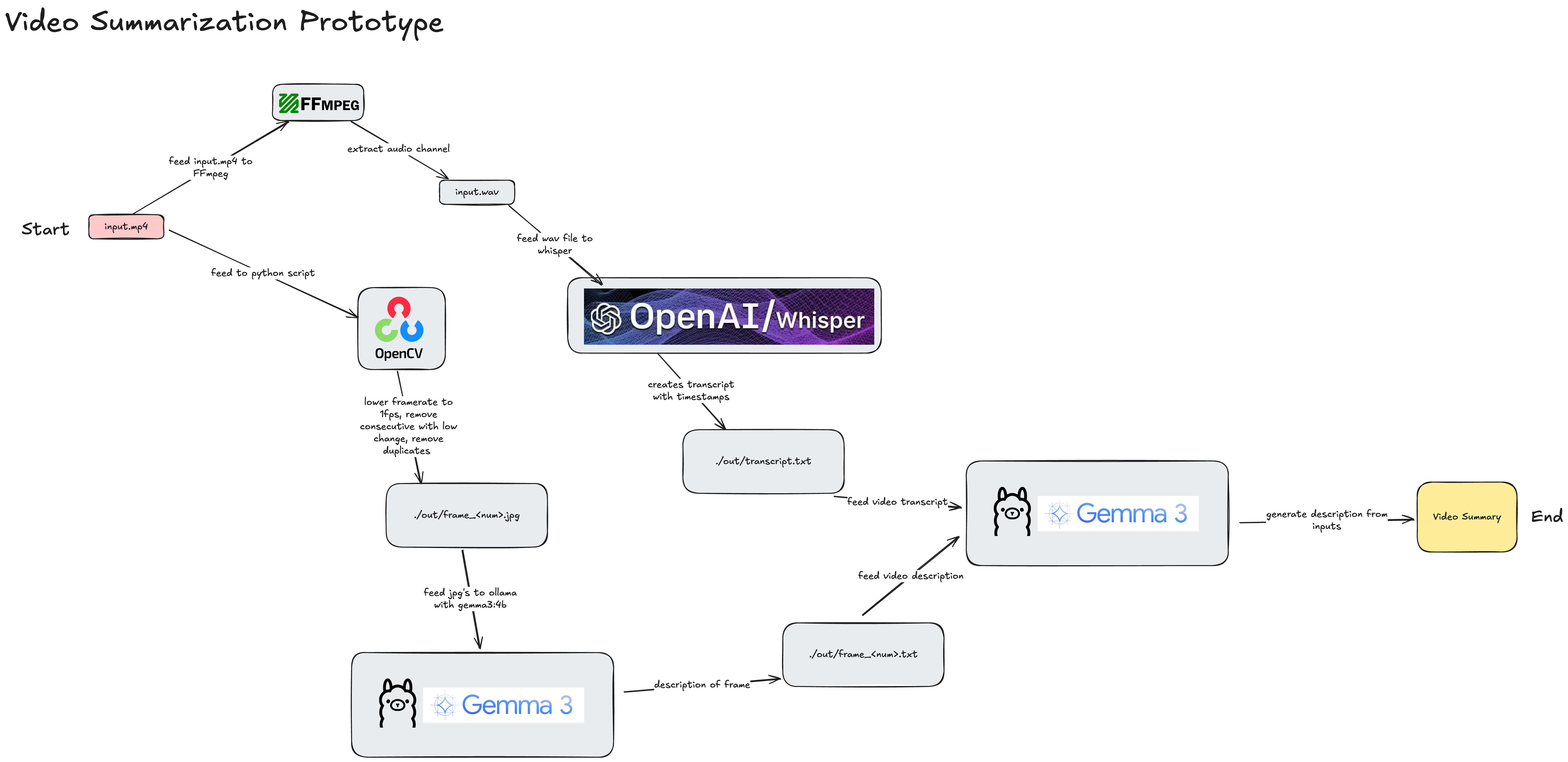

vid is a proof-of-concept AI video summarizer. It combines frame extraction, audio transcription (Whisper), and a multimodal model (Gemma via Ollama) to generate concise summaries of video content.

- Frame Extraction: Selects key frames from the video using frame difference analysis and similarity filtering.

- Audio Transcription: Extracts and transcribes audio using Whisper.

- Image Description: Describes selected frames using Gemma (via Ollama).

- Video Summarization: Combines transcript and frame descriptions to generate a summary using Gemma.
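As a rough illustration of the frame extraction idea above (difference analysis followed by similarity filtering), here is a minimal, dependency-free sketch. It is not the project's actual code: frames are modeled as flat sequences of grayscale values rather than decoded video frames, and the function names, the mean-absolute-difference metric, and both thresholds are assumptions made for this example.

```python
from typing import List, Sequence

# Stand-in for a decoded video frame: flat grayscale pixels in the range 0-255.
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two same-sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_changed_frames(frames: List[Frame], change_threshold: float) -> List[int]:
    """Indices of frames that differ enough from the frame right before them."""
    if not frames:
        return []
    selected = [0]  # always keep the first frame
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > change_threshold:
            selected.append(i)
    return selected


def drop_similar_frames(
    frames: List[Frame], candidates: List[int], similarity_threshold: float
) -> List[int]:
    """Keep a candidate only if it is not too close to any frame already kept."""
    kept: List[int] = []
    for i in candidates:
        if all(mean_abs_diff(frames[i], frames[j]) > similarity_threshold for j in kept):
            kept.append(i)
    return kept
```

The first pass only compares neighboring frames, so a scene that cuts away and back is selected twice; the second pass compares each candidate against everything already kept, which is what removes those repeats.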

- Audio Extraction: Extracts audio from the input video (`input.mp4`) and saves it as `input.wav`.

- Transcription: Transcribes the audio to text using Whisper and saves it to `./out/transcript.txt`.

- Frame Selection: Processes video frames, saving those with significant changes to `./out/frame_XXXX.jpg`.

- Frame Filtering: Removes visually similar frames to reduce redundancy.

- Image Description: Uses Gemma (Ollama) to describe each selected frame, saving descriptions to `./out/frame_XXXX.txt`.

- Summarization: Combines transcript and frame descriptions, prompting Gemma to generate a concise video summary (`./out/summary.txt`).
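The final summarization step can be sketched roughly as follows: read the transcript and the per-frame descriptions from the output directory, join them into one prompt, and send that prompt to Gemma through the `ollama` Python package. The function names, the prompt wording, the `gemma3` model tag, and the assumption that frame file names are zero-padded (so sorting by name gives frame order) are all illustrative and not taken from the project.

```python
from pathlib import Path


def build_summary_prompt(out_dir: str = "./out") -> str:
    """Assemble one text prompt from transcript.txt and the frame_XXXX.txt files."""
    out = Path(out_dir)
    transcript = (out / "transcript.txt").read_text(encoding="utf-8").strip()
    # Sorting by name keeps descriptions in frame order (zero-padded names assumed).
    descriptions = [
        f"{path.stem}: {path.read_text(encoding='utf-8').strip()}"
        for path in sorted(out.glob("frame_*.txt"))
    ]
    # Hypothetical prompt wording; the project's real prompt may differ.
    return (
        "Summarize this video concisely.\n\n"
        "Transcript:\n" + transcript + "\n\n"
        "Frame descriptions:\n" + "\n".join(descriptions)
    )


def ask_gemma(prompt: str, model: str = "gemma3") -> str:
    """Send the prompt to a local Ollama server (requires the `ollama` package)."""
    import ollama  # imported lazily so build_summary_prompt works without it

    # The model tag is an assumption; use whichever Gemma tag has been pulled.
    response = ollama.chat(model=model, messages=[{"role": "user", "content": prompt}])
    return response["message"]["content"]
```

Calling `ask_gemma(build_summary_prompt())` after the earlier steps have populated `./out` would return the summary text, which could then be written to `./out/summary.txt`.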

- Install dependencies: `pip install -r requirements.txt`

- Place your video file as `input.mp4` in the project directory.

- Run the main script: `python main.py`

- Find outputs in the `./out` directory:
  - `transcript.txt`: Audio transcript
  - `frame_XXXX.jpg`: Key frames
  - `frame_XXXX.txt`: Frame descriptions
  - `summary.txt`: Final video summary

See system.png for a visual overview of the pipeline.

- The project is a proof of concept and may require a GPU for best performance.

- Gemma and Whisper models must be installed and available to Python.

- Ollama must be running locally with the Gemma model pulled.
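One way to check the Ollama requirement before running the pipeline is to query the local server's `/api/tags` endpoint, which lists the models that have been pulled. The sketch below assumes Ollama's default address (`http://localhost:11434`) and treats any model whose name starts with `gemma` as a match; the helper names are hypothetical and not part of the project.

```python
import json
from typing import List
from urllib.request import urlopen


def gemma_models(tags: dict) -> List[str]:
    """Return the names of pulled models whose name starts with 'gemma'."""
    return [
        m["name"]
        for m in tags.get("models", [])
        if m.get("name", "").lower().startswith("gemma")
    ]


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Ask a running Ollama server which models are available locally."""
    # Raises an OSError subclass (URLError) if the server is not reachable.
    with urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)
```

If `fetch_tags()` raises, Ollama is probably not running; if `gemma_models(fetch_tags())` returns an empty list, a Gemma model still needs to be pulled.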

License: MIT