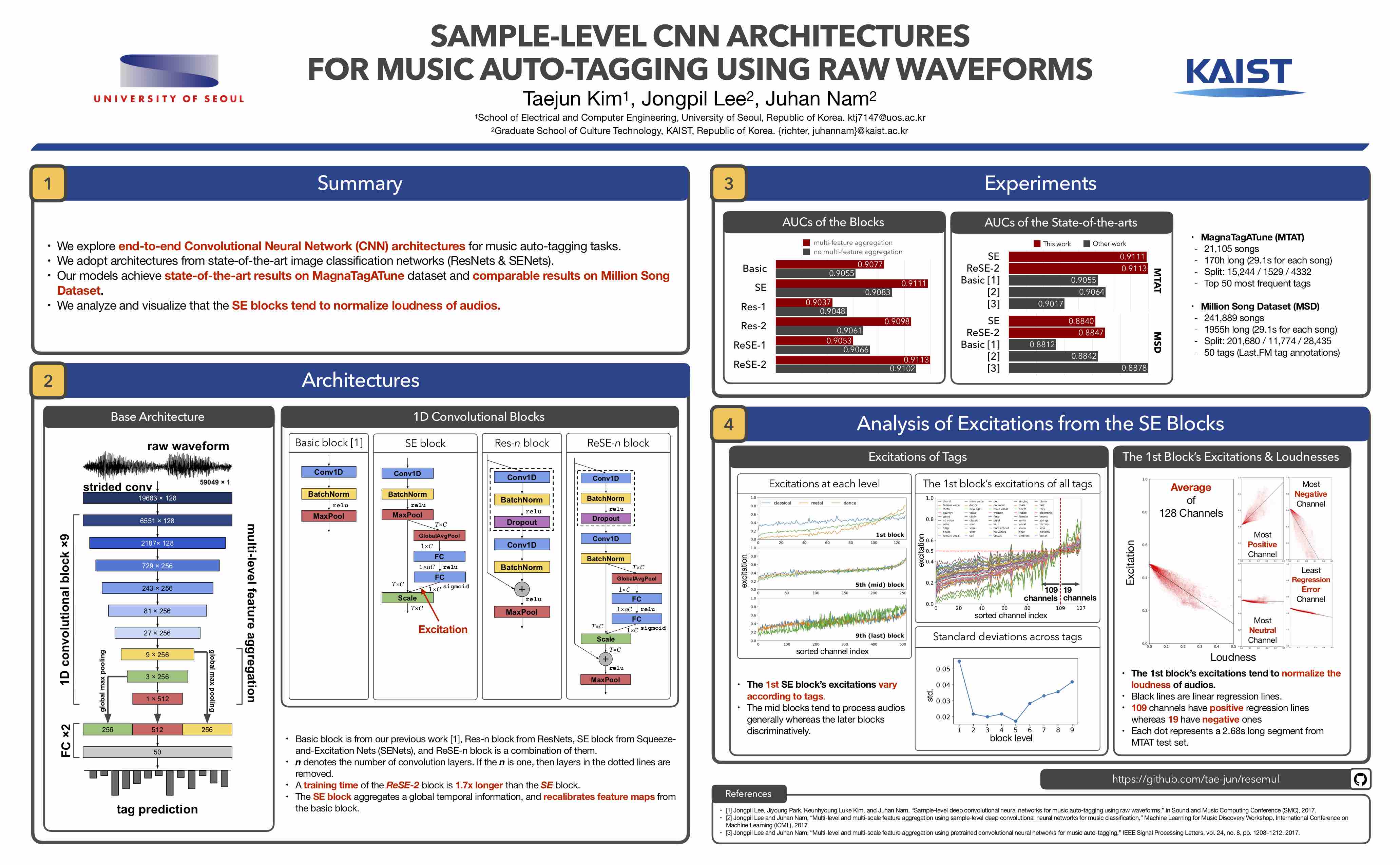

A TensorFlow+Keras implementation of "Sample-level CNN Architectures for Music Auto-tagging Using Raw Waveforms", including a Jupyter notebook for excitation analysis.

- Prerequisites

- Preparing MagnaTagATune (MTT) Dataset

- Preprocessing the MTT dataset

- Training a model from scratch

- Downloading pre-trained models

- Evaluating a model

- Excitation Analysis

- Issues & Questions

@inproceedings{kim2018sample,

title={Sample-level CNN Architectures for Music Auto-tagging Using Raw Waveforms},

author={Kim, Taejun and Lee, Jongpil and Nam, Juhan},

booktitle={International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

year={2018},

organization={IEEE}

}

- Python 3.5 and the required packages

- ffmpeg (required for madmom)

pip install -r requirements.txt

pip install madmom

The madmom package has an install-time dependency, so it should be installed after the packages in requirements.txt.

These commands install the following packages:

- keras (must use v2.0.5 due to a known issue)

- tensorflow

- numpy

- pandas

- scikit-learn

- madmom

- scipy (madmom dependency)

- cython (madmom dependency)

- matplotlib (used for excitation analysis)
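Before moving on, a short Python check can confirm that the packages above are importable and that the ffmpeg binary is on PATH. This is a minimal sketch: the helper name `check_environment` and the module list are assumptions for illustration (note that scikit-learn is imported as `sklearn` and cython as `Cython`), and it does not verify the keras version.

```python
import importlib.util
import shutil

# Import names for the packages listed above (assumed mapping:
# scikit-learn -> sklearn, cython -> Cython).
REQUIRED_MODULES = [
    "keras", "tensorflow", "numpy", "pandas", "sklearn",
    "madmom", "scipy", "Cython", "matplotlib",
]


def check_environment(modules=REQUIRED_MODULES):
    """Return a dict mapping each requirement to True if it is available."""
    status = {}
    for name in modules:
        # find_spec locates a top-level module without importing it.
        status[name] = importlib.util.find_spec(name) is not None
    # ffmpeg is an external program, so look for it on PATH instead.
    status["ffmpeg"] = shutil.which("ffmpeg") is not None
    return status
```

For example, `[name for name, ok in check_environment().items() if not ok]` lists whatever still needs to be installed.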

ffmpeg is required for madmom.

On macOS:

brew install ffmpeg

On Ubuntu:

add-apt-repository ppa:mc3man/trusty-media

apt-get update

apt-get dist-upgrade

apt-get install ffmpeg

On CentOS:

yum install epel-release

rpm --import http://li.nux.ro/download/nux/RPM-GPG-KEY-nux.ro

rpm -Uvh http://li.nux.ro/download/nux/dextop/el ... noarch.rpm

yum install ffmpeg

Download the audio data and tag annotations from the MagnaTagATune dataset website. You should then see 3 .zip files and 1 .csv file:

mp3.zip.001

mp3.zip.002

mp3.zip.003

annotations_final.csv

The .zip files are parts of one split archive. To extract them, merge the parts and unzip the result:

cat mp3.zip.* > mp3_all.zip

unzip mp3_all.zip

You should see 16 directories named 0 to f. Typically, directories 0 to b are used for training, c for validation, and d to f for testing.
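On systems without `cat` and `unzip` (for example, a plain Windows shell), the same merge-then-extract step can be done from Python. This is a minimal sketch using only the standard library; the function names are hypothetical, and it assumes the parts sort correctly by file name, which holds for the zero-padded `.001`/`.002`/`.003` suffixes.

```python
import glob
import shutil
import zipfile


def merge_parts(pattern, merged_path):
    """Concatenate split archive parts in name order, like `cat mp3.zip.* > mp3_all.zip`."""
    parts = sorted(glob.glob(pattern))
    if not parts:
        raise FileNotFoundError("no files match " + pattern)
    with open(merged_path, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Stream each part so the whole archive never sits in memory.
                shutil.copyfileobj(src, out)
    return parts


def extract(merged_path, dest_dir="."):
    """Unzip the merged archive, like `unzip mp3_all.zip`; returns member names."""
    with zipfile.ZipFile(merged_path) as zf:
        zf.extractall(dest_dir)
        return zf.namelist()
```

For the MTT files this would be `merge_parts("mp3.zip.*", "mp3_all.zip")` followed by `extract("mp3_all.zip")`.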

To make things easier, create a directory named dataset and place the files in it as shown below:

mkdir dataset

Your directory structure should look like this:

dataset

├── annotations_final.csv

└── mp3

├── 0

├── 1

├── ...

└── f

The MTT dataset preparation is now done!
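To double-check the layout above and make the usual train/validation/test convention explicit, here is a minimal sketch. The helper names are hypothetical, and the split rule simply encodes the convention stated earlier (0 to b for training, c for validation, d to f for testing); whether build_mtt.py applies exactly this rule is an assumption.

```python
import os

# The 16 MTT audio subdirectories, named by hexadecimal digit: "0" ... "f".
SUBDIRS = [format(i, "x") for i in range(16)]


def split_of(subdir):
    """Return the conventional split for an MTT subdirectory name."""
    index = SUBDIRS.index(subdir)  # raises ValueError for unknown names
    if index <= 11:  # 0 ... b
        return "train"
    if index == 12:  # c
        return "val"
    return "test"    # d, e, f


def check_layout(root="dataset"):
    """Return the expected paths under root that do not exist yet."""
    expected = [os.path.join(root, "annotations_final.csv")]
    expected += [os.path.join(root, "mp3", d) for d in SUBDIRS]
    return [p for p in expected if not os.path.exists(p)]
```

An empty list from `check_layout("dataset")` means the structure matches the tree shown above.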

This section describes a required preprocessing step for the MTT dataset. Note that it requires 48 GB of storage space. The preprocessing uses multiprocessing.

The preprocessing does the following:

- Select the top 50 tags in annotations_final.csv

- Split the dataset into training, validation, and test sets

- Resample the raw audio files to 22050 Hz

- Segment the resampled audio into 59049-sample segments

- Convert the segments to TFRecord format
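Two of the steps above can be illustrated in isolation. The segment length 59049 equals 3^10, which at 22050 Hz is about 2.68 seconds of audio. The sketch below is not the build_mtt.py code: it assumes non-overlapping segments with any trailing remainder dropped, and it assumes annotations_final.csv is tab-separated with a `clip_id` column, one 0/1 column per tag, and an `mp3_path` column. The function names are hypothetical.

```python
import csv
from collections import Counter

SAMPLE_RATE = 22050       # target sampling rate from the list above
SEGMENT_LENGTH = 3 ** 10  # 59049 samples, about 2.68 s at 22050 Hz


def segment(samples, length=SEGMENT_LENGTH):
    """Split a 1-D sequence into non-overlapping segments of `length` samples.

    A trailing remainder shorter than `length` is dropped (an assumption).
    """
    n_full = len(samples) // length
    return [samples[i * length:(i + 1) * length] for i in range(n_full)]


def top_tags(path, n=50, delimiter="\t"):
    """Return the n most frequent tag columns in an MTT-style annotation file."""
    counts = Counter()
    with open(path, newline="") as f:
        for row in csv.DictReader(f, delimiter=delimiter):
            for tag, value in row.items():
                # Skip the non-tag columns; count clips where the tag is set.
                if tag not in ("clip_id", "mp3_path") and value == "1":
                    counts[tag] += 1
    return [tag for tag, _ in counts.most_common(n)]
```

Resampling and TFRecord conversion are left out here, since they depend on madmom and TensorFlow.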

To run the preprocessing:

python build_mtt.py

After preprocessing, your dataset directory should look like this:

dataset

├── annotations_final.csv

├── mp3

│ ├── 0

│ ├── ...

│ └── f

└── tfrecord

├── test-0000-of-0043.seq.tfrecord

├── ...

├── test-0042-of-0043.seq.tfrecord

├── train-0000-of-0152.tfrecord

├── ...

├── train-0151-of-0152.tfrecord

├── val-0000-of-0015.tfrecord

├── ...

└── val-0014-of-0015.tfrecord

18 directories, 211 files
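Because preprocessing takes a while, it can help to confirm that every shard was written. A minimal sketch, assuming the shard counts shown in the listing above (152 training, 15 validation, 43 test shards); these counts may differ if the preprocessing settings change, and the helper name is hypothetical.

```python
import re
from collections import defaultdict

# Shard counts taken from the directory listing above.
EXPECTED_SHARDS = {"train": 152, "val": 15, "test": 43}

# Matches e.g. train-0000-of-0152.tfrecord and test-0000-of-0043.seq.tfrecord.
SHARD_RE = re.compile(r"^(train|val|test)-(\d{4})-of-(\d{4})(?:\.seq)?\.tfrecord$")


def missing_shards(names, expected=EXPECTED_SHARDS):
    """Return {split: sorted list of missing shard indices}; empty lists mean complete."""
    found = defaultdict(set)
    for name in names:
        m = SHARD_RE.match(name)
        if m:
            found[m.group(1)].add(int(m.group(2)))
    return {split: sorted(set(range(total)) - found[split])
            for split, total in expected.items()}
```

Usage: `missing_shards(os.listdir("dataset/tfrecord"))`; non-matching names such as annotations_final.csv are ignored.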

To train a model from scratch, run:

python train.py

The trained model and logs will be saved under the log directory.
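For scripted runs (for example, training one model per block type), a small helper can assemble the train.py command line. This is a hypothetical sketch: it only uses flags that appear in the `python train.py -h` output in this README, maps keyword names to flags by replacing underscores with dashes, and does not validate option values.

```python
import sys


def train_command(block="se", multi=True, **options):
    """Build an argument list for train.py.

    `options` maps option names to values, with underscores standing in
    for dashes, e.g. batch_size=32 -> --batch-size 32.
    """
    if block not in ("se", "rese", "res", "basic"):
        raise ValueError("unknown block: " + block)
    cmd = [sys.executable, "train.py", "--block", block]
    if not multi:
        cmd.append("--no-multi")
    # Sort for a deterministic argument order.
    for name, value in sorted(options.items()):
        cmd += ["--" + name.replace("_", "-"), str(value)]
    return cmd
```

Running it is then `subprocess.run(train_command(block="res", multi=False), check=True)`, which corresponds to the `--block res --no-multi` example in this README.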

By default, train.py trains a model built from SE blocks. To see the configurable options, run python train.py -h, which prints:

usage: train.py [-h] [--data-dir PATH] [--train-dir PATH]

[--block {se,rese,res,basic}] [--no-multi] [--alpha A]

[--batch-size N] [--momentum M] [--lr LR] [--lr-decay DC]

[--dropout DO] [--weight-decay WD] [--initial-stage N]

[--patience N] [--num-lr-decays N] [--num-audios-per-shard N]

[--num-segments-per-audio N] [--num-read-threads N]

Sample-level CNN Architectures for Music Auto-tagging.

optional arguments:

-h, --help show this help message and exit

--data-dir PATH

--train-dir PATH Directory where to write event logs and checkpoints.

--block {se,rese,res,basic}

Block to build a model: {se|rese|res|basic} (default:

se).

--no-multi Disables multi-level feature aggregation.

--alpha A Amplifying ratio of SE block.

--batch-size N Mini-batch size.

--momentum M Momentum for SGD.

--lr LR Learning rate.

--lr-decay DC Learning rate decay rate.

--dropout DO Dropout rate.

--weight-decay WD Weight decay.

--initial-stage N Stage to start training.

--patience N Stop training stage after #patiences.

--num-lr-decays N Number of learning rate decays.

--num-audios-per-shard N

Number of audios per shard.

--num-segments-per-audio N

Number of segments per audio.

--num-read-threads N Number of TFRecord readers.

For example, to train a model that uses Res blocks without multi-level feature aggregation:

python train.py --block res --no-multi

You can download the two best models from the paper:

- SE+multi (AUC 0.9111): a model using SE blocks and multi-level feature aggregation

- ReSE+multi (AUC 0.9113): a model using ReSE blocks and multi-level feature aggregation
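The AUC values above are areas under the ROC curve. In multi-label auto-tagging this is commonly computed per tag and then averaged over tags; whether eval.py averages in exactly this way is an assumption. The pure-Python sketch below shows the computation on small lists; the function names are hypothetical, and in practice scikit-learn's `roc_auc_score` is the usual tool.

```python
def roc_auc(labels, scores):
    """ROC-AUC for one tag, via pairwise comparison (Mann-Whitney U).

    Returns the fraction of (positive, negative) pairs in which the positive
    clip scores higher; ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_auc(label_matrix, score_matrix):
    """Average per-tag ROC-AUC; rows are clips, columns are tags."""
    n_tags = len(label_matrix[0])
    aucs = [roc_auc([row[t] for row in label_matrix],
                    [row[t] for row in score_matrix])
            for t in range(n_tags)]
    return sum(aucs) / n_tags
```

The pairwise loop is quadratic in the number of clips, which is fine for illustration but not for the full test set.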

To download them from the command line:

# SE+multi

curl -L -o se-multi-auc_0.9111-tfrmodel.hdf5 https://www.dropbox.com/s/r8qlxbol2p4ods5/se-multi-auc_0.9111-tfrmodel.hdf5?dl=1

# ReSE+multi

curl -L -o rese-multi-auc_0.9113-tfrmodel.hdf5 https://www.dropbox.com/s/fr3y1o3hyha0n2m/rese-multi-auc_0.9113-tfrmodel.hdf5?dl=1

To evaluate a model, run:

python eval.py <MODEL_PATH>

For example, to evaluate the downloaded SE+multi model:

python eval.py se-multi-auc_0.9111-tfrmodel.hdf5

- If you just want to see the code and plots, open excitation_analysis.ipynb.

- If you want to analyze the excitations yourself, follow the steps below.

python extract_excitations.py <MODEL_PATH>

# For example, to extract excitations from the downloaded `SE+multi` model:

python extract_excitations.py se-multi-auc_0.9111-tfrmodel.hdf5

This extracts excitations from the model and saves them as a pandas DataFrame. The saved file is named excitations.pkl by default.

Start Jupyter Notebook:

jupyter notebook

Then open excitation_analysis.ipynb, run it, and explore the excitations yourself.
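For reference while exploring, an excitation in a Squeeze-and-Excitation (SE) block is the per-channel scale factor produced by squeezing a feature map (averaging over time) and passing the result through two fully connected layers that end in a sigmoid. The pure-Python sketch below mirrors that computation on toy data; it is not this repository's Keras code. The function names, the omitted biases, and the hidden width (channels times the amplifying ratio set by `--alpha`) are assumptions.

```python
import math


def relu(v):
    return [max(0.0, x) for x in v]


def sigmoid(v):
    """Numerically stable logistic function, applied element-wise."""
    out = []
    for x in v:
        if x >= 0:
            out.append(1.0 / (1.0 + math.exp(-x)))
        else:
            e = math.exp(x)
            out.append(e / (1.0 + e))
    return out


def matvec(w, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def se_excitation(x, w1, w2):
    """Excitation vector of an SE block.

    x:  feature map as a list of time steps, each a list of C channel values.
    w1: hidden x C weights of the first fully connected layer (no bias).
    w2: C x hidden weights of the second fully connected layer (no bias).
    Returns C values in (0, 1), one scale factor per channel.
    """
    channels = len(x[0])
    # Squeeze: global average over time, one value per channel.
    z = [sum(step[c] for step in x) / len(x) for c in range(channels)]
    # Excitation: FC -> ReLU -> FC -> sigmoid.
    return sigmoid(matvec(w2, relu(matvec(w1, z))))


def se_block(x, w1, w2):
    """Rescale every channel of x by its excitation."""
    s = se_excitation(x, w1, w2)
    return [[v * sc for v, sc in zip(step, s)] for step in x]
```

With C channels and amplifying ratio alpha, `w1` would have `C * alpha` rows under this assumption, so the hidden layer is wider than the input rather than narrower.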

If you have any issues or questions, please open an issue so that other people can benefit from it too :) Thanks!