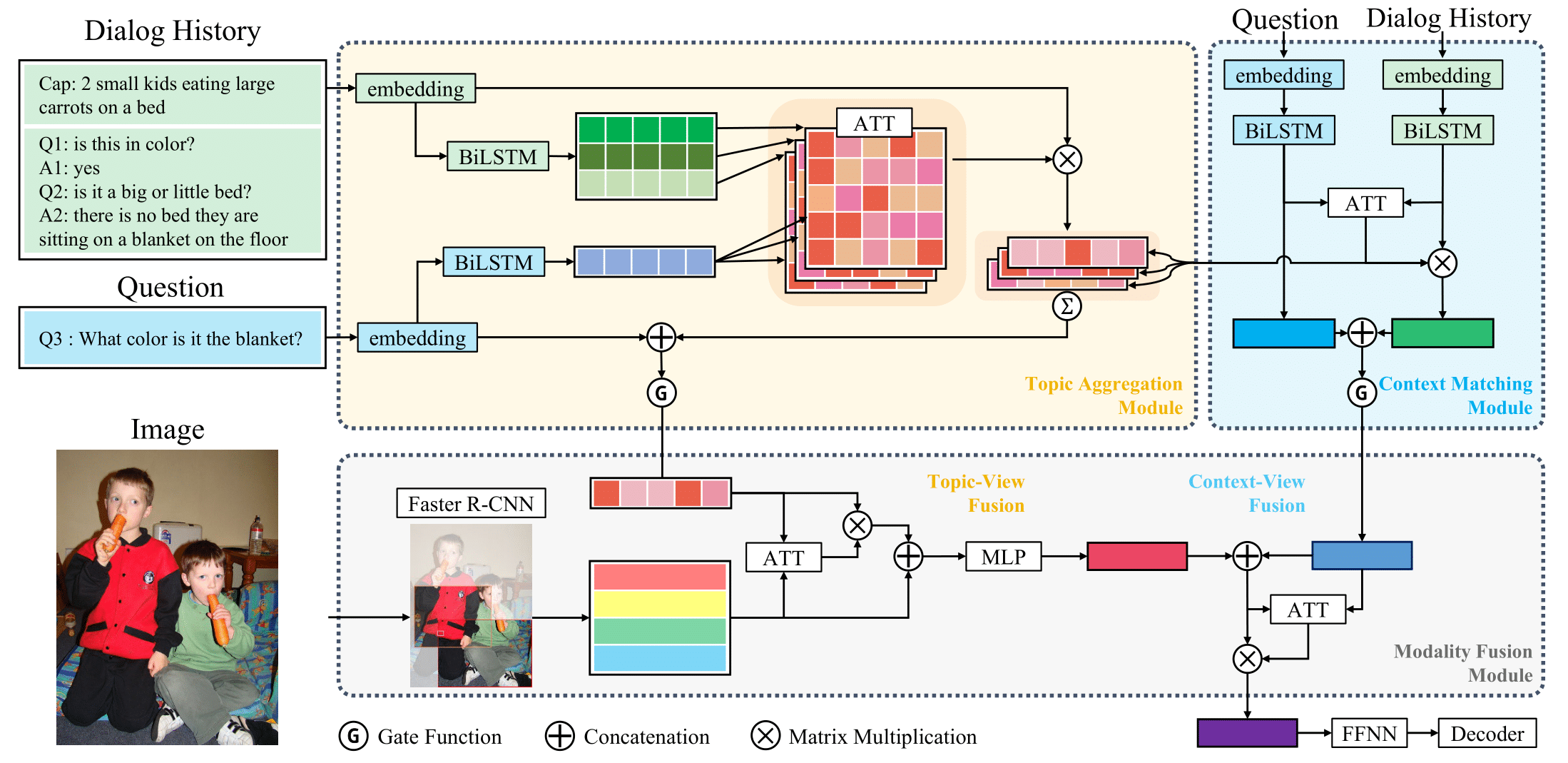

This repository implements the model described in the paper Multi-View Attention Networks for Visual Dialog:

```bibtex
@article{park2020multi,
  title={Multi-View Attention Networks for Visual Dialog},
  author={Park, Sungjin and Whang, Taesun and Yoon, Yeochan and Lim, Heuiseok},
  journal={arXiv preprint arXiv:2004.14025},
  year={2020}
}
```

This code is a reimplementation forked from batra-mlp-lab/visdial-challenge-starter-pytorch and yuleiniu/rva.

This code is implemented using PyTorch v1.3.1 and provides out-of-the-box support for CUDA 10 and cuDNN 7.

Anaconda or Miniconda is recommended for setting up this codebase.

Clone this repository and create an environment:

```shell
git clone https://www.github.com/taesunwhang/MVAN-VisDial
conda create -n mvan_visdial python=3.7

# activate the environment and install all dependencies
conda activate mvan_visdial
cd MVAN-VisDial/
pip install -r requirements.txt
```

- Download the VisDial v0.9 and v1.0 dialog JSON files from here and keep them under the `$PROJECT_ROOT/data/v0.9` and `$PROJECT_ROOT/data/v1.0` directories, respectively.
- batra-mlp-lab provides the word counts for the VisDial v1.0 train split, `visdial_1.0_word_counts_train.json`. They are used to build the vocabulary. Keep the file under the `$PROJECT_ROOT/data/v1.0` directory.
- If you wish to use preprocessed textual inputs, we provide preprocessed data here; keep it under the `$PROJECT_ROOT/data/visdial_1.0_text` directory.
- For the pre-extracted image features of VisDial v1.0 images, batra-mlp-lab provides Faster-RCNN image features pre-trained on Visual Genome. Keep them under the `$PROJECT_ROOT/data/visdial_1.0_img` directory and set the argument `img_feature_type` to `faster_rcnn_x101` in the `config/hparams.py` file.
  - `features_faster_rcnn_x101_train.h5`: Bottom-up features of 36 proposals from images of the `train` split.
  - `features_faster_rcnn_x101_val.h5`: Bottom-up features of 36 proposals from images of the `val` split.
  - `features_faster_rcnn_x101_test.h5`: Bottom-up features of 36 proposals from images of the `test` split.
- gicheonkang provides pre-extracted Faster-RCNN image features, which contain bounding box information. Set the argument `img_feature_type` to `dan_faster_rcnn_x101` in the `config/hparams.py` file.
  - `train_btmup_f.hdf5`: Bottom-up features of 10 to 100 proposals from images of the `train` split (32GB).
  - `train_imgid2idx.pkl`: `image_id` to bbox index file for the `train` split.
  - `val_btmup_f.hdf5`: Bottom-up features of 10 to 100 proposals from images of the `validation` split (0.5GB).
  - `val_imgid2idx.pkl`: `image_id` to bbox index file for the `val` split.
  - `test_btmup_f.hdf5`: Bottom-up features of 10 to 100 proposals from images of the `test` split (2GB).
  - `test_imgid2idx.pkl`: `image_id` to bbox index file for the `test` split.
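Before training, it may help to confirm that the files sit where the steps above expect them. The following is a minimal sketch, not part of the repository: the helper `find_missing_files` and the `EXPECTED_DIRS` / `EXPECTED_FILES` lists are hypothetical and built only from the paths named above. It assumes the 36-proposal `faster_rcnn_x101` features; the dialog JSON file names are not listed here, so only their directories are checked.

```python
from pathlib import Path
from typing import List

# Hypothetical checklist assembled from the paths named in this README,
# relative to $PROJECT_ROOT. Dialog JSON names are not given, so only
# their directories are checked.
EXPECTED_DIRS = ["data/v0.9", "data/v1.0"]
EXPECTED_FILES = [
    "data/v1.0/visdial_1.0_word_counts_train.json",
    # Assumes img_feature_type = faster_rcnn_x101 (36 proposals per image).
    "data/visdial_1.0_img/features_faster_rcnn_x101_train.h5",
    "data/visdial_1.0_img/features_faster_rcnn_x101_val.h5",
    "data/visdial_1.0_img/features_faster_rcnn_x101_test.h5",
]


def find_missing_files(project_root: str) -> List[str]:
    """Return the expected paths (relative to project_root) that do not exist."""
    root = Path(project_root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    import sys

    # Usage: python check_data.py /path/to/MVAN-VisDial
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for path in find_missing_files(root):
        print(f"missing: {path}")
```

If you use gicheonkang's features instead, swap the `.h5` entries for the `*_btmup_f.hdf5` and `*_imgid2idx.pkl` files in whatever directory your configuration points to.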

Download the GloVe pretrained word vectors from here, and keep `glove.6B.300d.txt` under the `$PROJECT_ROOT/data/word_embeddings/glove` directory.
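Conceptually, the word counts and the GloVe vectors fit together like this: a vocabulary is built from `visdial_1.0_word_counts_train.json`, and each vocabulary word is looked up in `glove.6B.300d.txt`, a plain-text file where every line holds a word followed by its 300 vector components. The sketch below is a hypothetical illustration of that idea, not the repository's `init_glove.py`. The function names, the special tokens, the `min_count=5` threshold, the random initialization for words without a GloVe vector, and the assumption that the word-count file is a JSON object mapping words to counts are all assumptions.

```python
import json
import random
from typing import Dict, List

# Hypothetical special tokens, placed first so they get fixed indices.
SPECIAL_TOKENS = ["<PAD>", "<S>", "</S>", "<UNK>"]


def build_vocabulary(word_counts_path: str, min_count: int = 5) -> Dict[str, int]:
    """Map each word seen at least `min_count` times to an integer index.

    Assumes the file is a JSON object of the form {"word": count, ...}.
    """
    with open(word_counts_path) as f:
        word_counts = json.load(f)
    # Keep frequent words, ordered by descending count (ties broken alphabetically).
    kept = sorted(
        (w for w, c in word_counts.items() if c >= min_count),
        key=lambda w: (-word_counts[w], w),
    )
    return {word: idx for idx, word in enumerate(SPECIAL_TOKENS + kept)}


def load_glove_matrix(
    glove_path: str, vocab: Dict[str, int], dim: int = 300, seed: int = 0
) -> List[List[float]]:
    """Return a len(vocab) x dim embedding matrix initialized from GloVe.

    Rows for words absent from the GloVe file keep a small random vector.
    """
    rng = random.Random(seed)
    matrix = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in vocab]
    with open(glove_path, encoding="utf-8") as f:
        for line in f:
            # Each line: word followed by `dim` space-separated floats.
            parts = line.rstrip().split(" ")
            word, values = parts[0], parts[1:]
            if word in vocab and len(values) == dim:
                matrix[vocab[word]] = [float(v) for v in values]
    return matrix
```

A call would look like `vocab = build_vocabulary("data/v1.0/visdial_1.0_word_counts_train.json")` followed by `load_glove_matrix("data/word_embeddings/glove/glove.6B.300d.txt", vocab)`. Streaming the GloVe file line by line keeps only the vectors for vocabulary words in memory rather than all of GloVe.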

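Then initialize the GloVe word embeddings by running:

```shell
python data/preprocess/init_glove.py
```

Train the model provided in this repository as:

```shell
python main.py --model mvan --version 1.0
```

To initialize the model from a saved checkpoint, set the argument `load_pthpath` to `/path/to/checkpoint.pth` in the `config/hparams.py` file.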

Model checkpoints are saved after every epoch. Set the argument `save_dirpath` in the `config/hparams.py` file to choose where they are written.
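Since a checkpoint is written every epoch, resuming typically means pointing `load_pthpath` at the newest file in `save_dirpath`. Below is a minimal sketch of finding it. It assumes, hypothetically, that files are named `checkpoint_<epoch>.pth`; check the actual names in your `save_dirpath` and adjust `CHECKPOINT_PATTERN` if they differ. The helper `latest_checkpoint` is not part of the repository.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed naming scheme; adjust if your checkpoints are named differently.
CHECKPOINT_PATTERN = re.compile(r"checkpoint_(\d+)\.pth")


def latest_checkpoint(save_dirpath: str) -> Optional[Path]:
    """Return the checkpoint with the highest epoch number, or None if none match."""
    best_epoch, best_path = -1, None
    for path in Path(save_dirpath).glob("*.pth"):
        match = CHECKPOINT_PATTERN.fullmatch(path.name)
        # Compare epochs numerically so that epoch 10 beats epoch 9.
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_path = int(match.group(1)), path
    return best_path
```

Parsing the epoch as an integer avoids the trap of sorting file names as strings, where `checkpoint_9.pth` would sort after `checkpoint_10.pth`.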

Evaluation of a trained model checkpoint can be done as follows:

```shell
python evaluate.py --model mvan --evaluate /path/to/checkpoint.pth --eval_split val
```

If you wish to evaluate the model on the test split, replace `--eval_split val` with `--eval_split test`.

This generates a JSON file for each split, used for evaluation on several metrics: Mean Reciprocal Rank (MRR), R@{1, 5, 10}, Mean Rank, and Normalized Discounted Cumulative Gain (NDCG).
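For reference, the retrieval metrics can be computed from the 1-based rank that the model assigns to the ground-truth answer in each round (VisDial provides 100 candidate answers per round), and NDCG from the dense relevance scores of the candidates. The sketch below follows the standard definitions, with NDCG truncated at K, the number of candidates with nonzero relevance, as in the VisDial v1.0 evaluation. It is a plain-Python illustration with hypothetical function names, not the code in `evaluate.py`.

```python
import math
from typing import Dict, Sequence


def retrieval_metrics(gt_ranks: Sequence[int]) -> Dict[str, float]:
    """Mean rank, MRR and R@{1,5,10} from 1-based ranks of ground-truth answers."""
    n = len(gt_ranks)
    metrics = {
        "mean_rank": sum(gt_ranks) / n,
        "mrr": sum(1.0 / r for r in gt_ranks) / n,
    }
    for k in (1, 5, 10):
        # Fraction of rounds whose ground-truth answer is in the top k.
        metrics[f"r@{k}"] = sum(r <= k for r in gt_ranks) / n
    return metrics


def ndcg(scores: Sequence[float], relevance: Sequence[float]) -> float:
    """NDCG for one round, truncated at K = number of candidates with relevance > 0.

    `scores` are model scores per candidate (higher is better) and
    `relevance` the dense annotations for the same candidates.
    """
    k = sum(1 for rel in relevance if rel > 0)
    if k == 0:
        return 0.0
    # Rank candidates by descending model score and keep the top K.
    ranked = sorted(range(len(scores)), key=lambda i: -scores[i])[:k]
    dcg = sum(relevance[i] / math.log2(pos + 2) for pos, i in enumerate(ranked))
    # Ideal DCG: the K largest relevance scores in the best possible order.
    ideal = sorted(relevance, reverse=True)[:k]
    idcg = sum(rel / math.log2(pos + 2) for pos, rel in enumerate(ideal))
    return dcg / idcg
```

MRR and R@k reward placing the single ground-truth answer high, while NDCG credits any answer that annotators judged relevant, which is why the two families of metrics can disagree.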

For the test split, evaluation is done on the evaluation server provided by EvalAI.