This repository provides a PyTorch implementation of the classifier's posterior GAN (CP-GAN). Given class-overlapping data, CP-GAN can learn a class-distinct and class-mutual image generator that captures between-class relationships and selectively generates an image based on its class specificity.

Note: In our other studies, we have also proposed a GAN for label noise, a GAN for image noise, and a GAN for blur, noise, and compression. Please see the list below.

- Label-noise robust GAN (rGAN) (CVPR 2019): GAN for label noise

- Noise robust GAN (NR-GAN) (CVPR 2020): GAN for image noise

- Blur, noise, and compression robust GAN (BNCR-GAN) (CVPR 2021): GAN for blur, noise, and compression

Class-Distinct and Class-Mutual Image Generation with GANs.

Takuhiro Kaneko, Yoshitaka Ushiku, and Tatsuya Harada.

In BMVC 2019 (Spotlight).


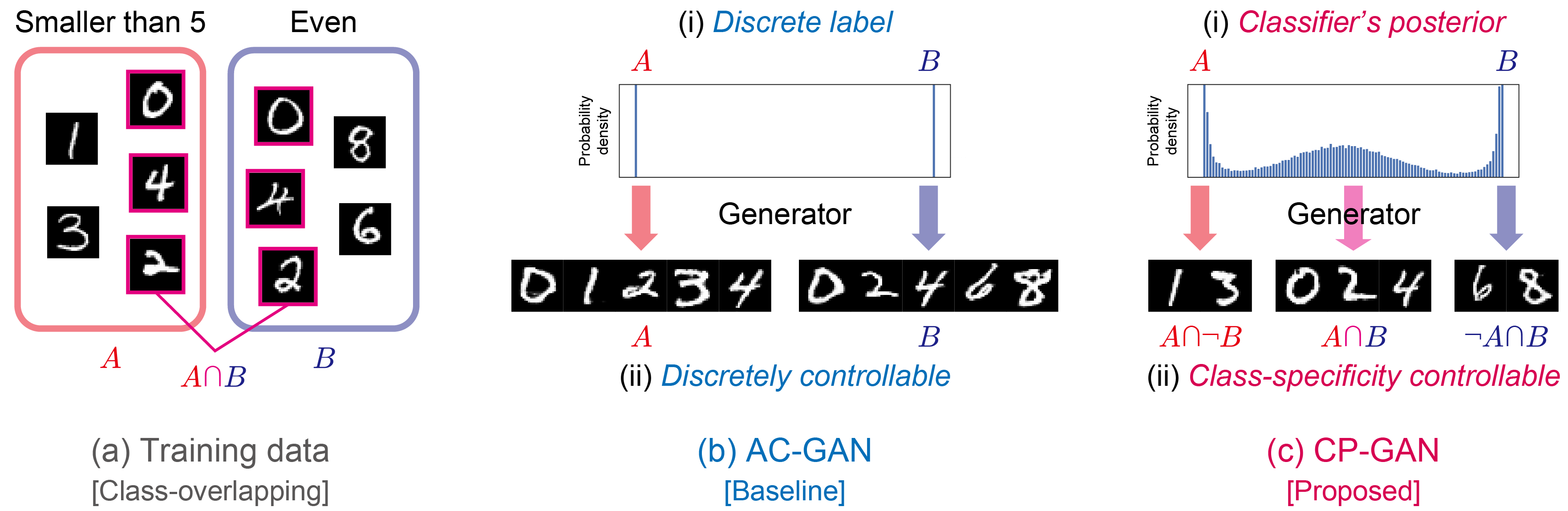

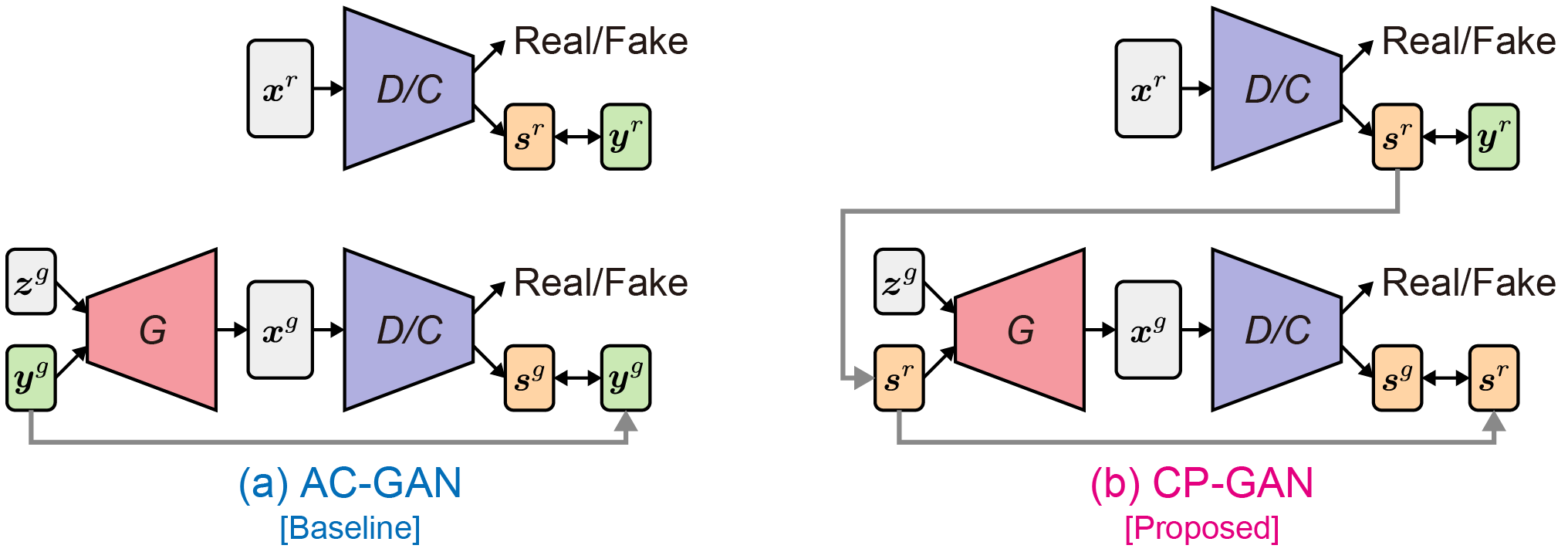

Our goal is, given class-overlapping data, to construct a class-distinct and class-mutual image generator that can selectively generate an image conditioned on the class specificity. To solve this problem, we propose CP-GAN (b), in which we redesign the generator input and the objective function of the auxiliary classifier GAN (AC-GAN) [2] (a). Specifically, we employ the classifier’s posterior to represent the between-class relationships and incorporate it into the generator input. Additionally, we optimize the generator so that the classifier’s posterior of generated data matches that of real data. This formulation allows CP-GAN to capture the between-class relationships in a data-driven manner and to generate an image conditioned on the class specificity.
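The difference between conditioning on a one-hot label (AC-GAN) and on a class posterior can be illustrated in a few lines of plain Python. This is a minimal, framework-free sketch, not the repository's code. It assumes that the generator input is the concatenation of a noise vector and a condition vector, and that posterior matching is measured as the cross-entropy between the conditioning posterior and the classifier's posterior for the generated image. The helper names (`generator_input`, `posterior_matching_loss`) and the example logits are hypothetical.

```python
import math


def softmax(logits):
    """Turn classifier logits into a posterior distribution over classes."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def generator_input(z, condition):
    """Hypothetical input layout: noise vector z followed by the class condition.

    AC-GAN-style: condition is a one-hot label such as [1.0, 0.0, 0.0].
    CP-GAN-style: condition is a class posterior such as [0.5, 0.5, 0.0].
    """
    return list(z) + list(condition)


def posterior_matching_loss(target_posterior, generated_logits, eps=1e-12):
    """Assumed generator term: cross-entropy between the conditioning posterior
    and the classifier's posterior for the generated image."""
    predicted = softmax(generated_logits)
    return -sum(t * math.log(p + eps)
                for t, p in zip(target_posterior, predicted))


if __name__ == "__main__":
    ambiguous = [5.0, 5.0, -5.0]   # classifier cannot separate classes 0 and 1
    committed = [10.0, 0.0, -5.0]  # classifier is sure the image is class 0
    mutual = [0.5, 0.5, 0.0]       # class-mutual condition (classes 0 and 1)
    one_hot = [1.0, 0.0, 0.0]      # class-distinct condition (class 0)
    for name, target in [("mutual", mutual), ("one-hot", one_hot)]:
        print(name,
              round(posterior_matching_loss(target, ambiguous), 3),
              round(posterior_matching_loss(target, committed), 3))
```

Under the mutual condition the ambiguous logits give the lower loss (about log 2), while under the one-hot condition the committed logits win. With a one-hot target the term reduces to the ordinary cross-entropy classification loss of AC-GAN; with a split target it is minimized when the classifier's posterior on the generated image reproduces that split, which is the behavior expected of a class-mutual image.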

Clone this repo:

```bash
git clone https://github.com/takuhirok/CP-GAN.git
cd CP-GAN/
```

First, install Python 3+. Then install PyTorch 1.0 and other dependencies by

```bash
pip install -r requirements.txt
```

To train a model, use the following script:

```bash
python train.py \
    --dataset [cifar10/cifar10to5/cifar7to3] \
    --trainer [acgan/cpgan] \
    --out output_directory_path
```

Please choose one of the options in the square brackets ([ ]).
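When running several configurations, a small wrapper can check the bracketed choices before launching `train.py`. The sketch below only assembles the three flags shown above (`--dataset`, `--trainer`, `--out`); the function name `build_train_command` is hypothetical and not part of the repository, and it uses the current interpreter (`sys.executable`) in place of a bare `python`.

```python
import shlex
import sys

# Choices copied from the square-bracketed options in the usage above.
DATASETS = ("cifar10", "cifar10to5", "cifar7to3")
TRAINERS = ("acgan", "cpgan")


def build_train_command(dataset, trainer, out):
    """Return the argument list for train.py, rejecting unsupported choices."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset {dataset!r}; choose from {DATASETS}")
    if trainer not in TRAINERS:
        raise ValueError(f"unknown trainer {trainer!r}; choose from {TRAINERS}")
    return [sys.executable, "train.py",
            "--dataset", dataset,
            "--trainer", trainer,
            "--out", out]


if __name__ == "__main__":
    # One command per trainer, so AC-GAN and CP-GAN can be compared side by side.
    for trainer in TRAINERS:
        cmd = build_train_command("cifar10to5", trainer,
                                  f"outputs/cifar10to5/{trainer}")
        print(shlex.join(cmd))
```

To actually start a run, the returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root; keeping it as a list avoids shell-quoting issues in output paths.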

To train CP-GAN on CIFAR-10to5, run the following:

```bash
python train.py \
    --dataset cifar10to5 \
    --trainer cpgan \
    --out outputs/cifar10to5/cpgan
```

To generate images, use the following script:

```bash
python test.py \
    --dataset [cifar10/cifar10to5/cifar7to3] \
    --g_path trained_model_path \
    --out output_directory_path
```

Please choose one of the options in the square brackets ([ ]).
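`--g_path` expects a saved generator. Judging from the file name used in the CIFAR-10to5 example (`netG_iter_100000.pth`), generator checkpoints appear to be saved as `netG_iter_<N>.pth` in the training output directory; the sketch below assumes that pattern holds for every checkpoint and picks the one with the largest iteration number. The helper `latest_generator_checkpoint` is hypothetical.

```python
import re
from pathlib import Path

# Assumed naming pattern, inferred from netG_iter_100000.pth.
CHECKPOINT_PATTERN = re.compile(r"^netG_iter_(\d+)\.pth$")


def latest_generator_checkpoint(out_dir):
    """Return the netG_iter_<N>.pth path with the largest N, or None if none exist."""
    best_iter, best_path = -1, None
    for path in Path(out_dir).iterdir():
        match = CHECKPOINT_PATTERN.match(path.name)
        # Compare iterations numerically: "100000" must beat "90000".
        if match and int(match.group(1)) > best_iter:
            best_iter, best_path = int(match.group(1)), path
    return best_path
```

Sorting file names as strings would rank `netG_iter_90000.pth` above `netG_iter_100000.pth`, which is why the iteration number is parsed as an integer. The returned path can then be passed as `--g_path`.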

To test the above-trained model on CIFAR-10to5, run the following:

python test.py \

--dataset cifar10to5 \

--g_path outputs/cifar10to5/cpgan/netG_iter_100000.pth \

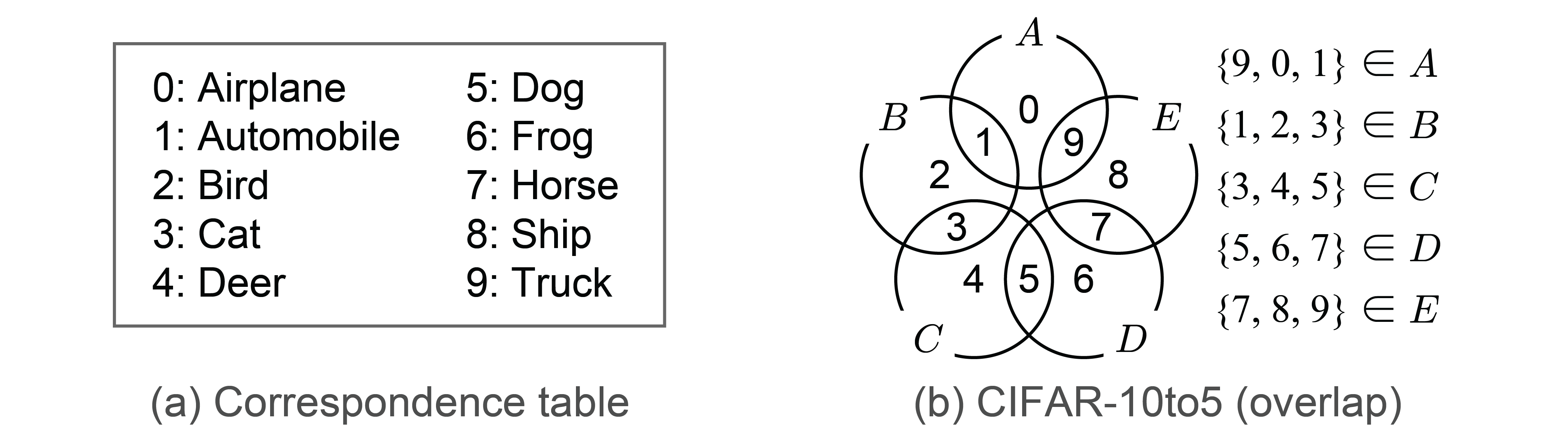

--out samplesClass-overlapping setting. The original ten classes (0,...,9; defined in (a)) are divided into five classes (A,...,E) with class overlapping, as shown in (b).
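Class overlap turns each original class into either a class-distinct state (it belongs to one new class) or a class-mutual state (it is shared by several new classes). The sketch below makes this concrete with a small hypothetical mapping of five original classes onto three new classes; it is not the actual CIFAR-10to5 assignment, which is given in the figure. Splitting the posterior evenly among the sharing classes is an illustrative assumption: CP-GAN obtains the posterior from a classifier trained on the data rather than fixing it by hand.

```python
from fractions import Fraction

NEW_CLASSES = ["A", "B", "C"]

# Hypothetical overlap: original class index -> new classes that contain it.
OVERLAP = {
    0: ["A"],        # class-distinct: only in A
    1: ["A", "B"],   # class-mutual: shared by A and B
    2: ["B"],        # class-distinct: only in B
    3: ["B", "C"],   # class-mutual: shared by B and C
    4: ["C"],        # class-distinct: only in C
}


def ideal_posterior(original_class):
    """Even split over the new classes that contain this original class."""
    members = OVERLAP[original_class]
    share = Fraction(1, len(members))
    return [share if c in members else Fraction(0) for c in NEW_CLASSES]


def is_class_mutual(original_class):
    """An original class is class-mutual if more than one new class contains it."""
    return len(OVERLAP[original_class]) > 1


if __name__ == "__main__":
    for k in sorted(OVERLAP):
        kind = "mutual" if is_class_mutual(k) else "distinct"
        print(k, kind, [str(p) for p in ideal_posterior(k)])
```

Exact fractions keep each posterior summing to exactly 1, which makes the distinct (one-hot) and mutual (split) cases easy to read off.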

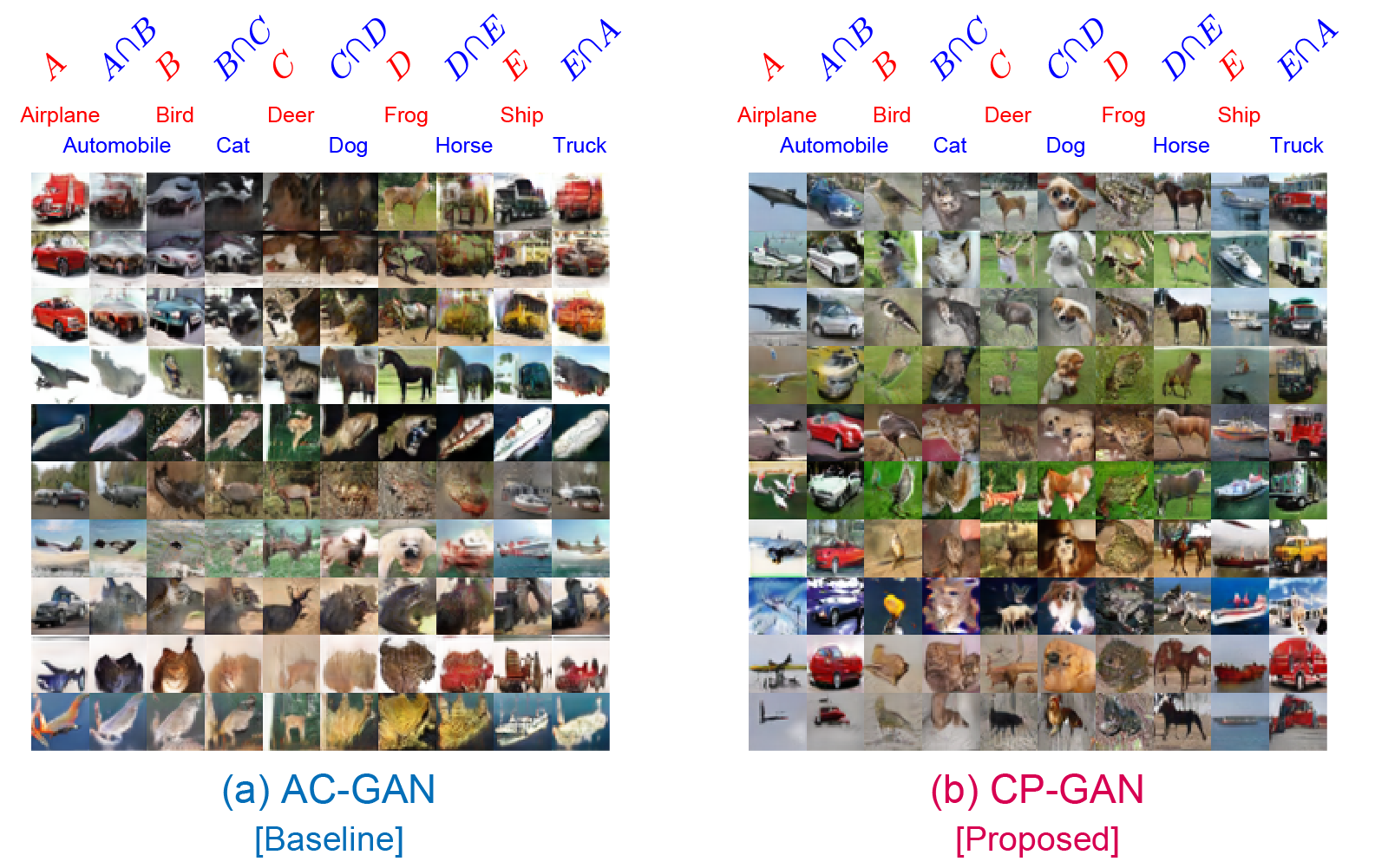

Results. Each column shows samples associated with the same class-distinct and class-mutual states: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck, respectively, from left to right. Each row includes samples generated from a fixed $z^g$ and a varied $y^g$. CP-GAN (b) succeeds in selectively generating class-distinct (red font) and class-mutual (blue font) images, whereas AC-GAN (a) fails to do so.
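The layout of the results figure (one fixed noise vector $z^g$ per row, a different condition $y^g$ per column) can be reproduced with a loop like the one below. It is a sketch only: `make_sample_grid` is a hypothetical helper, the noise is drawn from a standard normal with Python's `random` module, and the conditions are plain lists. In practice each `(z, y)` pair would be fed to the trained generator.

```python
import random


def make_sample_grid(conditions, n_rows, z_dim, seed=0):
    """Build generator inputs: each row shares one noise vector, columns vary y^g."""
    rng = random.Random(seed)  # seeded so the same grid can be regenerated
    grid = []
    for _ in range(n_rows):
        z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]  # fixed within the row
        grid.append([(list(z), list(y)) for y in conditions])
    return grid


if __name__ == "__main__":
    conditions = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # distinct, mutual, distinct
    grid = make_sample_grid(conditions, n_rows=2, z_dim=4)
    for row in grid:
        print([y for _, y in row])
```

Holding $z^g$ fixed along a row means any visual change across that row comes from the condition alone, which is what makes the class-distinct and class-mutual columns directly comparable.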

If you use this code for your research, please cite our paper.

```
@inproceedings{kaneko2019CP-GAN,
  title={Class-Distinct and Class-Mutual Image Generation with GANs},
  author={Kaneko, Takuhiro and Ushiku, Yoshitaka and Harada, Tatsuya},
  booktitle={Proceedings of the British Machine Vision Conference},
  year={2019}
}
```

1. T. Kaneko, Y. Ushiku, and T. Harada. Label-Noise Robust Generative Adversarial Networks. In CVPR, 2019.

2. A. Odena, C. Olah, and J. Shlens. Conditional Image Synthesis with Auxiliary Classifier GANs. In ICML, 2017.

3. T. Kaneko and T. Harada. Noise Robust Generative Adversarial Networks. In CVPR, 2020.

4. T. Kaneko and T. Harada. Blur, Noise, and Compression Robust Generative Adversarial Networks. In CVPR, 2021.