This repository provides a PyTorch implementation of the label-noise robust GAN (rGAN). rGAN learns a label-noise robust conditional generator that generates an image conditioned on the clean label, even when only noisily labeled images are available for training.

Note: In our other studies, we have also proposed a GAN for ambiguous labels, a GAN for image noise, and a GAN for blur, noise, and compression. Please check them out below.

- Classifier's posterior GAN (CP-GAN) (BMVC 2019): GAN for ambiguous labels

- Noise robust GAN (NR-GAN) (CVPR 2020): GAN for image noise

- Blur, noise, and compression robust GAN (BNCR-GAN) (CVPR 2021): GAN for blur, noise, and compression

Label-Noise Robust Generative Adversarial Networks.

Takuhiro Kaneko, Yoshitaka Ushiku, and Tatsuya Harada.

In CVPR 2019 (Oral).


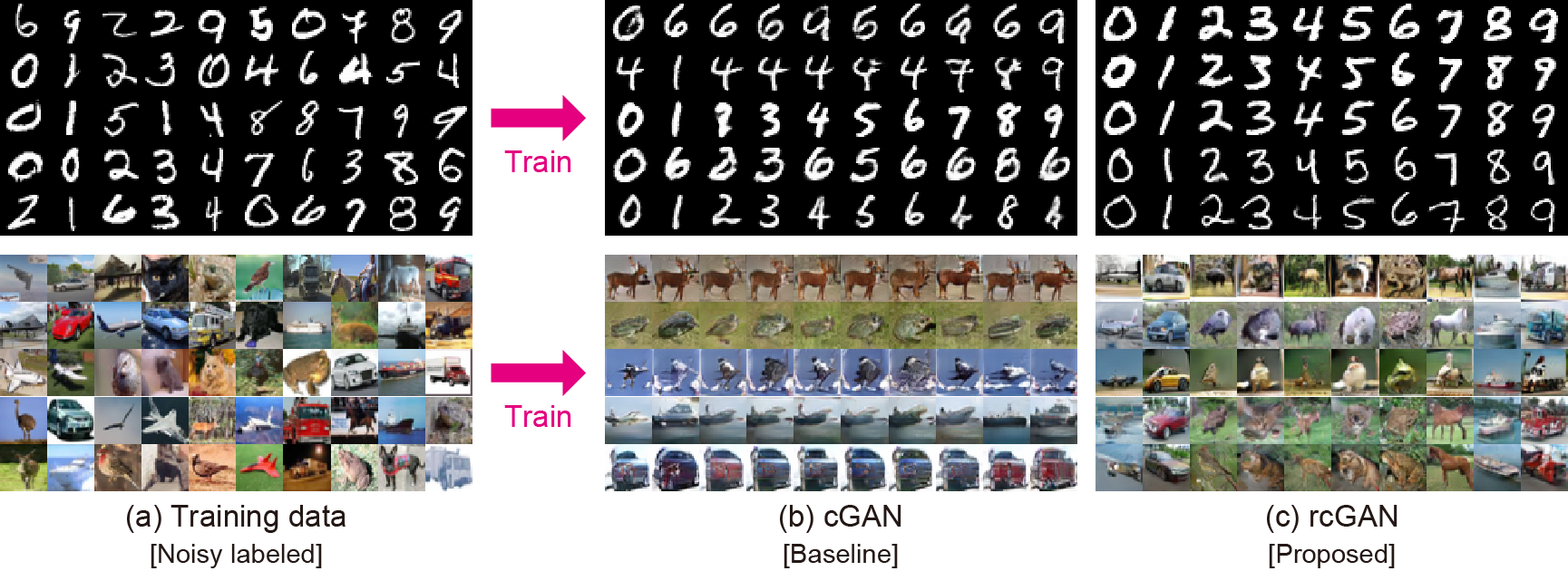

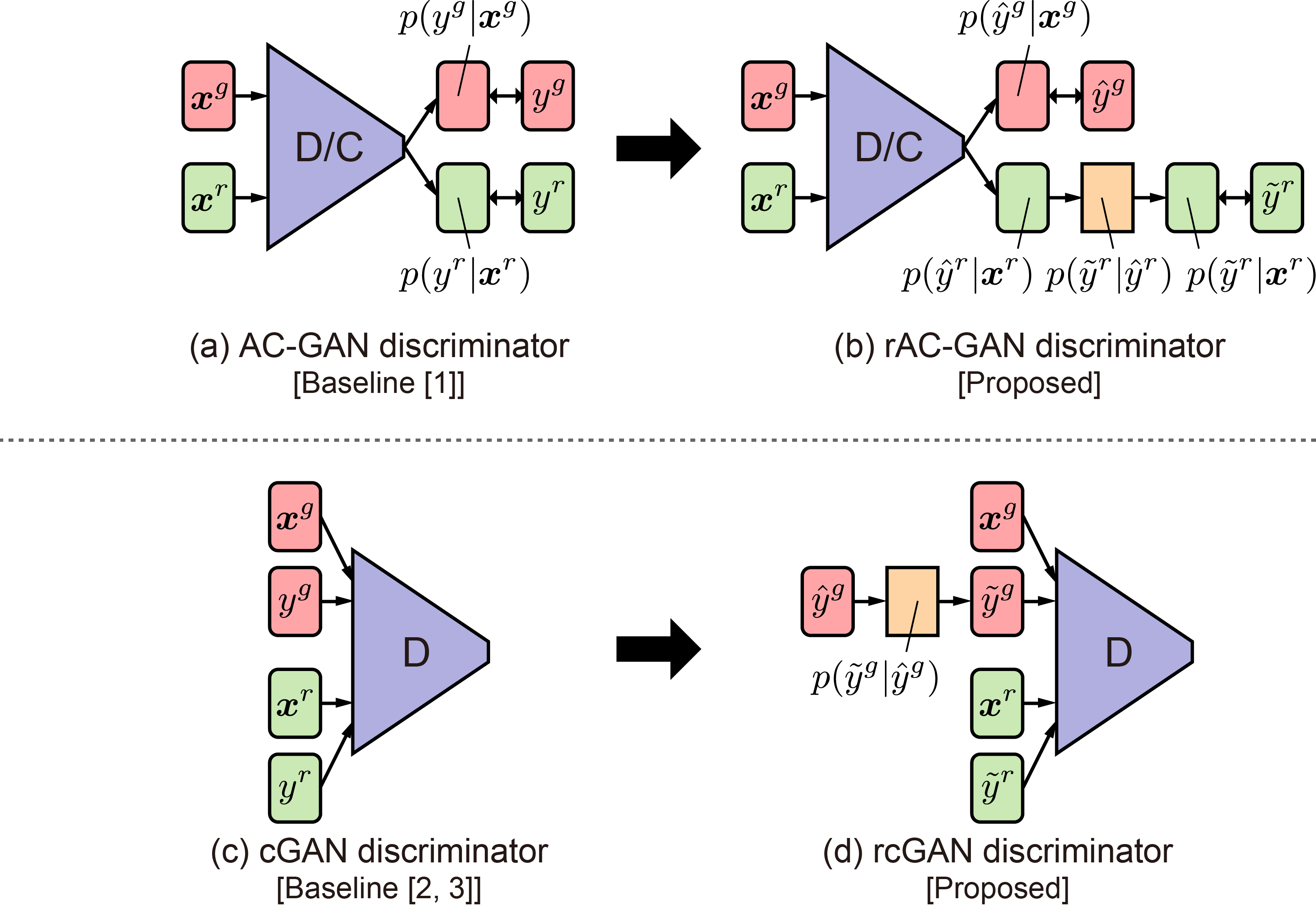

Our task is to construct, from noisily labeled images, a label-noise robust conditional generator that generates an image conditioned on the clean label rather than on the noisy label. Our main idea is to incorporate a noise transition model (shown as orange rectangles in (b) and (d)), which represents the probability that a clean label is corrupted into a noisy label, into typical class-conditional GANs. Specifically, we develop two variants, rAC-GAN (b) and rcGAN (d), which extend AC-GAN [1] (a) and cGAN [2, 3] (c), respectively.
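As a rough illustration of how a noise transition model can plug into a conditional GAN, suppose `T[i][j]` is the probability that clean label `i` is observed as noisy label `j`. In an rAC-GAN-style setup, the classifier's clean-label posterior is multiplied by `T` to obtain a noisy-label posterior, which can then be compared with the observed noisy labels; in an rcGAN-style setup, the clean label `y_g` given to the generator is corrupted by sampling from row `T[y_g]` before being paired with the generated image. This is a minimal pure-Python sketch, not this repository's code; `noisy_posterior`, `corrupt_label`, and the 3-class matrix are hypothetical.

```python
import random
from typing import List, Sequence

Matrix = List[List[float]]


def noisy_posterior(clean_posterior: Sequence[float], T: Matrix) -> List[float]:
    """rAC-GAN-style: p(noisy = j | x) = sum_i p(clean = i | x) * T[i][j]."""
    num_classes = len(T)
    return [
        sum(clean_posterior[i] * T[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]


def corrupt_label(clean_label: int, T: Matrix, rng: random.Random) -> int:
    """rcGAN-style: sample a noisy label from row T[clean_label]."""
    return rng.choices(range(len(T)), weights=T[clean_label], k=1)[0]


if __name__ == "__main__":
    # Hypothetical 3-class transition matrix; each row sums to 1.
    T = [
        [0.8, 0.1, 0.1],
        [0.1, 0.8, 0.1],
        [0.1, 0.1, 0.8],
    ]
    print(noisy_posterior([1.0, 0.0, 0.0], T))  # [0.8, 0.1, 0.1]
    rng = random.Random(0)
    print([corrupt_label(2, T, rng) for _ in range(10)])
```

Because `T` is applied only where labels meet the data, the generator itself can stay conditioned on the clean label.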

Clone this repo:

git clone https://github.com/takuhirok/rGAN.git

cd rGAN/

First, install Python 3+. Then install PyTorch 1.0 and the other dependencies with:

pip install -r requirements.txt

To train a model, use the following script:

bash ./scripts/train.sh \
    [acgan/racgan/cgan/rcgan] [sngan/wgangp/ctgan] [cifar10/cifar100] \
    [symmetric/asymmetric] [0/0.1/0.3/0.5/0.7/0.9] output_directory_path

Choose one option from each set of square brackets ([ ]).
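The `symmetric`/`asymmetric` option and the noise rate select how training labels are corrupted. The sketch below builds the corresponding transition matrices under the definitions commonly used in the label-noise literature, which are an assumption here and not confirmed from this repository's scripts: symmetric noise keeps a label with probability 1 - r and otherwise flips it uniformly to one of the other classes, while asymmetric noise flips selected classes to one fixed "similar" class with probability r. The CIFAR-10 pairs (truck to automobile, bird to airplane, deer to horse, cat and dog swapped) follow a mapping often used in prior work; `symmetric_T`, `asymmetric_T`, and `CIFAR10_ASYM_PAIRS` are hypothetical names.

```python
from typing import Dict, List

Matrix = List[List[float]]

# CIFAR-10 class indices: 0 airplane, 1 automobile, 2 bird, 3 cat, 4 deer,
# 5 dog, 6 frog, 7 horse, 8 ship, 9 truck.
# Assumed "similar class" flips; not taken from this repository.
CIFAR10_ASYM_PAIRS: Dict[int, int] = {9: 1, 2: 0, 4: 7, 3: 5, 5: 3}


def symmetric_T(num_classes: int, rate: float) -> Matrix:
    """Keep the label w.p. 1 - rate; otherwise flip uniformly to another class."""
    off = rate / (num_classes - 1)
    return [
        [1.0 - rate if i == j else off for j in range(num_classes)]
        for i in range(num_classes)
    ]


def asymmetric_T(num_classes: int, rate: float, pairs: Dict[int, int]) -> Matrix:
    """Flip class i to pairs[i] w.p. rate; classes not in `pairs` stay clean."""
    T = [[1.0 if i == j else 0.0 for j in range(num_classes)]
         for i in range(num_classes)]
    for src, dst in pairs.items():
        T[src][src] = 1.0 - rate
        T[src][dst] = rate
    return T
```

For example, `symmetric_T(10, 0.5)` keeps each CIFAR-10 label with probability 0.5 and spreads the remaining 0.5 evenly over the other nine classes, and a noise rate of 0 yields the identity matrix (clean labels).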

To train rcSN-GAN (rcGAN + SN-GAN) on CIFAR-10 with symmetric noise at a noise rate of 0.5, run the following:

bash ./scripts/train.sh rcgan sngan cifar10 symmetric 0.5 outputs

To train rAC-CT-GAN (rAC-GAN + CT-GAN) on CIFAR-100 with asymmetric noise at a noise rate of 0.7, run the following:

bash ./scripts/train.sh racgan ctgan cifar100 asymmetric 0.7 outputs

To generate images, use the following script:

python test.py --g_path trained_model_path --out output_directory_path

For example:

python test.py --g_path outputs/netG_iter_100000.pth --out samples

Note: In our paper, we conducted early stopping based on the FID because some models collapsed before training finished.
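One way to read the early-stopping note is as choosing, among the saved generator checkpoints, the one with the lowest FID instead of the last one. Below is a minimal sketch under that assumption; the FID scores would come from a separate evaluation that is not shown, and `select_checkpoint` and its `patience` parameter are hypothetical, not part of this repository.

```python
from typing import Dict, Optional, Tuple


def select_checkpoint(
    fid_by_iter: Dict[int, float], patience: Optional[int] = None
) -> Tuple[int, float]:
    """Return (iteration, FID) of the best checkpoint; lower FID is better.

    Checkpoints are visited in iteration order. If `patience` is given, the
    scan stops after that many consecutive evaluations without improvement,
    which mimics stopping once a model starts to collapse.
    """
    if not fid_by_iter:
        raise ValueError("no checkpoints to choose from")
    best_iter, best_fid = -1, float("inf")
    since_best = 0
    for it in sorted(fid_by_iter):
        fid = fid_by_iter[it]
        if fid < best_fid:
            best_iter, best_fid = it, fid
            since_best = 0
        else:
            since_best += 1
            if patience is not None and since_best >= patience:
                break
    return best_iter, best_fid
```

The returned iteration can then be mapped to the corresponding saved generator file (for example, one named like `netG_iter_100000.pth`) and passed to `test.py` via `--g_path`.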

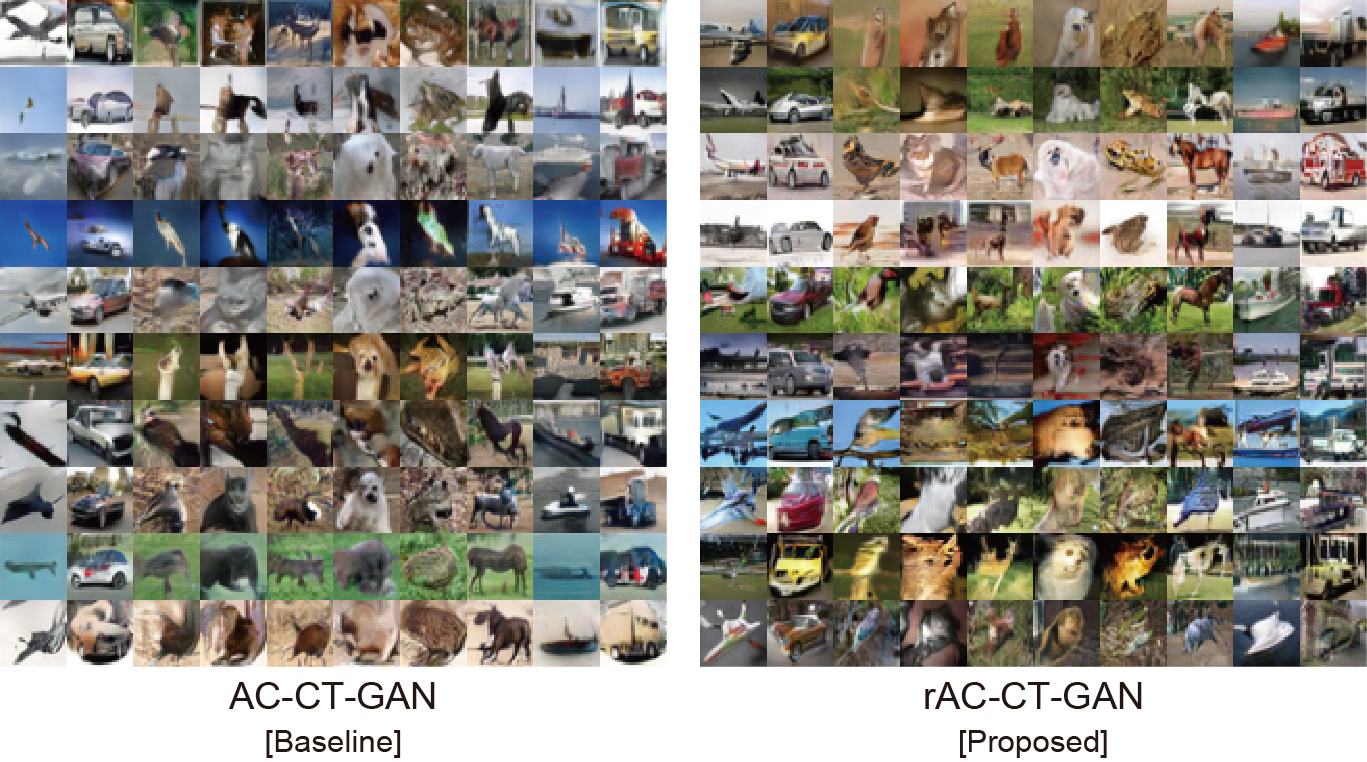

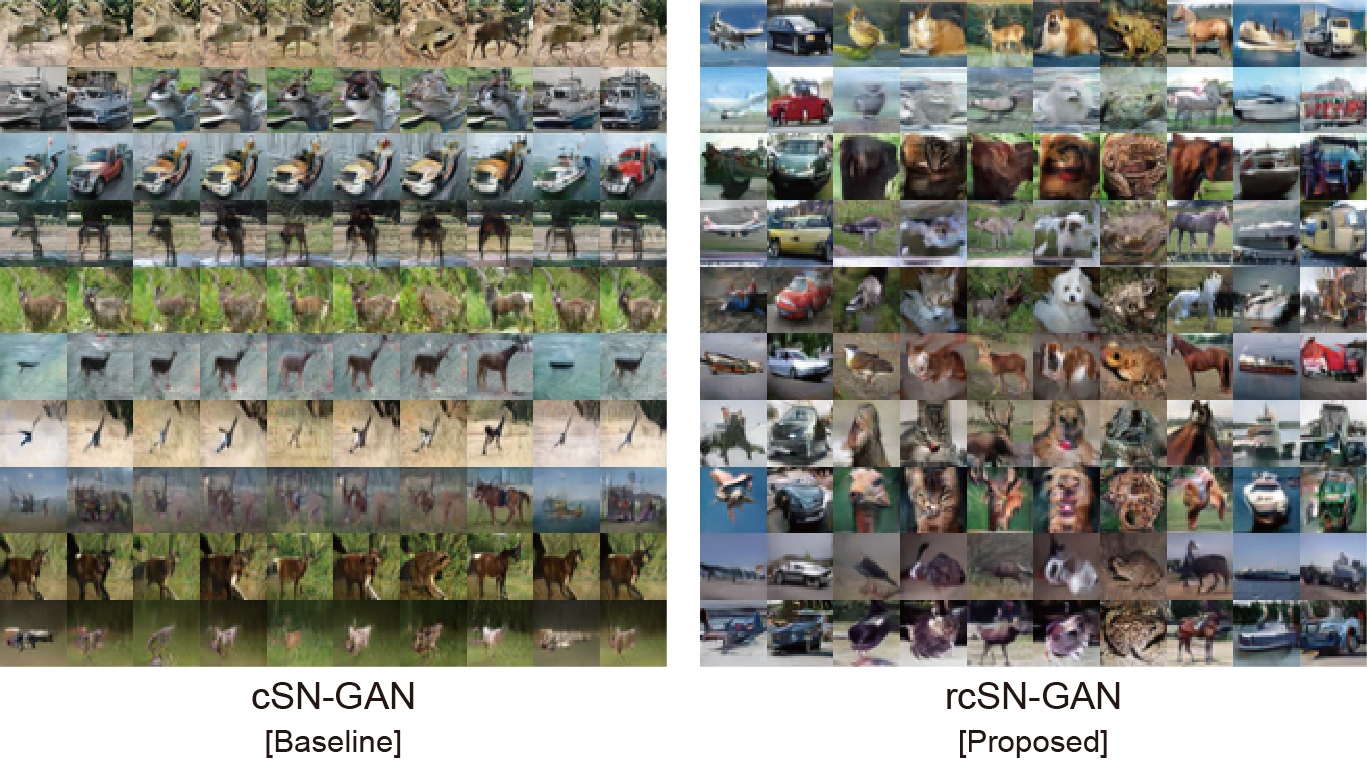

In each image block, each column shows samples of one class; from left to right: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. Each row contains samples generated from a fixed z while varying the class label y_g.
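The grid layout above corresponds to building the generator inputs in row-major order: one latent vector per row, reused across all columns, with the class label set to the column index. The pure-Python sketch below only constructs these `(z, y_g)` pairs; `make_grid_inputs` is a hypothetical helper, the standard-normal prior is an assumption, and in practice the latent dimension must match the trained generator and the pairs would be converted to tensors before being fed to it.

```python
import random
from typing import List, Tuple

CIFAR10_CLASSES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]


def make_grid_inputs(
    num_rows: int, num_classes: int, z_dim: int, seed: int = 0
) -> List[Tuple[List[float], int]]:
    """Return (z, y_g) pairs in row-major order for a num_rows x num_classes grid.

    Row r uses one latent vector z_r, sampled once from N(0, 1) and shared by
    every column; column c uses class label y_g = c, so within a row only the
    label changes.
    """
    rng = random.Random(seed)
    inputs = []
    for _ in range(num_rows):
        z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
        for label in range(num_classes):
            # The same z list is reused (not copied) across the row.
            inputs.append((z, label))
    return inputs
```

For instance, `make_grid_inputs(num_rows=4, num_classes=len(CIFAR10_CLASSES), z_dim=128)` would produce 40 pairs for a 4 x 10 block, where 128 is only an assumed latent size.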

If you use this code for your research, please cite our paper.

@inproceedings{kaneko2019rGAN,
  title={Label-Noise Robust Generative Adversarial Networks},
  author={Kaneko, Takuhiro and Ushiku, Yoshitaka and Harada, Tatsuya},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2019}
}

Note: Kiran Koshy Thekumparampil, Ashish Khetan, Zinan Lin, and Sewoong Oh independently published a paper [4] on the same problem. They use ideas similar to ours, albeit with a different architecture. You should also check out their awesome work at https://arxiv.org/abs/1811.03205.

- [1] A. Odena, C. Olah, and J. Shlens. Conditional Image Synthesis with Auxiliary Classifier GANs. In ICML, 2017.

- [2] M. Mirza and S. Osindero. Conditional Generative Adversarial Nets. arXiv preprint arXiv:1411.1784, 2014.

- [3] T. Miyato and M. Koyama. cGANs with Projection Discriminator. In ICLR, 2018.

- [4] K. K. Thekumparampil, A. Khetan, Z. Lin, and S. Oh. Robustness of Conditional GANs to Noisy Labels. In NeurIPS, 2018.

- [5] T. Kaneko, Y. Ushiku, and T. Harada. Class-Distinct and Class-Mutual Image Generation with GANs. In BMVC, 2019.

- [6] T. Kaneko and T. Harada. Noise Robust Generative Adversarial Networks. In CVPR, 2020.

- [7] T. Kaneko and T. Harada. Blur, Noise, and Compression Robust Generative Adversarial Networks. In CVPR, 2021.