- Kaggle: How to use LightGBM with lightgbm.cv

- Python API

- ROC AUC score

- 1st place solution

- 2nd place solution

- Many good techniques (Japanese)

- Feather package

- Encoding for categorical values

- The important thing is a good set of smart features and a diverse set of base algorithms.

- A lot of features based on division and subtraction from the application_train.csv

- The most notable division was by EXT_SOURCE_3

- The most important features that I engineered, in descending order of importance (measured by gain in the LGBM model)
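The division and subtraction features mentioned in these notes could be sketched roughly as follows. This is only an illustration: the column names are taken from application_train.csv, but the specific combinations, the output feature names, and the helpers `safe_div` and `add_ratio_features` are assumptions, not the features the original solutions used. Plain dictionaries are used to keep the sketch dependency-free; with a data frame the same idea is simple column arithmetic.

```python
from typing import Optional


def safe_div(numerator: Optional[float], denominator: Optional[float]) -> Optional[float]:
    """Return numerator / denominator, or None if a value is missing or the denominator is 0."""
    if numerator is None or denominator is None or denominator == 0:
        return None
    return numerator / denominator


def add_ratio_features(row: dict) -> dict:
    """Return a copy of one application row with illustrative ratio/difference features."""
    out = dict(row)
    credit = row.get("AMT_CREDIT")
    annuity = row.get("AMT_ANNUITY")
    income = row.get("AMT_INCOME_TOTAL")
    ext3 = row.get("EXT_SOURCE_3")

    # Hypothetical feature names; the notes only say that division by EXT_SOURCE_3 was notable.
    out["CREDIT_DIV_ANNUITY"] = safe_div(credit, annuity)
    out["CREDIT_DIV_EXT_SOURCE_3"] = safe_div(credit, ext3)
    out["INCOME_MINUS_ANNUITY"] = (
        None if income is None or annuity is None else income - annuity
    )
    return out


row = {
    "AMT_CREDIT": 400000.0,
    "AMT_ANNUITY": 20000.0,
    "AMT_INCOME_TOTAL": 150000.0,
    "EXT_SOURCE_3": 0.5,
}
features = add_ratio_features(row)
```

Missing values are passed through as `None` rather than filled, so that a tree model such as LightGBM can treat them as missing.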

- Understand the data structure and the column descriptions, and manage the features

- Use Feather files

- Do feature engineering in script files

- How to select features: use LGBM importance?

- Use the predicted values of such a regression as features
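One common way to use regression predictions as features without leaking the target is to generate them out of fold: each row is predicted by a model fitted only on the other folds. The notes do not say which regression or which validation scheme was meant, so the sketch below is an assumption throughout. It uses a one-variable least-squares line and a simple modulo fold split, and the names `fit_line` and `out_of_fold_predictions` are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        # All x values are equal: fall back to predicting the mean of y.
        return my, 0.0
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def out_of_fold_predictions(xs, ys, n_folds=3):
    """Predict every row with a line fitted on the other folds only."""
    n = len(xs)
    oof = [None] * n
    for fold in range(n_folds):
        held_out = [i for i in range(n) if i % n_folds == fold]
        train = [i for i in range(n) if i % n_folds != fold]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        for i in held_out:
            oof[i] = a + b * xs[i]
    return oof


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
oof_feature = out_of_fold_predictions(xs, ys)  # one new feature value per row
```

The resulting `oof_feature` column can be appended to the training table; for the test set, the usual choice is to predict with a model fitted on all training rows.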

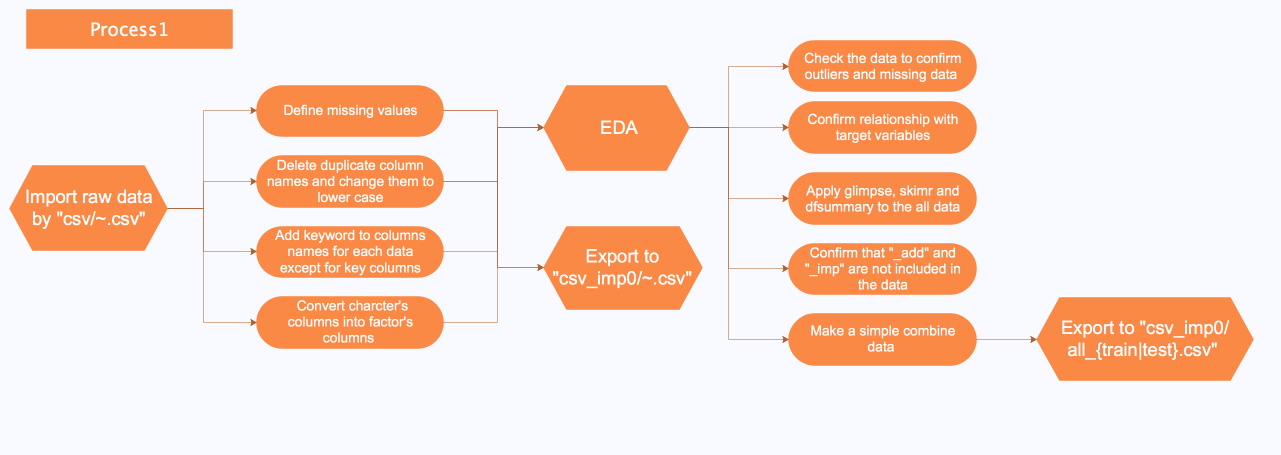

- 0_EDA.Rmd: Quick check of the data and search for problems

- 1_Preprocess_app.Rmd: Preprocessing for application_{train|test}.csv

- 1_Preprocess_bureau.Rmd: Preprocessing for bureau.csv and bureau_balance.csv (not changed)

- 1_Preprocess_pre_app.Rmd: Preprocessing for previous_application.csv (not changed)

- 1_Preprocess_ins_pay.Rmd: Preprocessing for installments_payments.csv (not changed)

- 1_Preprocess_pos_cash.Rmd: Preprocessing for POS_CASH_balance.csv (not changed)

- 1_Preprocess_credit.Rmd: Preprocessing for credit_card_balance.csv (not changed)

- 2_Combine.Rmd: Combining all data and checking the combined data (not changed)

- 3_XGBoost.Rmd: Build the XGBoost model, predict, make a submission file, search for the best features, tune parameters (not changed)

- LightGBM.ipynb: lightgbm, cross validation, predict

- NeuralNetwork.ipynb: neural network, predict

- function.R: Describes the functions in detail

- makedummies.R: Converts factor values to dummy variables

- file_name + submit_date.csv

- raw data

- {...}.csv: Basic preprocessing applied

- all_{train|test}.csv: Combine all tables

- {...}_imp.csv: Missing values imputed, features extracted

- all_{train|test}.csv: Combine all tables

- best_para.tsv: Record of the best features

- score_sheet.tsv: train auc, test auc, LB score

- FlowChart.eddx, FlowChart.png: Flowchart illustrating the process

- about_column.numbers: Explains all table columns
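Since score_sheet.tsv tracks AUC values, it may help to recall what the metric measures: the probability that a randomly chosen positive row is scored higher than a randomly chosen negative one. The dependency-free sketch below computes it from ranks, with ties counting as one half. The function name `roc_auc` is hypothetical and is not part of this repository; in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
def roc_auc(labels, scores):
    """Rank-based AUC: P(score of a positive > score of a negative), ties count 0.5."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        # Find the end of the group of tied scores starting at position i.
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative label")

    # Mann-Whitney U statistic of the positives, normalised to [0, 1].
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])  # 0.75
```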

├── Home_Credit_Kaggle.Rproj
├── README.md
├── Rmd
│   ├── 0_EDA.Rmd
│   ├── 1_Preprocess_app.Rmd
│   ├── 1_Preprocess_app.html
│   ├── 1_Preprocess_bureau.Rmd
│   ├── 1_Preprocess_credit.Rmd
│   ├── 1_Preprocess_ins_pay.Rmd
│   ├── 1_Preprocess_pos_cash.Rmd
│   ├── 1_Preprocess_pre_app.Rmd
│   ├── 2_Combine.Rmd
│   └── 3_XGBoost.Rmd
├── input
│   ├── csv
│   │   ├── HomeCredit_columns_description.csv
│   │   ├── POS_CASH_balance.csv
│   │   ├── application_test.csv
│   │   ├── application_train.csv
│   │   ├── bureau.csv
│   │   ├── bureau_balance.csv
│   │   ├── credit_card_balance.csv
│   │   ├── installments_payments.csv
│   │   ├── previous_application.csv
│   │   └── sample_submission.csv
│   ├── csv_imp0
│   │   ├── all_data_test.csv
│   │   ├── all_data_train.csv
│   │   ├── POS_CASH_balance.csv
│   │   ├── application_test.csv
│   │   ├── application_train.csv
│   │   ├── bureau.csv
│   │   ├── bureau_balance.csv
│   │   ├── credit_card_balance.csv
│   │   ├── installments_payments.csv
│   │   └── previous_application.csv
│   └── csv_imp1
│       ├── all_data_test.csv
│       ├── all_data_train.csv
│       ├── application_test_imp.csv
│       ├── application_train_imp.csv
│       └── credit_card_balance_imp.csv
├── data
│   ├── best_para.tsv
│   ├── best_para_old_100.tsv
│   ├── about_column.numbers
│   ├── FlowChart.eddx
│   ├── FLowChart.png
│   └── score_sheet.tsv
├── jn
│   ├── LightGBM.ipynb
│   └── NeuralNetwork.ipynb
├── py
│   ├──
│   └──
├── submit
└── script
    ├── function.R
    └── makedummies.R