Author: Talip Ucar (ucabtuc@gmail.com)

PyTorch implementation of "Representation Learning with Contrastive Predictive Coding" (https://arxiv.org/pdf/1807.03748.pdf).

Source: https://arxiv.org/pdf/1807.03748.pdf

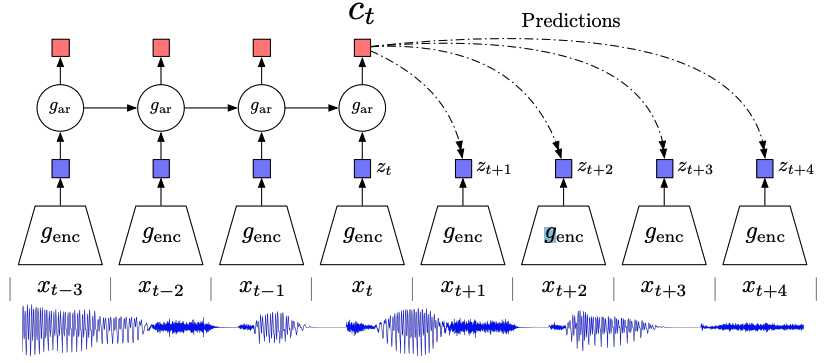

The model consists of two networks:

- A CNN-based encoder that generates a representation z_t from a given sequence (such as audio)

- A GRU-based autoregressive model that generates the context c_t from z_≤t

Thus, the overall forward pass can be described in three steps: i) generating representations, ii) generating the context, and iii) making predictions.

i) z_{0:t+s} = Encoder(x_{0:t+s})

ii) c_t = GRU(z_{≤t})

iii) q_{t+i} = W_i c_t for each future time step i = 1, ..., s
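The three steps above can be sketched in PyTorch as follows. This is a minimal illustration, not the repository's code: the class name `TinyCPC`, the single-layer stand-in encoder, and all sizes are assumptions chosen to keep the example small.

```python
import torch
import torch.nn as nn


class TinyCPC(nn.Module):
    """Illustrative CPC forward pass; names and sizes are assumptions."""

    def __init__(self, z_dim=16, c_dim=32, steps=3):
        super().__init__()
        # Stand-in encoder: one strided Conv1d instead of the full CNN.
        self.encoder = nn.Sequential(
            nn.Conv1d(1, z_dim, kernel_size=10, stride=5, padding=3),
            nn.ReLU(),
        )
        self.gru = nn.GRU(z_dim, c_dim, batch_first=True)
        # One linear map W_i per future step i = 1..s.
        self.predictors = nn.ModuleList(
            [nn.Linear(c_dim, z_dim, bias=False) for _ in range(steps)]
        )
        self.steps = steps

    def forward(self, x):
        # i) z_{0:t+s} = Encoder(x_{0:t+s}), reshaped to (batch, frames, z_dim)
        z = self.encoder(x).transpose(1, 2)
        t = z.size(1) - self.steps - 1  # index of the last "past" frame
        # ii) c_t = GRU(z_{<=t}); keep the GRU output at the last past frame
        out, _ = self.gru(z[:, : t + 1])
        c_t = out[:, -1]
        # iii) q_{t+i} = W_i c_t for i = 1..s, stacked to (batch, steps, z_dim)
        q = torch.stack([w(c_t) for w in self.predictors], dim=1)
        # Encoder outputs at the same future steps, used as targets
        z_future = z[:, t + 1 : t + 1 + self.steps]
        return q, z_future


model = TinyCPC()
x = torch.randn(4, 1, 400)  # 4 random "audio" clips of 400 samples each
q, z_future = model(x)
print(q.shape, z_future.shape)
```

The predictions `q` and the targets `z_future` have the same shape, so they can be compared step by step in the training objective.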

Training Objective:

The objective is to maximize the similarity between corresponding q_{t+i} and z_{t+i} for each i = 1, ..., s by maximizing the diagonal of

log(Softmax(z^T q)), where q = [q_{t+1} q_{t+2} .. q_{t+s}]^T and z = [z_{t+1} z_{t+2} .. z_{t+s}].
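A minimal sketch of this objective in PyTorch is shown below. The function name `cpc_loss`, the tensor layout, and the choice to apply the softmax across the s future steps of each sample are assumptions for illustration; the repository may draw negatives differently (for example, across the batch).

```python
import torch
import torch.nn.functional as F


def cpc_loss(q, z):
    """Negative mean of the diagonal of log-softmax over target/prediction scores.

    q: (batch, s, dim) predictions q_{t+1..t+s}
    z: (batch, s, dim) encoder targets z_{t+1..t+s}
    """
    # scores[b, i, j] = dot product of target z_{t+i} and prediction q_{t+j}
    scores = torch.bmm(z, q.transpose(1, 2))  # (batch, s, s)
    # Softmax over predictions j for each target i (assumed axis)
    log_probs = F.log_softmax(scores, dim=-1)
    # Matching pairs (i == j) lie on the diagonal
    diag = torch.diagonal(log_probs, dim1=1, dim2=2)  # (batch, s)
    # Maximizing the diagonal is the same as minimizing its negative mean
    return -diag.mean()


q = torch.randn(4, 3, 16)
z = torch.randn(4, 3, 16)
print(cpc_loss(q, z).item())
```

The loss approaches zero when each prediction scores much higher with its own target than with the other targets.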

A custom CNN-based encoder model is provided, and its architecture is defined in the model's YAML file ("./config/cpc.yaml").

Example:

    conv_dims:
      - [  1, 512, 10, 5, 3, 1]  # i=input channel, o=output channel, k = kernel, s = stride, p = padding, d = dilation
      - [512, 512,  8, 4, 2, 1]  # [i, o, k, s, p, d]
      - [512, 512,  4, 2, 1, 1]  # [i, o, k, s, p, d]
      - [512, 512,  4, 2, 1, 1]  # [i, o, k, s, p, d]
      - [512, 512,  4, 2, 1, 1]  # [i, o, k, s, p, d]
      - 512

conv_dims defines the first five convolutional layers as well as the feature dimension of the encoder output (the last entry). You can change this architecture by modifying it in the YAML file. These dimensions are chosen to give a down-sampling factor of 160 (the product of the strides, 5 x 4 x 2 x 2 x 2), which yields one feature vector for every 10 ms of 16 kHz speech. This is also the rate of the phoneme sequence labels obtained for the LibriSpeech dataset.
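The down-sampling arithmetic can be checked with a few lines of plain Python. This is only a sketch: the helper `conv_out_len` and the example input length of 20480 samples are assumptions for illustration, and the formula is the standard output-length formula for a 1-D convolution.

```python
from math import prod

# [in_channels, out_channels, kernel, stride, padding, dilation], as in the example above
conv_dims = [
    [1, 512, 10, 5, 3, 1],
    [512, 512, 8, 4, 2, 1],
    [512, 512, 4, 2, 1, 1],
    [512, 512, 4, 2, 1, 1],
    [512, 512, 4, 2, 1, 1],
]


def conv_out_len(length, k, s, p, d):
    # Standard output-length formula for a 1-D convolution.
    return (length + 2 * p - d * (k - 1) - 1) // s + 1


# Overall down-sampling factor is the product of the strides.
downsampling = prod(layer[3] for layer in conv_dims)

sample_rate = 16_000  # LibriSpeech audio is sampled at 16 kHz
frame_ms = 1000 * downsampling / sample_rate

# Push an example input length (assumed, a multiple of 160) through all layers.
length = 20_480
for _, _, k, s, p, d in conv_dims:
    length = conv_out_len(length, k, s, p, d)

print(downsampling, frame_ms, length)
```

If you edit `conv_dims` in the YAML file, the same calculation shows how the frame rate of the features changes.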

The following datasets are supported:

- LibriSpeech (train-clean-100)

- TODO: include a test set for LibriSpeech

It requires Python 3.8. You can set up the environment in three steps:

- Install pipenv using pip

- Activate the virtual environment

- Install the required packages

Run the following commands, in order, to set up the environment:

pip install pipenv # If it is not installed yet

pipenv shell # Activate virtual environment

pipenv install --skip-lock # Install required packages. --skip-lock is optional and is used to skip generating the Pipfile.lock file

You can train the model once you have downloaded the LibriSpeech dataset (train-clean-100) and placed it under the "./data" folder. More datasets will be supported in the future.

- The model is evaluated using LibriSpeech phone and speaker classification.

- The results are reported on both the training and test sets.

- The same evaluation as above, using a randomly initialized (untrained) model.

- Fully supervised training on the same tasks, using the same model architecture.

Results at the end of training are saved under the "./results" directory. The results directory structure is:

results

|-evaluation

|-training

|-model

|-plots

|-loss

You can save evaluation results under the "evaluation" folder. At the moment, evaluation results are only printed to the screen and are not saved.

To train the model on the LibriSpeech dataset, use the following command:

python 0_train.py

Once you have a trained model, you can evaluate the model using:

python 1_eval.py

For further details on the available arguments (or to add your own), see "/utils/arguments.py".

MLflow is used to track experiments. It is turned off by default, but can be turned on by changing the corresponding option in the runtime config file, "./config/runtime.yaml".