Dialog is an app that simplifies RAG deployments for programmers who are interested in AI but have little experience with API development. It uses modern frameworks for web and LLM interaction, letting you spend less time coding and more time training your model.

This repository serves as an API focused on letting you deploy any LLM you want, based on the structure provided by dialog-lib.

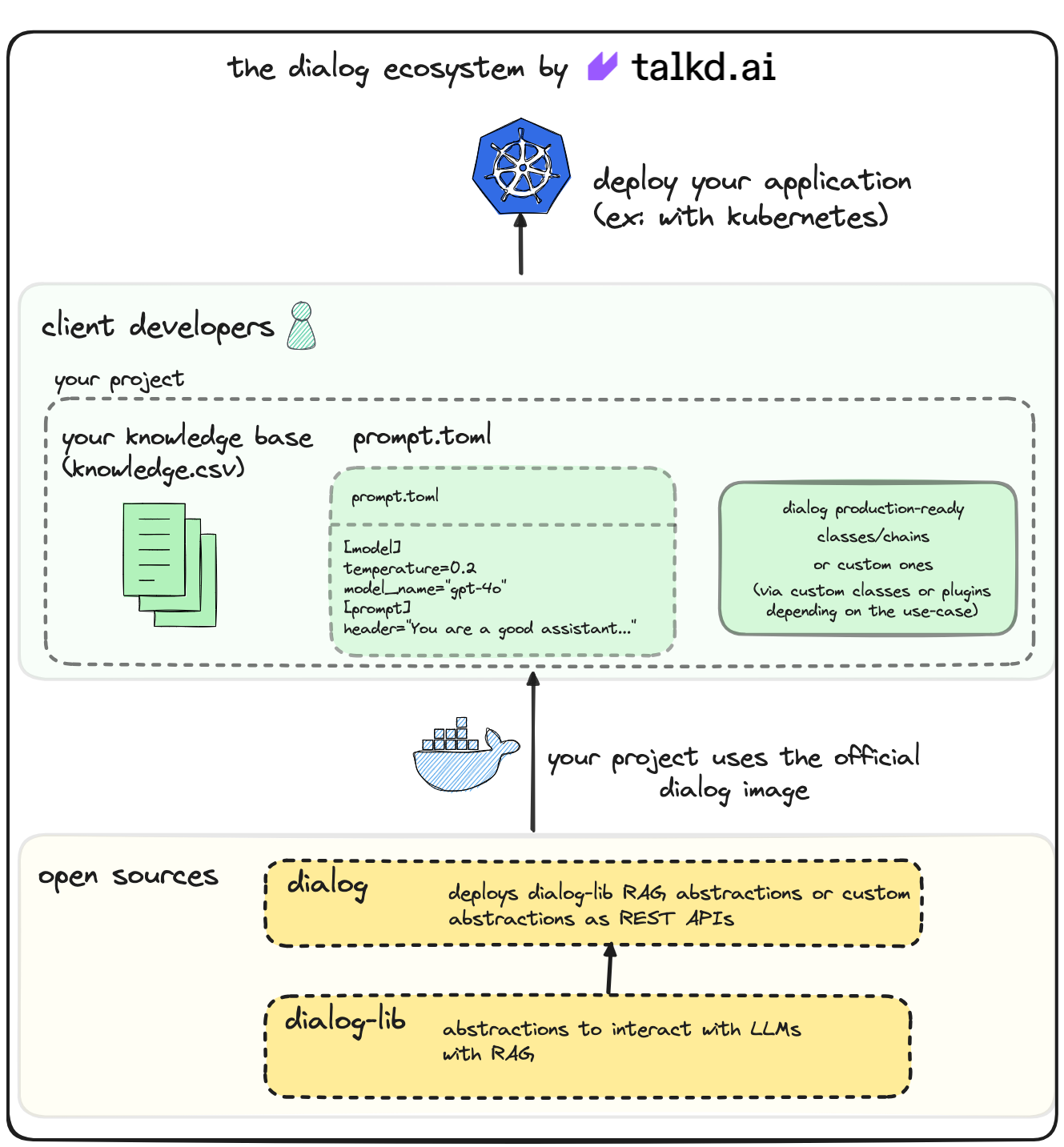

We started by focusing on humanizing RAGs (keeping the scope of answers tightly delimited and making them sound human), and we are now expanding to broader approaches for improving RAG deployment and maintenance for everyone. Check out our current architecture below and, for more information, check our documentation!

We assume you are familiar with Docker. If you are not, this amazing video tutorial will help you get started. For a more detailed walkthrough of the setup, follow the Quick Start section of our docs.

To run the project for the first time, you need to have Docker and Docker Compose installed on your machine. If you don't have them, follow the instructions on the Docker website.
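As a quick check of the prerequisites above, the sketch below reports which Compose command is available. The helper name `compose_cmd` is hypothetical and not part of this repository. It assumes that Compose is installed either as the standalone `docker-compose` binary used throughout this README or as the `docker compose` plugin that ships with newer Docker releases.

```shell
# Print the Compose command available on this machine, or fail if none is found.
# compose_cmd is a hypothetical helper name, not something this project provides.
compose_cmd() {
    if command -v docker-compose >/dev/null 2>&1; then
        # Standalone binary, the form used in this README.
        echo "docker-compose"
    elif command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
        # Compose plugin bundled with newer Docker releases.
        echo "docker compose"
    else
        return 1
    fi
}

if cmd=$(compose_cmd); then
    echo "Found: $cmd"
else
    echo "Docker Compose was not found; install it before continuing." >&2
fi
```

If only the plugin form is found, the commands in this README should work the same way with `docker compose` in place of `docker-compose`.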

After installing Docker and Docker Compose, clone the repository and run the following command:

    cp .env.sample .env

Inside the .env file, set the OPENAI_API_KEY variable to your OpenAI API key.
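Before starting the services, it can help to confirm that the key really made it into the file. The following is a minimal sketch: the function name `has_openai_key` is hypothetical, and the check only verifies that something follows `OPENAI_API_KEY=` on a line of its own. It does not validate the key against OpenAI.

```shell
# Return 0 if the given env file defines a non-empty OPENAI_API_KEY.
# has_openai_key is a hypothetical helper name; the file defaults to .env.
has_openai_key() {
    env_file="${1:-.env}"
    # The file must exist and contain a line starting with OPENAI_API_KEY=
    # followed by at least one character.
    [ -f "$env_file" ] && grep -Eq '^OPENAI_API_KEY=.+' "$env_file"
}

if has_openai_key .env; then
    echo "OPENAI_API_KEY is set"
else
    echo "OPENAI_API_KEY is missing or empty in .env" >&2
fi
```

A placeholder value left over from the sample file would still pass this check, so it is worth opening the file once to confirm the value is your own key.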

Then, run the following command:

    docker-compose up

It will start two services:

- db: where the PostgreSQL database runs to support chat history and document retrieval for RAG;

- dialog: the service with the API.

We've written some tutorials to help you get started with the project:

- Deploy your own ChatGPT in 5 minutes

- GPT-4o: Learn how to Implement a RAG on the new model, step-by-step!

Also, you can check our documentation for more information.

We are thankful for all the support we receive from our sponsors, who help us keep the project running and improving. If you want to become a sponsor, check out our Sponsors Page.

| GitHub Accelerator | Buser |
|---|---|

In partnership with Open-WebUI, we adopted their chat interface as our own. To use it in your own application, start the services with the docker-compose-open-webui.yml file instead of the default docker-compose file:

    docker-compose -f docker-compose-open-webui.yml up

We are thankful for all of the contributions we receive, most of which are reviewed by our awesome team of maintainers.

made with 💜 by talkd.ai