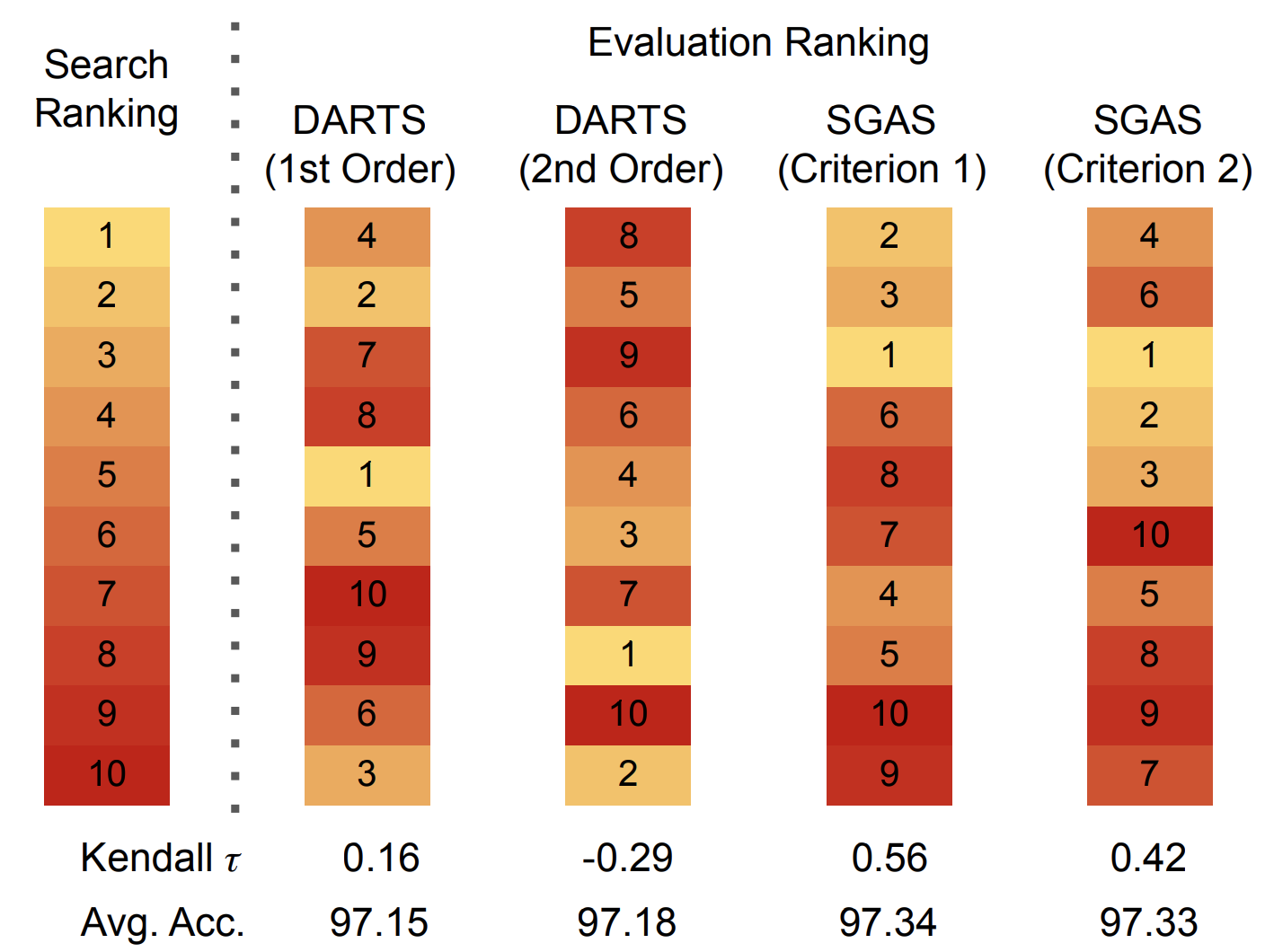

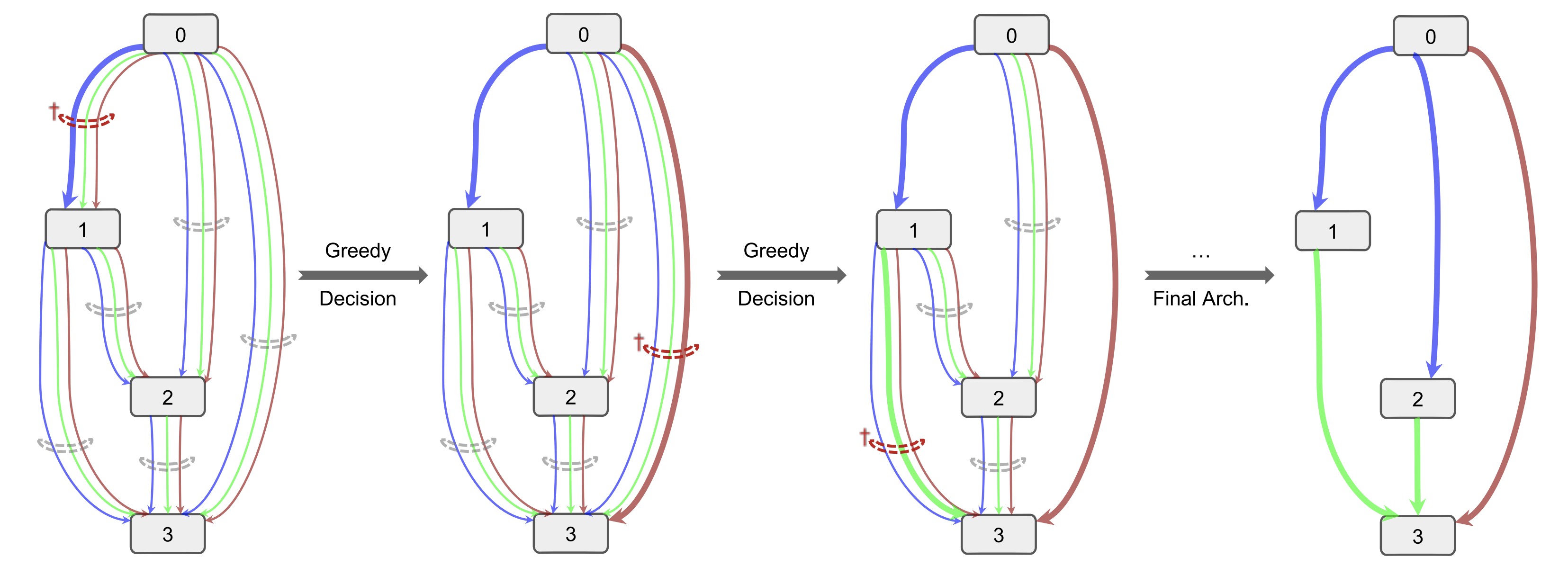

Architectures that achieve higher validation accuracy during the search phase may perform worse at evaluation time. To alleviate this common issue, we introduce sequential greedy architecture search (SGAS), an efficient method for neural architecture search. By dividing the search procedure into subproblems, SGAS chooses and prunes candidate operations in a greedy fashion. We apply SGAS to search architectures for Convolutional Neural Networks (CNN) and Graph Convolutional Networks (GCN).
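The greedy selection idea can be illustrated with a toy sketch. It assumes a DARTS-style supernet in which each edge carries architecture logits over a set of candidate operations (the operation names below are hypothetical), and it scores every undecided edge by edge importance (the total softmax weight of the non-`none` operations) times selection certainty (one minus the normalized entropy of those weights). This is a simplified reading of the selection criterion described in the paper; it omits score normalization and any stability term, and it is not the code in this repository.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical candidate operations on every edge; index 0 is the "zero" op.
OPS = ["none", "skip_connect", "sep_conv_3x3", "max_pool_3x3"]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one edge's architecture logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def edge_score(logits: List[float]) -> float:
    """Simplified score: edge importance times selection certainty."""
    probs = softmax(logits)
    non_zero = probs[1:]  # drop the "none" operation
    importance = sum(non_zero)
    # Renormalize the non-zero weights and measure how peaked they are.
    q = [p / importance for p in non_zero]
    entropy = -sum(p * math.log(p) for p in q if p > 0)
    certainty = 1.0 - entropy / math.log(len(q))
    return importance * certainty


def greedy_step(alphas: Dict[str, List[float]],
                decided: Dict[str, str]) -> Tuple[str, str]:
    """Fix the highest-scoring undecided edge to its strongest non-zero op."""
    undecided = [e for e in alphas if e not in decided]
    edge = max(undecided, key=lambda e: edge_score(alphas[e]))
    logits = alphas[edge]
    op_idx = max(range(1, len(OPS)), key=lambda i: logits[i])
    decided[edge] = OPS[op_idx]  # this edge is now pruned to a single op
    return edge, OPS[op_idx]


if __name__ == "__main__":
    # Toy logits for three edges (made-up numbers, not from SGAS).
    alphas = {
        "e0": [0.1, 0.2, 2.0, 0.1],  # one op clearly dominates
        "e1": [0.5, 0.5, 0.5, 0.5],  # uniform: zero certainty
        "e2": [0.0, 1.5, 0.2, 0.1],
    }
    decided: Dict[str, str] = {}
    while len(decided) < len(alphas):
        # In SGAS the supernet keeps training between decisions, so the
        # logits would change; here they stay fixed for illustration.
        print(greedy_step(alphas, decided))
```

With fixed logits, the loop simply orders edges by score, and the uniform edge `e1` (zero certainty) is decided last. The greedy structure matters because, in the method described above, each decision fixes one subproblem and the remaining edges are re-scored on a supernet that has been pruned and trained further.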

[Project] [Paper] [Slides] [PyTorch Code]

Extensive experiments show that SGAS is able to find state-of-the-art architectures for tasks such as image classification, point cloud classification and node classification in protein-protein interaction graphs with minimal computational cost.

The code requires:

- PyTorch 1.4.0

- PyTorch Geometric (only needed for the GCN experiments)

To set up a conda environment with all necessary dependencies, run:

```
source sgas_env_install.sh
```

Detailed instructions on how to use our code for CNN architecture search are in the folder `cnn`, and for GCN architecture search in the folder `gcn`. Currently, we provide the following:

- Conda environment

- Search code

- Training code

- Evaluation code

- Several pretrained models

- Visualization code

Please cite our paper if you find it helpful:

```
@inproceedings{li2019sgas,
  title={SGAS: Sequential Greedy Architecture Search},
  author={Li, Guohao and Qian, Guocheng and Delgadillo, Itzel C and M{\"u}ller, Matthias and Thabet, Ali and Ghanem, Bernard},
  booktitle={Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2020},
}
```

MIT License

This code borrows heavily from DARTS. We would also like to thank the authors of P-DARTS for the ImageNet test code.

For further information and details, please contact Guohao Li and Guocheng Qian.