Dual-scale enhanced and cross-generative consistency learning for semi-supervised medical image segmentation

Authors: Yunqi Gu, Tao Zhou, Yizhe Zhang, Yi Zhou, Kelei He, Chen Gong, and Huazhu Fu.


This repository provides code for "Dual-scale enhanced and cross-generative consistency learning for semi-supervised medical image segmentation (DEC-Seg)". (paper)


If you have any questions about our paper, feel free to contact me. If you use DEC-Seg in your research, please cite this paper (BibTeX).

- [2024/10/12] Release training/testing code.


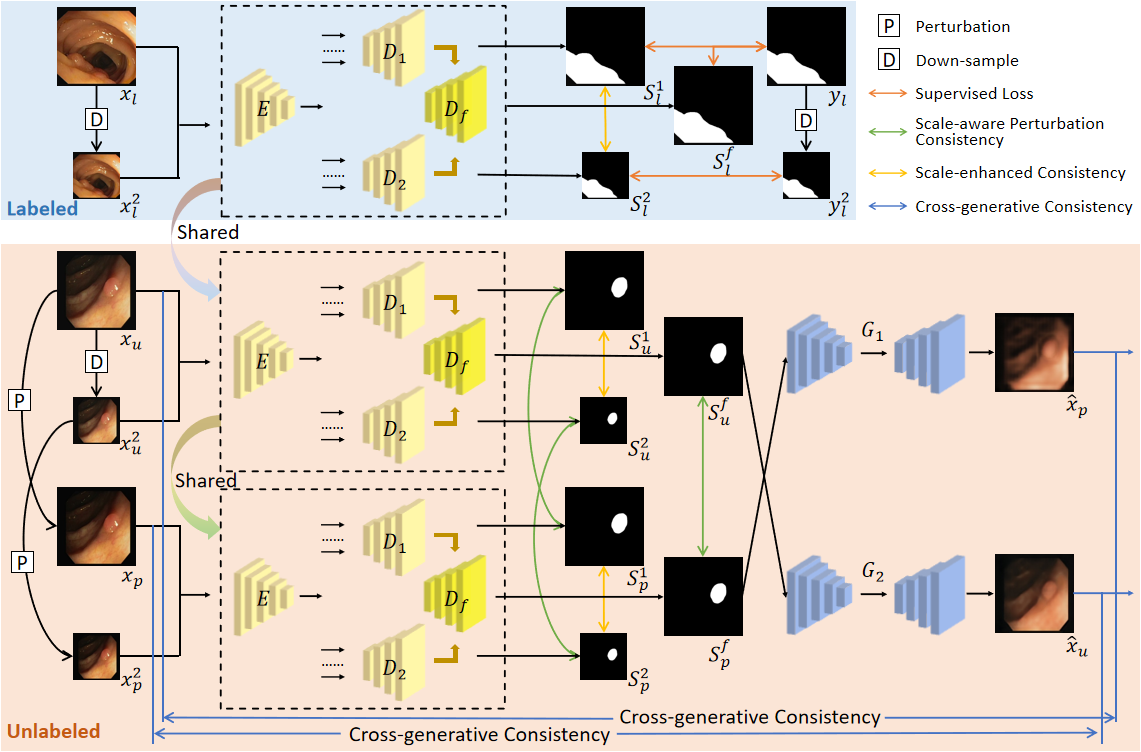


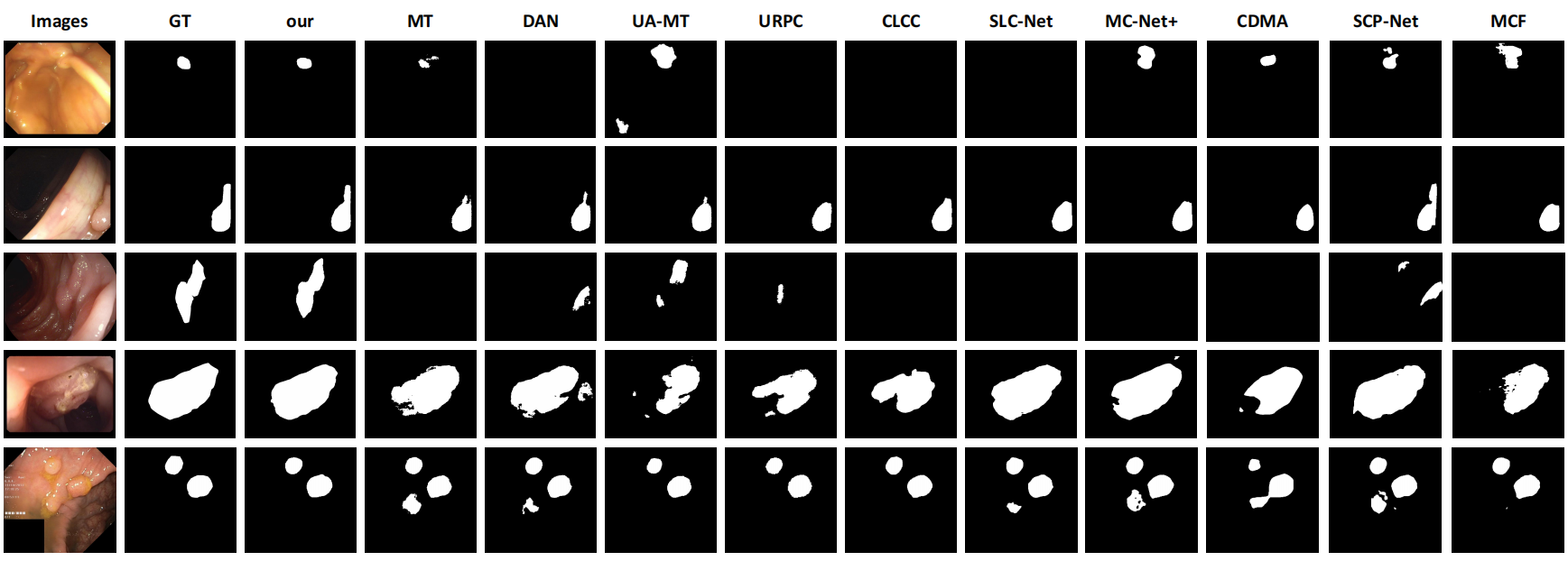

Medical image segmentation plays a crucial role in computer-aided diagnosis. However, existing methods heavily rely on fully supervised training, which requires a large amount of labeled data with time-consuming pixel-wise annotations. Moreover, accurately segmenting lesions poses challenges due to variations in shape, size, and location. To address these issues, we propose a novel Dual-scale Enhanced and Cross-generative consistency learning framework for semi-supervised medical image Segmentation (DEC-Seg). First, we propose a Cross-level Feature Aggregation (CFA) module that integrates features from adjacent layers to enhance the feature representation ability across different resolutions. To address scale variation, we present a scale-enhanced consistency constraint, which ensures consistency in the segmentation maps generated from the same input image at different scales. This constraint helps handle variations in lesion sizes and improves the robustness of the model. Furthermore, we propose a cross-generative consistency scheme, in which the original and perturbed images can be reconstructed using cross-segmentation maps. This consistency constraint allows us to mine effective feature representations and boost the segmentation performance. To further exploit the scale information, we propose a Dual-scale Complementary Fusion (DCF) module that integrates features from two scale-specific decoders operating at different scales to help produce more accurate segmentation maps. Extensive experimental results on multiple medical segmentation tasks (polyp, skin lesion, and brain glioma) demonstrate the effectiveness of our DEC-Seg against other state-of-the-art semi-supervised segmentation approaches.
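The scale-enhanced consistency idea described above can be illustrated with a toy, dependency-free sketch. It is not the repository's implementation (which uses PyTorch): the mean squared difference, the nearest-neighbour upsampling, and the function names `upsample_nearest` and `scale_consistency` are all assumptions made for illustration.

```python
from typing import List

Grid = List[List[float]]  # a 2D map of foreground probabilities


def upsample_nearest(pred: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling of a 2D map by an integer factor."""
    out: Grid = []
    for row in pred:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def scale_consistency(pred_full: Grid, pred_small: Grid, factor: int) -> float:
    """Mean squared difference between the full-scale prediction and the
    upsampled prediction obtained from the downscaled input (assumed form)."""
    up = upsample_nearest(pred_small, factor)
    if len(up) != len(pred_full) or len(up[0]) != len(pred_full[0]):
        raise ValueError("shapes do not match after upsampling")
    total, count = 0.0, 0
    for row_full, row_up in zip(pred_full, up):
        for a, b in zip(row_full, row_up):
            total += (a - b) ** 2
            count += 1
    return total / count
```

The penalty is zero when both scales agree and grows as the two predictions diverge, which is why such a term can be applied to unlabeled images.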

Figure 1: Overview of the proposed DEC-Seg.

Figure 2: Qualitative Results.

The training and testing experiments are conducted using PyTorch with a single NVIDIA GeForce RTX3090 GPU with 24 GB Memory.

Note that our model also runs on GPUs with less memory: lower the batch size to fit.


Configuring your environment (Prerequisites):

Note that DEC-Seg is only tested on Ubuntu OS with the following environments. It may work on other operating systems as well but we do not guarantee that it will.


Creating a virtual environment in terminal: `conda create -n DECSeg python=3.8`.

Installing necessary packages: PyTorch 1.9.0
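Once the environment is created, a quick check like the following can confirm that the interpreter and PyTorch match the versions listed above. This is a minimal sketch: the helper name `environment_report` is hypothetical and not part of this repository.

```python
import importlib.util
import sys


def environment_report(expected_python=(3, 8)):
    """Summarise whether the interpreter and PyTorch match the README's setup."""
    report = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "python_matches": tuple(sys.version_info[:2]) == tuple(expected_python),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torch_version": None,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check also runs without PyTorch

        report["torch_version"] = torch.__version__
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

Run it inside the activated `DECSeg` environment and compare the printed `torch_version` against 1.9.0.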


Preparing necessary data:


Preprocess the training and test data and place them into `./data/` with the following structure:

    |-- data
    |   |-- PolypDataset
    |       |-- train
    |       |-- val
    |       |-- test
    |           |-- CVC-300
    |           |-- CVC-ClinicDB
    |           |-- CVC-ColonDB
    |           |-- ETIS-LaribPolypDB
    |           |-- Kvasir
    |       |-- train.txt
    |       |-- val.txt
    |       |-- CVC-300.txt
    |       |-- CVC-ClinicDB.txt
    |       |-- CVC-ColonDB.txt
    |       |-- ETIS-LaribPolypDB.txt
    |       |-- Kvasir.txt

Download the Res2Net weights, which can be found at this download link (Google Drive), and move them into `./checkpoints/`.
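Before training, it can help to verify that the dataset folder is laid out as expected. The sketch below assumes that the five test sets sit under `test/` and that the `.txt` list files sit directly under `PolypDataset`; that nesting is an assumption, and the helper names `expected_entries` and `missing_entries` are hypothetical.

```python
from pathlib import Path

# Test set names as listed in the data structure above.
TEST_SETS = ["CVC-300", "CVC-ClinicDB", "CVC-ColonDB", "ETIS-LaribPolypDB", "Kvasir"]


def expected_entries():
    """Return (directories, files) expected under PolypDataset (assumed nesting)."""
    dirs = ["train", "val", "test"] + [f"test/{name}" for name in TEST_SETS]
    files = ["train.txt", "val.txt"] + [f"{name}.txt" for name in TEST_SETS]
    return dirs, files


def missing_entries(dataset_root):
    """List the expected directories and list files that are absent."""
    root = Path(dataset_root)
    dirs, files = expected_entries()
    missing = [d for d in dirs if not (root / d).is_dir()]
    missing += [f for f in files if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    for entry in missing_entries("./data/PolypDataset"):
        print("missing:", entry)
```

An empty result means every expected folder and list file was found.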


Training Configuration:


Assign your custom paths, such as `--root_path` and `--train_save`, in `train.py`.

Just enjoy it!
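If `train.py` exposes these options as command-line flags, which the `--` prefix suggests but which is an assumption here, the training call could be assembled as sketched below. The two path values are placeholders, and `build_train_command` is a hypothetical helper, not part of the repository.

```python
import shlex


def build_train_command(root_path, train_save, script="train.py"):
    """Return the argv list for a training run with the two path flags set."""
    return [
        "python", script,
        "--root_path", str(root_path),
        "--train_save", str(train_save),
    ]


# Placeholder paths; replace them with your own dataset and output locations.
cmd = build_train_command("./data/PolypDataset", "./snapshots/DEC-Seg")
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` or the printed line can be pasted into a terminal.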


Testing Configuration:


After downloading the pre-trained model and the testing dataset, run `MyTest.py` to generate the final prediction maps, replacing `--pth_path` with your trained model directory.

Just enjoy it!
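A small pre-flight check can catch a wrong `--pth_path` before inference starts. This is a sketch under the assumption that `MyTest.py` accepts `--pth_path` on the command line; `build_test_command` is a hypothetical helper.

```python
from pathlib import Path


def build_test_command(pth_path, script="MyTest.py"):
    """Return the argv list for testing, failing early if the model path is absent."""
    path = Path(pth_path)
    if not path.exists():
        raise FileNotFoundError(f"--pth_path target not found: {path}")
    return ["python", script, "--pth_path", str(path)]
```

Failing early here avoids loading the test data only to discover that the model path is wrong.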


MATLAB: One-key evaluation is written in MATLAB (link). Please follow the instructions in `./eval/main.m` and run it to generate the evaluation results in `./res/`.

The complete evaluation toolbox (including data, map, eval code, and res): new link.

They can be found in download link.

Please cite our paper if you find the work useful:

    @article{gu2025dual,
      title={Dual-scale enhanced and cross-generative consistency learning for semi-supervised medical image segmentation},
      author={Gu, Yunqi and Zhou, Tao and Zhang, Yizhe and Zhou, Yi and He, Kelei and Gong, Chen and Fu, Huazhu},
      journal={Pattern Recognition},
      volume={158},
      pages={110962},
      year={2025},
      publisher={Elsevier}
    }

The source code is free for research and education use only. Any commercial use requires formal permission first.