English | 한국어

LMOps is a complex and challenging field, but it plays a pivotal role in the successful deployment and management of large language models.

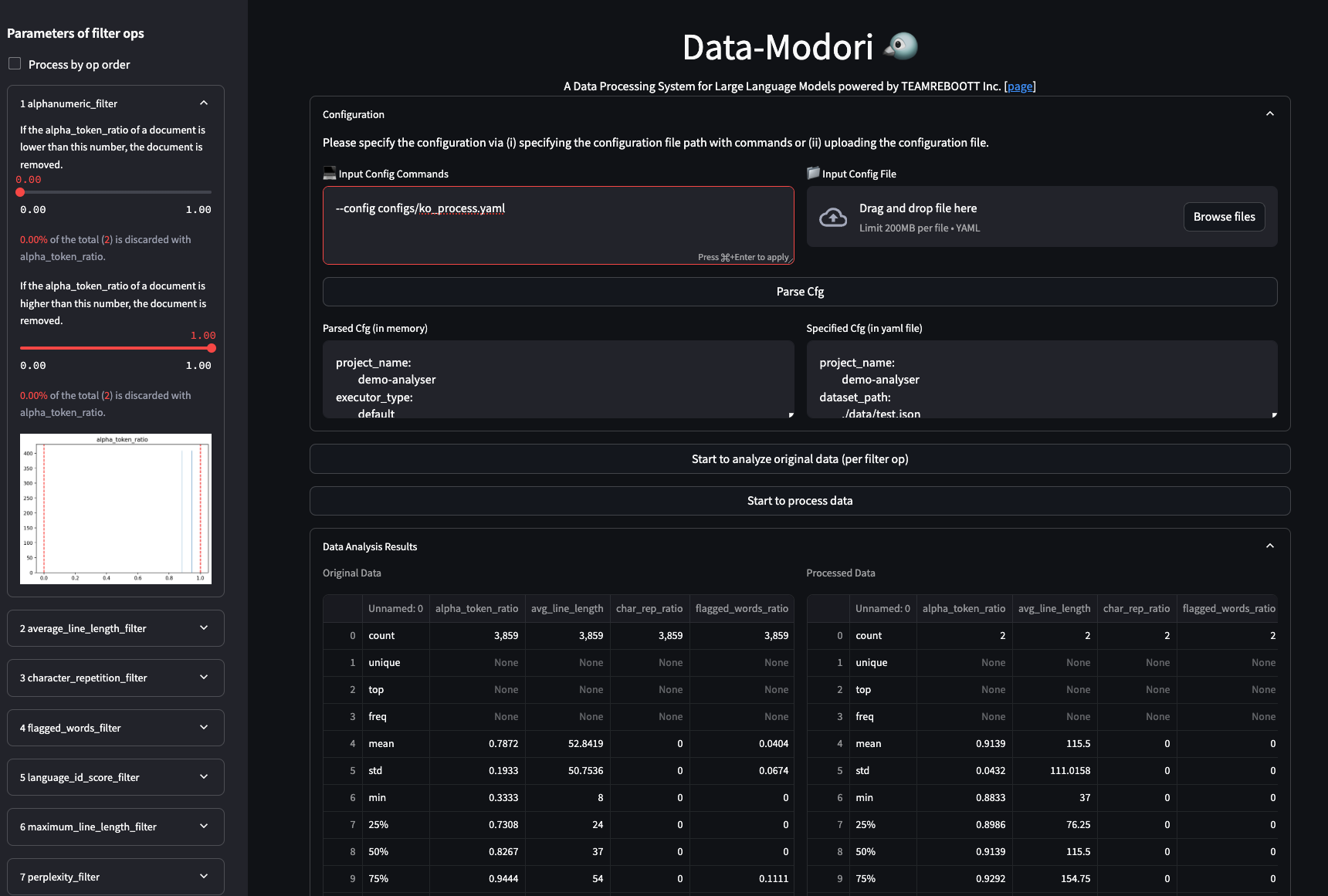

Data-Modori is a unified platform that guides you into the realm of LLMs, offering diverse possibilities by analyzing useful information from various sources 🌐.

We gather all the puzzle pieces of the LLM development process, assemble them into one, and invite you into the world of the information you desire.

- 🛠️ Flexible Analysis: Utilize advanced analysis tools to delve into your Korean-language data, gaining new insights and perspectives.

- 📊 Customized Results: Organize and present data according to your requirements, delivering tailored results.

- 🖥️ User-Friendly Interface: An intuitive and easy-to-use interface allows users to harness the power of data without requiring advanced knowledge.

- 🤖 Easy-to-Learn: We provide intuitive code, including fine-tuning and auto-eval code for LLMs.

- Python==3.8 (recommended)

- gcc >= 5 (at least C++14 support)

- Get the source code from GitHub

git clone https://github.com/teamreboott/data-modori

cd data-modori

- Run the following command to install the required modules from data-modori:

pip install -r environments/combined_requirements.txt

Data-Modori provides a variety of operators!

- Run the `process_data.py` tool with your config as the argument to process your dataset.

python tools/process_data.py --config configs/process.yaml

- Note: Some operators rely on third-party models or resources that are not stored locally on your computer. The first run may be slow because these ops need to download the corresponding resources into a directory first.

The default download cache directory is `~/.cache/data_modori`. Change the cache location by setting the shell environment variable `DATA_MODORI_CACHE_HOME` to another directory. You can also change `DATA_MODORI_MODELS_CACHE` or `DATA_MODORI_ASSETS_CACHE` in the same way:

# cache home

export DATA_MODORI_CACHE_HOME="/path/to/another/directory"

# cache models

export DATA_MODORI_MODELS_CACHE="/path/to/another/directory/models"

# cache assets

export DATA_MODORI_ASSETS_CACHE="/path/to/another/directory/assets"

- Run the `analyze_data.py` tool with your config as the argument to analyse your dataset.

python tools/analyze_data.py --config configs/analyser.yaml

- Note: The analyser only computes stats for Filter ops, so extra Mapper or Deduplicator ops are ignored in the analysis process.
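To make the note about Filter ops concrete, here is a minimal Python sketch of which entries in a `process` list would contribute stats. It assumes that op types can be told apart by a name suffix (`_filter`, `_mapper`, `_deduplicator`), which is only a guess based on the `language_id_score_filter` op used in this README. The helper `ops_with_stats` and the two non-filter op names are hypothetical and are not part of Data-Modori.

```python
def ops_with_stats(process):
    """Return the names of ops that a Filter-only analyser would use.

    `process` mirrors the `process` list of a config: a list of
    single-key dicts mapping an op name to its arguments.
    ASSUMPTION: Filter ops are recognised by the `_filter` name suffix.
    """
    kept = []
    for op in process:
        for name in op:
            if name.endswith("_filter"):
                kept.append(name)
    return kept


process = [
    {"example_cleanup_mapper": {}},                  # hypothetical Mapper op
    {"language_id_score_filter": {"lang": "ko"}},    # Filter op from this README
    {"example_deduplicator": {}},                    # hypothetical Deduplicator op
]

print(ops_with_stats(process))  # prints ['language_id_score_filter']
```

In this sketch the Mapper and Deduplicator entries are skipped, so only the filter would show up in the analysis.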

- Run the `app.py` tool to visualize your dataset in your browser.

streamlit run app.py

- Config files specify some global arguments and an operator list for the data process. You need to set:

- Global arguments: input/output dataset path, number of workers, etc.

- Operator list: list operators with their arguments used to process the dataset.

- You can build up your own config files by:

- ➖: Modifying our example config file `config_all.yaml`, which includes all ops and default arguments. You just need to remove the ops that you won't use and refine some of the ops' arguments.

- ➕: Building your own config files from scratch. You can refer to our example config file `config_all.yaml`, the op documents, and the advanced Build-Up Guide for developers.

- Besides the yaml files, you can also specify one or more parameters on the command line, which will override the values in the yaml files.
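The command-line override behavior can be pictured with a small Python sketch. It assumes an override key has the form `<op_name>.<argument>` and targets an op in the config's `process` list. The helper `apply_override` is hypothetical and is not Data-Modori's actual argument parser, and the starting value `'en'` is made up for illustration.

```python
import copy


def apply_override(config, dotted_key, value):
    """Return a copy of `config` with `<op_name>.<arg>` set to `value`.

    ASSUMPTION: the key names an op in the `process` list and one of its
    arguments. This is an illustration, not Data-Modori's real parser.
    """
    op_name, arg = dotted_key.split(".", 1)
    updated = copy.deepcopy(config)
    for op in updated["process"]:
        if op_name in op:
            # An op listed without arguments may be parsed as None.
            op[op_name] = dict(op[op_name] or {})
            op[op_name][arg] = value
            return updated
    raise KeyError(f"op {op_name!r} is not in the process list")


# Stand-in for a parsed yaml file.
config = {
    "project_name": "demo-process",
    "np": 4,
    "process": [{"language_id_score_filter": {"lang": "en"}}],
}

# Similar in spirit to passing --language_id_score_filter.lang=ko
overridden = apply_override(config, "language_id_score_filter.lang", "ko")
print(overridden["process"][0])  # prints {'language_id_score_filter': {'lang': 'ko'}}
print(config["process"][0])      # prints {'language_id_score_filter': {'lang': 'en'}}
```

The sketch returns a modified copy rather than editing the parsed file in place, which mirrors the idea that the yaml file stays unchanged while the command-line value wins for that run.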


python xxx.py --config configs/process.yaml --language_id_score_filter.lang=ko

# Process config example for dataset

# global parameters

project_name: 'demo-process'

dataset_path: './data/test.json' # path to your dataset directory or file

export_path: './output/test.jsonl'

np: 4 # number of subprocesses used to process your dataset

text_keys: 'content'

# process schedule

# a list of several process operators with their arguments

process:

  - language_id_score_filter:

      lang: 'ko'

Data-Modori is released under the Apache License 2.0.

We are in a rapidly developing field and greatly welcome contributions of new features, bug fixes, and better documentation. Please refer to the How-to Guide for Developers.

Data-Modori is used across various LLM projects and research initiatives, including industrial LLMs. We look forward to more of your experience, suggestions and discussions for collaboration!

We thank and refer to several community projects, such as data-juicer, KoBERT, komt, ko-lm-evaluation-harness, Huggingface-Datasets, Bloom, Pile, Megatron-LM, DeepSpeed, Arrow, Ray, Beam, LM-Harness, HELM, and others.