A simple implementation of a GPT-like model using TensorFlow and Keras. It uses a Transformer architecture with multi-head attention to generate responses from input questions.

- Transformer-based architecture with multi-head attention

- Positional encoding added to input embeddings

- Customizable hyperparameters (e.g., embedding size, number of layers)

- Tokenizer-based input processing

- Option to train from scratch or load a pre-trained model

- Interactive question-answer generation

Install dependencies:

`pip install tensorflow keras numpy`

The model uses a custom dataset (`simpleGPTDict`) of question-answer pairs. Each pair is tokenized with start-of-sequence and end-of-sequence tokens.
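As a rough illustration of how question-answer pairs might be turned into padded token IDs, here is a minimal word-level sketch in plain Python. The token names `<start>`, `<end>`, and `<pad>`, the `qa_pairs` dictionary, and the helpers `build_vocab` and `encode` are assumptions made for this example; the actual tokenizer in `SimpleGPT.py` may work differently.

```python
# Hypothetical special-token names; the project's real names may differ.
START, END, PAD = "<start>", "<end>", "<pad>"

# Hypothetical stand-in for the simpleGPTDict dataset.
qa_pairs = {
    "what is your name?": "i am eliks",
    "how are you?": "i am fine",
}


def build_vocab(pairs):
    """Map every whitespace-separated word to an integer ID."""
    vocab = {PAD: 0, START: 1, END: 2}
    for question, answer in pairs.items():
        for word in (question + " " + answer).lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(text, vocab, max_len):
    """Wrap text in start/end tokens, convert to IDs, and pad to max_len."""
    words = [START] + text.lower().split() + [END]
    ids = [vocab[w] for w in words][:max_len]  # truncation may drop END
    return ids + [vocab[PAD]] * (max_len - len(ids))


vocab = build_vocab(qa_pairs)
print(encode("what is your name?", vocab, 8))  # [1, 3, 4, 5, 6, 2, 0, 0]
```

Words outside the vocabulary raise a `KeyError` in this sketch; a real tokenizer would usually map them to an unknown-word token instead.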

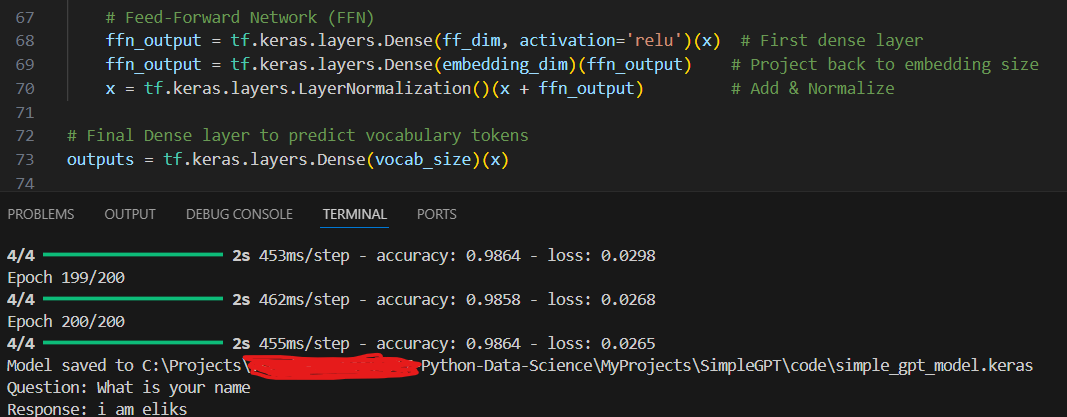

- Input: tokenized question sequences

- Architecture: Embedding → Positional Encoding → Transformer Encoder → Output Layer

- Training: SparseCategoricalCrossentropy loss and the Adam optimizer
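The pipeline above could be sketched in Keras roughly as follows. This is not the code from `SimpleGPT.py`: the sinusoidal position table, the helper names `positional_encoding`, `AddPositionalEncoding`, and `build_model`, the default hyperparameters, and the per-position logits output are all assumptions chosen for illustration.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def positional_encoding(max_len, d_model):
    """Sinusoidal position table of shape (max_len, d_model). Assumed scheme."""
    positions = np.arange(max_len)[:, np.newaxis]
    dims = np.arange(d_model)[np.newaxis, :]
    angles = positions / np.power(10000.0, (2 * (dims // 2)) / d_model)
    table = np.zeros((max_len, d_model), dtype=np.float32)
    table[:, 0::2] = np.sin(angles[:, 0::2])  # even dimensions use sine
    table[:, 1::2] = np.cos(angles[:, 1::2])  # odd dimensions use cosine
    return table


class AddPositionalEncoding(layers.Layer):
    """Adds a fixed position table to the token embeddings."""

    def __init__(self, max_len, d_model, **kwargs):
        super().__init__(**kwargs)
        self.table = tf.constant(positional_encoding(max_len, d_model))

    def call(self, x):
        return x + self.table  # broadcasts over the batch axis


def build_model(vocab_size, max_len, d_model=64, num_heads=4, ff_dim=128, num_layers=2):
    tokens = layers.Input(shape=(max_len,), dtype="int32")
    x = layers.Embedding(vocab_size, d_model)(tokens)
    x = AddPositionalEncoding(max_len, d_model)(x)
    for _ in range(num_layers):
        # Encoder block: self-attention, then a feed-forward network,
        # each followed by a residual connection and layer normalization.
        attn = layers.MultiHeadAttention(num_heads=num_heads, key_dim=d_model // num_heads)(x, x)
        x = layers.LayerNormalization(epsilon=1e-6)(layers.Add()([x, attn]))
        ff = layers.Dense(ff_dim, activation="relu")(x)
        ff = layers.Dense(d_model)(ff)
        x = layers.LayerNormalization(epsilon=1e-6)(layers.Add()([x, ff]))
    logits = layers.Dense(vocab_size)(x)  # one score per vocabulary entry, per position
    model = tf.keras.Model(tokens, logits)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(),
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    )
    return model
```

Calling `build_model(vocab_size=50, max_len=8)` returns a compiled model that maps a batch of shape `(batch, 8)` to logits of shape `(batch, 8, 50)`. The sketch applies no causal mask, matching the encoder-style description above.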

To train the model, run:

`python SimpleGPT.py`

Select option 1 to train from scratch. The trained model is saved as `simple_gpt_model.keras`.

To load an existing model, select option 0 when prompted.
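The two options could be handled along these lines. This is a hedged sketch rather than the script's actual logic: `train_or_load`, its parameters, and the `build_fn` callback are hypothetical names, and only the file name `simple_gpt_model.keras` comes from this README.

```python
from tensorflow import keras

MODEL_PATH = "simple_gpt_model.keras"  # file name given in this README


def train_or_load(choice, build_fn, inputs, targets, path=MODEL_PATH, epochs=1):
    """Option "1": train a fresh model and save it. Option "0": load a saved one.

    build_fn is a hypothetical callback that returns a compiled Keras model.
    """
    if choice == "1":
        model = build_fn()
        model.fit(inputs, targets, epochs=epochs, verbose=0)
        model.save(path)  # the .keras extension selects the native Keras format
        return model
    if choice == "0":
        return keras.models.load_model(path)
    raise ValueError(f"unknown option: {choice!r}")
```

If the saved model contains custom layers, `keras.models.load_model` generally needs them passed through its `custom_objects` argument or registered as serializable beforehand.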

Interact with the model to generate responses:

Question: What is your name?

Response: i am eliks

This project is licensed under the MIT License. See the LICENSE file for details.