This is a framework to benchmark the accuracy of Picovoice's speech-to-intent engine (a.k.a. Rhino) against other natural language understanding (NLU) engines. For more information about the engine, refer to its repository directly here. This repository contains all the data and code needed to reproduce the results. In this benchmark we evaluate the accuracy of the engine in the context of a voice-enabled coffee maker. You can listen to one of the sample commands here. To simulate real-life conditions, we test in two noisy environments: (1) cafe and (2) kitchen. You can listen to samples of the noisy data here and here.

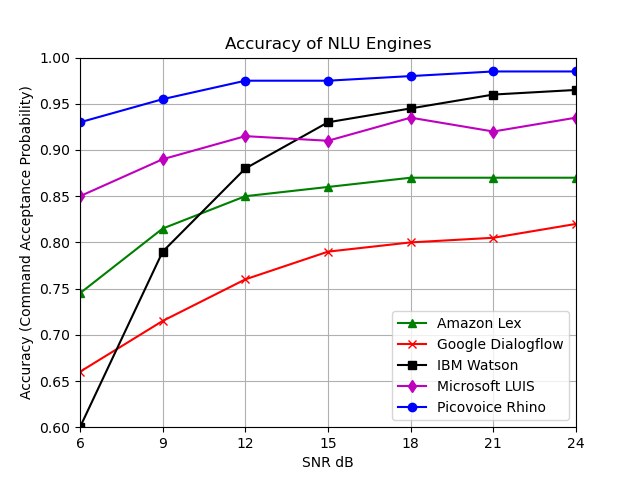

Additionally, we compare the accuracy of Rhino with that of Google Dialogflow, Amazon Lex, IBM Watson, and Microsoft LUIS.

The speech commands are crowdsourced from more than 50 unique speakers. Each speaker contributed about 10 different commands. In total, 619 commands are used in this benchmark. The noise recordings are downloaded from Freesound.

Clone the repository and its submodules via

    git clone --recurse-submodules https://github.com/Picovoice/speech-to-intent-benchmark.git

The repository pulls in the latest version of Rhino as a Git submodule under rhino. All data needed for this benchmark, including speech, noise, and labels, is provided under data. Additionally, the Dialogflow agents used in this benchmark are exported here, and the Amazon Lex bots are exported here. The benchmark code is located under benchmark.

The first step is to mix the clean speech data under clean with noise. Two types of noise are used in this benchmark: (1) cafe and (2) kitchen. To create the noisy test data, run the following in a shell from the root of the repository

    python benchmark/mixer.py ${NOISE}

${NOISE} can be either kitchen or cafe.
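To illustrate what mixing speech with noise typically involves, here is a minimal sketch that scales a noise signal so the mixture reaches a target signal-to-noise ratio (SNR). It is not the code in benchmark/mixer.py, and the source does not state which SNR the benchmark uses; the function names `rms` and `mix_at_snr` and the `snr_db` parameter are assumptions made for illustration only.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Add noise to speech so that the speech-to-noise ratio equals snr_db.

    Hypothetical helper: both inputs are plain lists of float samples
    at the same sample rate.
    """
    if not speech or not noise:
        raise ValueError("speech and noise must be non-empty")

    # Repeat the noise if it is shorter than the speech, then trim to length.
    repeats = -(-len(speech) // len(noise))  # ceiling division
    noise = (list(noise) * repeats)[: len(speech)]

    noise_rms = rms(noise)
    if noise_rms == 0:
        raise ValueError("noise is silent and cannot be scaled")

    # Choose the gain so that 20 * log10(rms(speech) / rms(gain * noise)) == snr_db.
    gain = rms(speech) / (noise_rms * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]
```

A lower `snr_db` produces a noisier mixture, so sweeping this value is one way to probe how quickly an engine's accuracy degrades.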

To produce accuracy results, run the noisy spoken commands through an NLU engine with the following

    python benchmark/benchmark.py --engine_type ${AN_ENGINE_TYPE} --noise ${NOISE}

The valid options for the engine_type parameter are: AMAZON_LEX, GOOGLE_DIALOGFLOW, IBM_WATSON, MICROSOFT_LUIS, and PICOVOICE_RHINO.

To run the noisy spoken commands through the Dialogflow API, include your Google Cloud Platform credential path and Google Cloud Platform project ID as follows

    python benchmark/benchmark.py --engine_type GOOGLE_DIALOGFLOW --gcp_credential_path ${GOOGLE_CLOUD_PLATFORM_CREDENTIAL_PATH} --gcp_project_id ${GOOGLE_CLOUD_PLATFORM_PROJECT_ID} --noise ${NOISE}

To run the noisy spoken commands through the IBM Watson API, include your IBM Cloud credential path and your Natural Language Understanding model ID. If you already have a custom Speech to Text language model, include its ID using the argument --ibm_custom_id. Otherwise, a new custom language model will be created for you.

    python benchmark/benchmark.py --engine_type IBM_WATSON --ibm_credential_path ${IBM_CREDENTIAL_PATH} --ibm_model_id ${IBM_MODEL_ID} --noise ${NOISE}

Before running the noisy spoken commands through Microsoft LUIS, add your LUIS credentials and Speech credentials to /data/luis/credentials.env.
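When running several engine and noise combinations, it can help to assemble the command lines programmatically. The sketch below only builds the argument list; it uses the flags quoted above (--engine_type, --noise, and the credential flags passed through as keyword arguments) and does not assume any others. The helper name `build_command` is hypothetical and is not part of the repository.

```python
import shlex

# Engine and noise names as listed in this README.
ENGINES = (
    "AMAZON_LEX",
    "GOOGLE_DIALOGFLOW",
    "IBM_WATSON",
    "MICROSOFT_LUIS",
    "PICOVOICE_RHINO",
)
NOISES = ("cafe", "kitchen")


def build_command(engine_type, noise, **extra_args):
    """Return the benchmark invocation as a list of arguments.

    Hypothetical helper: extra_args are forwarded verbatim as
    --name value pairs, e.g. gcp_project_id="my-project".
    """
    if engine_type not in ENGINES:
        raise ValueError(f"unknown engine type: {engine_type}")
    if noise not in NOISES:
        raise ValueError(f"unknown noise type: {noise}")

    command = [
        "python",
        "benchmark/benchmark.py",
        "--engine_type",
        engine_type,
        "--noise",
        noise,
    ]
    for name, value in extra_args.items():
        command += [f"--{name}", str(value)]
    return command


if __name__ == "__main__":
    # Print one command per noise type for Rhino; pass the list to
    # subprocess.run(...) from the repository root to actually execute it.
    for noise in NOISES:
        print(shlex.join(build_command("PICOVOICE_RHINO", noise)))
```

Building a list rather than a single string avoids quoting problems when a credential path contains spaces.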

Below are the results of the benchmark. Command Acceptance Probability (Accuracy) is defined as the probability that the engine correctly understands the speech command.
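As a rough illustration of this metric, the sketch below estimates it as the fraction of commands for which an engine's output matches the label exactly. The label layout (a dict with an "intent" string and a "slots" dict), the example intent and slot names, and the helper names are assumptions for illustration; they are not taken from the repository's data format or from benchmark.py.

```python
def is_correct(expected, predicted):
    """A command counts as understood only if the intent and all slots match."""
    if predicted is None:  # the engine failed to infer anything
        return False
    return (
        expected["intent"] == predicted.get("intent")
        and expected.get("slots", {}) == predicted.get("slots", {})
    )


def command_acceptance_probability(labels, predictions):
    """Fraction of commands whose prediction matches the label exactly."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    if not labels:
        raise ValueError("at least one command is required")
    correct = sum(is_correct(e, p) for e, p in zip(labels, predictions))
    return correct / len(labels)


if __name__ == "__main__":
    # Hypothetical labels and engine outputs for three commands.
    labels = [
        {"intent": "orderDrink", "slots": {"size": "large", "drink": "latte"}},
        {"intent": "orderDrink", "slots": {"size": "small", "drink": "espresso"}},
        {"intent": "orderDrink", "slots": {"drink": "americano"}},
    ]
    predictions = [
        {"intent": "orderDrink", "slots": {"size": "large", "drink": "latte"}},
        {"intent": "orderDrink", "slots": {"size": "small", "drink": "latte"}},
        None,
    ]
    print(command_acceptance_probability(labels, predictions))
```

Under this strict definition a single wrong slot makes the whole command count as a miss, which is one reasonable reading of "correctly understand".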

The Amazon Lex bot, Google Dialogflow agent, IBM Watson model, and LUIS app used to produce these results were each trained on all 432 sample utterances.