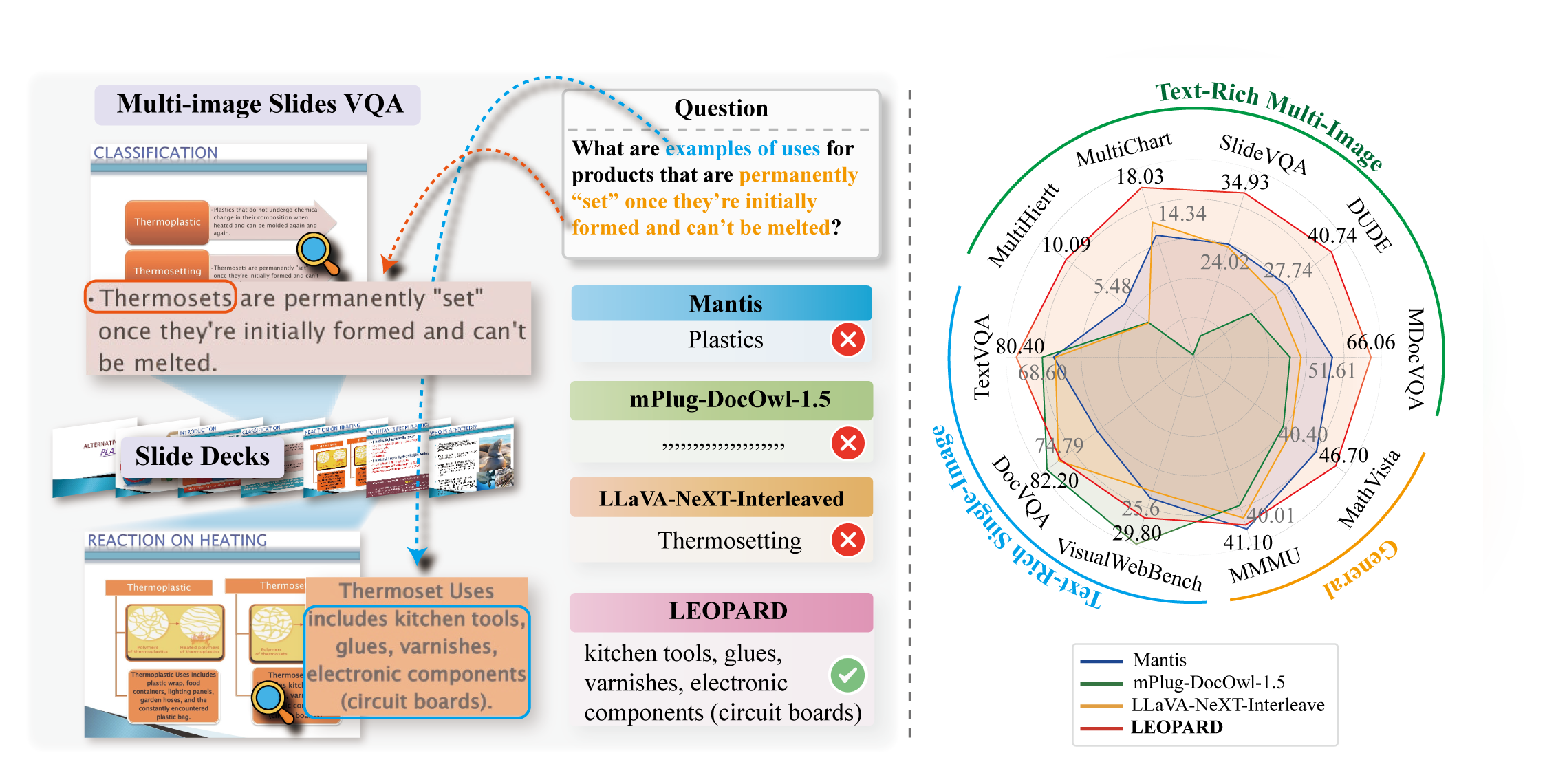

This is the repository for Leopard, a multimodal large language model (MLLM) designed specifically for complex vision-language tasks involving multiple text-rich images. In real-world settings such as presentation slides, scanned documents, and webpage snapshots, understanding the inter-relationships and logical flow across multiple images is crucial.

The code, data, and model checkpoints will be released in one month. Stay tuned!

- 📢 [2024-10-19]. Evaluation code for Leopard-LLaVA and Leopard-Idefics2 is available.

- 📢 [2024-10-30]. We released the checkpoints of Leopard-LLaVA and Leopard-Idefics2.

- 📢 [2024-11]. We uploaded the Leopard-Instruct dataset to Hugging Face.

- 📢 [2024-12]. We released the training code for Leopard-LLaVA and Leopard-Idefics2.

- High-Quality Instruction-Tuning Data: LEOPARD leverages a curated dataset of approximately 1 million high-quality multimodal instruction-tuning samples specifically designed for tasks involving multiple text-rich images.

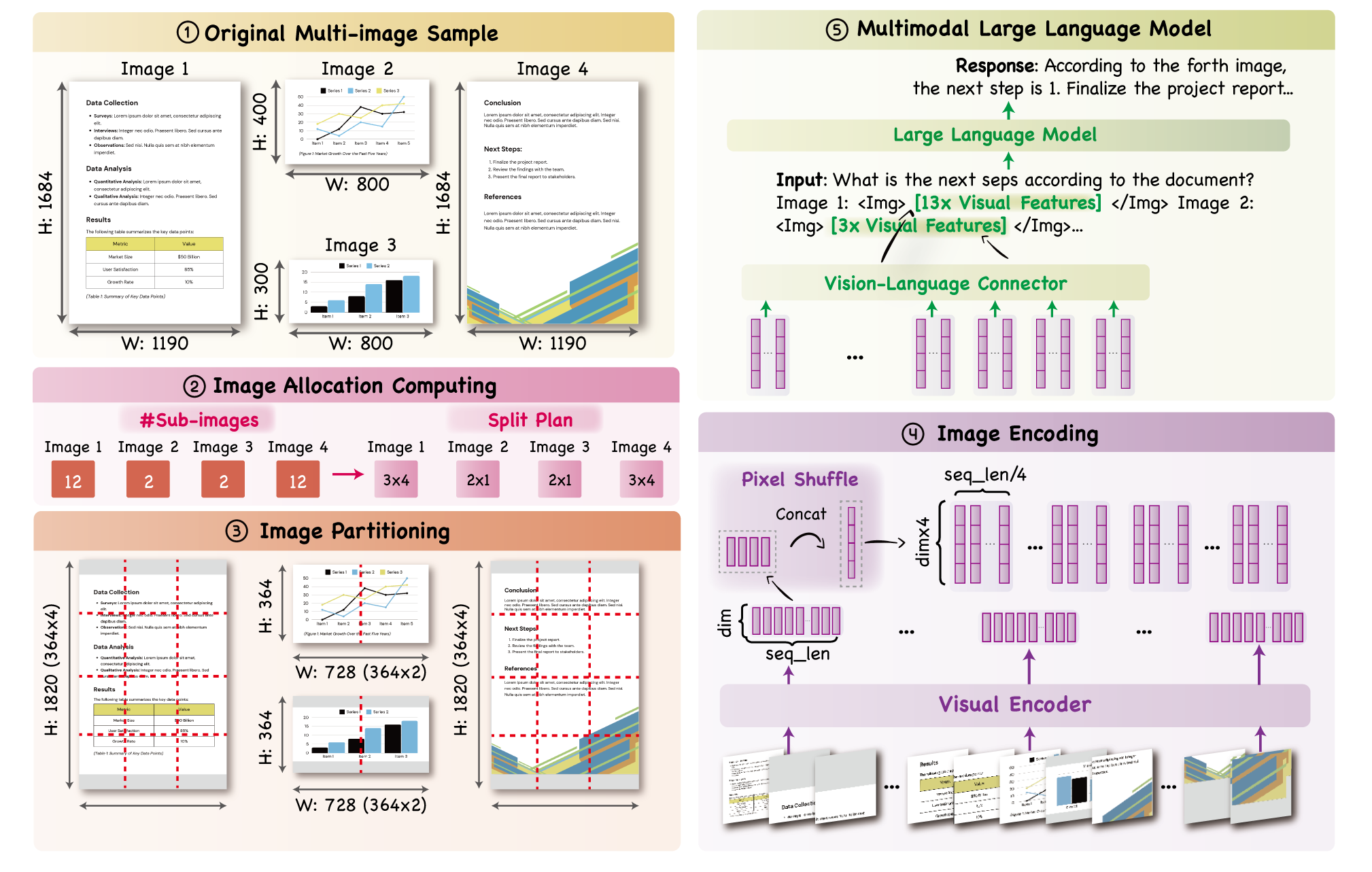

- Adaptive High-Resolution Multi-image Encoding: An innovative multi-image encoding module dynamically allocates visual sequence lengths based on the original aspect ratios and resolutions of input images, ensuring efficient handling of multiple high-resolution images.

- Superior Performance: LEOPARD demonstrates strong performance across text-rich, multi-image benchmarks and maintains competitive results in general-domain evaluations.
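To make the idea of adaptive multi-image encoding more concrete, here is a minimal sketch of how a fixed visual-token budget could be split across several images according to their resolutions and aspect ratios. It is an illustration only, not the encoding module shipped in this repository: the function name `allocate_token_grids`, the `total_budget` and `patch` parameters, and the proportional-scaling rule are all assumptions made for this example.

```python
import math
from typing import List, Tuple


def allocate_token_grids(
    sizes: List[Tuple[int, int]],
    total_budget: int = 1024,  # hypothetical cap on visual tokens for all images
    patch: int = 14,           # hypothetical patch size in pixels
) -> List[Tuple[int, int]]:
    """Return a (rows, cols) token grid per image for (width, height) inputs.

    Illustrative only: this is NOT Leopard's actual allocation rule.
    """
    if not sizes:
        return []

    # Native grid: one token per patch x patch pixel tile.
    native = [(math.ceil(h / patch), math.ceil(w / patch)) for w, h in sizes]
    native_total = sum(r * c for r, c in native)

    # Everything fits at native resolution, so no downscaling is needed.
    if native_total <= total_budget:
        return native

    # Shrink both grid sides by the same factor, so each image's token count
    # drops by roughly budget / native_total while its aspect ratio is kept.
    scale = math.sqrt(total_budget / native_total)
    return [
        (max(1, math.floor(r * scale)), max(1, math.floor(c * scale)))
        for r, c in native
    ]


if __name__ == "__main__":
    # A wide slide and a square scan sharing one budget.
    grids = allocate_token_grids([(1400, 700), (700, 700)], total_budget=1024)
    print(grids, sum(r * c for r, c in grids))
```

In this sketch the wide image receives twice as many tokens as the square one, and the total stays within the budget. The `max(1, ...)` floor guarantees every image gets at least one token, which means the budget can be slightly exceeded when there are many tiny images; a real implementation would need to handle that case explicitly.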

For evaluation, please refer to the Evaluations section.

We provide the checkpoints of Leopard-LLaVA and Leopard-Idefics2 on Hugging Face.

For model training, please refer to the Training section.