Code and model weights for our paper "Salience Allocation as Guidance for Abstractive Summarization" accepted at EMNLP 2022. If you find the code useful, please cite the following paper.

```bibtex
@inproceedings{wang2022salience,
  title={Salience Allocation as Guidance for Abstractive Summarization},
  author={Wang, Fei and Song, Kaiqiang and Zhang, Hongming and Jin, Lifeng and Cho, Sangwoo and Yao, Wenlin and Wang, Xiaoyang and Chen, Muhao and Yu, Dong},
  booktitle={Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing},
  year={2022}
}
```

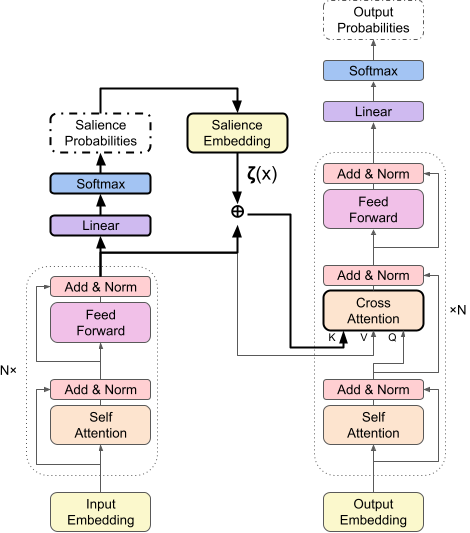

We propose allocation of salience expectation as flexible and reliable guidance for abstractive summarization. To estimate and incorporate the salience allocation, we propose a salience-aware cross-attention module that can be plugged into any Transformer-based encoder-decoder model. It consists of three steps:

- Estimate the salience degree of each sentence.
- Map the salience degrees to embeddings.
- Add the salience embeddings to the key states of the cross-attention.
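The three steps above can be illustrated in plain Python for a single decoder query. This is a minimal sketch under stated assumptions, not the repository's implementation: it assumes a hypothetical sentence-level classifier has already produced logits over a small number of discrete salience levels (step 1), maps each sentence to the probability-weighted average of a table of salience-level embeddings (step 2), and adds that embedding to the key state of every token in the sentence before ordinary scaled dot-product attention (step 3). All function names, the embedding table, and the toy numbers are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def expected_salience_embedding(probs: Vector, table: List[Vector]) -> Vector:
    """Step 2 (assumed form): average the salience-level embeddings,
    weighted by the predicted probability of each level."""
    dim = len(table[0])
    return [sum(p * row[d] for p, row in zip(probs, table)) for d in range(dim)]


def salience_aware_cross_attention(
    query: Vector,
    keys: List[Vector],
    values: List[Vector],
    token_to_sentence: List[int],
    sentence_logits: List[Vector],
    table: List[Vector],
) -> Tuple[Vector, Vector]:
    """Single-head cross-attention for one decoder query (hypothetical sketch)."""
    # Step 1: turn per-sentence logits from a hypothetical classifier
    # into a distribution over salience levels.
    sentence_probs = [softmax(logits) for logits in sentence_logits]
    # Step 2: one expected salience embedding per source sentence.
    sent_emb = [expected_salience_embedding(p, table) for p in sentence_probs]
    # Step 3: add each token's sentence-level salience embedding to its key state.
    shifted_keys = [
        [k + s for k, s in zip(key, sent_emb[sid])]
        for key, sid in zip(keys, token_to_sentence)
    ]
    # Standard scaled dot-product attention over the shifted keys.
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in shifted_keys]
    weights = softmax(scores)
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]
    return out, weights


if __name__ == "__main__":
    # Two source tokens with identical keys, each from a different sentence;
    # sentence 1 is predicted to be highly salient (toy values).
    table = [[0.0, 0.0], [2.0, 0.0]]  # level 0 = low salience, level 1 = high
    out, weights = salience_aware_cross_attention(
        query=[1.0, 0.0],
        keys=[[0.0, 0.0], [0.0, 0.0]],
        values=[[1.0, 0.0], [0.0, 1.0]],
        token_to_sentence=[0, 1],
        sentence_logits=[[5.0, -5.0], [-5.0, 5.0]],
        table=table,
    )
    print(weights)  # the token from the salient sentence receives more attention
```

Because the salience signal only shifts the keys, the rest of the attention computation is unchanged, which is what makes this kind of module easy to drop into an existing encoder-decoder.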

Create the environment with conda and pip:

```bash
conda env create -f environment.yml
conda activate season
pip install -r requirements.txt
```

Install the nltk "punkt" package:

```bash
python -c "import nltk; nltk.download('punkt')"
```

We've tested this environment with Python 3.8 and CUDA 10.2. (For other CUDA versions, please install the corresponding packages.)
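To confirm the setup before preprocessing, a small standard-library check can report the Python version, which packages are importable, and whether the punkt data can be found. This is a hypothetical helper, not part of the repository, and the package names in the example call are assumptions; the authoritative list is `requirements.txt`.

```python
import importlib.util
import sys
from typing import List


def missing_packages(names: List[str]) -> List[str]:
    """Return the top-level package names that cannot be imported here."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def punkt_available() -> bool:
    """True if nltk is installed and its punkt tokenizer data can be located."""
    try:
        import nltk
    except ImportError:
        return False
    try:
        nltk.data.find("tokenizers/punkt")  # raises LookupError if not downloaded
    except LookupError:
        return False
    return True


if __name__ == "__main__":
    print("Python", sys.version.split()[0])  # the environment was tested with 3.8
    # Assumed package list for illustration; see requirements.txt for the real one.
    print("missing:", missing_packages(["torch", "transformers", "nltk"]))
    print("punkt found:", punkt_available())
```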

Run the following commands to download the CNN/DM dataset, preprocess it, and save it locally:

```bash
mkdir data
python preprocess.py
```

To train the model, run the following script:

```bash
bash run_train.sh
```

The trained model parameters and training logs are saved in the `outputs/train` folder.

Note that the evaluation of each checkpoint during training is simplified for efficiency, so its scores are lower than the final evaluation results. You can change this setting according to this post. To fully evaluate a trained model, follow the inference steps below.

You can use our trained model weights to generate summaries for your data.

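Step 1. Download the trained model weights to the `checkpoints` directory and unzip them.

```bash
mkdir checkpoints
cd checkpoints
unzip season_cnndm.zip
cd ..  # return to the repository root before running inference
```

Step 2. Generate summaries for the CNN/DM test set: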

```bash
bash run_inference.sh
```

After the script finishes, the results are in the `outputs/inference` folder, including the predicted summaries in `generated_predictions.txt` and the ROUGE scores in `predict_results.json`.

Copyright 2022 Tencent

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

This repo is only for research purposes. It is not an officially supported Tencent product.