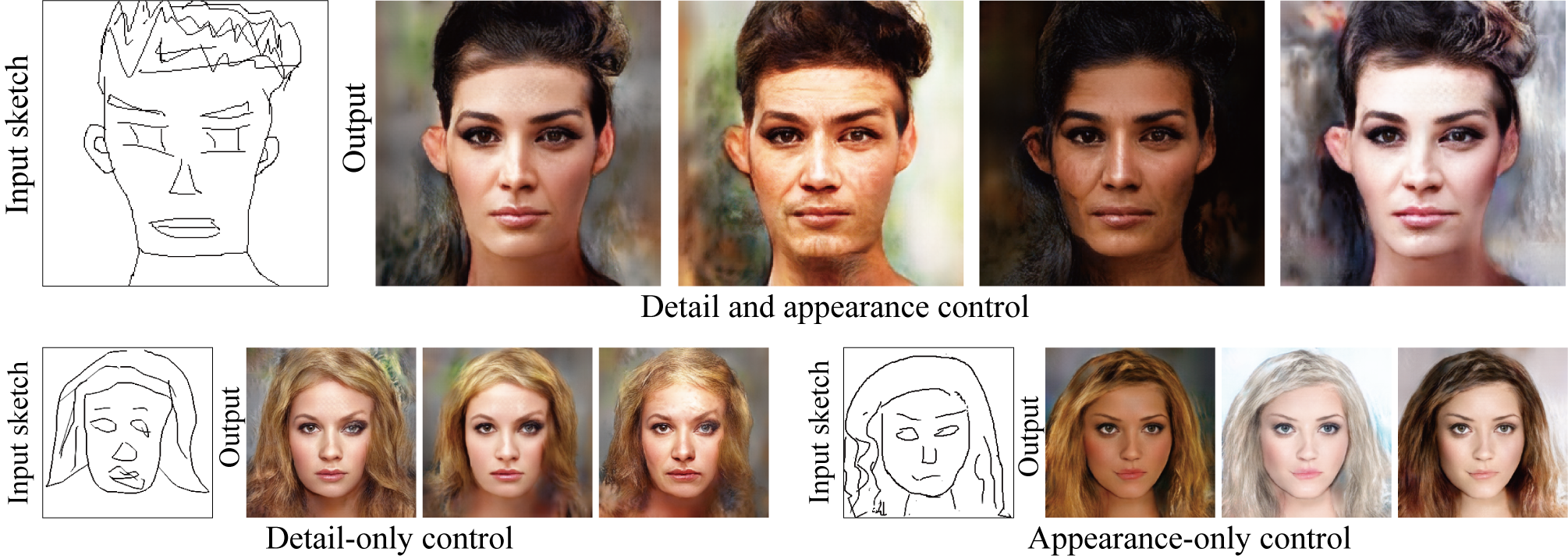

Diversifying Detail and Appearance in Sketch-Based Face Image Synthesis

This code is our implementation of the following paper:

Takato Yoshikawa, Yuki Endo, Yoshihiro Kanamori: "Diversifying Detail and Appearance in Sketch-Based Face Image Synthesis." The Visual Computer (Proc. of Computer Graphics International 2022), 2022. [Project][PDF (28 MB)]

Prerequisites

Run the following command to install the required pip packages:

pip install -r requirements.txt

Inference with our pre-trained models

- Download our pre-trained models for the CelebA-HQ dataset and put them into the "pretrained_model" directory in the parent directory of "src".

- Download the zip file linked under "Human-Drawn Facial sketches" in the DeepPS repository, unzip it, and put the "sketches" directory into the "data" directory in the parent directory of "src".

- Run test.py from the "src" directory:

cd src
python test.py
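Before running test.py, it can help to confirm that the downloads landed where the steps above expect them. The following is a minimal sketch, assuming only the layout described above ("pretrained_model" and "data/sketches" in the parent directory of "src"); the helper `missing_inference_inputs` is hypothetical and not part of this repository.

```python
from pathlib import Path


def missing_inference_inputs(repo_root):
    """Return the expected inference directories that are absent under repo_root.

    Hypothetical helper: assumes "pretrained_model" and "data/sketches"
    sit in the parent directory of "src", as described in this README.
    """
    root = Path(repo_root)
    expected = [root / "pretrained_model", root / "data" / "sketches"]
    # Keep only the directories that do not exist yet, as POSIX-style relative paths.
    return [p.relative_to(root).as_posix() for p in expected if not p.is_dir()]


if __name__ == "__main__":
    # Intended to be run from inside "src", so the parent directory is the repository root.
    missing = missing_inference_inputs(Path.cwd().parent)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Layout looks complete; run: python test.py")
```

The check only tests that the directories exist; it does not verify the model files or sketch images inside them.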

Training

- Download the edge map dataset from Google Drive into the "data" directory.

- Download the CelebA-HQ dataset and run resize_image.py to resize the images:

cd src
python resize_image.py --input path/to/CelebA-HQ/dataset --output ../data/CelebA-HQ256
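Each training command below combines two directories: one passed as `--train_path` and one passed as `--edge_path`. If the two are expected to contain corresponding files with matching names (an assumption; this README does not state how train.py pairs them), a quick sanity check after resizing might look like the sketch below. The helpers `image_stems` and `unpaired` and the set of image extensions are hypothetical.

```python
from pathlib import Path

# Assumed image extensions; adjust to match the actual dataset files.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def image_stems(directory):
    """Collect file names (without extension) of the images in a directory."""
    return {
        p.stem
        for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    }


def unpaired(train_dir, edge_dir):
    """Return (only_in_train, only_in_edge) as sorted lists of file stems.

    Hypothetical check: assumes images are paired by file name, ignoring extension.
    """
    train, edge = image_stems(train_dir), image_stems(edge_dir)
    return sorted(train - edge), sorted(edge - train)


if __name__ == "__main__":
    # Directory pairs taken from the training commands in this README (run from "src").
    for train_dir, edge_dir in [
        ("../data/CelebA-HQ256_DFE", "../data/CelebA-HQ256_HED"),
        ("../data/CelebA-HQ256", "../data/CelebA-HQ256_DFE"),
    ]:
        if Path(train_dir).is_dir() and Path(edge_dir).is_dir():
            only_train, only_edge = unpaired(train_dir, edge_dir)
            print(train_dir, edge_dir, len(only_train), len(only_edge))
```

Two empty lists for a directory pair mean every file stem appears on both sides.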

Training the detail network H

python train.py \
--train_path ../data/CelebA-HQ256_DFE \
--edge_path ../data/CelebA-HQ256_HED \
--edgeSmooth \
--save_model_name network-H

Training the appearance network F

python train.py \
--train_path ../data/CelebA-HQ256 \
--edge_path ../data/CelebA-HQ256_DFE \
--weight_feat 0.0 \
--save_model_name network-F
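The two training commands above differ only in their data directories and one stage-specific flag (`--edgeSmooth` for network H, `--weight_feat 0.0` for network F). A minimal sketch of a driver that runs both in sequence from the "src" directory is shown below. It uses only the flags given in this README; the helpers `build_commands` and `run_all` are hypothetical, and by default the script only prints the commands.

```python
import shlex
import subprocess

# One entry per training stage, mirroring the train.py commands in this README.
# No flags beyond those shown there are assumed.
STAGES = [
    ("network-H", ["--train_path", "../data/CelebA-HQ256_DFE",
                   "--edge_path", "../data/CelebA-HQ256_HED",
                   "--edgeSmooth"]),
    ("network-F", ["--train_path", "../data/CelebA-HQ256",
                   "--edge_path", "../data/CelebA-HQ256_DFE",
                   "--weight_feat", "0.0"]),
]


def build_commands(python="python"):
    """Return one argv list per stage, ending with --save_model_name."""
    return [[python, "train.py", *args, "--save_model_name", name]
            for name, args in STAGES]


def run_all(dry_run=True):
    """Print each command; when dry_run is False, also execute it."""
    for argv in build_commands():
        print(shlex.join(argv))
        if not dry_run:
            # check=True raises CalledProcessError if a stage fails, stopping the loop.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Passing argument lists rather than a single shell string avoids quoting problems with paths; `run_all(dry_run=False)` would launch the actual training runs.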

Citation

Please cite our paper if you find the code useful:

@article{YoshikawaCGI22,
author = {Takato Yoshikawa and Yuki Endo and Yoshihiro Kanamori},
title = {Diversifying Detail and Appearance in Sketch-Based Face Image Synthesis},
journal = {The Visual Computer (Proc. of Computer Graphics International 2022)},
volume = {38},
number = {9},
pages = {3121--3133},
year = {2022}
}

Acknowledgements

This code heavily borrows from the DeepPS repository.