Spring 2017 Galvanize Data Science Immersive

An Investigation of Android Mobile Malware Using the SherLock Dataset and Big Data Tools

Abstract: This project uses the SherLock dataset and an Apache Spark cluster running on Amazon EMR to train machine learning algorithms to identify malicious activity in Android mobile handset logs.

Results: A gradient-boosted trees model identified active ransomware activity with an accuracy of 96% and a sensitivity of 97%.

All of the log time-series slicing, feature vectorization, training, and classification of malicious/not-malicious activity was done inside the Spark cluster using PySpark and SparkML.

See this work as a presentation in Google Slides.

See the video of this talk.

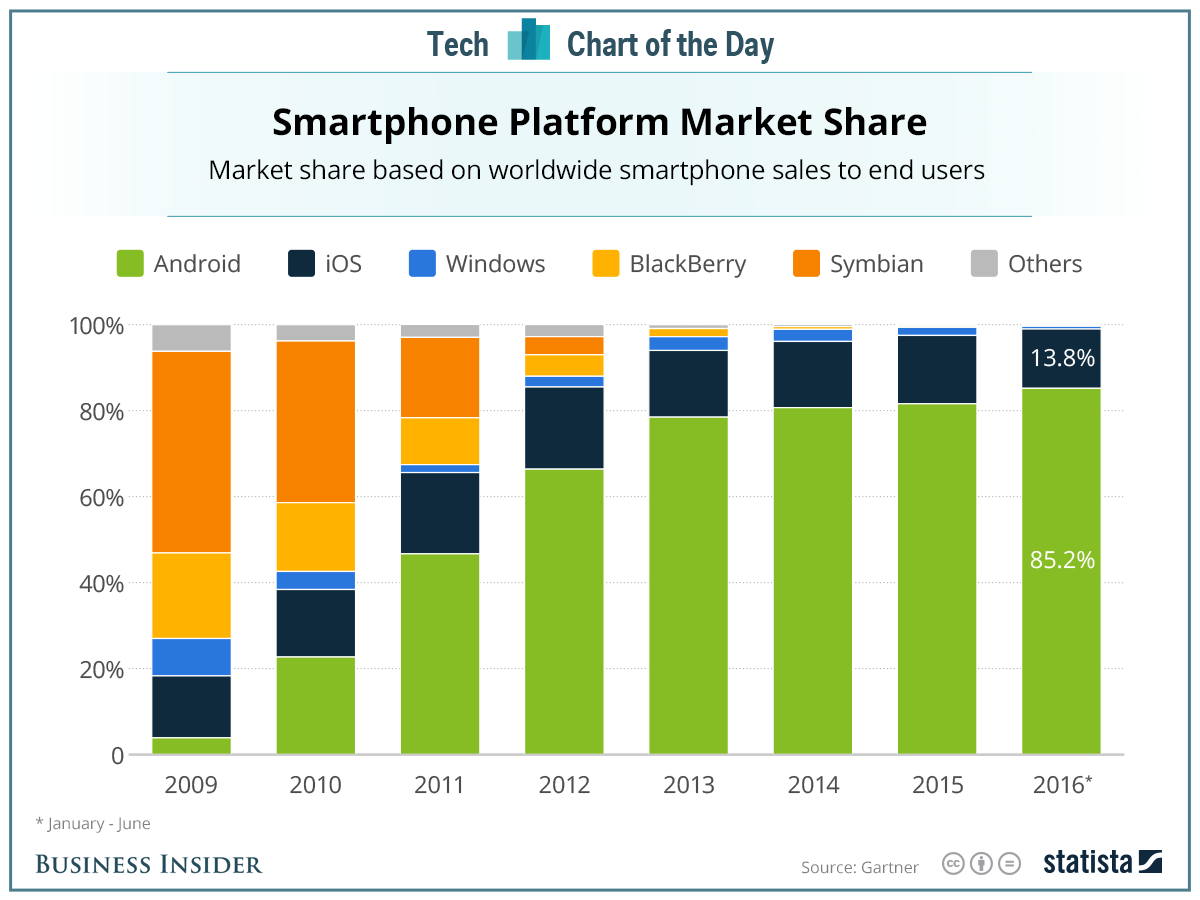

To properly investigate mobile device malware, one must focus on the platforms on which most malware resides. Currently, more than 85% of smartphones run the Android OS [Fig. 1], while 99% of mobile malware is found on the Android platform.

Figure 1. Smartphone Mobile OS Market Share, 2009-2016

For this project, I chose to focus on a specific type of malware known as ransomware, due to the rapidly increasing threat and high visibility of ransomware in the news (see WannaCry).

Ransomware is a type of malware wherein the malicious code encrypts portions of the device data storage and demands payment from the victim to unlock the data.

What is the SherLock Dataset?

SherLock is a long-term smartphone sensor dataset with a high temporal resolution. The dataset also offers explicit labels capturing the activity of malware running on the devices. The dataset currently contains 10 billion data records from 30 users collected over a period of 2 years and an additional 20 users for 10 months (totaling 50 active users currently participating in the experiment).

The primary purpose of the dataset is to help security professionals and academic researchers develop innovative methods of implicitly detecting malicious behavior in smartphones, specifically from data obtainable without superuser (root) privileges. However, this dataset can also be used for research in domains that are not strictly security related, such as context-aware recommender systems, event prediction, user personalization and awareness, and location prediction. The dataset also offers opportunities that aren't available in other datasets. For example, it contains the SSID and signal strength of the connected WiFi access point (AP), sampled once every second over the course of many months.

One of the interesting aspects of the SherLock dataset is the researchers' use of custom-written "simulated" malware. Across a range of malware types, the researchers write custom malware application packages that perform the same activities as existing malware, albeit in a non-destructive way. For example, much like existing malware, the malware only performs malicious activities at random times. By performing the same actions as real malware and in the same manner, the phone's internal state sensors accurately capture the usage patterns and context of a real attack.

Furthermore, to aid in investigative efforts, the SherLock application consists of two parts: the Moriarty package which simulates the attack and leaves clues in the log of malicious or benign activity, and the SherLock package, which monitors the sensors and internal state of the device as explained above. By combining the Moriarty clues with the SherLock sensor data, machine-learning models can be trained to recognize an attack event.

More information on the data set and methods of collection can be found here: http://bigdata.ise.bgu.ac.il/sherlock/

All of the phone sensor and log information is collected on the mobile device, then uploaded to a database server at Ben-Gurion University. While I thought I would be granted access to query the BGU database, the log files were actually provided as a Google Drive share.

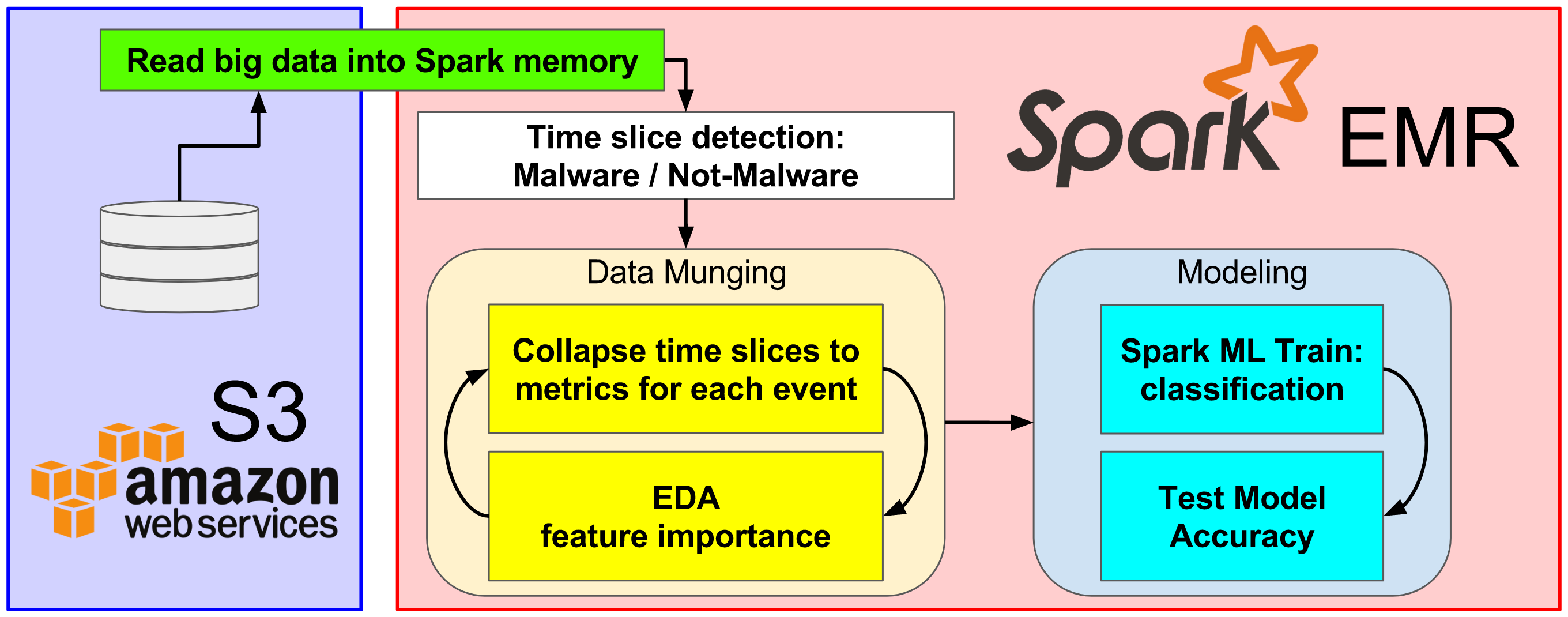

The full SherLock dataset is quite large: over 600 billion data points in 10 billion records, with an on-disk size of approximately 6 TB. To handle a dataset of this size, I used the Amazon Web Services Elastic MapReduce (EMR) service for computation and the Amazon S3 storage service for file management. [Fig. 2]

Figure 2. Data management Pipeline

EMR is a service for building clusters of servers that accelerate MapReduce jobs through distributed database queries and analysis.

For my project, I used an Apache Spark cluster of 4 core nodes, each an "r4.16xlarge" memory-optimized server with 64 vCPUs, 488 GB of RAM, and a 20 Gigabit network connection. The master node was a single "m3.xlarge" server with 4 vCPUs and 15 GB of RAM.

Once the Spark cluster is up and running, the following steps are necessary to analyze the SherLock dataset. [Fig. 3]

- Read log files from S3 into EMR cluster Spark memory.

- Determine time-slice event boundaries from Moriarty data.

  - I chose to build a dictionary of malicious and benign events for fast-lookup during query. Each device has multiple instances of benign or malicious event time slices.

- Convert time slice periods into vectorized table of events for machine learning. (see note below)

- Split the vectorized table into training and test subsets (80/20).

- Train the machine learning algorithms using SparkML models with training set.

  - Logistic Regression -- Base case

  - Gradient-Boosted Trees -- Advanced case

- Test model accuracy by predicting malicious or benign event in hold-out test set.
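The dictionary of malicious and benign events mentioned in the steps above can be sketched in plain Python. This is only an illustration under stated assumptions, not the project's PySpark code: the device IDs, timestamps, and the function names `build_event_index` and `label_at` are hypothetical, and the sketch assumes that the time slices of a single device do not overlap.

```python
from bisect import bisect_right
from collections import defaultdict


def build_event_index(events):
    """Group (device_id, start, end, label) tuples by device, sorted by start time."""
    index = defaultdict(list)
    for device_id, start, end, label in events:
        index[device_id].append((start, end, label))
    for slices in index.values():
        slices.sort()
    return dict(index)


def label_at(index, device_id, timestamp):
    """Return the label of the slice containing timestamp, or None if none does."""
    slices = index.get(device_id, [])
    starts = [s[0] for s in slices]
    # Position of the last slice that starts at or before the timestamp.
    pos = bisect_right(starts, timestamp) - 1
    if pos >= 0:
        start, end, label = slices[pos]
        if start <= timestamp <= end:
            return label
    return None


# Hypothetical events in the shape (device, start, end, label).
events = [
    ("device_a", 100, 160, "malicious"),
    ("device_a", 300, 360, "benign"),
    ("device_b", 50, 110, "benign"),
]
index = build_event_index(events)
print(label_at(index, "device_a", 120))
```

A log record that falls between two slices, or that belongs to a device with no recorded events, returns `None` and can be filtered out before aggregation.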

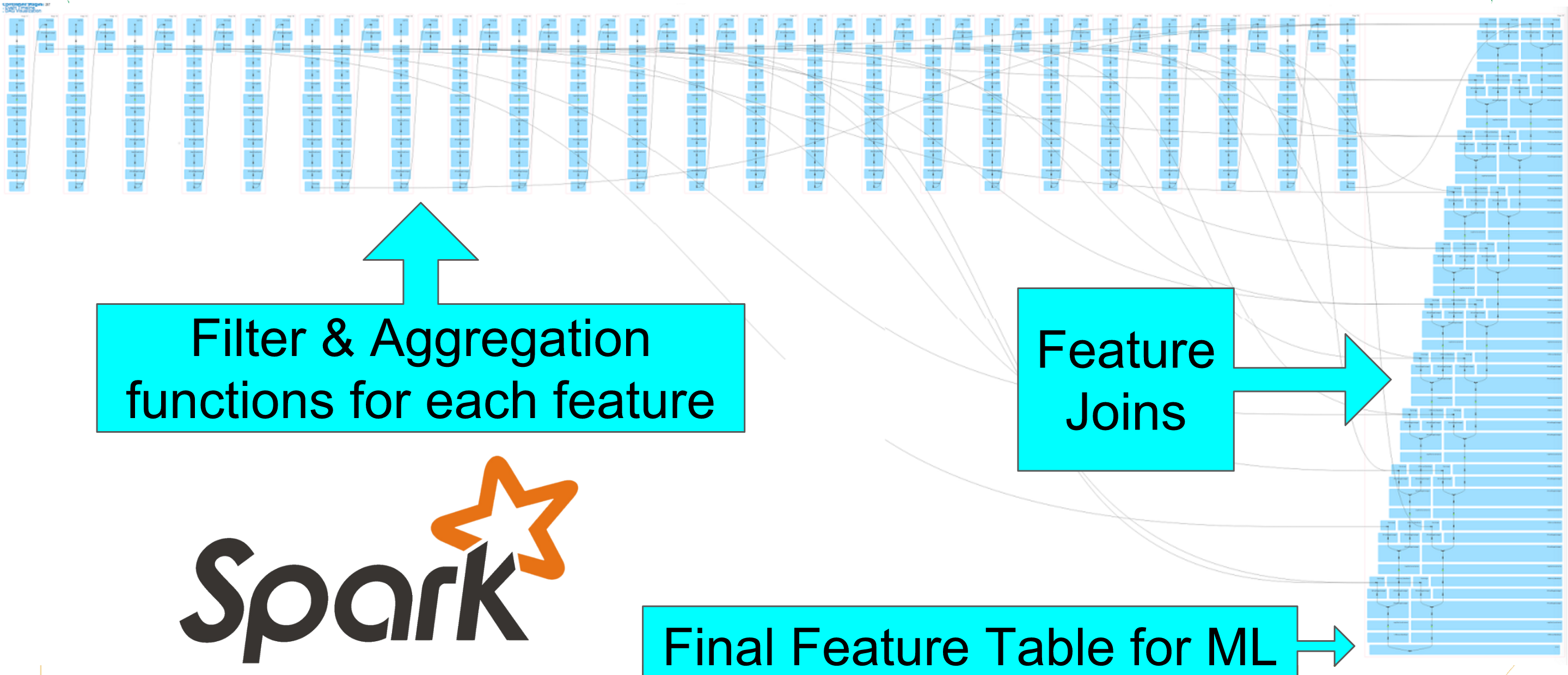

Note: Each time slice period is a begin-end timestamp pair for an individual event for a single user. Because the log files record a continuous series of data points captured within the period, from multiple devices and across multiple log files, it is necessary to perform several map-reduce filters, aggregations, and joins to extract the features of interest. The primary difficulty of this project is collapsing the series of data points into a compact vectorized table for use in machine learning. By compact vectorized table, I mean that each row of the table is a single malicious or benign event, and each column is an aggregated feature of interest within the time slice. For example, one feature of interest is the maximum CPU utilization within the time period. [Fig. 4]
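The collapse described in this note can be illustrated with a small plain-Python sketch. It is not the Spark job used in the project: the function name `vectorize_slices`, the column names, and the sample readings are all hypothetical, and only a single sensor (CPU utilization) is aggregated.

```python
from statistics import mean


def vectorize_slices(slices, readings):
    """Collapse raw readings into one feature row per labeled time slice.

    slices:   list of (device_id, start, end, label)
    readings: list of (device_id, timestamp, cpu_percent)
    """
    rows = []
    for device_id, start, end, label in slices:
        # Filter: keep only this device's readings inside the slice.
        cpu = [value for dev, ts, value in readings
               if dev == device_id and start <= ts <= end]
        if not cpu:
            continue  # no sensor data captured for this slice
        # Aggregate: one row per event, one column per feature.
        rows.append({
            "device_id": device_id,
            "start": start,
            "cpu_max": max(cpu),
            "cpu_mean": mean(cpu),
            "n_samples": len(cpu),
            "label": 1 if label == "malicious" else 0,
        })
    return rows


# Hypothetical slices and sensor readings.
slices = [("device_a", 100, 160, "malicious"), ("device_a", 300, 360, "benign")]
readings = [
    ("device_a", 105, 40.0), ("device_a", 130, 95.0), ("device_a", 150, 60.0),
    ("device_a", 200, 10.0),  # outside every slice, so ignored
    ("device_a", 310, 20.0), ("device_a", 340, 30.0),
    ("device_b", 120, 99.0),  # different device, so ignored
]
table = vectorize_slices(slices, readings)
for row in table:
    print(row)
```

In a Spark setting the same idea would presumably be expressed as a join between the slice table and the sensor logs followed by a grouped aggregation, rather than the nested loop shown here.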

Figure 4. Spark Directed Acyclic Graph for building the vectorized table

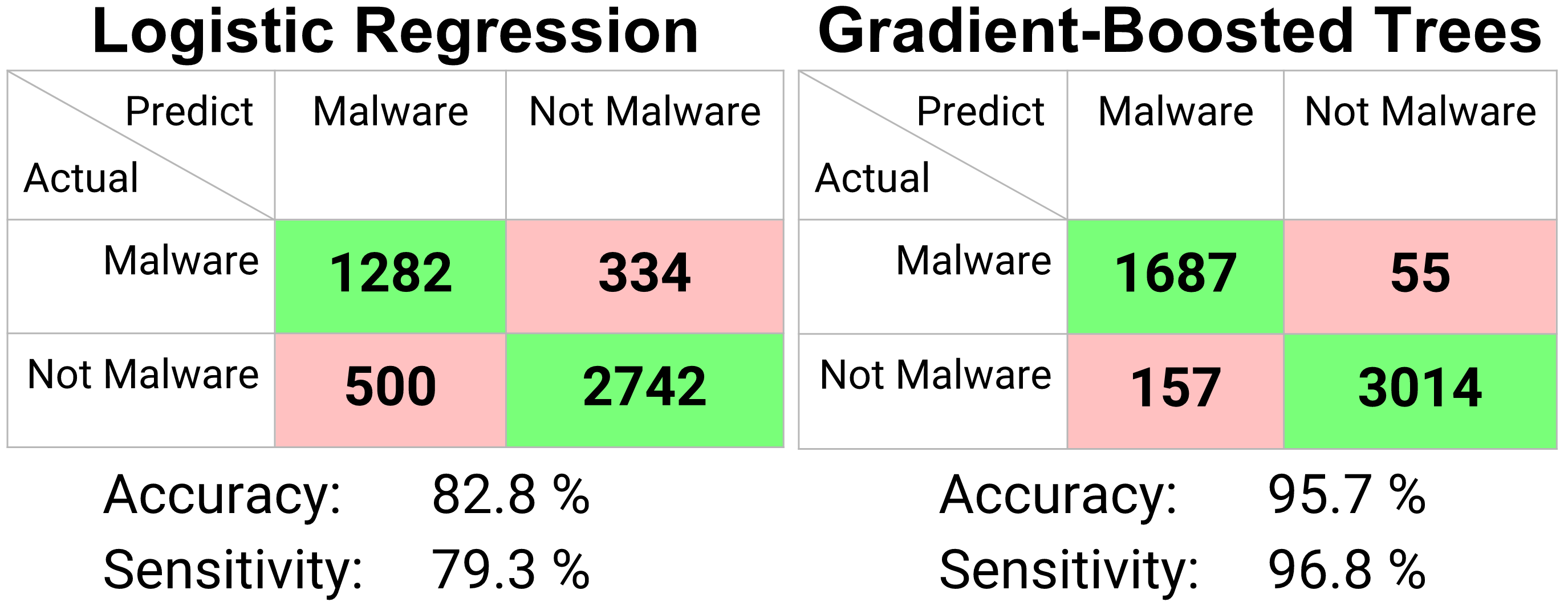

Figure 5. Confusion Matrix Predicting malware events.

The measure of a machine learning model is its ability to correctly predict events in the hold-out test set. The hold-out data was not used to train the model.

Two model results are shown: a logistic regression model and a gradient-boosted trees model. Both are classification models (each produces a distinct prediction of malware or not-malware), although the logistic regression model outputs a probability, which allows the decision threshold to be chosen after the fact for maximum accuracy.
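Choosing a threshold after the fact can be sketched as a simple sweep over candidate cutoffs. The probabilities and labels below are hypothetical, as are the function names `accuracy_at` and `best_threshold`; the sketch assumes that an event is classified as malicious when its predicted probability is at or above the cutoff.

```python
def accuracy_at(threshold, probabilities, labels):
    """Fraction of events classified correctly when p >= threshold means malicious."""
    correct = sum((p >= threshold) == bool(y)
                  for p, y in zip(probabilities, labels))
    return correct / len(labels)


def best_threshold(probabilities, labels):
    """Try each distinct predicted probability as a cutoff; keep the most accurate."""
    candidates = sorted(set(probabilities))
    return max(candidates, key=lambda t: accuracy_at(t, probabilities, labels))


# Hypothetical model outputs: P(malicious) and true labels (1 = malicious).
probabilities = [0.10, 0.20, 0.40, 0.45, 0.55, 0.70, 0.90]
labels = [0, 0, 1, 1, 0, 1, 1]

threshold = best_threshold(probabilities, labels)
print(threshold, accuracy_at(threshold, probabilities, labels))
print(0.5, accuracy_at(0.5, probabilities, labels))
```

In this toy data the default cutoff of 0.5 is less accurate than the selected one. To keep the reported test accuracy unbiased, the cutoff would normally be tuned on validation data rather than on the hold-out test set.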

The confusion matrix results for the model outputs are presented above. [Fig. 5] Correctly predicted values are highlighted in green and incorrectly predicted values are highlighted in red.

For malware prediction, the two metrics of interest are overall accuracy (true positives plus true negatives, divided by all events) and sensitivity (true positives divided by actual positives). Sensitivity is arguably the more important metric, because a false negative (predicting that an event is not malicious when it actually is) carries the highest risk to the user.
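The two metrics follow directly from the four cells of a confusion matrix. The counts in this sketch are hypothetical and chosen only to show the arithmetic; they are not the counts from this project.

```python
def accuracy(tp, tn, fp, fn):
    """(True positives + true negatives) divided by all events."""
    return (tp + tn) / (tp + tn + fp + fn)


def sensitivity(tp, fn):
    """True positives divided by actual positives (true positives + false negatives)."""
    return tp / (tp + fn)


# Hypothetical confusion matrix counts.
tp, tn, fp, fn = 45, 40, 10, 5

acc = accuracy(tp, tn, fp, fn)
sens = sensitivity(tp, fn)
print(f"accuracy={acc:.2f} sensitivity={sens:.2f}")
```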

- The logistic regression model resulted in an accuracy of 83% with sensitivity of 80%.

- The gradient-boosted trees model resulted in an accuracy of 96% and sensitivity of 97%.

These results could likely be improved further with more time for feature engineering.

An ideal threat detection method would continuously monitor the mobile device's running processes and internal state, raising a flag as soon as possible after a malicious event begins. However, continuous monitoring consumes significant battery power, so a trade-off must be made between the user's desire for longer battery life and the economic or efficiency losses caused by disruption of device availability. For example, analyzing internal-state metrics for malware by aggregating features over a period of 20 seconds may provide sufficient malware detection resolution while also avoiding excessive battery drain.
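As a rough illustration of aggregating features over fixed 20-second periods, the sketch below buckets timestamped samples into non-overlapping windows. The function name `window_features`, the feature names, and the sample readings are hypothetical; only the 20-second window length comes from the example above.

```python
from collections import defaultdict

WINDOW_SECONDS = 20  # window length taken from the example in the text


def window_features(readings, window=WINDOW_SECONDS):
    """Bucket (timestamp_seconds, cpu_percent) samples into fixed windows.

    Returns a dict mapping each window's start time to its aggregated features.
    """
    buckets = defaultdict(list)
    for ts, cpu in readings:
        window_start = int(ts // window) * window
        buckets[window_start].append(cpu)
    return {
        start: {"cpu_max": max(values), "cpu_mean": sum(values) / len(values)}
        for start, values in sorted(buckets.items())
    }


# Hypothetical readings: (seconds since start, CPU utilization in percent).
readings = [(0, 10.0), (5, 30.0), (19, 20.0), (20, 80.0), (39, 100.0), (61, 5.0)]
features = window_features(readings)
for start, row in features.items():
    print(start, row)
```

Each window then yields one feature row that a trained model could score, so the device evaluates a classifier once per window instead of on every sample.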

My sincere thanks to Yisroel Mirsky of Ben-Gurion University of the Negev, Dept. of Software and Information Systems Engineering