InternLM-XComposer2

Thanks to the community for the HuggingFace Demo | OpenXLab Demo of InternLM-XComposer2.

👋 join us on Discord and WeChat

InternLM-XComposer2: Mastering Free-form Text-Image Composition and Comprehension in Vision-Language Large Models

InternLM-XComposer: A Vision-Language Large Model for Advanced Text-image Comprehension and Composition

ShareGPT4V: Improving Large Multi-modal Models with Better Captions

DualFocus: Integrating Macro and Micro Perspectives in Multi-modal Large Language Models

InternLM-XComposer2 is a groundbreaking vision-language large model (VLLM) based on InternLM2-7B that excels in free-form text-image composition and comprehension. It offers several notable capabilities and applications:

-

Free-form Interleaved Text-Image Composition: InternLM-XComposer2 can effortlessly generate coherent and contextual articles with interleaved images following diverse inputs like outlines, detailed text requirements and reference images, enabling highly customizable content creation.

-

Accurate Vision-language Problem-solving: InternLM-XComposer2 accurately handles diverse and challenging vision-language Q&A tasks based on free-form instructions, excelling in recognition, perception, detailed captioning, visual reasoning, and more.

-

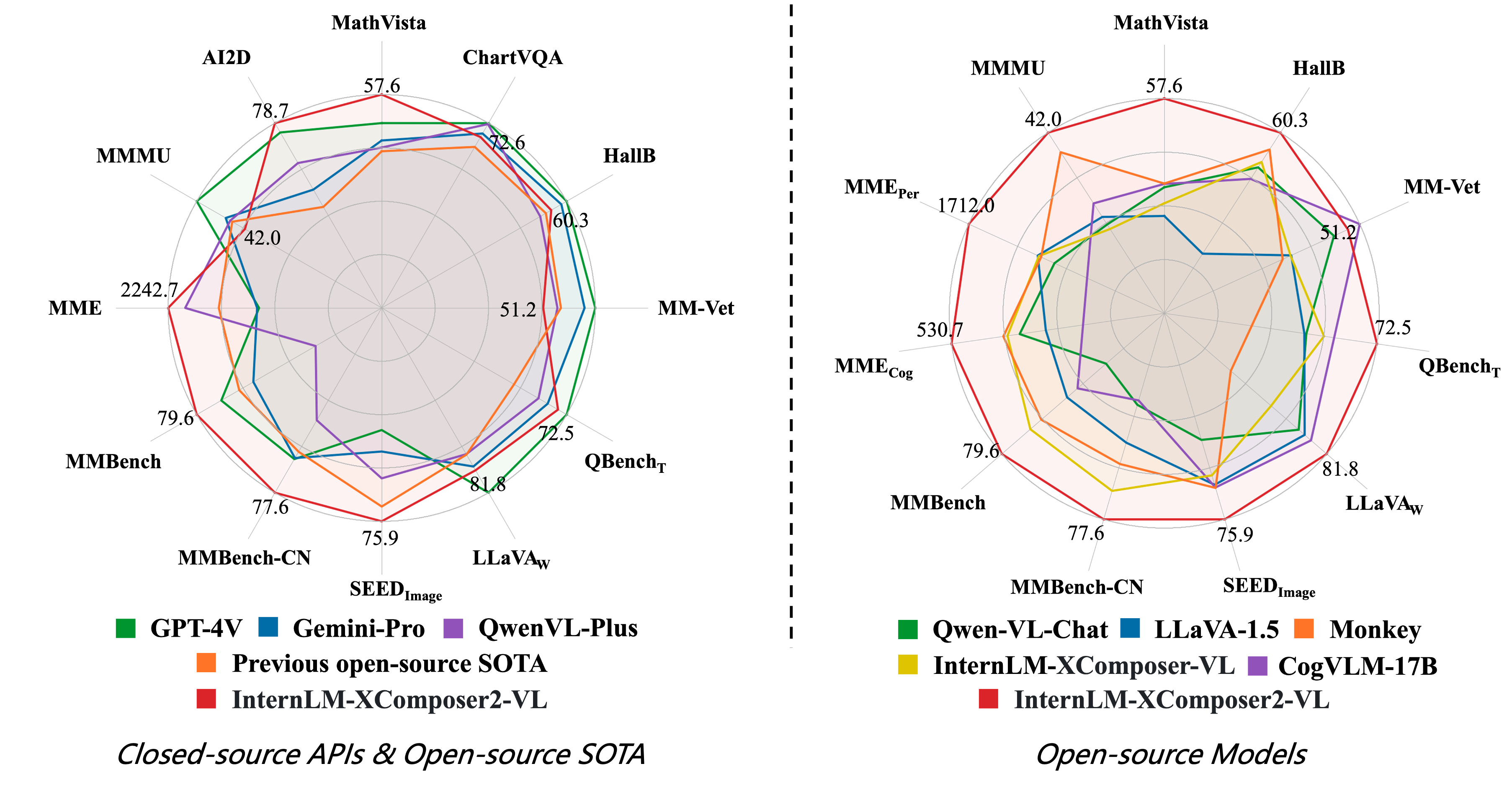

Awesome performance: InternLM-XComposer2 based on InternLM2-7B not only significantly outperforms existing open-source multimodal models in 13 benchmarks but also matches or even surpasses GPT-4V and Gemini Pro in 6 benchmarks

We release the InternLM-XComposer2 series in two versions:

- InternLM-XComposer2-VL-7B 🤗 : The multi-task trained VLLM, with InternLM2-7B as the initialization of the LLM, for VL benchmarks and AI assistant use. It ranks as the most powerful vision-language model based on 7B-parameter level LLMs, leading across 13 benchmarks.

- InternLM-XComposer2-7B 🤗 : The further instruction-tuned VLLM for Interleaved Text-Image Composition with free-form inputs.

Please refer to the Technical Report for more details.

InternLM-XComposer2_cps.mp4

Please refer to the Chinese Demo for the Chinese version of the demo.

2024.02.22🎉🎉🎉 We release DualFocus, a framework for integrating macro and micro perspectives within MLLMs to enhance vision-language task performance.

2024.02.06🎉🎉🎉 InternLM-XComposer2-7B-4bit and InternLM-XComposer2-VL-7B-4bit are publicly available on Hugging Face and ModelScope.

2024.02.02🎉🎉🎉 The finetune code of InternLM-XComposer2-VL-7B is publicly available.

2024.01.26🎉🎉🎉 The evaluation code of InternLM-XComposer2-VL-7B is publicly available.

2024.01.26🎉🎉🎉 InternLM-XComposer2-7B and InternLM-XComposer2-VL-7B are publicly available on Hugging Face and ModelScope.

2024.01.26🎉🎉🎉 We release a technical report for more details of the InternLM-XComposer2 series.

2023.11.22🎉🎉🎉 We release ShareGPT4V, a large-scale highly descriptive image-text dataset generated by GPT4-Vision, and a superior large multimodal model, ShareGPT4V-7B.

2023.10.30🎉🎉🎉 InternLM-XComposer-VL achieved the top 1 ranking in both Q-Bench and Tiny LVLM.

2023.10.19🎉🎉🎉 Support for inference on multiple GPUs. Two 4090 GPUs are sufficient for deploying our demo.

2023.10.12🎉🎉🎉 4-bit demo is supported; model files are available on Hugging Face and ModelScope.

2023.10.8🎉🎉🎉 InternLM-XComposer-7B and InternLM-XComposer-VL-7B are publicly available on ModelScope.

2023.9.27🎉🎉🎉 The evaluation code of InternLM-XComposer-VL-7B is publicly available.

2023.9.27🎉🎉🎉 InternLM-XComposer-7B and InternLM-XComposer-VL-7B are publicly available on Hugging Face.

2023.9.27🎉🎉🎉 We release a technical report for more details of our model series.

| Model | Usage | Transformers(HF) | ModelScope(HF) | Release Date |
|---|---|---|---|---|
| InternLM-XComposer2 | Text-Image Composition | 🤗internlm-xcomposer2-7b | internlm-xcomposer2-7b | 2024-01-26 |
| InternLM-XComposer2-VL | Benchmark, VL-Chat | 🤗internlm-xcomposer2-vl-7b | internlm-xcomposer2-vl-7b | 2024-01-26 |
| InternLM-XComposer2-4bit | Text-Image Composition | 🤗internlm-xcomposer2-7b-4bit | internlm-xcomposer2-7b-4bit | 2024-02-06 |
| InternLM-XComposer2-VL-4bit | Benchmark, VL-Chat | 🤗internlm-xcomposer2-vl-7b-4bit | internlm-xcomposer2-vl-7b-4bit | 2024-02-06 |
| InternLM-XComposer | Text-Image Composition, VL-Chat | 🤗internlm-xcomposer-7b | internlm-xcomposer-7b | 2023-09-26 |
| InternLM-XComposer-4bit | Text-Image Composition, VL-Chat | 🤗internlm-xcomposer-7b-4bit | internlm-xcomposer-7b-4bit | 2023-09-26 |
| InternLM-XComposer-VL | Benchmark | 🤗internlm-xcomposer-vl-7b | internlm-xcomposer-vl-7b | 2023-09-26 |

We evaluate InternLM-XComposer2-VL on 13 multimodal benchmarks: MathVista, MMMU, AI2D, MME, MMBench, MMBench-CN, SEED-Bench, QBench, HallusionBench, ChartQA, MM-Vet, LLaVA-in-the-wild, POPE.

See Evaluation Details here.

| | MathVista | AI2D | MMMU | MME | MMB | MMB-CN | SEED-I | LLaVA-W | QBench-T | MM-Vet | HallB | ChartVQA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Open-source Previous SOTA | SPH-MOE | Monkey | Yi-VL | WeMM | L-Int2 | L-Int2 | SPH-2 | CogVLM | Int-XC | CogVLM | Monkey | CogAgent |
| | 8x7B | 10B | 34B | 6B | 20B | 20B | 17B | 17B | 8B | 30B | 10B | 18B |
| | 42.3 | 72.6 | 45.9 | 2066.6 | 75.1 | 73.7 | 74.8 | 73.9 | 64.4 | 56.8 | 58.4 | 68.4 |
| GPT-4V | 49.9 | 78.2 | 56.8 | 1926.5 | 77 | 74.4 | 69.1 | 93.1 | 74.1 | 67.7 | 65.8 | 78.5 |
| Gemini-Pro | 45.2 | 73.9 | 47.9 | 1933.3 | 73.6 | 74.3 | 70.7 | 79.9 | 70.6 | 64.3 | 63.9 | 74.1 |
| QwenVL-Plus | 43.3 | 75.9 | 46.5 | 2183.3 | 67 | 70.7 | 72.7 | 73.7 | 68.9 | 55.7 | 56.4 | 78.1 |
| InternLM-XComposer2-VL | 57.6 | 78.7 | 42 | 2242.7 | 79.6 | 77.6 | 75.9 | 81.8 | 72.5 | 51.2 | 60.3 | 72.6 |
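The statement that InternLM-XComposer2-VL matches or surpasses GPT-4V and Gemini-Pro on 6 benchmarks can be checked against the rows above. The following is a minimal sketch that hard-codes the three relevant rows from this table and lists the benchmarks where InternLM-XComposer2-VL scores at least as high as both closed-source models. The names `BENCHMARKS`, `SCORES`, and `benchmarks_matched_or_beaten` are illustrative and not part of the repository.

```python
# Scores copied from the comparison table above (higher is better on every benchmark).
BENCHMARKS = ["MathVista", "AI2D", "MMMU", "MME", "MMB", "MMB-CN",
              "SEED-I", "LLaVA-W", "QBench-T", "MM-Vet", "HallB", "ChartVQA"]

SCORES = {
    "GPT-4V": [49.9, 78.2, 56.8, 1926.5, 77, 74.4, 69.1, 93.1, 74.1, 67.7, 65.8, 78.5],
    "Gemini-Pro": [45.2, 73.9, 47.9, 1933.3, 73.6, 74.3, 70.7, 79.9, 70.6, 64.3, 63.9, 74.1],
    "InternLM-XComposer2-VL": [57.6, 78.7, 42, 2242.7, 79.6, 77.6, 75.9, 81.8, 72.5, 51.2, 60.3, 72.6],
}


def benchmarks_matched_or_beaten(model, rivals):
    """Return the benchmarks where `model` scores >= every model in `rivals`."""
    mine = SCORES[model]
    return [name for i, name in enumerate(BENCHMARKS)
            if all(mine[i] >= SCORES[rival][i] for rival in rivals)]


wins = benchmarks_matched_or_beaten("InternLM-XComposer2-VL", ["GPT-4V", "Gemini-Pro"])
print(len(wins), wins)
# 6 ['MathVista', 'AI2D', 'MME', 'MMB', 'MMB-CN', 'SEED-I']
```

Passing a single rival (for example `["Gemini-Pro"]`) gives the per-model comparison instead of the joint one.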

| Method | LLM | MathVista | MMMU | MME-P | MME-C | MMB | MMB-CN | SEED-I | LLaVA-W | QBench-T | MM-Vet | HallB | POPE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BLIP-2 | FLAN-T5 | - | 35.7 | 1,293.8 | 290.0 | - | - | 46.4 | 38.1 | - | 22.4 | - | - |
| InstructBLIP | Vicuna-7B | 25.3 | 30.6 | - | - | 36.0 | 23.7 | 53.4 | 60.9 | 55.9 | 26.2 | 53.6 | 78.9 |
| IDEFICS-80B | LLaMA-65B | 26.2 | 24.0 | - | - | 54.5 | 38.1 | 52.0 | 56.9 | - | 39.7 | 46.1 | - |
| Qwen-VL-Chat | Qwen-7B | 33.8 | 35.9 | 1,487.5 | 360.7 | 60.6 | 56.7 | 58.2 | 67.7 | 61.7 | 47.3 | 56.4 | - |
| LLaVA | Vicuna-7B | 23.7 | 32.3 | 807.0 | 247.9 | 34.1 | 14.1 | 25.5 | 63.0 | 54.7 | 26.7 | 44.1 | 80.2 |
| LLaVA-1.5 | Vicuna-13B | 26.1 | 36.4 | 1,531.3 | 295.4 | 67.7 | 63.6 | 68.2 | 70.7 | 61.4 | 35.4 | 46.7 | 85.9 |
| ShareGPT4V | Vicuna-7B | 25.8 | 36.6 | 1,567.4 | 376.4 | 68.8 | 62.2 | 69.7 | 72.6 | - | 37.6 | 49.8 | - |
| CogVLM-17B | Vicuna-7B | 34.7 | 37.3 | - | - | 65.8 | 55.9 | 68.8 | 73.9 | - | 54.5 | 55.1 | - |
| LLaVA-XTuner | InternLM2-20B | 24.6 | 39.4 | - | - | 75.1 | 73.7 | 70.2 | 63.7 | - | 37.2 | 47.7 | - |
| Monkey-10B | Qwen-7B | 34.8 | 40.7 | 1,522.4 | 401.4 | 72.4 | 67.5 | 68.9 | 33.5 | - | 33.0 | 58.4 | - |
| InternLM-XComposer | InternLM-7B | 29.5 | 35.6 | 1,528.4 | 391.1 | 74.4 | 72.4 | 66.1 | 53.8 | 64.4 | 35.2 | 57.0 | - |
| InternLM-XComposer2-VL | InternLM2-7B | 57.6 | 43.0 | 1,712.0 | 530.7 | 79.6 | 77.6 | 75.9 | 81.8 | 72.5 | 51.2 | 59.1 | 87.7 |
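This table splits MME into a perception score (MME-P) and a cognition score (MME-C), while the first table reports a single MME number. The sketch below, with values copied from the rows above, shows that adding the two parts for InternLM-XComposer2-VL reproduces the 2242.7 listed in the first table. The dictionary layout and the helper name `mme_total` are only for illustration.

```python
# (MME-P, MME-C) pairs copied from the table above.
MME_PARTS = {
    "InternLM-XComposer": (1528.4, 391.1),
    "InternLM-XComposer2-VL": (1712.0, 530.7),
}


def mme_total(perception, cognition):
    """MME total taken as perception + cognition, rounded to one decimal place."""
    return round(perception + cognition, 1)


totals = {name: mme_total(p, c) for name, (p, c) in MME_PARTS.items()}
for name, total in totals.items():
    print(f"{name}: {total}")
# InternLM-XComposer: 1919.5
# InternLM-XComposer2-VL: 2242.7
```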

- Python 3.8 and above

- PyTorch 1.12 and above; 2.0 and above is recommended

- CUDA 11.4 and above is recommended (this is for GPU users)

Before running the code, make sure you have set up the environment and meet the above requirements, and then install the dependent libraries. Please refer to the installation instructions.
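As a quick way to compare a local setup against the requirements listed above, here is a minimal sketch of an environment check. It is not part of the repository: `parse_version` and `check_environment` are hypothetical helpers, and the script only reads the version attributes that Python and PyTorch expose (`sys.version_info`, `torch.__version__`, `torch.version.cuda`). A value of `None` means the item could not be checked, for example when PyTorch is not installed or is a CPU-only build.

```python
import re
import sys


def parse_version(text):
    """Convert a version string such as '2.1.0+cu118' into a tuple like (2, 1, 0)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment():
    """Return a dict mapping each requirement to True, False, or None (not checkable)."""
    report = {"python>=3.8": sys.version_info >= (3, 8)}
    try:
        import torch
    except ImportError:
        report["pytorch>=1.12"] = None
        report["cuda>=11.4"] = None
        return report
    report["pytorch>=1.12"] = parse_version(torch.__version__) >= (1, 12)
    cuda = torch.version.cuda  # None for CPU-only builds
    report["cuda>=11.4"] = None if cuda is None else parse_version(cuda) >= (11, 4)
    return report


if __name__ == "__main__":
    for requirement, ok in check_environment().items():
        print(f"{requirement}: {ok}")
```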

We provide a simple example to show how to use InternLM-XComposer with 🤗 Transformers.

🤗 Transformers

    import torch
    from transformers import AutoModel, AutoTokenizer

    torch.set_grad_enabled(False)

    # init model and tokenizer
    model = AutoModel.from_pretrained('internlm/internlm-xcomposer2-vl-7b', trust_remote_code=True).cuda().eval()
    tokenizer = AutoTokenizer.from_pretrained('internlm/internlm-xcomposer2-vl-7b', trust_remote_code=True)

    text = '<ImageHere>Please describe this image in detail.'
    image = 'examples/image1.webp'
    with torch.cuda.amp.autocast():
        response, _ = model.chat(tokenizer, query=text, image=image, history=[], do_sample=False)
    print(response)
    # The image features a quote by Oscar Wilde, "Live life with no excuses, travel with no regret,"
    # set against a backdrop of a breathtaking sunset. The sky is painted in hues of pink and orange,
    # creating a serene atmosphere. Two silhouetted figures stand on a cliff, overlooking the horizon.
    # They appear to be hiking or exploring, embodying the essence of the quote.
    # The overall scene conveys a sense of adventure and freedom, encouraging viewers to embrace life without hesitation or regrets.

🤖 ModelScope

    import torch
    from modelscope import snapshot_download, AutoModel, AutoTokenizer

    torch.set_grad_enabled(False)

    # init model and tokenizer
    model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm-xcomposer2-vl-7b')
    model = AutoModel.from_pretrained(model_dir, trust_remote_code=True).cuda().eval()
    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    model.tokenizer = tokenizer

    text = '<ImageHere>Please describe this image in detail.'
    image = 'examples/image1.webp'
    with torch.cuda.amp.autocast():
        response, _ = model.chat(tokenizer, query=text, image=image, history=[], do_sample=False)
    print(response)
    # The image features a quote by Oscar Wilde, "Live life with no excuses, travel with no regret,"
    # set against a backdrop of a breathtaking sunset. The sky is painted in hues of pink and orange,
    # creating a serene atmosphere. Two silhouetted figures stand on a cliff, overlooking the horizon.
    # They appear to be hiking or exploring, embodying the essence of the quote.
    # The overall scene conveys a sense of adventure and freedom, encouraging viewers to embrace life without hesitation or regrets.

We provide 4-bit quantized models to ease the memory requirement of the models. To run the 4-bit models (GPU memory >= 12GB), you first need to install the corresponding dependency, then execute the following script for chat:

🤗 Transformers

    import torch, auto_gptq
    from transformers import AutoModel, AutoTokenizer
    from auto_gptq.modeling._base import BaseGPTQForCausalLM

    auto_gptq.modeling._base.SUPPORTED_MODELS = ["internlm"]
    torch.set_grad_enabled(False)

    class InternLMXComposer2QForCausalLM(BaseGPTQForCausalLM):
        layers_block_name = "model.layers"
        outside_layer_modules = [
            'vit', 'vision_proj', 'model.tok_embeddings', 'model.norm', 'output',
        ]
        inside_layer_modules = [
            ["attention.wqkv.linear"],
            ["attention.wo.linear"],
            ["feed_forward.w1.linear", "feed_forward.w3.linear"],
            ["feed_forward.w2.linear"],
        ]

    # init model and tokenizer
    model = InternLMXComposer2QForCausalLM.from_quantized(
        'internlm/internlm-xcomposer2-vl-7b-4bit', trust_remote_code=True, device="cuda:0").eval()
    tokenizer = AutoTokenizer.from_pretrained(
        'internlm/internlm-xcomposer2-vl-7b-4bit', trust_remote_code=True)

    text = '<ImageHere>Please describe this image in detail.'
    image = 'examples/image1.webp'
    with torch.cuda.amp.autocast():
        response, _ = model.chat(tokenizer, query=text, image=image, history=[], do_sample=False)
    print(response)
    # The image features a quote by Oscar Wilde, "Live life with no excuses, travel with no regrets."
    # The quote is displayed in white text against a dark background. In the foreground, there are two silhouettes of people standing on a hill at sunset.
    # They appear to be hiking or climbing, as one of them is holding a walking stick.
    # The sky behind them is painted with hues of orange and purple, creating a beautiful contrast with the dark figures.

Please refer to our finetune scripts.

Thanks to the community for the 3rd-party HuggingFace Demo.

We provide code for users to build a web UI demo.

Please run the command below for Composition / Chat:

    # For Free-form Text-Image Composition
    python examples/gradio_demo_composition.py

    # For Multimodal Chat
    python examples/gradio_demo_chat.py

The user guide for the UI demo is given HERE. If you wish to change the default folder of the model, please use the --folder=new_folder option.

If you find our models / code / papers useful in your research, please consider giving stars ⭐ and citations 📝, thx :)

    @article{internlmxcomposer2,
      title={InternLM-XComposer2: Mastering Free-form Text-Image Composition and Comprehension in Vision-Language Large Model},
      author={Xiaoyi Dong and Pan Zhang and Yuhang Zang and Yuhang Cao and Bin Wang and Linke Ouyang and Xilin Wei and Songyang Zhang and Haodong Duan and Maosong Cao and Wenwei Zhang and Yining Li and Hang Yan and Yang Gao and Xinyue Zhang and Wei Li and Jingwen Li and Kai Chen and Conghui He and Xingcheng Zhang and Yu Qiao and Dahua Lin and Jiaqi Wang},
      journal={arXiv preprint arXiv:2401.16420},
      year={2024}
    }

    @article{internlmxcomposer,
      title={InternLM-XComposer: A Vision-Language Large Model for Advanced Text-image Comprehension and Composition},
      author={Pan Zhang and Xiaoyi Dong and Bin Wang and Yuhang Cao and Chao Xu and Linke Ouyang and Zhiyuan Zhao and Shuangrui Ding and Songyang Zhang and Haodong Duan and Wenwei Zhang and Hang Yan and Xinyue Zhang and Wei Li and Jingwen Li and Kai Chen and Conghui He and Xingcheng Zhang and Yu Qiao and Dahua Lin and Jiaqi Wang},
      journal={arXiv preprint arXiv:2309.15112},
      year={2023}
    }

The code is licensed under Apache-2.0, while model weights are fully open for academic research and also allow free commercial usage. To apply for a commercial license, please fill in the application form (English) / application form (Chinese). For other questions or collaborations, please contact internlm@pjlab.org.cn.