Source code of the 1st place solution for the SIIM-FISABIO-RSNA COVID-19 Detection Challenge.

- Ubuntu 18.04.5 LTS

- CUDA 10.2

- Python 3.7.9

- Python packages are listed separately in requirements.txt

$ conda create -n envs python=3.7.9

$ conda activate envs

$ conda install -c conda-forge gdcm

$ pip install -r requirements.txt

$ pip install git+https://github.com/ildoonet/pytorch-gradual-warmup-lr.git

$ pip install git+https://github.com/bes-dev/mean_average_precision.git@930df3618c924b694292cc125114bad7c7f3097e

- Download the competition dataset (link) and extract it to ./dataset/siim-covid19-detection

$ cd src/prepare

$ python dicom2image_siim.py

$ python kfold_split.py

$ python prepare_siim_annotation.py # effdet and yolo format

$ cp -r ../../dataset/siim-covid19-detection/images ../../dataset/lung_crop/.

$ python prepare_siim_lung_crop_annotation.py
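The conversion scripts are not reproduced here. As a rough sketch of what a DICOM-to-image step commonly involves, the hypothetical helper `to_uint8` below min-max scales a raw pixel array to 8 bits and inverts MONOCHROME1 images, whose stored values run from white to black. It assumes the array has already been read (for example via pydicom's `pixel_array`); whether dicom2image_siim.py also applies a VOI LUT or resizes the image is not covered by this sketch.

```python
import numpy as np

def to_uint8(pixels: np.ndarray, photometric: str = "MONOCHROME2") -> np.ndarray:
    """Min-max scale a raw DICOM pixel array to uint8 (hypothetical helper)."""
    data = pixels.astype(np.float32)
    # MONOCHROME1 stores inverted intensities: flip so that dense tissue appears bright.
    if photometric == "MONOCHROME1":
        data = data.max() - data
    data -= data.min()
    peak = data.max()
    if peak > 0:
        data /= peak
    return (data * 255.0).round().astype(np.uint8)

if __name__ == "__main__":
    raw = np.array([[0, 2048], [4095, 1024]], dtype=np.uint16)  # 12-bit example values
    print(to_uint8(raw))
    print(to_uint8(raw, "MONOCHROME1"))
```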

- Download the pneumothorax dataset (link) and extract it to ./dataset/external_dataset/pneumothorax/dicoms

- Download the pneumonia dataset (link) and extract it to ./dataset/external_dataset/rsna-pneumonia-detection-challenge/dicoms

- Download the vinbigdata dataset (link) and extract it to ./dataset/external_dataset/vinbigdata/dicoms

- Download the chest14 dataset (link) and extract it to ./dataset/external_dataset/chest14/images

- Download the chexpert high-resolution dataset (link) and extract it to ./dataset/external_dataset/chexpert/train

- Download the padchest dataset (link) and extract it to ./dataset/external_dataset/padchest/images

- Note: most of the images in BIMCV and RICORD are duplicates of images in the SIIM COVID train and test sets. To avoid data leakage during training, I didn't use them. You can check for these duplicates with src/prepare/check_bimcv_ricord_dup.py
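check_bimcv_ricord_dup.py is not shown here, and its method may differ. One common way to flag near-duplicate radiographs across datasets is a perceptual hash; the sketch below uses a simple average hash on grayscale arrays plus a Hamming-distance threshold. The hash size, the threshold, and the function names are illustrative assumptions.

```python
import numpy as np

def average_hash(image: np.ndarray, hash_size: int = 8) -> np.ndarray:
    """Block-average a 2-D grayscale image to hash_size x hash_size and threshold at the mean."""
    h, w = image.shape
    # Crop so both sides divide evenly into hash_size blocks.
    image = image[: h - h % hash_size, : w - w % hash_size].astype(np.float64)
    bh, bw = image.shape[0] // hash_size, image.shape[1] // hash_size
    small = image.reshape(hash_size, bh, hash_size, bw).mean(axis=(1, 3))
    return (small > small.mean()).flatten()

def find_duplicates(query: dict, reference: dict, max_distance: int = 5) -> list:
    """Return (query_id, reference_id) pairs whose hashes differ in at most max_distance bits."""
    ref_hashes = {rid: average_hash(img) for rid, img in reference.items()}
    pairs = []
    for qid, img in query.items():
        q = average_hash(img)
        for rid, r in ref_hashes.items():
            if np.count_nonzero(q != r) <= max_distance:
                pairs.append((qid, rid))
    return pairs
```

Because the hash compares block means against their own mean, a uniform brightness shift does not change it, which is the kind of re-export difference such a check usually needs to tolerate.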

$ cd src/prepare

$ python dicom2image_pneumothorax.py

$ python dicom2image_pneumonia.py

$ python prepare_pneumonia_annotation.py # effdet and yolo format

$ python dicom2image_vinbigdata.py

$ python prepare_vinbigdata.py

$ python refine_data.py # remove unused files from the chexpert, chest14 and padchest datasets

$ python resize_padchest_pneumothorax.py
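The annotation scripts write boxes in EfficientDet and YOLO formats. For reference, YOLO label files conventionally hold one `class x_center y_center width height` row per box, with all coordinates normalized by the image width and height. A minimal converter from absolute (x_min, y_min, x_max, y_max) pixel boxes, assuming that input layout (`to_yolo_line` is a hypothetical name):

```python
def to_yolo_line(box, class_id, img_w, img_h):
    """Convert an absolute (x_min, y_min, x_max, y_max) box to a YOLO label row."""
    x_min, y_min, x_max, y_max = box
    xc = (x_min + x_max) / 2.0 / img_w  # normalized box center
    yc = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w         # normalized box size
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"

print(to_yolo_line((100, 200, 300, 600), 0, 1000, 800))
# 0 0.200000 0.500000 0.200000 0.500000
```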

The dataset structure should match ./dataset/dataset_structure.txt

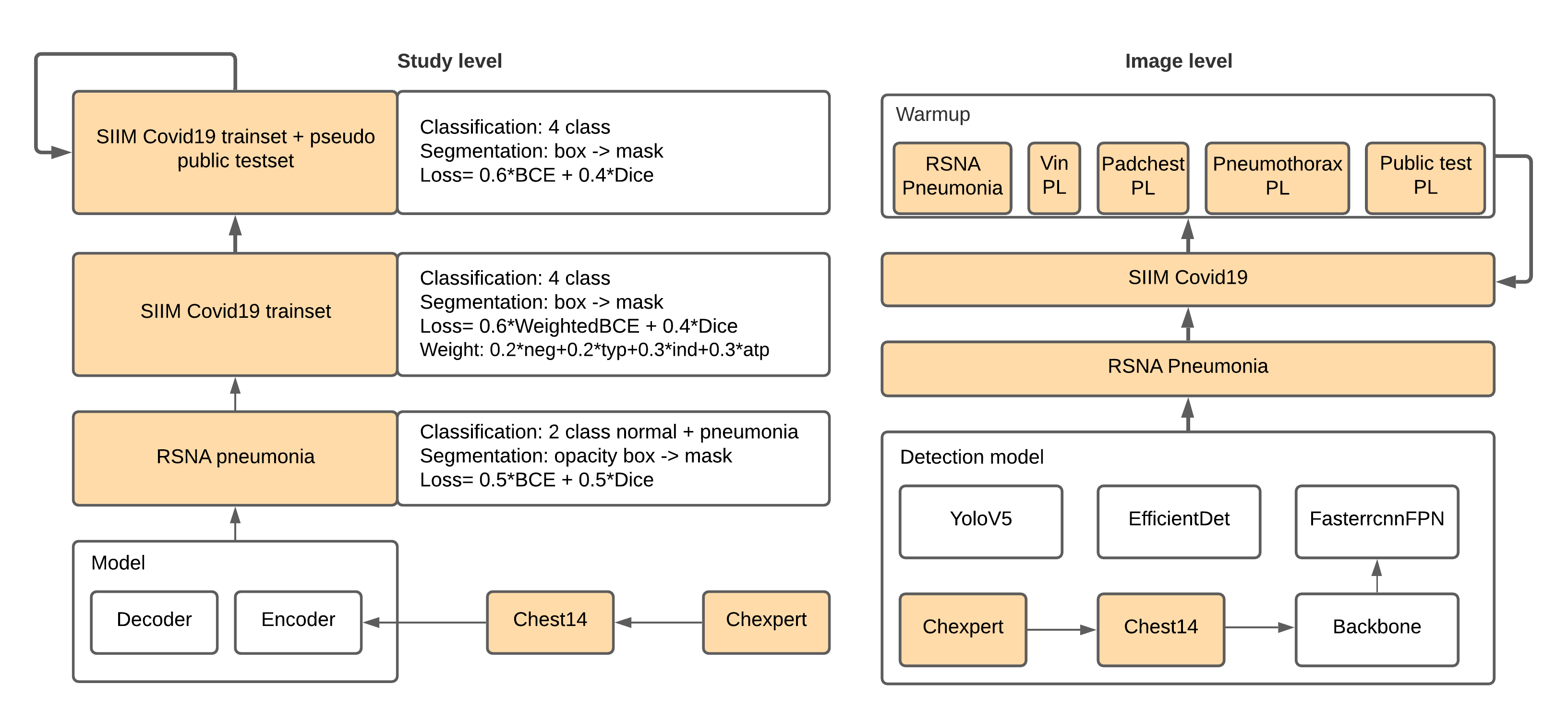

- Stage1

$ cd src/classification_aux

$ bash train_chexpert_chest14.sh #Pretrain backbone on chexpert + chest14

$ bash train_rsnapneu.sh #Pretrain rsna_pneumonia

$ bash train_siim.sh #Train siim covid19

- Stage2: Generate soft labels for the classification head and masks for the segmentation head.

Output: soft labels in ./pseudo_csv/[source].csv and public test masks in ./prediction_mask/public_test/masks

$ bash generate_pseudo_label.sh [checkpoints_dir]
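generate_pseudo_label.sh is not reproduced here. To illustrate the soft-label idea, the sketch below averages the class probabilities predicted by several checkpoints into one soft label per image, then scores a prediction against it with a soft-target cross-entropy. Averaging across checkpoints and the choice of a softmax cross-entropy are assumptions made for the example; the repository may combine predictions and define the loss differently.

```python
import numpy as np

def make_soft_labels(model_probs):
    """Average per-model probability arrays, each of shape (num_images, 4)."""
    return np.mean(np.stack(model_probs), axis=0)

def soft_cross_entropy(logits, soft_targets):
    """Mean cross-entropy between softmax(logits) and soft target distributions."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(soft_targets * log_probs).sum(axis=1).mean())

# Columns: negative, typical, indeterminate, atypical
fold_a = np.array([[0.7, 0.1, 0.1, 0.1]])
fold_b = np.array([[0.5, 0.3, 0.1, 0.1]])
soft = make_soft_labels([fold_a, fold_b])          # [[0.6, 0.2, 0.1, 0.1]]
loss = soft_cross_entropy(np.zeros((1, 4)), soft)  # uniform prediction: about log(4)
```

Unlike a one-hot target, the soft label keeps the models' uncertainty between, say, typical and indeterminate, so the retrained head is not pushed to be overconfident on ambiguous studies.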

- Stage3: Train the model on the train set + public test set, loading the checkpoint from the previous round

$ bash train_pseudo.sh [previous_checkpoints_dir] [new_checkpoints_dir]

Rounds of pseudo labeling (stage2) and retraining (stage3) were repeated until the score on the public LB stopped improving.
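The round structure can be summarized as a loop that stops as soon as a round fails to improve the public LB score. In the sketch below, `generate_pseudo_labels`, `retrain`, and `public_lb_score` are hypothetical stand-ins for running generate_pseudo_label.sh, running train_pseudo.sh, and submitting to Kaggle; the checkpoint naming is also made up for the example.

```python
from typing import Callable

def pseudo_label_rounds(
    start_ckpt: str,
    generate_pseudo_labels: Callable[[str], None],
    retrain: Callable[[str, str], None],
    public_lb_score: Callable[[str], float],
    max_rounds: int = 10,
) -> str:
    """Alternate stage2 and stage3 until the public LB score stops improving."""
    best_ckpt, best_score = start_ckpt, public_lb_score(start_ckpt)
    for round_idx in range(1, max_rounds + 1):
        generate_pseudo_labels(best_ckpt)             # stage2: soft labels + masks
        new_ckpt = f"checkpoints_round{round_idx}"    # hypothetical naming scheme
        retrain(best_ckpt, new_ckpt)                  # stage3: initialized from best_ckpt
        score = public_lb_score(new_ckpt)
        if score <= best_score:
            break                                     # no improvement: keep the previous checkpoint
        best_ckpt, best_score = new_ckpt, score
    return best_ckpt
```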

- For the final checkpoints:

$ bash generate_pseudo_label.sh checkpoints_v3

$ bash train_pseudo.sh checkpoints_v3 checkpoints_v4

- For evaluation

$ CUDA_VISIBLE_DEVICES=0 python evaluate.py --cfg configs/xxx.yaml --num_tta xxx

mAP@0.5 over 4 classes: negative, typical, indeterminate, atypical

| | SeR152-Unet | EB5-Deeplab | EB6-Linknet | EB7-Unet++ | Ensemble |
|---|---|---|---|---|---|
| w/o TTA/8TTA | 0.575/0.584 | 0.583/0.592 | 0.580/0.587 | 0.589/0.595 | 0.595/0.598 |
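For reference, a per-class average precision averaged over the four classes can be sketched as below. This is a simple non-interpolated AP written for illustration; the competition scorer and evaluate.py may interpolate precision differently, so this helper's numbers may not match the table exactly.

```python
import numpy as np

def average_precision(y_true, scores):
    """Non-interpolated AP: mean precision at the rank of each positive sample."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(y_true)[order].astype(bool)
    if not hits.any():
        return 0.0
    ranks = np.arange(1, len(hits) + 1)
    precision_at_k = np.cumsum(hits) / ranks
    return float(precision_at_k[hits].mean())

def mean_ap(labels, probs):
    """labels: (N,) class indices; probs: (N, C) class scores. Returns the mean AP over classes."""
    labels = np.asarray(labels)
    probs = np.asarray(probs, dtype=float)
    return float(np.mean([average_precision(labels == c, probs[:, c]) for c in range(probs.shape[1])]))
```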

8TTA: (original, 80% center crop) x (none, hflip, vflip, hflip & vflip). In the final submission, I used the 4.1.2 lung detector instead of the 80% center crop.
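The 8 views are the product of 2 crops and 4 flip settings. A minimal numpy sketch of building them, assuming an (H, W) or (H, W, C) image array; resizing each view to the model input size is left out, and the function names are hypothetical.

```python
import numpy as np

def center_crop(image, ratio=0.8):
    """Keep the central `ratio` fraction of the height and width."""
    h, w = image.shape[:2]
    ch, cw = int(round(h * ratio)), int(round(w * ratio))
    top, left = (h - ch) // 2, (w - cw) // 2
    return image[top:top + ch, left:left + cw]

def tta_views(image, crop_ratio=0.8):
    """(original, center crop) x (none, hflip, vflip, hflip & vflip) = 8 views."""
    views = []
    for base in (image, center_crop(image, crop_ratio)):
        views.append(base)
        views.append(base[:, ::-1])     # horizontal flip
        views.append(base[::-1, :])     # vertical flip
        views.append(base[::-1, ::-1])  # horizontal + vertical flip
    return views
```

Class probabilities from the 8 views can be averaged directly; segmentation masks would first need to be flipped back (and, for the cropped views, placed back into the full frame) before averaging.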

4.1.2 Lung Detector-YoloV5

I annotated the training data (6334 images) using LabelImg and built a lung localizer. I noticed that increasing the input image size improves model performance, and that the lung detector helps the model reduce background noise.
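Given a predicted lung box, cropping the radiograph to that box (with a small margin) is one way the detector can strip background before classification. The sketch below assumes boxes in pixel (x_min, y_min, x_max, y_max) format; the margin value and the helper name are illustrative assumptions.

```python
import numpy as np

def crop_to_lung(image, box, margin=0.05):
    """Crop `image` (H, W[, C]) to `box` expanded by `margin` of its width/height."""
    h, w = image.shape[:2]
    x_min, y_min, x_max, y_max = box
    dx = (x_max - x_min) * margin
    dy = (y_max - y_min) * margin
    # Expand the box, then clip it to the image borders.
    x0 = max(0, int(np.floor(x_min - dx)))
    y0 = max(0, int(np.floor(y_min - dy)))
    x1 = min(w, int(np.ceil(x_max + dx)))
    y1 = min(h, int(np.ceil(y_max + dy)))
    return image[y0:y1, x0:x1]
```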

$ cd src/detection_lung_yolov5

$ cd weights && bash download_coco_weights.sh && cd ..

$ bash train.sh

| | Fold0 | Fold1 | Fold2 | Fold3 | Fold4 | Average |
|---|---|---|---|---|---|---|
| mAP@0.5:0.95 | 0.921 | 0.931 | 0.926 | 0.923 | 0.922 | 0.9246 |
| mAP@0.5 | 0.997 | 0.998 | 0.997 | 0.996 | 0.998 | 0.9972 |
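mAP@0.5 counts a predicted box as correct when its IoU with a ground-truth box is at least 0.5, while mAP@0.5:0.95 (COCO style) averages AP over the 10 IoU thresholds 0.50, 0.55, ..., 0.95, which makes it the stricter of the two metrics. A short sketch of the IoU computation and the threshold grid:

```python
import numpy as np

def iou(box_a, box_b):
    """IoU of two (x_min, y_min, x_max, y_max) boxes."""
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# COCO-style IoU thresholds used by mAP@0.5:0.95
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)

pred, gt = (10, 10, 110, 110), (20, 20, 120, 120)
value = iou(pred, gt)  # 8100 / 11900
print(round(value, 4), int((value >= IOU_THRESHOLDS).sum()))
# 0.6807 4
```

This pair counts as a match at 0.5 but only at 4 of the 10 thresholds in the 0.5:0.95 range.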

Rounds of pseudo labeling (stage2) and retraining (stage3) were repeated until the score on the public LB stopped improving.

- Stage1:

$ cd src/detection_yolov5

$ cd weights && bash download_coco_weights.sh && cd ..

$ bash train_rsnapneu.sh #pretrain with rsna_pneumonia

$ bash train_siim.sh #train with siim covid19 dataset, load rsna_pneumonia checkpoint

- Stage2: Generate pseudo label (boxes)

$ bash generate_pseudo_label.sh

Jump to step 4.2.4 Ensembling + Pseudo labeling

- Stage3:

$ bash warmup_ext_dataset.sh #train with pseudo labeling (public-test, padchest, pneumothorax, vin) + rsna_pneumonia

$ bash train_final.sh #train siim covid19 boxes, load warmup checkpoint

- Stage1:

$ cd src/detection_efffdet

$ bash train_rsnapneu.sh #pretrain with rsna_pneumonia

$ bash train_siim.sh #train with siim covid19 dataset, load rsna_pneumonia checkpoint

- Stage2: Generate pseudo label (boxes)

$ bash generate_pseudo_label.sh

Jump to step 4.2.4 Ensembling + Pseudo labeling

- Stage3:

$ bash warmup_ext_dataset.sh #train with pseudo labeling (public-test, padchest, pneumothorax, vin) + rsna_pneumonia

$ bash train_final.sh #train siim covid19, load warmup checkpoint

- Stage1: pretrain the model backbone on chexpert + chest14 -> train the model on rsna pneumonia -> train the model on siim, loading the rsna pneumonia checkpoint

$ cd src/detection_fasterrcnn

$ CUDA_VISIBLE_DEVICES=0,1,2,3 python train_chexpert_chest14.py --steps 0 1 --cfg configs/resnet200d.yaml

$ CUDA_VISIBLE_DEVICES=0,1,2,3 python train_chexpert_chest14.py --steps 0 1 --cfg configs/resnet101d.yaml

$ CUDA_VISIBLE_DEVICES=0 python train_rsnapneu.py --cfg configs/resnet200d.yaml

$ CUDA_VISIBLE_DEVICES=0 python train_rsnapneu.py --cfg configs/resnet101d.yaml

$ CUDA_VISIBLE_DEVICES=0 python train_siim.py --cfg configs/resnet200d.yaml --folds 0 1 2 3 4 --SEED 123

$ CUDA_VISIBLE_DEVICES=0 python train_siim.py --cfg configs/resnet101d.yaml --folds 0 1 2 3 4 --SEED 123

Note: Change SEED if the training script runs into an augmentation issue (bounding box area = 0), and comment/uncomment the following code if it runs into a resource-limit issue:

import resource

rlimit = resource.getrlimit(resource.RLIMIT_NOFILE)  # (soft, hard) limits on open file descriptors

resource.setrlimit(resource.RLIMIT_NOFILE, (8192, rlimit[1]))  # raise the soft limit to 8192, keep the hard limit

- Stage2: Generate pseudo label (boxes)

$ bash generate_pseudo_label.sh

Jump to step 4.2.4 Ensembling + Pseudo labeling

- Stage3:

$ CUDA_VISIBLE_DEVICES=0 python warmup_ext_dataset.py --cfg configs/resnet200d.yaml

$ CUDA_VISIBLE_DEVICES=0 python warmup_ext_dataset.py --cfg configs/resnet101d.yaml

$ CUDA_VISIBLE_DEVICES=0 python train_final.py --cfg configs/resnet200d.yaml

$ CUDA_VISIBLE_DEVICES=0 python train_final.py --cfg configs/resnet101d.yaml

Keep images that meet both conditions: negative prediction < 0.3 and maximum of the (typical, indeterminate, atypical) predictions > 0.7. Then choose the 2 boxes with the highest confidence as pseudo labels for each image.

Note: This step requires at least 128 GB of RAM
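The selection rule above can be sketched per image as follows. The argument layout (study-level probabilities plus already-ensembled boxes and scores) and the function name are assumptions for illustration; make_pseudo.py may organize the data differently.

```python
import numpy as np

def select_pseudo_labels(study_probs, boxes, scores, neg_thr=0.3, pos_thr=0.7, top_k=2):
    """Apply the pseudo-label filter to one image.

    study_probs: (negative, typical, indeterminate, atypical) probabilities.
    boxes: (N, 4) ensembled boxes; scores: (N,) their confidences.
    Returns the top_k boxes by confidence, or None if the image is rejected.
    """
    negative, typical, indeterminate, atypical = study_probs
    if not (negative < neg_thr and max(typical, indeterminate, atypical) > pos_thr):
        return None
    order = np.argsort(-np.asarray(scores, dtype=float))[:top_k]  # highest confidence first
    return np.asarray(boxes)[order]
```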

$ cd ./src/detection_make_pseudo

$ python make_pseudo.py

$ python make_annotation.py
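The acknowledgements credit Weighted Boxes Fusion. Assuming it is what merges the detectors' boxes in this ensembling step, the sketch below is a simplified single-class version of the idea: greedy clustering by IoU, confidence-weighted averaging of box coordinates, and down-weighting of clusters with fewer boxes than models. It is not the `ensemble_boxes` library's implementation, and the IoU threshold is an assumed value.

```python
import numpy as np

def _iou(a, b):
    """IoU of two (x_min, y_min, x_max, y_max) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def weighted_boxes_fusion(model_boxes, model_scores, iou_thr=0.55):
    """Simplified single-class WBF (illustrative only).

    model_boxes: one (N_i, 4) array per model, boxes as (x_min, y_min, x_max, y_max).
    model_scores: one (N_i,) confidence array per model.
    Returns fused boxes (M, 4) and fused scores (M,).
    """
    num_models = len(model_boxes)
    entries = [
        (float(s), np.asarray(b, dtype=float))
        for boxes, scores in zip(model_boxes, model_scores)
        for b, s in zip(boxes, scores)
    ]
    entries.sort(key=lambda e: -e[0])  # highest-confidence boxes seed the clusters

    clusters, fused = [], []  # cluster members and the current fused box of each cluster
    for score, box in entries:
        ious = [_iou(f, box) for f in fused]
        if ious and max(ious) > iou_thr:
            k = int(np.argmax(ious))
            clusters[k].append((score, box))
            weights = np.array([s for s, _ in clusters[k]])
            coords = np.stack([b for _, b in clusters[k]])
            # Fused box = confidence-weighted average of the member boxes.
            fused[k] = (weights[:, None] * coords).sum(axis=0) / weights.sum()
        else:
            clusters.append([(score, box)])
            fused.append(box)

    fused_scores = []
    for members in clusters:
        mean_score = np.mean([s for s, _ in members])
        # Down-weight clusters containing fewer boxes than there are models.
        fused_scores.append(mean_score * min(len(members), num_models) / num_models)
    return np.array(fused).reshape(-1, 4), np.array(fused_scores)
```

Compared with NMS, which keeps only the single highest-scoring box in each cluster, this averages the coordinates of all overlapping boxes, so agreement between detectors refines the box position as well as the confidence.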

| | YoloV5x6 768 | EffdetD7 768 | F-RCNN R200 768 | F-RCNN R101 1024 |
|---|---|---|---|---|
| mAP@0.5 TTA | 0.580 | 0.594 | 0.592 | 0.596 |

siim-covid19-2021 Public LB: 0.658 / Private LB: 0.635

Demo notebook to visualize the output of the models

PyTorch✨

PyTorch Image Models✨

Segmentation models✨

EfficientDet✨

YoloV5✨

FasterRCNN FPN✨

Albumentations✨

Weighted boxes fusion✨