Trace FastAPI with Jaeger through OpenTelemetry Python API and SDK.

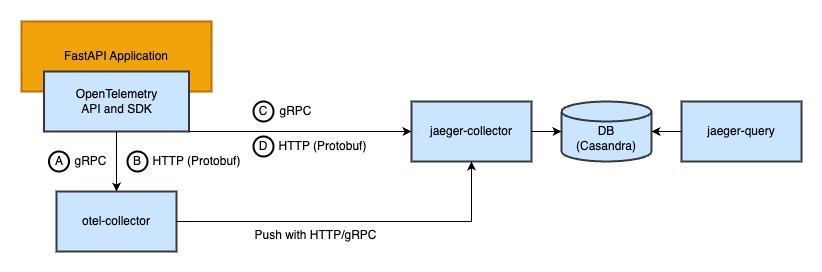

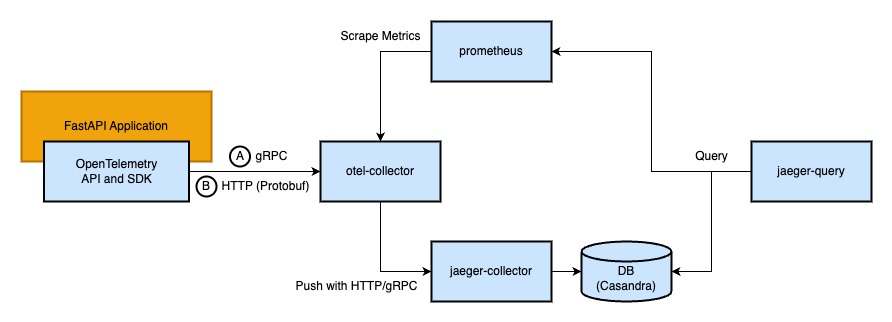

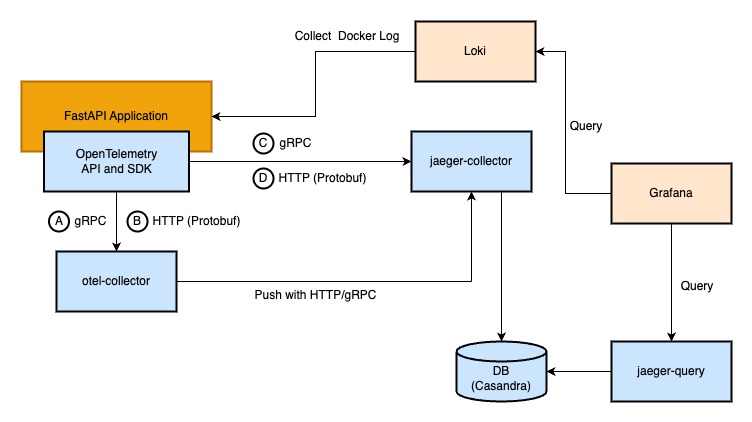

Spans from the application can be collected by either the Jaeger Collector (jaeger-collector) or the OpenTelemetry Collector (otel-collector).

There are four ways to push spans:

- A: Push spans to OpenTelemetry Collector over gRPC (Port: 4317)

- B: Push spans to OpenTelemetry Collector over HTTP (Port: 4318)

- C: Push spans to Jaeger Collector over gRPC (Port: 4317)

- D: Push spans to Jaeger Collector over HTTP (Port: 4318)

In this architecture, OpenTelemetry Collector acts as an agent that collects and processes data and sends it to Jaeger Collector. Jaeger Collector is responsible for collecting spans and writing them to the DB, and Jaeger Query then queries the data from the DB.

Jaeger Agent has been deprecated since version 1.43. Since that version, the OpenTelemetry SDK can send data directly to Jaeger Collector, or OpenTelemetry Collector can be used as an agent. If you still want to use Jaeger Agent for span collection, please refer to the previous version of this project.


- Build the application image and start all services with docker-compose:

  `docker-compose build`

  `docker-compose up -d`

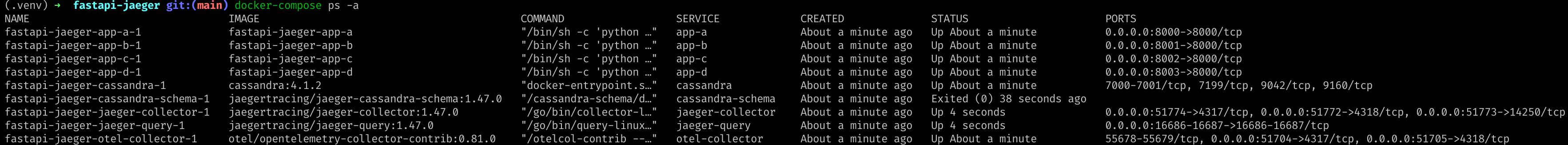

  It may take some time for the DB (Cassandra) to initialize. You can run `docker-compose ps` to check that the `jaeger-query` status is running once the DB is ready, as below:

- Send requests with `curl` to the FastAPI application:

  `curl http://localhost:8000/chain`

-

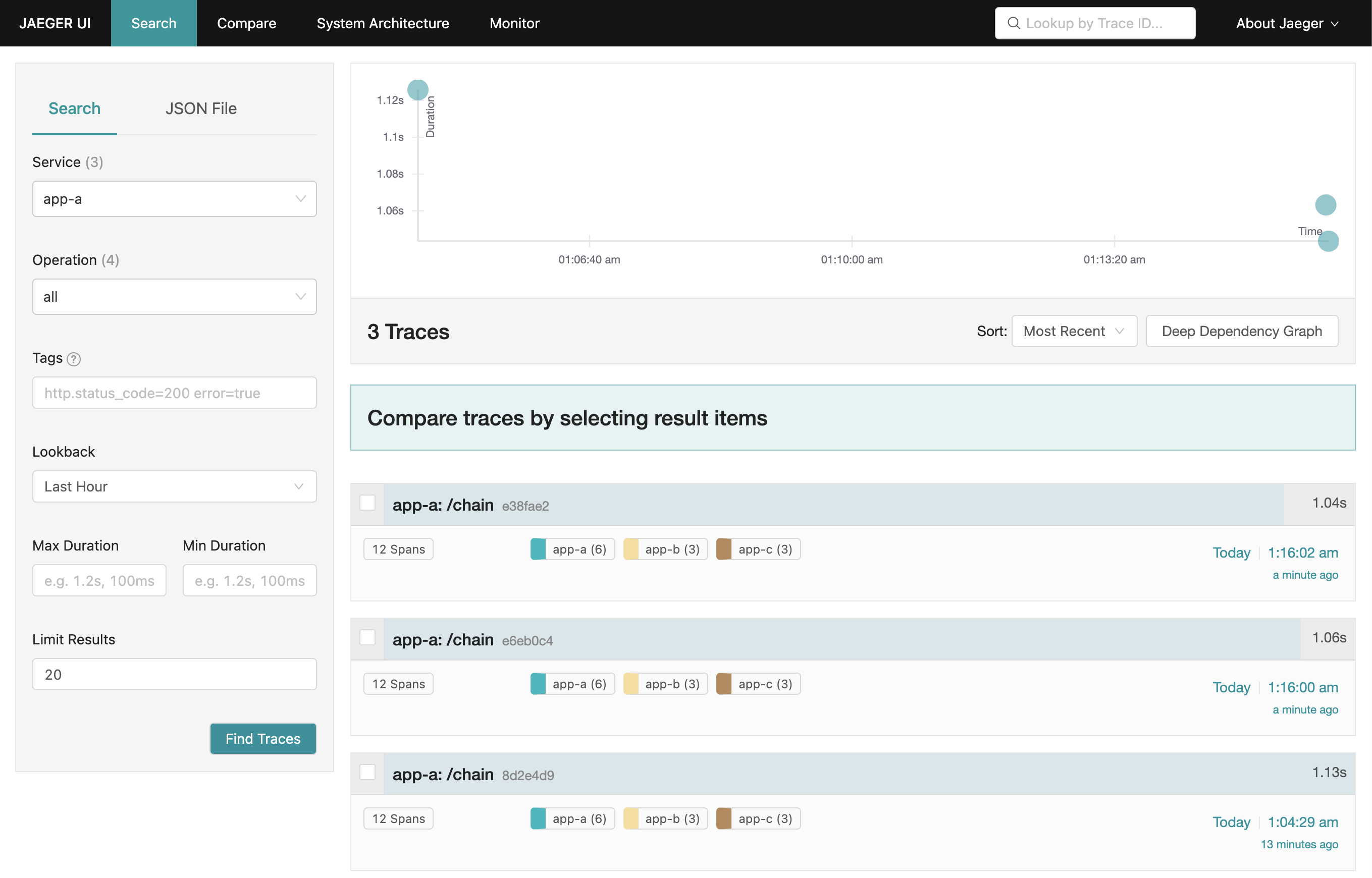

Check on Jaeger UI http://localhost:16686/
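The wait for the backend in the steps above can also be scripted. The following is a minimal sketch, not part of the project: `wait_for_port` is a hypothetical helper that polls a TCP port until it accepts connections. A successful connection only shows that the port is open, so it is a rough readiness signal rather than a health check.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 16686)` blocks until the Jaeger UI port published by `jaeger-query` is reachable, or returns `False` after two minutes.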

Jaeger UI screenshot:

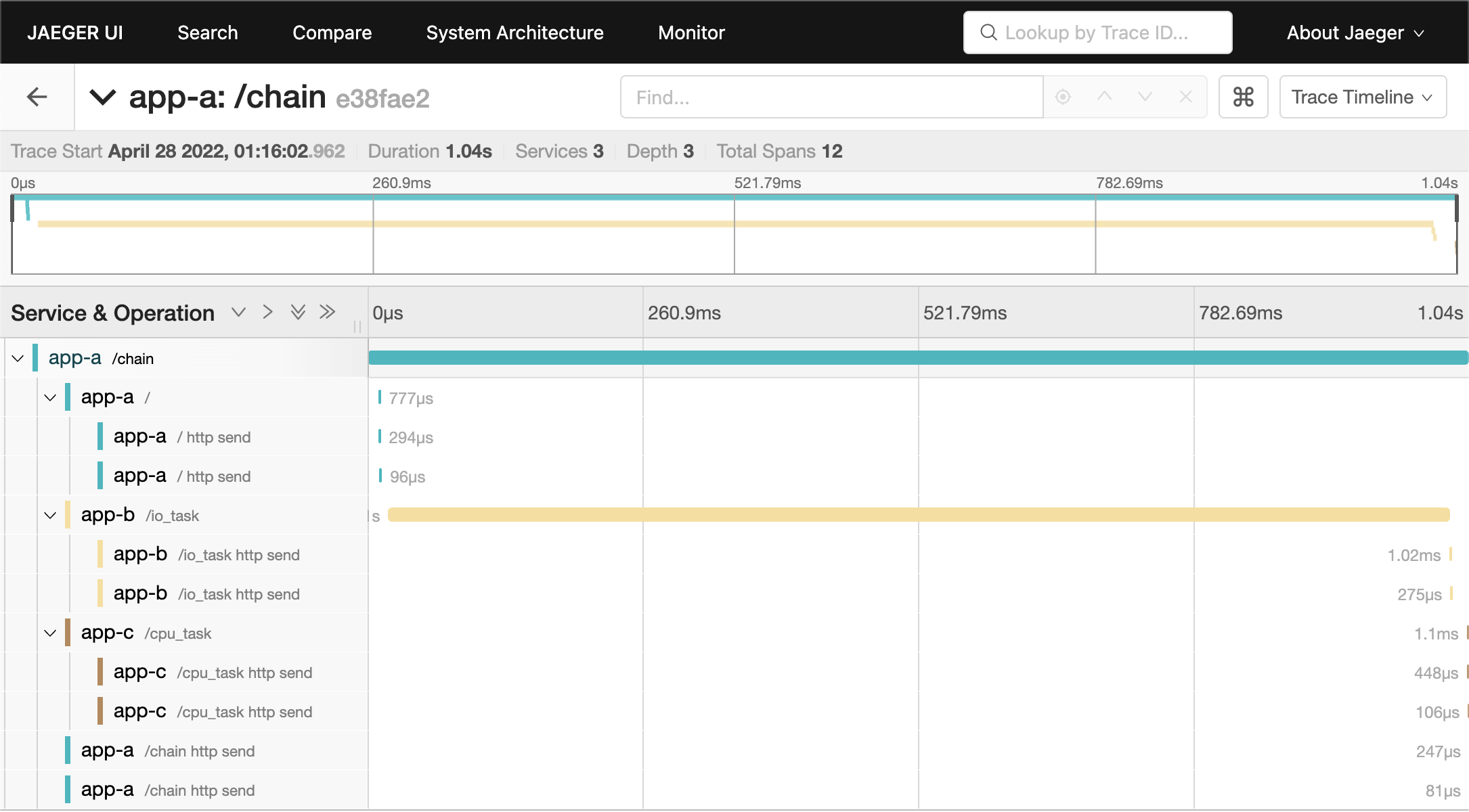

For a more complex scenario, we use three FastAPI applications with the same code in this demo. The /chain endpoint performs a cross-service action, which provides a good example of how to use the OpenTelemetry SDK to process spans and how Jaeger Query presents trace information.

Utilize the OpenTelemetry Python SDK to send spans to Jaeger. Each request span contains other child spans when using OpenTelemetry instrumentation, because the instrumentation catches each internal ASGI interaction (opentelemetry-python-contrib issue #831). If you want to get rid of the internal spans, there is a workaround in the same issue #831 that uses a new OpenTelemetry middleware with two overridden span-processing methods.

Utilize OpenTelemetry Logging Instrumentation to override the logger format with one that includes the trace id and span id.
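To make the effect of log correlation concrete, here is a standard-library sketch that imitates it. It is not the instrumentation's implementation: `TraceContextFilter` and `build_logger` are hypothetical names, and the IDs are passed in by hand instead of being read from the current span. The `otelTraceID` and `otelSpanID` record attributes follow the field names the logging instrumentation injects.

```python
import logging

# Shortened variant of a correlated log format: every line carries both IDs.
LOG_FORMAT = (
    "%(asctime)s %(levelname)s [%(name)s] "
    "[trace_id=%(otelTraceID)s span_id=%(otelSpanID)s] - %(message)s"
)


class TraceContextFilter(logging.Filter):
    """Hypothetical stand-in that attaches fixed IDs to every log record."""

    def __init__(self, trace_id: int, span_id: int) -> None:
        super().__init__()
        self.trace_id = trace_id
        self.span_id = span_id

    def filter(self, record: logging.LogRecord) -> bool:
        # Trace IDs are 128-bit and span IDs 64-bit, rendered as lowercase hex.
        record.otelTraceID = format(self.trace_id, "032x")
        record.otelSpanID = format(self.span_id, "016x")
        return True


def build_logger(name: str, trace_id: int, span_id: int, stream=None) -> logging.Logger:
    """Create a logger whose handler adds the IDs before formatting."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(LOG_FORMAT))
    handler.addFilter(TraceContextFilter(trace_id, span_id))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Calling `build_logger("demo", 0x1234, 0xAB).info("hello")` prints a line containing `trace_id=` and `span_id=` fields, which is what makes it possible to find the logs that belong to a trace later on.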

    # fastapi_app/main.py
    # Imports inferred from the names used below.
    import os

    from opentelemetry import trace
    from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import (
        OTLPSpanExporter as OTLPSpanExporterGRPC,
    )
    from opentelemetry.exporter.otlp.proto.http.trace_exporter import (
        OTLPSpanExporter as OTLPSpanExporterHTTP,
    )
    from opentelemetry.instrumentation.fastapi import FastAPIInstrumentor
    from opentelemetry.instrumentation.logging import LoggingInstrumentor
    from opentelemetry.sdk.resources import Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor
    from starlette.types import ASGIApp

    # Supported modes: jaeger-grpc, jaeger-http, otel-collector-grpc, otel-collector-http
    MODE = os.environ.get("MODE", "otel-collector-grpc")

    JAEGER_GRPC_ENDPOINT = os.environ.get("JAEGER_GRPC_ENDPOINT", "jaeger-collector:4317")
    JAEGER_HTTP_ENDPOINT = os.environ.get(
        "JAEGER_HTTP_ENDPOINT", "http://jaeger-collector:4318/v1/traces"
    )
    OTEL_GRPC_ENDPOINT = os.environ.get("OTEL_GRPC_ENDPOINT", "otel-collector:4317")
    OTEL_HTTP_ENDPOINT = os.environ.get(
        "OTEL_HTTP_ENDPOINT", "http://otel-collector:4318/v1/traces"
    )


    def setting_jaeger(app: ASGIApp, app_name: str, log_correlation: bool = True) -> None:
        resource = Resource.create(attributes={"service.name": app_name})

        # set the tracer provider
        tracer = TracerProvider(resource=resource)
        trace.set_tracer_provider(tracer)

        if MODE == "jaeger-grpc":
            tracer.add_span_processor(
                BatchSpanProcessor(
                    OTLPSpanExporterGRPC(endpoint=JAEGER_GRPC_ENDPOINT, insecure=True)
                )
            )
        elif MODE == "jaeger-http":
            tracer.add_span_processor(
                BatchSpanProcessor(OTLPSpanExporterHTTP(endpoint=JAEGER_HTTP_ENDPOINT))
            )
        elif MODE == "otel-collector-grpc":
            tracer.add_span_processor(
                BatchSpanProcessor(
                    OTLPSpanExporterGRPC(endpoint=OTEL_GRPC_ENDPOINT, insecure=True)
                )
            )
        elif MODE == "otel-collector-http":
            tracer.add_span_processor(
                BatchSpanProcessor(OTLPSpanExporterHTTP(endpoint=OTEL_HTTP_ENDPOINT))
            )
        else:
            # default otel-collector-grpc
            tracer.add_span_processor(
                BatchSpanProcessor(
                    OTLPSpanExporterGRPC(endpoint=OTEL_GRPC_ENDPOINT, insecure=True)
                )
            )

        # override the logger format with one that includes trace id and span id
        if log_correlation:
            LoggingInstrumentor().instrument(set_logging_format=True)

        FastAPIInstrumentor.instrument_app(app, tracer_provider=tracer)

If we want other services to use the same Trace ID, we have to use the inject function to add the current span information to the request headers. OpenTelemetry FastAPI instrumentation only takes care of the ASGI app's own requests and responses; it does not affect other modules or actions such as sending HTTP requests to other servers or making function calls.
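With the default propagators, the header that carries this information is the W3C Trace Context `traceparent` header. The sketch below only illustrates the version-00 format of that header using the standard library. `build_traceparent` and `parse_traceparent` are hypothetical helpers, not OpenTelemetry APIs, and the sample IDs are the ones used in the W3C specification examples.

```python
import re
from typing import Tuple

# version "00" - 32 hex chars trace id - 16 hex chars parent span id - 2 hex chars flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def build_traceparent(trace_id: int, span_id: int, sampled: bool = True) -> str:
    """Render a version-00 traceparent value from integer IDs."""
    flags = 1 if sampled else 0
    return f"00-{trace_id:032x}-{span_id:016x}-{flags:02x}"


def parse_traceparent(value: str) -> Tuple[int, int, bool]:
    """Return (trace_id, span_id, sampled) or raise ValueError if malformed."""
    match = TRACEPARENT_RE.match(value)
    if match is None:
        raise ValueError(f"not a version-00 traceparent: {value!r}")
    trace_hex, span_hex, flags_hex = match.groups()
    return int(trace_hex, 16), int(span_hex, 16), bool(int(flags_hex, 16) & 1)


header = build_traceparent(0x4BF92F3577B34DA6A3CE929D0E0E4736, 0x00F067AA0BA902B7)
print(header)  # 00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01
```

A downstream service that extracts this header continues the same trace, because the trace id is reused and the span id becomes the parent of its own spans.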

# fastapi_app/main.py

from opentelemetry.propagate import inject

@app.get("/chain")

async def chain(response: Response):

headers = {}

inject(headers) # inject trace info to header

async with httpx.AsyncClient() as client:

await client.get(f"http://localhost:8000/", headers=headers,)

async with httpx.AsyncClient() as client:

await client.get(f"http://{TARGET_ONE_HOST}:8000/io_task", headers=headers,)

async with httpx.AsyncClient() as client:

await client.get(f"http://{TARGET_TWO_HOST}:8000/cpu_task", headers=headers,)

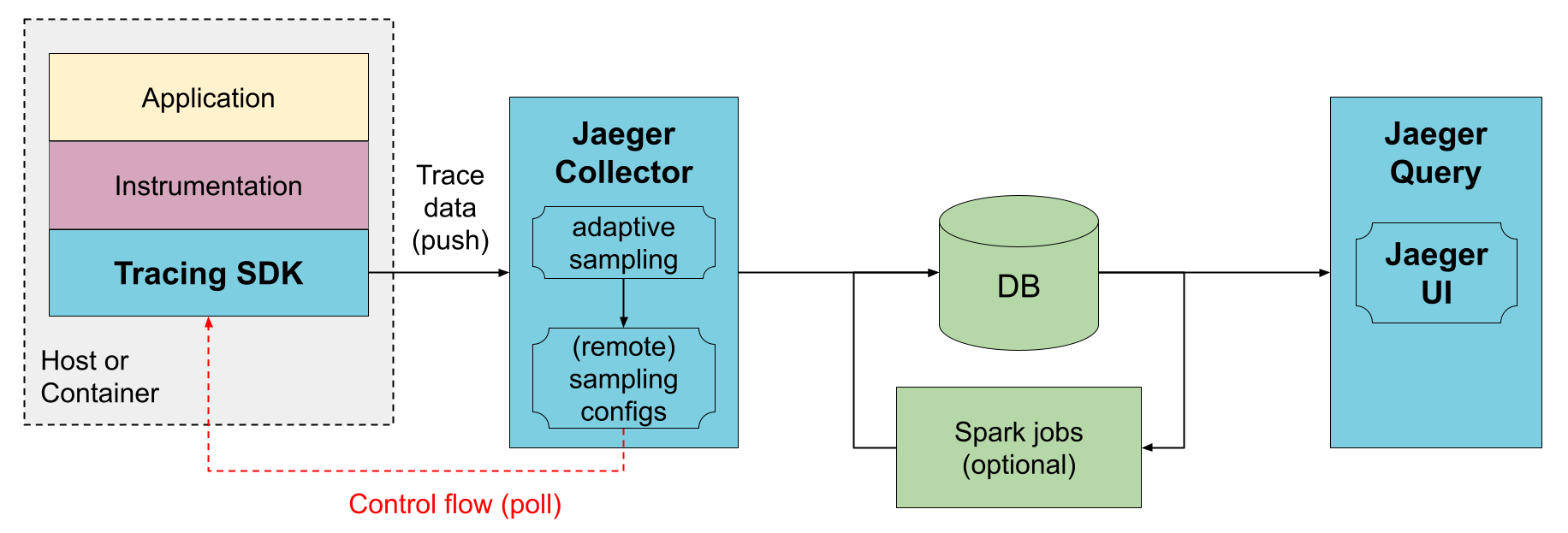

return {"path": "/chain"}There is an all-in-one Jaeger for quick testing, but in production running Jaeger backend components as a scalable distributed system is the most common method, as illustrated below.

Image Source: Jaeger

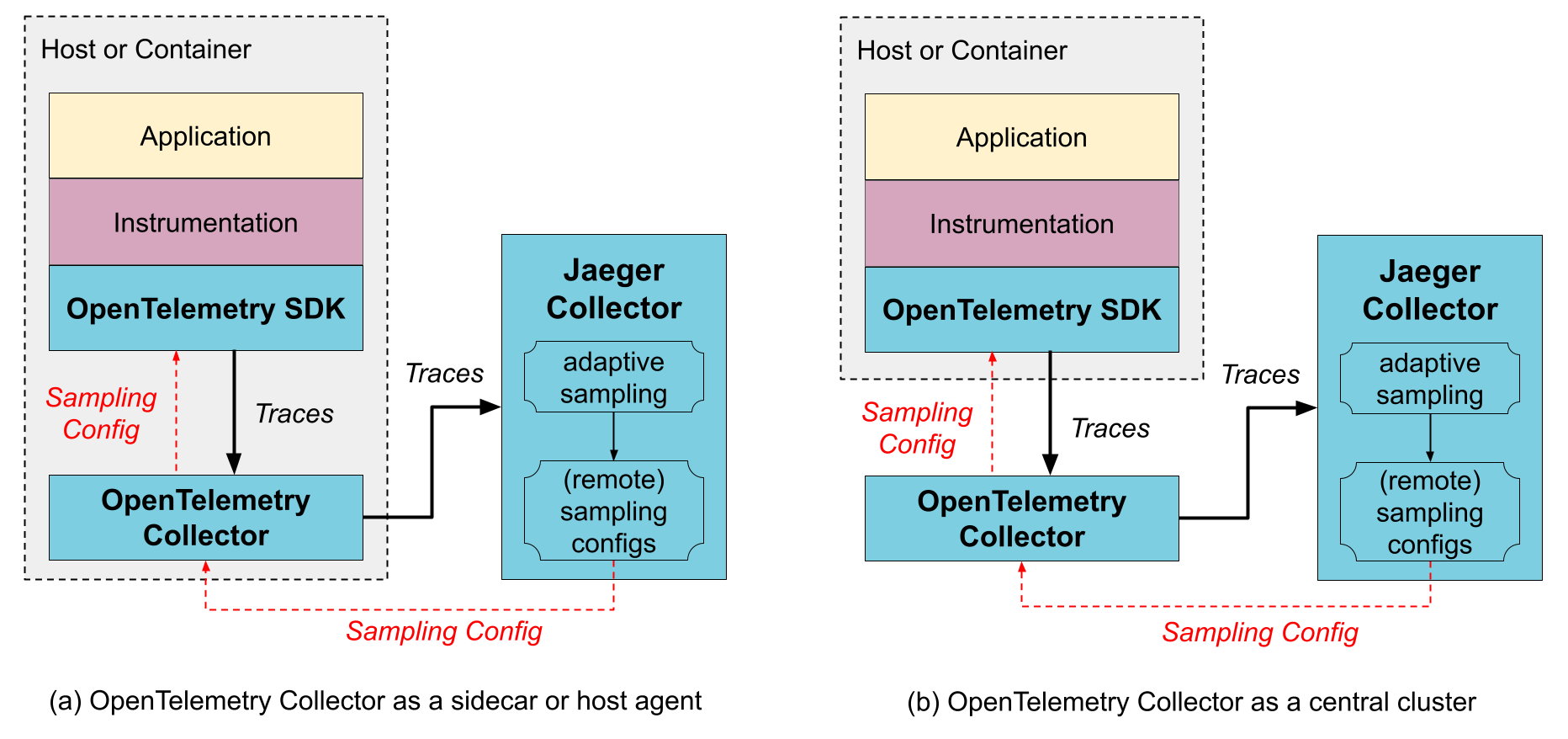

Or with OpenTelemetry Collector:

Image Source: Jaeger

We create the Jaeger backend of this demo project based on the docker compose example from Jaeger's official repository.

Check more details on Jaeger docs about architecture.

The Jaeger collector receives traces from OpenTelemetry SDKs or OpenTelemetry Agent and runs them through a processing pipeline.

    # docker-compose.yaml
    services:
      jaeger-collector:
        image: jaegertracing/jaeger-collector:1.50.0
        command:
          - "--cassandra.keyspace=jaeger_v1_dc1"
          - "--cassandra.servers=cassandra"
          - "--sampling.initial-sampling-probability=.5"
          - "--sampling.target-samples-per-second=.01"
          - "--collector.otlp.enabled=true"
        environment:
          - SAMPLING_CONFIG_TYPE=adaptive
        ports:
          - "4317" # accept OpenTelemetry Protocol (OTLP) over gRPC
          - "4318" # accept OpenTelemetry Protocol (OTLP) over HTTP
          - "14250" # accept model.proto
        restart: on-failure
        depends_on:
          - cassandra-schema

Check more details on Jaeger docs Deployment about Collector, CLI flags about Collector, and Sampling.

The OpenTelemetry Collector receives traces from OpenTelemetry SDKs and processes them according to the configuration file etc/otel-collector-config.yaml.

    # docker-compose.yaml
    services:
      otel-collector:
        image: otel/opentelemetry-collector-contrib:0.88.0
        command:
          - "--config=/conf/config.yaml"
        volumes:
          - ./etc/otel-collector-config.yaml:/conf/config.yaml
        ports:
          - "4317" # OTLP gRPC receiver
          - "4318" # OTLP http receiver
        restart: on-failure
        depends_on:
          - jaeger-collector

    # etc/otel-collector-config.yaml
    receivers:
      otlp:
        protocols:
          grpc:
          http:
    exporters:
      otlp:
        endpoint: jaeger-collector:4317
        tls:
          insecure: true
      otlphttp:
        endpoint: http://jaeger-collector:4318
        tls:
          insecure: true
      jaeger:
        endpoint: jaeger-collector:14250
        tls:
          insecure: true
    processors:
      batch:
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [batch]
          exporters: [otlp] # export to jaeger-collector:4317
          # exporters: [otlphttp] # export to jaeger-collector:4318
          # exporters: [jaeger] # export to jaeger-collector:14250

Check more details on OpenTelemetry Collector docs Configuration.
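A pipeline may only reference components that are defined in the top-level `receivers`, `processors`, `exporters` (or `connectors`) sections. As a rough illustration of that rule, the sketch below checks a config that has already been loaded into a plain dict. `undefined_components` is a hypothetical helper and `CONFIG` is a hand-written, trimmed copy of the file above; the Collector performs its own validation at startup.

```python
from typing import List

# Trimmed, hand-written mirror of etc/otel-collector-config.yaml.
CONFIG = {
    "receivers": {"otlp": {"protocols": {"grpc": None, "http": None}}},
    "exporters": {
        "otlp": {"endpoint": "jaeger-collector:4317", "tls": {"insecure": True}},
        "otlphttp": {"endpoint": "http://jaeger-collector:4318", "tls": {"insecure": True}},
    },
    "processors": {"batch": None},
    "service": {
        "pipelines": {
            "traces": {"receivers": ["otlp"], "processors": ["batch"], "exporters": ["otlp"]}
        }
    },
}


def undefined_components(config: dict) -> List[str]:
    """List pipeline entries that point at components missing from the config."""
    problems = []
    connectors = set(config.get("connectors") or {})
    pipelines = config.get("service", {}).get("pipelines", {})
    for pipeline_name, pipeline in pipelines.items():
        for section in ("receivers", "processors", "exporters"):
            defined = set(config.get(section) or {})
            if section != "processors":
                # a connector can act as an exporter in one pipeline
                # and as a receiver in another
                defined |= connectors
            for component in pipeline.get(section, []):
                if component not in defined:
                    problems.append(
                        f"{pipeline_name}: {section[:-1]} '{component}' is not defined"
                    )
    return problems


print(undefined_components(CONFIG))  # []
```

Switching the traces pipeline to an exporter that is missing from `exporters` would produce one entry in the returned list, which is a cheap check to run before restarting the Collector.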

All traces collected by Jaeger Collector will be validated, indexed, and then stored in storage. Jaeger supports multiple span storage backends:

- Cassandra 3.4+

- Elasticsearch 5.x, 6.x, 7.x

- Kafka

- memory storage

- Storage plugin

In this demo, we use Cassandra as the storage backend.

# docker-compose.yaml

services:

# Cassandra instance container

cassandra:

image: cassandra:4.1.2

# initialize Cassandra

cassandra-schema:

image: jaegertracing/jaeger-cassandra-schema:1.50.0

depends_on:

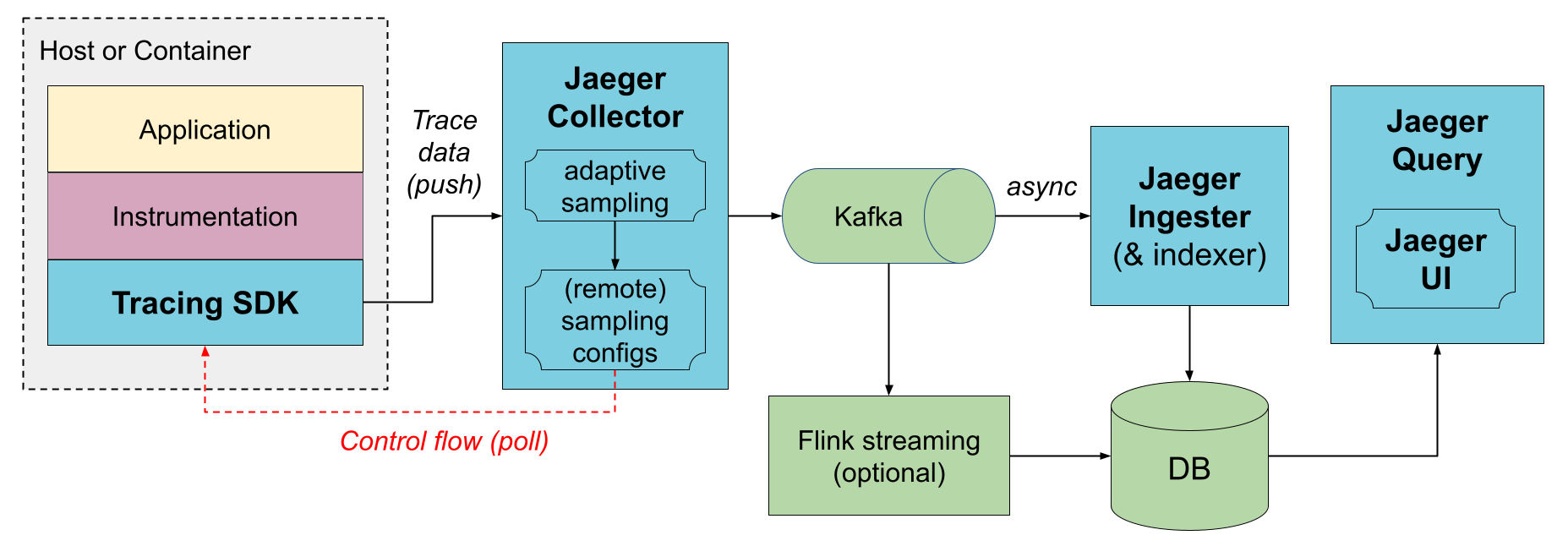

- cassandraIf you want to mitigate the pressure on Cassandra and prevent data loss, you can add Kafka and Jaeger Ingester to the architecture to process data asynchronously. The architecture will look like this:

Image Source: Jaeger

There is a docker compose example on Jaeger's official repository.

Check more details on Jaeger docs Deployment about Span Storage Backends.

The Jaeger Query is a service that retrieves traces from storage and hosts a UI to display them.

    # docker-compose.yaml
    services:
      jaeger-query:
        image: jaegertracing/jaeger-query:1.50.0
        command:
          - "--cassandra.keyspace=jaeger_v1_dc1"
          - "--cassandra.servers=cassandra"
        ports:
          - "16686:16686"
          - "16687:16687"
        restart: on-failure
        depends_on:
          - cassandra-schema

Check more details on Jaeger docs Deployment about Query Service & UI.

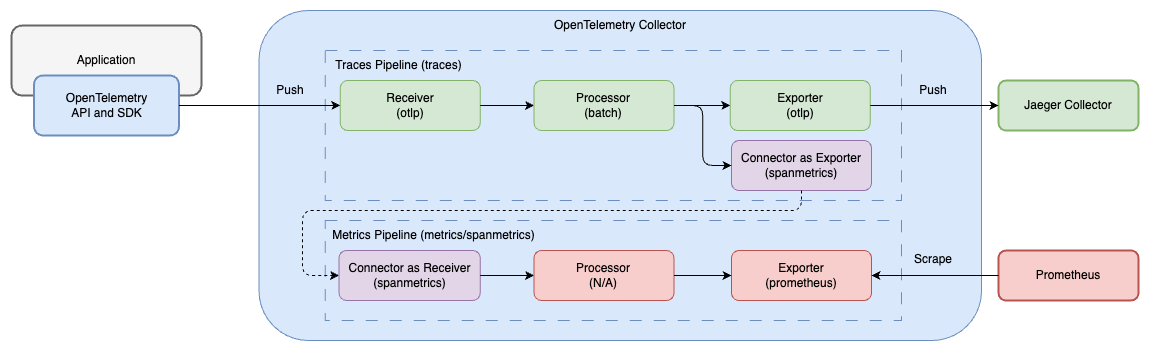

Jaeger Service Performance Monitoring became stable in Jaeger 1.43.0. It provides a new way to monitor the performance of services by extracting RED (Request, Error, Duration) metrics from span data. The data flow is shown below:

- Build the application image and start all services with docker-compose:

  `docker-compose build`

  `docker-compose -f docker-compose-spm.yaml up -d`

  It may take some time for the DB (Cassandra) to initialize. You can run `docker-compose ps` to check that the `jaeger-query` status is running once the DB is ready.

- Send requests with siege and curl to the FastAPI app:

  `bash request-script.sh`

- Check on Jaeger UI Monitoring tab http://localhost:16686/monitor

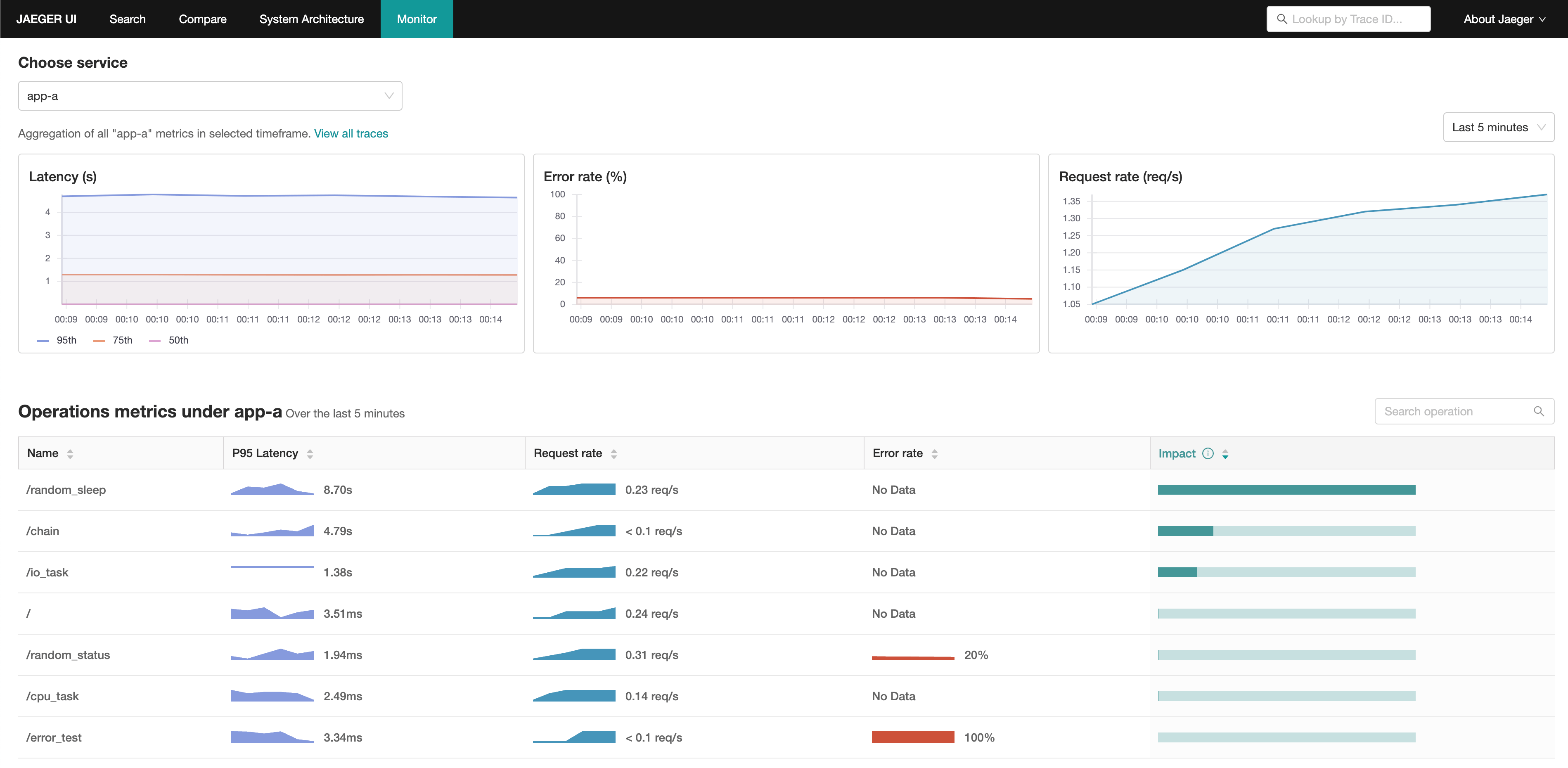

Jaeger UI Monitoring tab screenshot:

To enable Service Performance Monitoring, we need to:

- Add some configurations to OpenTelemetry Collector for extracting RED metrics from span data and exporting them to Prometheus.

- Create a Prometheus instance to store the metrics.

- Update Jaeger Query configurations to query metrics from Prometheus.

The OpenTelemetry Collector receives traces from OpenTelemetry SDKs and processes them according to the configuration file etc/otel-collector-config-spm.yaml.

    # docker-compose-spm.yaml
    services:
      otel-collector:
        image: otel/opentelemetry-collector-contrib:0.81.0
        command:
          - "--config=/conf/config.yaml"
        volumes:
          - ./etc/otel-collector-config-spm.yaml:/conf/config.yaml
        ports:
          - "4317" # OTLP gRPC receiver
          - "4318" # OTLP http receiver
          - "8889" # Prometheus metrics exporter
        restart: on-failure
        depends_on:
          - jaeger-collector

    # etc/otel-collector-config-spm.yaml
    receivers:
      otlp:
        protocols:
          grpc:
          http:
    exporters:
      otlp:
        endpoint: jaeger-collector:4317
        tls:
          insecure: true
      prometheus:
        endpoint: "0.0.0.0:8889"
    connectors:
      spanmetrics:
    processors:
      batch:
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [batch]
          exporters: [spanmetrics, otlp]
        metrics/spanmetrics:
          receivers: [spanmetrics]
          exporters: [prometheus]

To generate metrics from span data, we need to:

- Add `spanmetrics` to `connectors`: Enable and configure the spanmetrics connector

  - dimensions: Extract span attributes to Prometheus labels

- Add `spanmetrics` to traces pipeline `exporters`: Let the traces pipeline export traces to the spanmetrics connector

- Add `spanmetrics` to metrics pipeline `receivers`: Set the spanmetrics connector as the receiver of the metrics pipeline, so that its data comes from the traces pipeline exporter

- Add `prometheus` to metrics pipeline `exporters`: Expose metrics in Prometheus format on port 8889
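To give a feel for what deriving RED metrics from spans means, here is a small standard-library sketch. It is not how the spanmetrics connector is implemented: the `Span` fields, the bucket bounds, and the service and operation names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class Span:
    service: str
    operation: str
    duration_ms: float
    is_error: bool


def red_metrics(spans: Iterable[Span], buckets_ms=(10, 100, 1000)) -> Dict[Tuple[str, str], dict]:
    """Aggregate call count, error count and a cumulative duration histogram."""
    metrics: Dict[Tuple[str, str], dict] = {}
    for span in spans:
        key = (span.service, span.operation)
        entry = metrics.setdefault(
            key,
            {"calls": 0, "errors": 0, "duration_buckets": {b: 0 for b in buckets_ms}},
        )
        entry["calls"] += 1                     # Request rate source
        entry["errors"] += int(span.is_error)   # Error rate source
        for bound in buckets_ms:                # Duration: cumulative buckets
            if span.duration_ms <= bound:
                entry["duration_buckets"][bound] += 1
    return metrics


spans = [
    Span("app-a", "GET /chain", 250.0, False),
    Span("app-a", "GET /chain", 5.0, True),
    Span("app-b", "GET /io_task", 1500.0, False),
]
print(red_metrics(spans)[("app-a", "GET /chain")])
# {'calls': 2, 'errors': 1, 'duration_buckets': {10: 1, 100: 1, 1000: 2}}
```

The grouping key plays the role of the metric labels: every extra dimension extracted from span attributes adds another component to that key, and therefore more time series in Prometheus.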

The pipeline diagram and configuration file are as follows:

Prometheus scrapes metrics from OpenTelemetry Collector and stores them in its database. Jaeger Query can then query these metrics.

    # docker-compose-spm.yaml
    services:
      prometheus:
        image: prom/prometheus:v2.45.0
        ports:
          - "9090:9090"
        volumes:
          - ./etc/prometheus.yml:/workspace/prometheus.yml
        command:
          - --config.file=/workspace/prometheus.yml

    # etc/prometheus.yml
    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).
    scrape_configs:
      - job_name: aggregated-trace-metrics
        static_configs:
          - targets: ['otel-collector:8889']

Jaeger Query queries metrics from Prometheus and displays them on the Monitoring tab.

# docker-compose-spm.yaml

service:

jaeger-query:

image: jaegertracing/jaeger-query:1.50.0

environment:

- METRICS_STORAGE_TYPE=prometheus

command:

- "--cassandra.keyspace=jaeger_v1_dc1"

- "--cassandra.servers=cassandra"

- "--prometheus.query.support-spanmetrics-connector=true"

- "--prometheus.server-url=http://prometheus:9090"

- "--prometheus.query.normalize-duration=true"

- "--prometheus.query.normalize-calls=true"

ports:

- "16686:16686"

- "16687:16687"

restart: on-failure

depends_on:

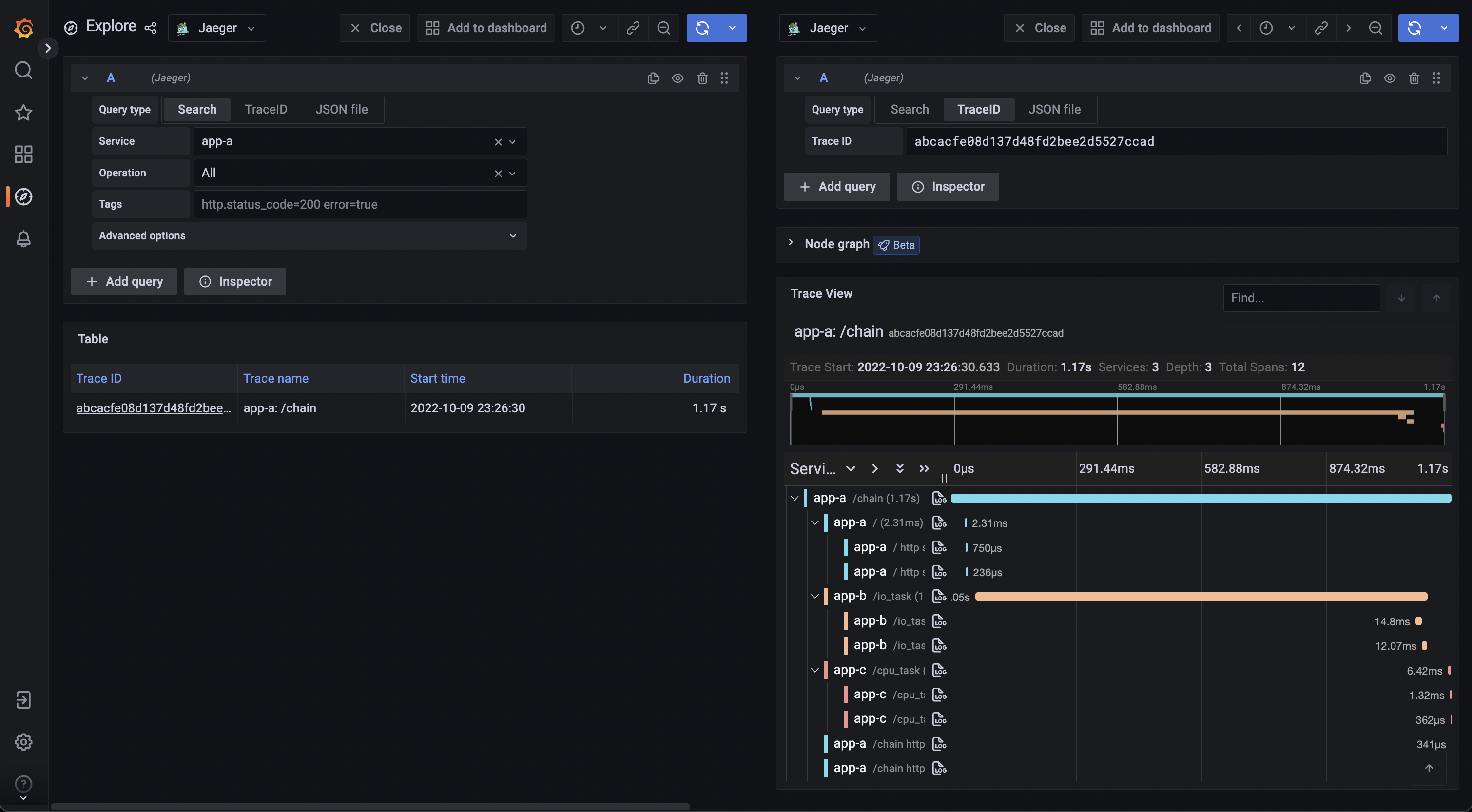

- cassandra-schemaOnly viewing the trace information on Jaeger UI may not be good enough. How about also tracing with logs at the same time? Grafana started supporting Jaeger data source since Grafana 7.4+, and also provides a good log aggregation system Loki. Grafana provides a great user experience tracing information across logs and traces.

-

Install Loki Docker Driver

docker plugin install grafana/loki-docker-driver:latest --alias loki --grant-all-permissions

-

Build application image and start all services with docker-compose

docker-compose build docker-compose -f docker-compose-grafana.yaml up -d

It may take some time for DB(Cassandra) to initialize.

-

Send requests with

curlto the FastAPI applicationcurl http://localhost:8000/chain

-

Explore the collected span on Grafana http://localhost:3000/ with default user

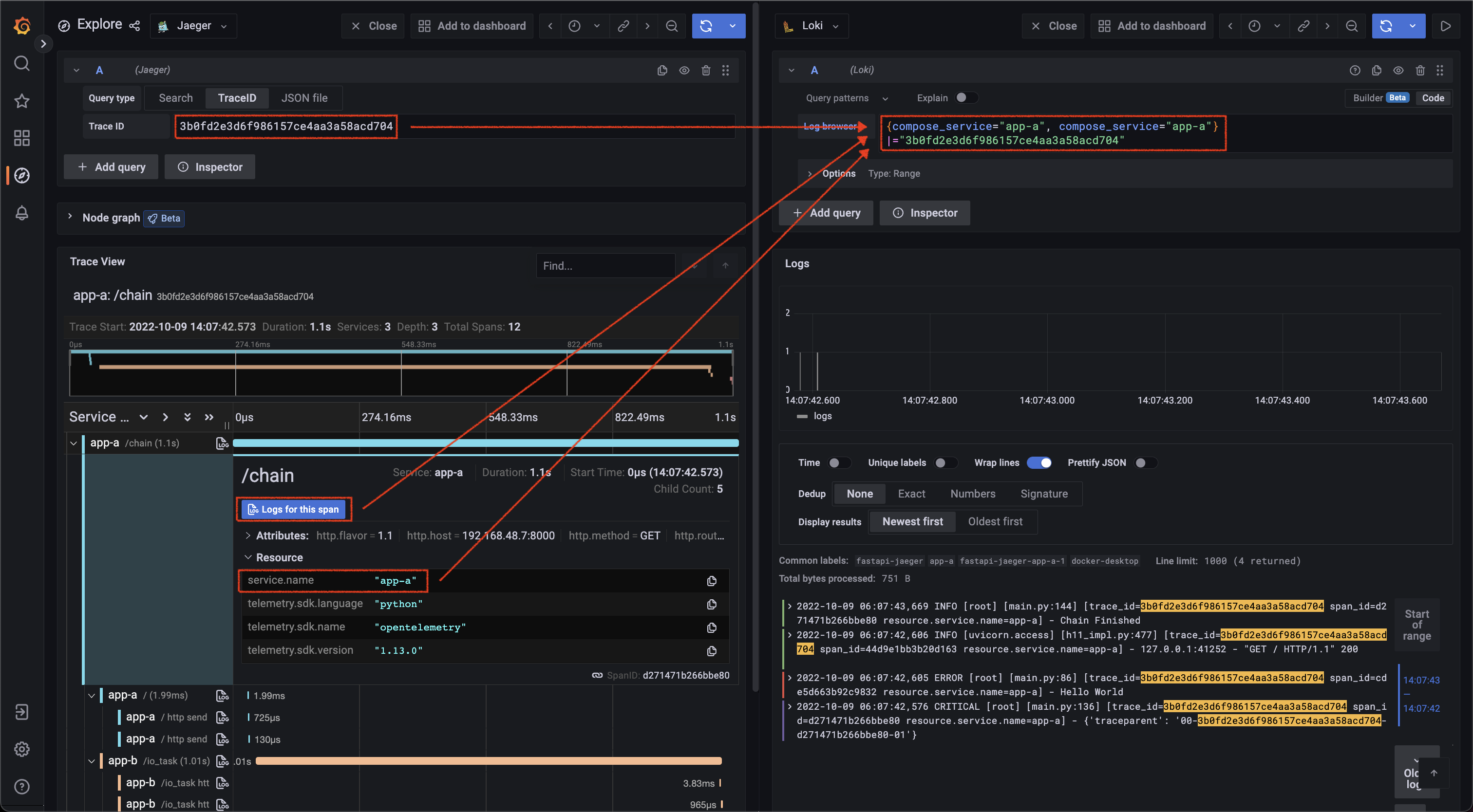

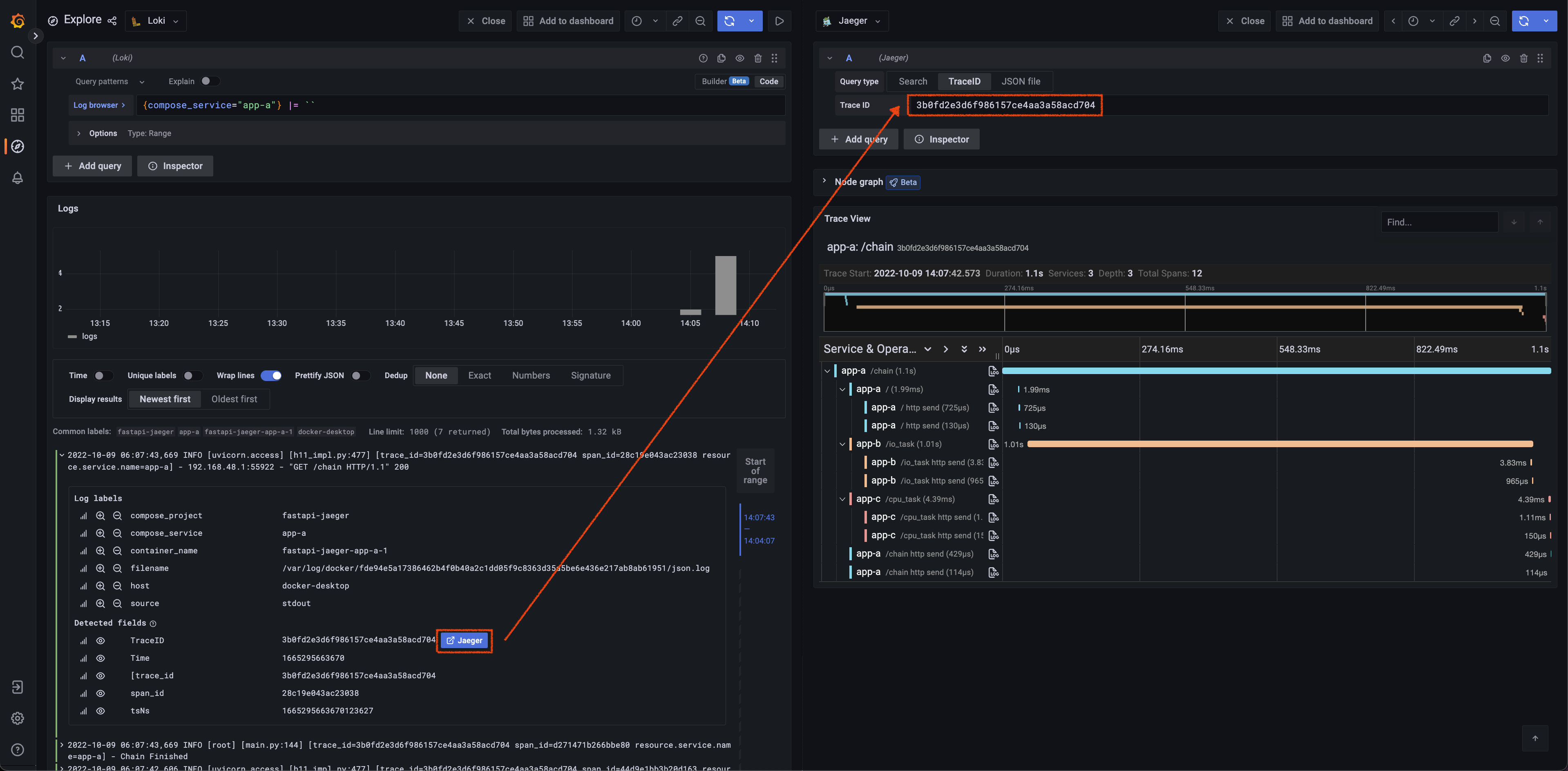

adminand passwordadminGrafana Explore screenshot:

Get the Trace ID and tags (here, service.name mapped to compose_service) defined in the Jaeger data source from the span, then query with Loki.

Get the Trace ID parsed from the log (regex defined in the Loki data source), then query in Jaeger.
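The log-to-trace direction relies on a regular expression whose first capture group is the trace id. The sketch below applies the pattern `(?:trace_id)=(\w+)` in Python to show what it captures. `extract_trace_id` is a hypothetical helper and the sample log line is made up for illustration.

```python
import re
from typing import Optional

# Same pattern as the matcherRegex of the Loki derived field.
MATCHER_REGEX = re.compile(r"(?:trace_id)=(\w+)")


def extract_trace_id(log_line: str) -> Optional[str]:
    """Return the captured trace id, or None if the line has no trace_id field."""
    match = MATCHER_REGEX.search(log_line)
    return match.group(1) if match else None


line = (
    "2023-10-30 12:00:00,123 INFO [main] "
    "[trace_id=4bf92f3577b34da6a3ce929d0e0e4736 span_id=00f067aa0ba902b7] - Hello"
)
print(extract_trace_id(line))  # 4bf92f3577b34da6a3ce929d0e0e4736
```

Because `\w+` stops at the first non-word character, the space before `span_id` ends the match and only the 32-character id is captured.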

Receives spans from applications.

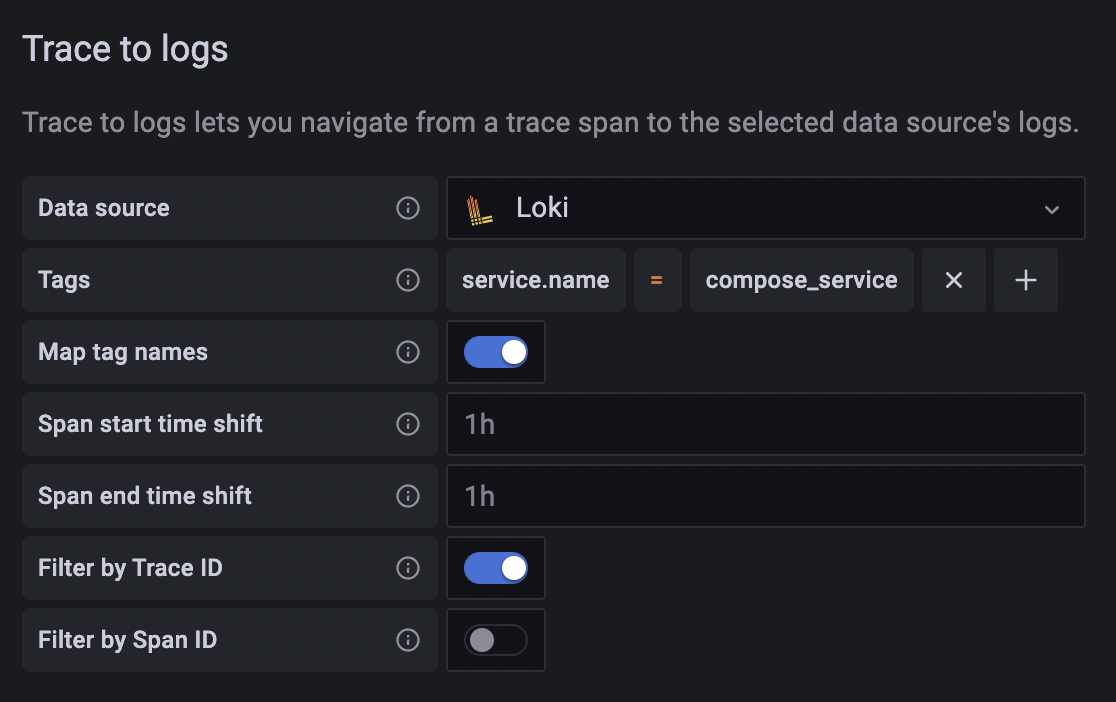

Trace to logs setting:

- Data source: target log source

- Tags: key of tags or process level attributes from the trace, which will be log query criteria if the key exists in the trace

- Map tag names: Convert existing key of tags or process level attributes from trace to another key, then used as log query criteria. Use this feature when the values of the trace tag and log label are identical but the keys are different.
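The settings above boil down to turning span tags into a log query. The following sketch shows the idea with a hypothetical `build_logql` helper. The query text Grafana actually generates may differ in detail, and the tag values here are made up.

```python
from typing import Dict


def build_logql(
    span_tags: Dict[str, str],
    trace_id: str,
    mapped_tags: Dict[str, str],
    filter_by_trace_id: bool = True,
) -> str:
    """Build a LogQL query from span tags using a tag-name to label-name mapping."""
    # Only tags that exist on the span become label matchers.
    matchers = [
        f'{label}="{span_tags[key]}"'
        for key, label in mapped_tags.items()
        if key in span_tags
    ]
    if not matchers:
        raise ValueError("no mapped tag found on the span; a LogQL selector needs a matcher")
    selector = "{" + ", ".join(matchers) + "}"
    if filter_by_trace_id:
        # line filter: keep only log lines containing the trace id
        return f'{selector} |= "{trace_id}"'
    return selector


query = build_logql(
    span_tags={"service.name": "app-a", "http.method": "GET"},
    trace_id="4bf92f3577b34da6a3ce929d0e0e4736",
    mapped_tags={"service.name": "compose_service"},
)
print(query)  # {compose_service="app-a"} |= "4bf92f3577b34da6a3ce929d0e0e4736"
```

The mapping is what bridges the naming gap: the span carries `service.name`, while the log stream is labeled `compose_service`, and both hold the same value.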

Grafana data source setting example:

Grafana data sources config example:

    # etc/grafana/datasource.yml
    name: Jaeger
    type: jaeger
    typeName: Jaeger
    access: proxy
    url: http://jaeger-query:16686
    user: ''
    database: ''
    basicAuth: false
    isDefault: false
    jsonData:
      nodeGraph:
        enabled: true
      tracesToLogs:
        datasourceUid: loki
        filterBySpanID: false
        filterByTraceID: true
        mapTagNamesEnabled: true
        mappedTags:
          - key: service.name
            value: compose_service

Collects logs with Loki Docker Driver from applications.

- Use YAML anchor and alias feature to set logging options for each service.

- Set Loki Docker Driver options

  - loki-url: loki service endpoint

  - loki-pipeline-stages: processes multiline log from FastAPI application with multiline and regex stages (reference)
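The two pipeline stages can be imitated in Python to see what they do to a traceback. This is only a sketch under assumptions: `group_multiline` and `parse_entry` are hypothetical helpers, the millisecond part of the timestamp is written as `\d{3}`, `max_wait_time` is not modeled, and the sample lines are made up.

```python
import re
from typing import Dict, Iterable, List

# A new log entry starts with a timestamp such as "2023-10-30 12:00:00".
FIRSTLINE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{1,2}:\d{2}:\d{2}")
# Split an entry into its timestamp and the (possibly multiline) message.
ENTRY = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{1,2}:\d{2}:\d{2},\d{3}) (?P<message>(?s:.*))$"
)


def group_multiline(lines: Iterable[str]) -> List[str]:
    """Multiline stage: append continuation lines to the previous entry."""
    entries: List[str] = []
    for line in lines:
        if FIRSTLINE.match(line) or not entries:
            entries.append(line)
        else:
            entries[-1] += "\n" + line
    return entries


def parse_entry(entry: str) -> Dict[str, str]:
    """Regex stage: extract the named groups, or {} when the entry does not match."""
    match = ENTRY.match(entry)
    return match.groupdict() if match else {}


raw = [
    "2023-10-30 12:00:00,123 ERROR boom",
    "Traceback (most recent call last):",
    '  File "main.py", line 1, in <module>',
    "2023-10-30 12:00:01,456 INFO ok",
]
entries = group_multiline(raw)
print(len(entries))                    # 2
print(parse_entry(entries[0])["time"]) # 2023-10-30 12:00:00,123
```

Without the multiline step, each traceback line would arrive in Loki as its own entry and lose the timestamp and trace id of the line that started it.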

# docker-compose-grafana.yaml

x-logging: &default-logging # anchor(&): 'default-logging' for defines a chunk of configuration

driver: loki

options:

loki-url: 'http://localhost:3100/api/prom/push'

loki-pipeline-stages: |

- multiline:

firstline: '^\d{4}-\d{2}-\d{2} \d{1,2}:\d{2}:\d{2}'

max_wait_time: 3s

- regex:

expression: '^(?P<time>\d{4}-\d{2}-\d{2} \d{1,2}:\d{2}:\d{2},d{3}) (?P<message>(?s:.*))$$'

# Use $$ (double-dollar sign) when your configuration needs a literal dollar sign.

version: "3.4"

services:

foo: # sample service

image: foo

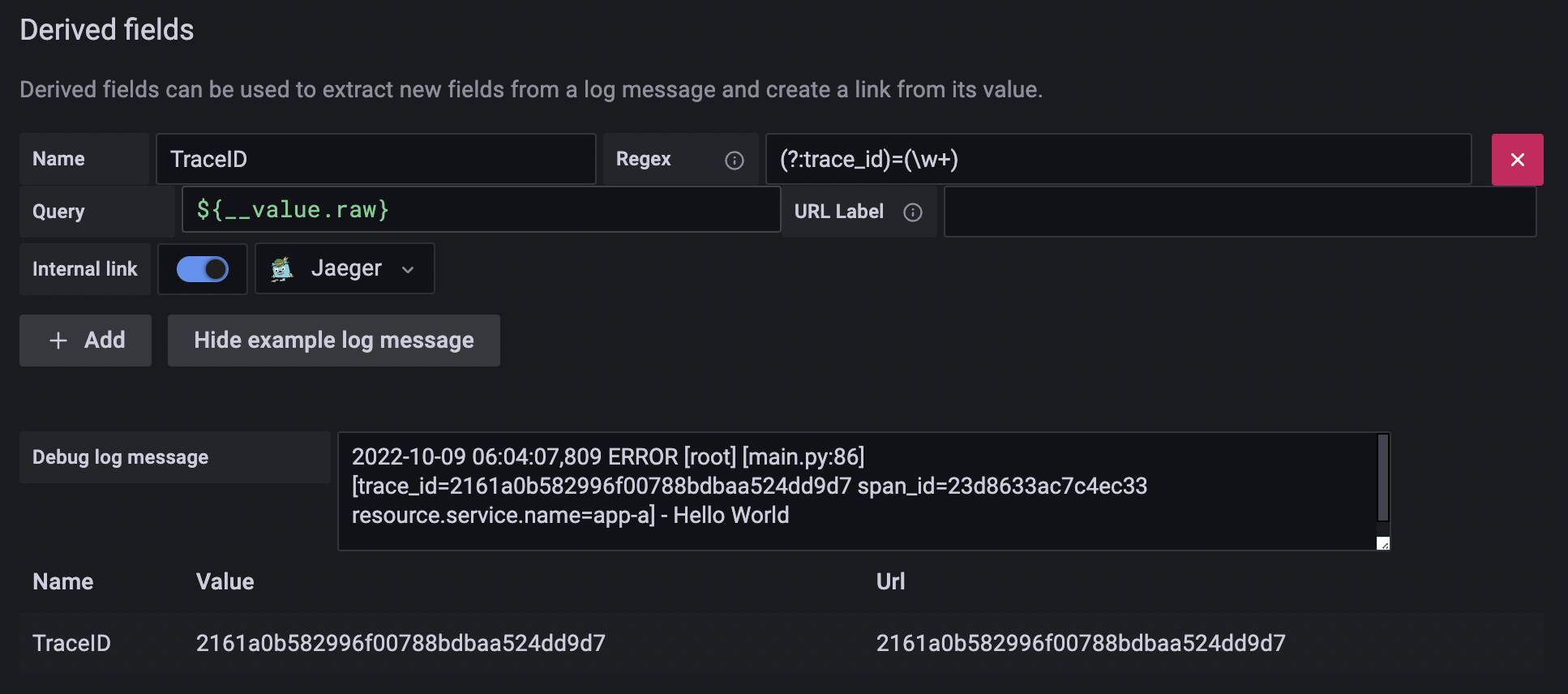

logging: *default-logging # alias(*): refer to 'default-logging' chunk Add a TraceID derived field to extract the trace id and create a Jaeger link from the trace id.

Grafana data source setting example:

Grafana data source config example:

    # etc/grafana/datasource.yml
    name: Loki
    type: loki
    typeName: Loki
    access: proxy
    url: http://loki:3100
    password: ''
    user: ''
    database: ''
    basicAuth: false
    isDefault: false
    jsonData:
      derivedFields:
        - datasourceUid: jaeger
          matcherRegex: (?:trace_id)=(\w+)
          name: TraceID
          url: $${__value.raw}
          # Use $$ (double-dollar sign) when your configuration needs a literal dollar sign.
    readOnly: false
    editable: true

- Add Jaeger and Loki to the data sources with the config file `etc/grafana/datasource.yml`.

    # grafana in docker-compose-grafana.yaml
    services:
      grafana:
        image: grafana/grafana:9.4.13
        ports:
          - "3000:3000"
        volumes:
          - ./etc/grafana/:/etc/grafana/provisioning/datasources # data sources
        environment:
          GF_AUTH_ANONYMOUS_ENABLED: "true"
          GF_AUTH_ANONYMOUS_ORG_ROLE: "Admin"
          GF_AUTH_DISABLE_LOGIN_FORM: "true"