Created by Thanos Delatolas, Vicky Kalogeiton, Dim P. Papadopoulos

[Paper (WACV 2024)] [Project page] [Extended Abstract (ICCV-W 2023)]

conda env create -f environment.yaml

Download the data with python download_data.py. The data should be arranged with the following layout:

data

├── DAVIS_17

│ ├── Annotations

│ ├── ImageSets

│ └── JPEGImages

│

├── MOSE

│ ├── Annotations

│ ├── ImageSets

│ └── JPEGImages

The script download_data.py also creates the train/val/test splits in MOSE, as discussed in the paper. If gdown denies access to the MOSE dataset, you can manually download MOSE from here and place it in the directory: ./data/MOSE/

Download the model weights with python download_weights.py. The weights should be arranged with the following layout:

model_weights

├── mivos

│ ├── stcn.pth

│ └── fusion.pth

├── qnet

│ └── qnet.pth

├── rl_agent

│ └── model.pth

├── sam

│ └── sam.pth

We provide the weights of MiVOS trained only on YouTube-VOS. If you wish to replicate the training process, please refer to the original repository.
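Before training or evaluation, it can help to confirm that both layouts above are in place. The following is a minimal sketch, not part of the repository: the helper name `find_missing` is hypothetical, and it assumes the script is run from the repository root, with the expected paths copied from the two trees shown above.

```python
from pathlib import Path

# Expected entries, copied from the two layouts above
# (paths are relative to the repository root, which is an assumption).
EXPECTED_DATA = [
    "data/DAVIS_17/Annotations",
    "data/DAVIS_17/ImageSets",
    "data/DAVIS_17/JPEGImages",
    "data/MOSE/Annotations",
    "data/MOSE/ImageSets",
    "data/MOSE/JPEGImages",
]
EXPECTED_WEIGHTS = [
    "model_weights/mivos/stcn.pth",
    "model_weights/mivos/fusion.pth",
    "model_weights/qnet/qnet.pth",
    "model_weights/rl_agent/model.pth",
    "model_weights/sam/sam.pth",
]


def find_missing(root, expected):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = find_missing(".", EXPECTED_DATA + EXPECTED_WEIGHTS)
    for rel in missing:
        print(f"missing: {rel}")
    if not missing:
        print("data and model_weights layouts look complete")
```

The check only tests that each path exists. It does not verify the contents of the datasets or the integrity of the weight files.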

- Generate Frame Quality Dataset:

python generate_fq_dataset.py --imset subset_train_4

and

python generate_fq_dataset.py --imset val

- Train QNet:

python train_qnet.py

- Generate the annotation type dataset:

python generate_annotation_dataset.py --imset subset_train_4

- Train the RL Agent:

python train_rl_agent.py
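The training steps above can also be chained from a single driver. The sketch below is not part of the repository. It assumes the steps are meant to run in the order listed, that it is launched from the repository root, and that a failed step should stop the run. The names `build_commands` and `run_pipeline` and the `--run` switch are hypothetical and belong to this sketch only.

```python
import subprocess
import sys

# The training steps, in the order listed above.
TRAINING_STEPS = [
    ["generate_fq_dataset.py", "--imset", "subset_train_4"],
    ["generate_fq_dataset.py", "--imset", "val"],
    ["train_qnet.py"],
    ["generate_annotation_dataset.py", "--imset", "subset_train_4"],
    ["train_rl_agent.py"],
]


def build_commands(python=sys.executable):
    """Prefix each step with the interpreter used to run it."""
    return [[python, *step] for step in TRAINING_STEPS]


def run_pipeline(dry_run=True):
    """Print each step and, unless dry_run is set, run it; stop at the first failure."""
    for cmd in build_commands():
        print("step:", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical switch of this sketch: only execute when --run is given.
    run_pipeline(dry_run="--run" not in sys.argv)
```

Without `--run` the driver only prints the commands, which makes it easy to review the sequence before committing GPU time.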

The script eval_annotation_method.py runs all annotation methods, and scripts/eval.sh runs all the experiments. The scripts vis/frame_selection.py and vis/full_pipeline.py plot the results of those experiments. To speed up the process, we recommend running the experiments in parallel on multiple GPUs.
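One possible way to spread experiments over several GPUs is to start one process per GPU and pin each process with the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below is an illustration under that assumption, not the repository's own launcher. The helpers `assign_gpus` and `launch` are hypothetical, and the command lists must be supplied by the caller, since the arguments of eval_annotation_method.py are not listed here (scripts/eval.sh is the place to look them up).

```python
import os
import subprocess


def assign_gpus(commands, num_gpus):
    """Pair each command with a GPU index in round-robin order."""
    return [(i % num_gpus, cmd) for i, cmd in enumerate(commands)]


def launch(commands, num_gpus):
    """Run commands in waves of num_gpus, one process per GPU; return the exit codes."""
    exit_codes = []
    jobs = assign_gpus(commands, num_gpus)
    for start in range(0, len(jobs), num_gpus):
        procs = []
        for gpu, cmd in jobs[start:start + num_gpus]:
            # CUDA_VISIBLE_DEVICES restricts the child process to a single GPU.
            env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
            procs.append(subprocess.Popen(cmd, env=env))
        # Wait for the whole wave before starting the next one.
        exit_codes.extend(p.wait() for p in procs)
    return exit_codes
```

Each entry of `commands` is an argument list as accepted by `subprocess.Popen`. Running in waves keeps the sketch simple, at the cost of leaving a GPU idle while the slowest job of a wave finishes.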

@inproceedings{delatolas2024learning,

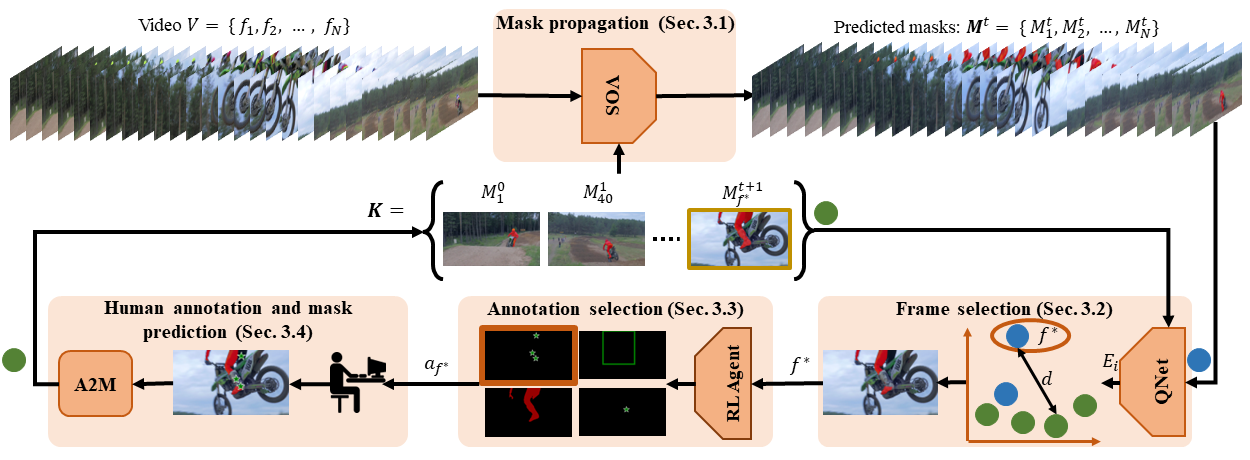

title={Learning the What and How of Annotation in Video Object Segmentation},

author={Thanos Delatolas and Vicky Kalogeiton and Dim P. Papadopoulos},

year={2024},

booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}

}