Semantic Segmentation of HyperSpectral Images using a U-Net with Separable Convolutions.

Please do not use this codebase for your experiments.

In my opinion, the results presented in this document are not a fair analysis, for two reasons.

Reason one is that there is data leakage between the train and val sets. Since patches are created using stride 1, some patches partially overlap with a few other patches. Also, since synthetic patches are created from smaller patches, some common information may be present across various patches. Since the general and synthetic patches are first created, then concatenated, and only then split into train and val sets, there is data leakage.
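
To make the overlap concrete, here is a minimal sketch (not the code in this repository) that enumerates stride-1 patches on a small grid, shuffles and splits them, and measures how many validation patches share at least one pixel with a training patch. The 12x12 grid, the 80/20 split, and all helper names are assumptions chosen for illustration.

```python
import random

def patch_pixels(top, left, patch_size):
    """Return the set of (row, col) pixels covered by one square patch."""
    return {(top + dr, left + dc)
            for dr in range(patch_size)
            for dc in range(patch_size)}

def stride_one_patches(height, width, patch_size):
    """Enumerate every patch position on a height x width grid with stride 1."""
    return [patch_pixels(r, c, patch_size)
            for r in range(height - patch_size + 1)
            for c in range(width - patch_size + 1)]

def leaked_fraction(train, val):
    """Fraction of validation patches sharing at least one pixel with training."""
    train_pixels = set().union(*train)
    leaked = sum(1 for patch in val if patch & train_pixels)
    return leaked / len(val)

# Hypothetical 12x12 image with 4x4 patches, split 80/20 after shuffling,
# which mirrors the "create all patches first, split afterwards" order.
patches = stride_one_patches(12, 12, 4)
rng = random.Random(0)
rng.shuffle(patches)
cut = int(0.8 * len(patches))
train, val = patches[:cut], patches[cut:]
print(f"{len(patches)} patches, leaked fraction = {leaked_fraction(train, val):.2f}")
```

Two horizontally adjacent 4x4 patches share 12 of their 16 pixels, so a random split over stride-1 patches almost inevitably places near-duplicates on both sides. Splitting the image into disjoint regions before extracting patches would avoid this by construction.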

Reason two is that I think the final performance mentioned in the document is over all pixels, which naturally would include the ones used for training as well. Hence the numbers may not be representative of the effectiveness of the models.
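
A fairer number would score only pixels that were never seen during training. The sketch below assumes that a per-pixel boolean training mask is available (this repository does not produce one) and that label 0 marks unlabeled background; the function name and the toy data are hypothetical.

```python
def held_out_accuracy(y_true, y_pred, train_mask):
    """Overall accuracy over labeled pixels that were not used for training.

    All three arguments are flat sequences of equal length. Label 0 is
    treated as unlabeled background (an assumption of this sketch).
    """
    correct = total = 0
    for truth, pred, used_for_training in zip(y_true, y_pred, train_mask):
        if used_for_training or truth == 0:
            continue  # skip training pixels and unlabeled pixels
        total += 1
        correct += int(truth == pred)
    return correct / total if total else float("nan")

# Toy example: six pixels, two of which were used for training.
y_true = [1, 1, 2, 2, 0, 3]
y_pred = [1, 2, 2, 2, 3, 1]
train_mask = [True, False, False, True, False, False]
print(f"{held_out_accuracy(y_true, y_pred, train_mask):.2f}")  # 0.33
```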

In retrospect, I also think the idea of synthetic patches is not good. This is because the smaller patches that make up a synthetic patch belong to different parts of the full image and hence the spatial context within a synthetic patch may not be useful.

Due to the above reasons, please do not use this codebase. Since this repo was created long ago I do not remember how/why things ended up this way. I wanted to post this update for quite a while now but I kept forgetting and/or postponing. I am truly sorry for the inconvenience.

Currently there are no plans to resolve the aforementioned issues and hence the repository will be made read-only. Hence, once again, please do not use this codebase and I am truly sorry for the inconvenience. If you have any questions, please send me an email (email ID will be available in my profile).

- HyperSpectral Images (HSI) are semantically segmented using two variants of U-Nets and their performance is compared.

- Model A uses Depthwise Separable Convolutions in the downsampling arm of the U-Net, and Model B uses Convolutions in the downsampling arm of the U-Net.

- Due to the lack of multiple HSI image and ground truth pairs, we train the models by extracting patches of the image. Here, patches are smaller square regions of the image. After training the model, we make predictions patch-wise.

- Patches are extracted using a stride of 1 for training. We used patches of size `patch_size = 4` (4x4 square regions) for our experiment.

- We use the `sample-weight` feature offered by Keras to weight the classes in the loss function by their log frequency. We use this as there is a skew in the number of examples per class.

- Some classes do not have patches of size `patch_size = 4`. For these classes, we create synthetic patches of size `patch_size = 4` by using patches of size 1.

- Experimental results are tabulated below.
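
Two of the points above can be checked with a few lines of arithmetic. The sketch below first compares the weight count of a standard convolution with that of a depthwise separable one, and then shows one plausible reading of "log frequency" class weighting. The exact weighting formula is not given in this README, so `log(total / count)` with a floor of 1.0 is an assumption, as are the function names and the layer sizes.

```python
import math

def conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out

def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (biases ignored):
    one kernel x kernel filter per input channel, then a 1x1 pointwise mix."""
    return kernel * kernel * c_in + c_in * c_out

def log_frequency_weights(class_counts, floor=1.0):
    """Assumed weighting: log(total / count) per class, never below `floor`."""
    total = sum(class_counts.values())
    return {label: max(floor, math.log(total / count))
            for label, count in class_counts.items()}

# Hypothetical layer: 3x3 kernel, 200 input channels, 64 output channels.
print(conv_params(3, 200, 64), separable_conv_params(3, 200, 64))  # 115200 14600

# Three ground-truth sample counts taken from the results table in this README.
counts = {"Oats": 20, "Alfalfa": 46, "Soybean mintill": 2455}
for label, weight in log_frequency_weights(counts).items():
    print(f"{label}: {weight:.2f}")
```

Under this assumed formula, rare classes such as Oats receive a larger weight than frequent ones such as Soybean mintill, which is the intent of weighting against class skew.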

- Keras

- TensorFlow (>1.4)

- Scikit-Learn

- NumPy

- SciPy

- Clone the repository and change working directory using:

      git clone https://github.com/thatbrguy/Hyperspectral-Image-Segmentation.git \
        && cd Hyperspectral-Image-Segmentation

- Download the dataset from here or by using the following commands:

      wget http://www.ehu.eus/ccwintco/uploads/6/67/Indian_pines_corrected.mat
      wget http://www.ehu.eus/ccwintco/uploads/c/c4/Indian_pines_gt.mat

- Train the model using:

      python main.py \
        --model A \
        --mode train

The file main.py supports a few options, which are listed below:

- `--model`: (required) Choose between models `A` and `B`.

- `--mode`: (required) Choose between training (`train`) and inference (`infer`) modes.

- `--weights`: (required for inference only) Path of the weights file for inference mode. Pretrained weights for both models (`A` and `B`) are available in the `weights` directory.

- `--epochs`: Set the number of epochs. Default value is `100`.

- `--batch_size`: Set the batch size. Default value is `200`.

- `--lr`: Set the learning rate. Default value is `0.001`.
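
For reference, the options above could be declared with `argparse` roughly as follows. This is a sketch reconstructed from the option list only; the actual `main.py` may be organised differently, and the `build_parser` and `parse_args` helper names are hypothetical.

```python
import argparse

def build_parser():
    """Declare the command-line options described in the list above."""
    parser = argparse.ArgumentParser(description="Train or run a U-Net on HSI patches.")
    parser.add_argument("--model", required=True, choices=["A", "B"])
    parser.add_argument("--mode", required=True, choices=["train", "infer"])
    parser.add_argument("--weights", default=None,
                        help="path of the weights file (needed for infer mode)")
    parser.add_argument("--epochs", type=int, default=100)
    parser.add_argument("--batch_size", type=int, default=200)
    parser.add_argument("--lr", type=float, default=0.001)
    return parser

def parse_args(argv=None):
    """Parse arguments and enforce that infer mode comes with a weights file."""
    parser = build_parser()
    args = parser.parse_args(argv)
    if args.mode == "infer" and args.weights is None:
        parser.error("--weights is required when --mode is infer")
    return args

# Equivalent to: python main.py --model A --mode train
args = parse_args(["--model", "A", "--mode", "train"])
print(args.epochs, args.batch_size, args.lr)  # 100 200 0.001
```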

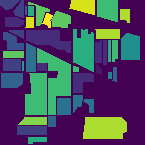

| Ground Truth | Model A | Model B |
|---|---|---|
| | | |

| Class Number | Class Name | Ground Truth Samples | Model A Predicted Samples | Model A Accuracy (%) | Model B Predicted Samples | Model B Accuracy (%) |
|---|---|---|---|---|---|---|
| 1 | Alfalfa | 46 | 30 | 65.21 | 29 | 63.04 |
| 2 | Corn notill | 1428 | 1343 | 94.05 | 1324 | 92.72 |
| 3 | Corn mintill | 830 | 753 | 90.72 | 762 | 91.81 |
| 4 | Corn | 237 | 189 | 79.75 | 186 | 78.48 |
| 5 | Grass pasture | 483 | 449 | 92.96 | 439 | 90.89 |
| 6 | Grass trees | 730 | 717 | 98.22 | 710 | 97.26 |
| 7 | Grass pasture mowed | 28 | 28 | 100 | 28 | 100 |
| 8 | Hay windrowed | 478 | 476 | 99.58 | 473 | 98.95 |
| 9 | Oats | 20 | 20 | 100 | 7 | 35 |
| 10 | Soybean notill | 972 | 843 | 86.72 | 844 | 86.83 |
| 11 | Soybean mintill | 2455 | 2328 | 94.83 | 2311 | 94.13 |
| 12 | Soybean clean | 593 | 524 | 88.36 | 530 | 89.38 |
| 13 | Wheat | 205 | 175 | 85.37 | 176 | 85.85 |
| 14 | Woods | 1265 | 1246 | 98.50 | 1229 | 97.15 |
| 15 | Buildings Grass Trees Drives | 386 | 386 | 100 | 382 | 98.96 |
| 16 | Stone Steel Towers | 93 | 92 | 98.92 | 91 | 97.85 |
| | Overall Accuracy (OA) | | | 93.66 | | 92.90 |
| | Average Accuracy (AA) | | | 92.07 | | 87.39 |
| | Kappa Coefficient (k) | | | 92.77 | | 91.91 |
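
For readers unfamiliar with the three summary metrics, the sketch below computes them from a confusion matrix using their standard definitions. It is not taken from this repository; the function name and the toy matrix are made up for illustration.

```python
def segmentation_metrics(confusion):
    """Return (OA, AA, kappa) from a square confusion matrix.

    confusion[i][j] counts pixels of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(n)]
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]

    # Overall accuracy: correctly classified pixels over all pixels.
    oa = sum(diag) / total
    # Average accuracy: mean of per-class accuracies over classes that occur.
    per_class = [d / r for d, r in zip(diag, row_sums) if r]
    aa = sum(per_class) / len(per_class)
    # Cohen's kappa: agreement corrected for the agreement expected by chance.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa

# Toy 2-class confusion matrix (made up for illustration).
oa, aa, kappa = segmentation_metrics([[8, 2], [1, 9]])
print(f"OA={oa:.2f} AA={aa:.2f} kappa={kappa:.2f}")  # OA=0.85 AA=0.85 kappa=0.70
```

The per-class counts in the table are enough to recompute OA and AA: for Model A, 9599 correctly predicted samples out of 10249 ground truth samples gives an OA of about 93.66%. Kappa requires the full confusion matrix, which is not reproduced in this document.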