Official implementation of PointCLIP V2: Prompting CLIP and GPT for Powerful 3D Open-world Learning.

PointCLIP V1, accepted at CVPR 2022, is open-sourced in a separate repository.

- We release the code for zero-shot 3D classification and part segmentation 🔥.

- Check out our latest 3D works at CVPR 2023 🚀: Point-NN for non-parametric 3D analysis and I2P-MAE for 2D-guided 3D pre-training.

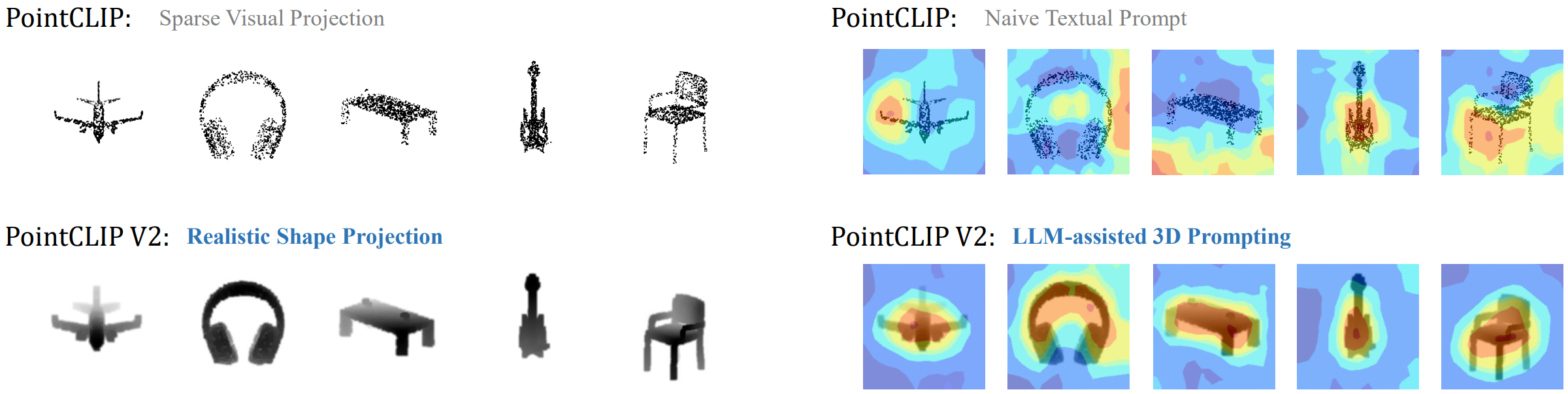

PointCLIP V2 is a powerful 3D open-world learner that improves on the performance of PointCLIP by significant margins. V2 uses a realistic shape projection module to generate depth maps, and adopts LLM-assisted 3D prompts to align visual and language representations. Besides classification, PointCLIP V2 also supports zero-shot part segmentation and 3D object detection.
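To make the pipeline above concrete, here is a minimal sketch of its two ideas: rendering a point cloud as a depth map, then matching per-view visual features against per-class text features. It is not the code in this repository. `project_to_depth_map` and `zero_shot_classify` are hypothetical names, the projection is a plain orthographic one rather than the realistic shape projection module, and the feature arrays stand in for CLIP encoder outputs. Summing the similarities over views with equal weights is also an assumption.

```python
import numpy as np


def project_to_depth_map(points, resolution=32):
    """Orthographically project an (N, 3) point cloud along z into a depth map.

    Simplified stand-in for illustration only; it does not reproduce the
    realistic shape projection used by PointCLIP V2.
    """
    points = np.asarray(points, dtype=np.float32)
    # Normalize the cloud into the unit cube while preserving aspect ratio.
    mins = points.min(axis=0)
    span = float((points.max(axis=0) - mins).max())
    span = span if span > 0 else 1.0
    normed = (points - mins) / span

    # Map x/y to pixel columns/rows, clamping the upper edge into the grid.
    cols = np.minimum((normed[:, 0] * resolution).astype(int), resolution - 1)
    rows = np.minimum((normed[:, 1] * resolution).astype(int), resolution - 1)

    # Keep the largest normalized z per pixel; empty pixels stay at 0,
    # so points at z = 0 are indistinguishable from background here.
    depth = np.zeros((resolution, resolution), dtype=np.float32)
    np.maximum.at(depth, (rows, cols), normed[:, 2])
    return depth


def zero_shot_classify(view_features, text_features):
    """Pick the class whose text feature best matches the multi-view features.

    view_features: (V, D) array, one feature per projected view (assumed to
        come from an image encoder such as CLIP's).
    text_features: (C, D) array, one feature per class prompt (assumed to
        come from a text encoder such as CLIP's).
    """
    v = np.asarray(view_features, dtype=np.float32)
    t = np.asarray(text_features, dtype=np.float32)
    # L2-normalize so the dot product is a cosine similarity.
    v = v / np.linalg.norm(v, axis=1, keepdims=True)
    t = t / np.linalg.norm(t, axis=1, keepdims=True)
    logits = v @ t.T  # shape (V, C)
    # Aggregate over views with equal weights (an assumption of this sketch).
    return int(logits.sum(axis=0).argmax())
```

In practice, the depth maps from several viewpoints would be fed to a visual encoder and the class prompts to a text encoder; the sketch only shows how the resulting features could be compared.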

Please see the zeroshot_cls folder for zero-shot 3D classification, and the zeroshot_seg folder for zero-shot part segmentation.

Thanks for citing our paper:

@article{Zhu2022PointCLIPV2,
  title={PointCLIP V2: Prompting CLIP and GPT for Powerful 3D Open-world Learning},
  author={Zhu, Xiangyang and Zhang, Renrui and He, Bowei and Guo, Ziyu and Zeng, Ziyao and Qin, Zipeng and Zhang, Shanghang and Gao, Peng},
  journal={arXiv preprint arXiv:2211.11682},
  year={2022},
}

If you have any questions about this project, please feel free to contact xiangyzhu6-c@my.cityu.edu.hk or zhangrenrui@pjlab.org.cn.