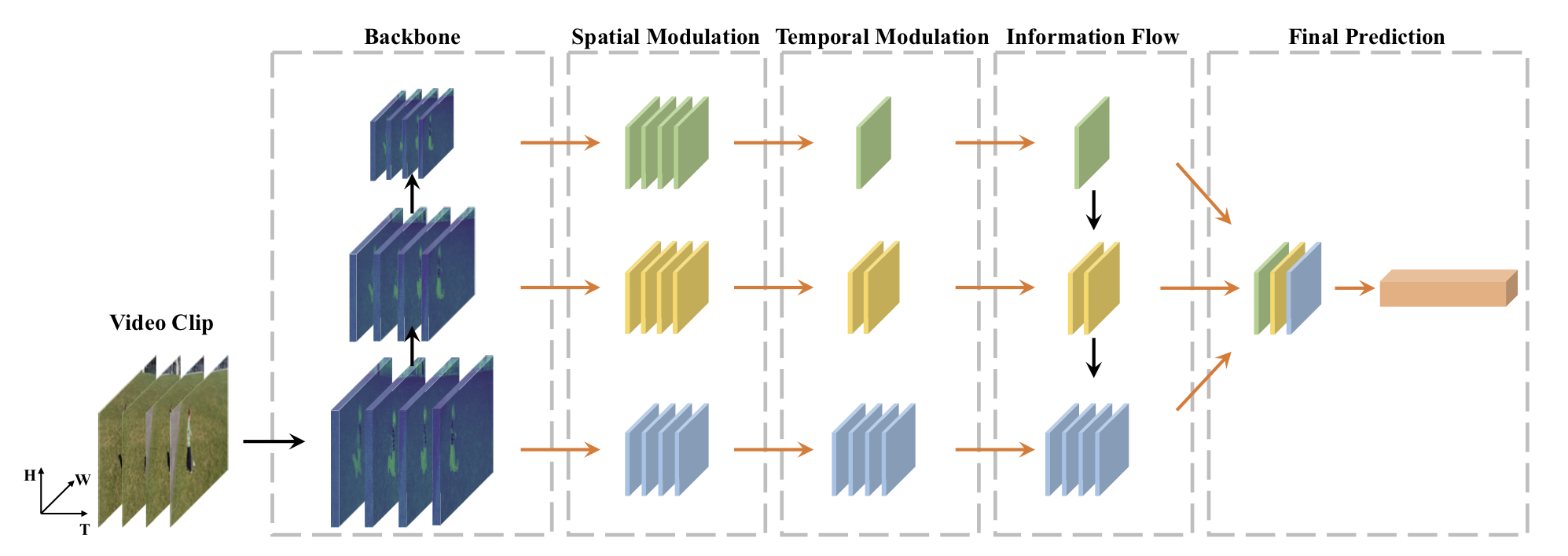

Temporal Pyramid Network for Action Recognition

Run demo

Try the hosted demo right away.

Click the button below to go to the hosting site. Then, click the blue link, which is the endpoint.

General

[Paper]

[Project Page]

License

The project is released under the Apache 2.0 license.

Model Zoo

Results and reference models are available in the model zoo.

Installation and Data Preparation

Please refer to INSTALL for installation and DATA for data preparation.

Get Started

Please refer to GETTING_STARTED for detailed usage.

Quick Demo

We provide test_video.py to run inference on a single video.

Download the checkpoints, put them under ckpt/, and run:

python ./test_video.py ${CONFIG_FILE} ${CHECKPOINT_FILE} --video_file ${VIDEO_NAME} --label_file ${LABEL_FILE} --rendered_output ${RENDERED_NAME}

Arguments:

--video_file: Path to the demo video. Default is ./demo/demo.mp4.

--label_file: The label file for the pretrained model. Default is demo/category.txt.

--rendered_output: The output file name. If specified, the script renders an output video with the label name. Default is demo/demo_pred.webm.
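The flags and defaults listed above can be summarized as a small argparse sketch. This is not the actual source of test_video.py. The function name build_parser and the positional argument names config and checkpoint are hypothetical, and only the three flags and their documented defaults come from this README.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented flags (not the real test_video.py)."""
    parser = argparse.ArgumentParser(description="Run inference on a single video")
    # Positional arguments, in the order used by the command shown above.
    # The names "config" and "checkpoint" are assumptions for illustration.
    parser.add_argument("config", help="path to the model config file")
    parser.add_argument("checkpoint", help="path to the checkpoint file")
    # Optional flags with the defaults documented in this README.
    parser.add_argument("--video_file", default="./demo/demo.mp4",
                        help="path to the demo video")
    parser.add_argument("--label_file", default="demo/category.txt",
                        help="label file for the pretrained model")
    parser.add_argument("--rendered_output", default="demo/demo_pred.webm",
                        help="file name of the rendered output video")
    return parser


if __name__ == "__main__":
    # Parse the example invocation from this README without any optional flags.
    args = build_parser().parse_args(
        ["config_files/sthv2/tsm_tpn.py", "ckpt/sthv2_tpn.pth"]
    )
    print(args.video_file, args.label_file, args.rendered_output)
```

With no optional flags given, the three options fall back to the defaults listed above, which is why the shortest form of the command only needs a config file and a checkpoint.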

For example, to run prediction on the demo video (download here and put it under demo/), run:

python ./test_video.py config_files/sthv2/tsm_tpn.py ckpt/sthv2_tpn.pth
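When the demo has to be run on several videos, the same command can be assembled from Python. The following is a minimal sketch under stated assumptions: the helper build_demo_command is hypothetical and not part of this repo, and it only uses the flags documented in this README.

```python
import shlex


def build_demo_command(config, checkpoint, video_file=None,
                       label_file=None, rendered_output=None):
    """Assemble the test_video.py command line as a list of arguments.

    Hypothetical helper for illustration; it is not part of the repository.
    """
    cmd = ["python", "./test_video.py", config, checkpoint]
    # Pass only the flags that were set explicitly, so the script's own
    # documented defaults apply to everything else.
    optional = (
        ("--video_file", video_file),
        ("--label_file", label_file),
        ("--rendered_output", rendered_output),
    )
    for flag, value in optional:
        if value is not None:
            cmd += [flag, value]
    return cmd


if __name__ == "__main__":
    cmd = build_demo_command(
        "config_files/sthv2/tsm_tpn.py",
        "ckpt/sthv2_tpn.pth",
        video_file="demo/demo.mp4",
    )
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(cmd))
```

The returned list can be passed to subprocess.run(cmd, check=True) from the repository root. Building the command as a list rather than a single string avoids quoting problems with file names that contain spaces.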

The rendered output video:

Acknowledgement

We really appreciate the developers of MMAction for such a wonderful codebase. We also thank Yue Zhao for the insightful discussion.

Contact

This repo is currently maintained by Ceyuan Yang (@limbo0000) and Yinghao Xu (@justimyhxu).

Bibtex

@inproceedings{yang2020tpn,
  title={Temporal Pyramid Network for Action Recognition},
  author={Yang, Ceyuan and Xu, Yinghao and Shi, Jianping and Dai, Bo and Zhou, Bolei},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2020},
}