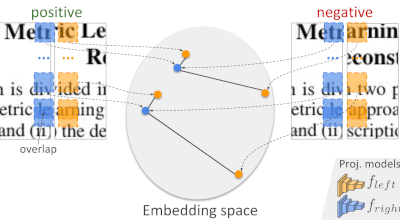

Fast(er) Reconstruction of Shredded Text Documents via Self-Supervised Deep Asymmetric Metric Learning

This repository comprises the datasets and source code used in our CVPR 2020 paper.

Python dependencies are listed in requirements.txt. Third-party optimization dependencies include Concorde and QSopt.

For a fully-automatic setup of the virtual environment (tested on Linux Ubuntu 22.04), run source scripts/install.sh.

You need sudo privileges to run the installation script properly. The virtual environment will be created in the root directory of the repository. When finished, the script automatically activates the newly created environment.

The datasets comprise (i) the integral documents from which the small samples are extracted and (ii) the mechanically shredded document collections D1 and D2 used in the tests. To download them, just run bash scripts/get_dataset.sh.

The script creates a datasets directory in the current directory. A document in our dataset is a directory named in the format D<3-digits>. It comprises two subdirectories: strips (image files) and masks (binary .npy files).

File names follow the format D<5-digits>. The first 3 digits match the sequence number of the document, while the following 2 digits define the order of the strip image/mask. For instance, datasets/D2/mechanical/D004/strips/D00401.jpeg is the first strip of the document D004 in the dataset D2.
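As an illustration of the naming convention above, the following is a minimal sketch that splits a strip file name into its document identifier and strip order. The helper `parse_strip_name` and the `StripName` type are hypothetical names introduced here for illustration; they are not part of this repository.

```python
import re
from typing import NamedTuple

# Matches names such as "D00401.jpeg": the letter D, 3 document digits,
# 2 strip digits, and any file extension.
_STRIP_NAME = re.compile(r"^D(\d{3})(\d{2})\.\w+$")


class StripName(NamedTuple):
    document: str  # document directory name, e.g. "D004"
    order: int     # position of the strip; 1 is the first strip


def parse_strip_name(filename: str) -> StripName:
    """Split a strip/mask file name into document id and strip order."""
    match = _STRIP_NAME.match(filename)
    if match is None:
        raise ValueError(f"unexpected strip file name: {filename!r}")
    return StripName("D" + match.group(1), int(match.group(2)))
```

For the example path above, `parse_strip_name("D00401.jpeg")` yields the document `D004` and the order `1`.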

Important:

- To use your own dataset, you should strictly follow the structure described above. No warranties are given otherwise.

- In the absence of the masks directory, it is assumed that the strip is composed of all pixels in the image file.
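The fallback rule in the second note can be sketched as a simple path lookup. This is only an illustration under two assumptions that the text above does not state explicitly: that a mask shares its strip's base name (for example D00401.npy for D00401.jpeg), and that it lives in a masks directory next to strips. The helper `find_mask` is a hypothetical name, not a function from this repository.

```python
from pathlib import Path
from typing import Optional


def find_mask(strip_path: str) -> Optional[Path]:
    """Return the mask file for a strip image, or None if there is none.

    Assumed layout: <document>/strips/<name>.<ext> -> <document>/masks/<name>.npy
    A None result means every pixel of the image should be treated as
    belonging to the strip.
    """
    strip = Path(strip_path)
    candidate = strip.parent.parent / "masks" / (strip.stem + ".npy")
    return candidate if candidate.is_file() else None
```

A caller would load the returned .npy file when a path is found and otherwise use the whole image.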

A reconstruction demo is available by running python demo.py. By default, the script uses a pretrained model available in the traindata directory. Here is an example of output of the demo script:

For details of the parameters, you may run python demo.py --help.

To ease replicability, we created bash scripts with the commands used in each experiment. You can just run bash scripts/experiment<ID>.sh replacing <ID> by 1, 2, or 3. As described in the paper, each id corresponds to:

- 1: single-reconstruction experiment

- 2: multi-reconstruction experiment

- 3: sensitivity analysis

Results are stored as .json files containing, among other things, the solution, the initial permutation of the shreds, accuracy, and times. The files generated by experiments 1 and 3 are stored in results/proposed and results/sib18, while those generated by experiment 2 are stored in results/proposed_multi and results/sib18_multi. Results can be visualized with the scripts in the charts directory.
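For a quick look at the result files without the chart scripts, a minimal sketch such as the one below may help. It makes no assumption about the JSON schema and only lists the top-level keys of each file; `load_results` is a hypothetical helper introduced for illustration, not part of this repository.

```python
import json
from pathlib import Path
from typing import Any, Dict


def load_results(directory: str) -> Dict[str, Any]:
    """Parse every .json file in a results directory, keyed by file name."""
    results: Dict[str, Any] = {}
    for path in sorted(Path(directory).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            results[path.name] = json.load(handle)
    return results


if __name__ == "__main__":
    # Print the top-level keys of each file instead of assuming a schema.
    for name, content in load_results("results/proposed").items():
        keys = sorted(content) if isinstance(content, dict) else type(content).__name__
        print(name, keys)
```

Replace results/proposed with any of the other result directories to inspect them in the same way.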