A real-world dataset of anonymized e-commerce sessions for multi-objective recommendation research.


The OTTO session dataset is a large-scale dataset intended for multi-objective recommendation research. We collected the data from anonymized behavior logs of the OTTO webshop and the app. The mission of this dataset is to serve as a benchmark for session-based recommendations and to foster research in the area of multi-objective and session-based recommender systems. We also launched a Kaggle competition with the goal of predicting clicks, cart additions, and orders based on previous events in a user session.

- 12M real-world anonymized user sessions
- 220M events, consisting of `clicks`, `carts` and `orders`
- 1.8M unique articles in the catalogue
- Ready-to-use data in `.jsonl` format
- Evaluation metrics for multi-objective optimization

| Dataset | #sessions | #items | #events | #clicks | #carts | #orders | Density [%] |
|---|---|---|---|---|---|---|---|
| Train | 12,899,779 | 1,855,603 | 216,716,096 | 194,720,954 | 16,896,191 | 5,098,951 | 0.0005 |
| Test | 1,671,803 | TBA | TBA | TBA | TBA | TBA | TBA |

| | mean | std | min | 50% | 75% | 90% | 95% | max |
|---|---|---|---|---|---|---|---|---|
| Train #events per session | 16.80 | 33.58 | 2 | 6 | 15 | 39 | 68 | 500 |
| Test #events per session | TBA | TBA | TBA | TBA | TBA | TBA | TBA | TBA |

| | mean | std | min | 50% | 75% | 90% | 95% | max |
|---|---|---|---|---|---|---|---|---|
| Train #events per item | 116.79 | 728.85 | 3 | 20 | 56 | 183 | 398 | 129,004 |
| Test #events per item | TBA | TBA | TBA | TBA | TBA | TBA | TBA | TBA |

Note: The full test set is not yet available. We will update the tables once the Kaggle competition is over and the test set is released.
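The train statistics in the tables above can be approximated with a short script. This is a minimal sketch, assuming one session object per line of `train.jsonl`. `train_stats`, `describe` and `nearest_rank` are hypothetical helpers, not part of the repository. The percentile convention (nearest rank here) and the standard deviation convention (sample std here) used for the published tables are not stated, so the results may differ slightly.

```python
import json
import statistics
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def nearest_rank(sorted_values: List[int], q: int) -> int:
    """Nearest-rank percentile for integer q in [1, 100] on a sorted list."""
    rank = max(1, -(-q * len(sorted_values) // 100))  # ceil(q * n / 100)
    return sorted_values[rank - 1]


def describe(counts: Iterable[int]) -> Dict[str, float]:
    """Mean, sample std, min, selected percentiles and max (needs >= 2 values)."""
    values = sorted(counts)
    summary: Dict[str, float] = {
        "mean": statistics.fmean(values),
        "std": statistics.stdev(values),  # sample standard deviation (assumption)
        "min": values[0],
    }
    for q in (50, 75, 90, 95):
        summary[f"{q}%"] = nearest_rank(values, q)
    summary["max"] = values[-1]
    return summary


def train_stats(lines: Iterable[str]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Return (events-per-session, events-per-item) summaries for .jsonl lines."""
    per_session: List[int] = []
    per_item: Counter = Counter()
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        events = json.loads(line)["events"]
        per_session.append(len(events))
        per_item.update(event["aid"] for event in events)
    return describe(per_session), describe(per_item.values())
```

Because `train_stats` accepts any iterable of lines, an open file handle such as `open("train.jsonl")` can be passed directly; for the full 12.9M sessions this holds one integer per session and one counter entry per article in memory.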

The data is stored on the Kaggle platform and can be downloaded using their API:

```sh
kaggle competitions download -c otto-recommender-system
```

The sessions are stored as JSON objects containing a unique session ID and a list of events:

```json
{
    "session": 42,
    "events": [
        { "aid": 0, "ts": 1661200010000, "type": "clicks" },
        { "aid": 1, "ts": 1661200020000, "type": "clicks" },
        { "aid": 2, "ts": 1661200030000, "type": "clicks" },
        { "aid": 2, "ts": 1661200040000, "type": "carts" },
        { "aid": 3, "ts": 1661200050000, "type": "clicks" },
        { "aid": 3, "ts": 1661200060000, "type": "carts" },
        { "aid": 4, "ts": 1661200070000, "type": "clicks" },
        { "aid": 2, "ts": 1661200080000, "type": "orders" },
        { "aid": 3, "ts": 1661200080000, "type": "orders" }
    ]
}
```

- `session`: the unique session ID
- `events`: the time-ordered sequence of events in the session
  - `aid`: the article ID (product code) of the associated event
  - `ts`: the Unix timestamp of the event
  - `type`: the event type, i.e., whether a product was clicked, added to the user's cart, or ordered during the session
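The session objects can be streamed from the `.jsonl` files line by line instead of loading the whole file at once. The following is a minimal sketch, assuming one JSON session object per line (as the `.jsonl` extension suggests); the `Event` and `Session` classes and `read_sessions` are hypothetical helpers, not part of the repository.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class Event:
    aid: int   # article id (product code)
    ts: int    # Unix timestamp, kept exactly as stored in the file
    type: str  # "clicks", "carts" or "orders"


@dataclass
class Session:
    session: int
    events: List[Event]  # time-ordered


def read_sessions(lines: Iterable[str]) -> Iterator[Session]:
    """Yield one Session per non-empty line, e.g. from an open train.jsonl file."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines such as a trailing newline
        raw = json.loads(line)
        events = [Event(e["aid"], e["ts"], e["type"]) for e in raw["events"]]
        yield Session(raw["session"], events)
```

Using a generator keeps memory usage flat: `for s in read_sessions(open("train.jsonl")): ...` only ever holds one session in memory.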

For each session ID and type combination in the test set, you must predict the `aid` values in the `labels` column, which is space-delimited. You can predict up to 20 `aid` values per row. The file should contain a header and have the following format:

```csv
session_type,labels
42_clicks,0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19
42_carts,0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19
42_orders,0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19
```
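A submission file in this format can be produced from per-session predictions as follows. This is a minimal sketch; `format_submission` and the nested-dictionary layout of `predictions` (session ID, then event type, then a ranked list of `aid`s) are assumptions for illustration. Lists longer than 20 are cut to their first 20 entries.

```python
import csv
import io
from typing import Dict, List

EVENT_TYPES = ("clicks", "carts", "orders")
MAX_PREDICTIONS = 20  # at most 20 aid values per row


def format_submission(predictions: Dict[int, Dict[str, List[int]]]) -> str:
    """Render {session_id: {event_type: [aid, ...]}} as session_type,labels CSV."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["session_type", "labels"])  # required header row
    for session_id, by_type in predictions.items():
        for event_type in EVENT_TYPES:
            aids = by_type.get(event_type, [])[:MAX_PREDICTIONS]
            # labels are space-delimited inside a single CSV field
            writer.writerow([f"{session_id}_{event_type}", " ".join(str(a) for a in aids)])
    return buffer.getvalue()
```

The returned string can be written to disk with a single `write` call; a row is emitted for every event type even when no prediction is available, leaving its `labels` field empty.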

Submissions are evaluated on Recall@20 for each action type, and the three recall values are combined into a weighted average using the following weights:

```python
{'clicks': 0.10, 'carts': 0.30, 'orders': 0.60}
```
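The weighted score can be sketched as follows. This assumes that Recall@20 for each type is pooled over sessions as the total number of correctly predicted `aid`s divided by the total of min(20, number of ground truth `aid`s), which is consistent with the FAQ answer that predicting 20 correct labels scores 1.0; the repository's `evaluate.py` remains the reference, and `recall_at_k` and `weighted_score` are hypothetical names.

```python
from typing import Dict, List, Set

WEIGHTS = {"clicks": 0.10, "carts": 0.30, "orders": 0.60}
K = 20


def recall_at_k(predictions: Dict[int, List[int]], truth: Dict[int, Set[int]], k: int = K) -> float:
    """Pooled Recall@k: total hits / total min(k, |ground truth|) over sessions."""
    hits = 0
    possible = 0
    for session_id, true_aids in truth.items():
        if not true_aids:
            continue  # sessions without ground truth for this type do not count
        predicted = set(predictions.get(session_id, [])[:k])  # only the first k predictions
        hits += len(predicted & true_aids)
        possible += min(k, len(true_aids))
    return hits / possible if possible else 0.0


def weighted_score(
    predictions: Dict[str, Dict[int, List[int]]],
    truth: Dict[str, Dict[int, Set[int]]],
) -> float:
    """Both arguments are keyed by event type first, then by session id."""
    return sum(
        weight * recall_at_k(predictions.get(event_type, {}), truth.get(event_type, {}))
        for event_type, weight in WEIGHTS.items()
    )
```

With this weighting, a gain in order recall counts six times as much as the same gain in click recall.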

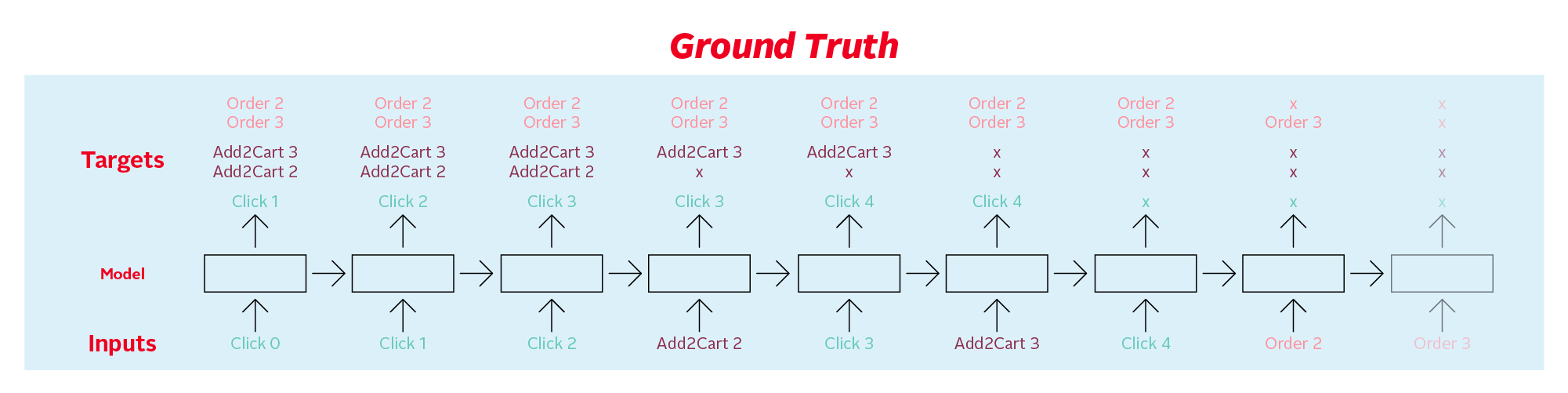

For each session in the test data, your task is to predict the `aid` values for each `type` that occur after the last timestamp `ts` of the test session. In other words, the test data contains sessions truncated by timestamp, and you are to predict what occurs after the point of truncation.

For `clicks` there is only a single ground truth value for each session, which is the next `aid` clicked during the session (although you can still predict up to 20 `aid` values). The ground truth for `carts` and `orders` contains all `aid` values that were added to a cart and ordered, respectively, during the remainder of the session after the truncation point.

The labeled version of the example session from above looks as follows:

```json
[
    {
        "aid": 0,
        "ts": 1661200010000,
        "type": "clicks",
        "labels": { "clicks": 1, "carts": [2, 3], "orders": [2, 3] }
    },
    {
        "aid": 1,
        "ts": 1661200020000,
        "type": "clicks",
        "labels": { "clicks": 2, "carts": [2, 3], "orders": [2, 3] }
    },
    {
        "aid": 2,
        "ts": 1661200030000,
        "type": "clicks",
        "labels": { "clicks": 3, "carts": [2, 3], "orders": [2, 3] }
    },
    {
        "aid": 2,
        "ts": 1661200040000,
        "type": "carts",
        "labels": { "clicks": 3, "carts": [3], "orders": [2, 3] }
    },
    {
        "aid": 3,
        "ts": 1661200050000,
        "type": "clicks",
        "labels": { "clicks": 4, "carts": [3], "orders": [2, 3] }
    },
    {
        "aid": 3,
        "ts": 1661200060000,
        "type": "carts",
        "labels": { "clicks": 4, "orders": [2, 3] }
    },
    {
        "aid": 4,
        "ts": 1661200070000,
        "type": "clicks",
        "labels": { "orders": [2, 3] }
    },
    {
        "aid": 2,
        "ts": 1661200080000,
        "type": "orders",
        "labels": { "orders": [3] }
    }
]
```

To create these labels from unlabeled sessions, you can use the `ground_truth` function in `labels.py`.
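The labeling rule described above can be illustrated with a short, independent sketch: for every event except the last, look at the events that follow it, take the next clicked `aid`, and collect the distinct carted and ordered `aid`s. `ground_truth_sketch` is a hypothetical function that reproduces the example above; it is not the repository's `ground_truth`, whose exact behavior (for example, for events with no future labels) may differ.

```python
from typing import Dict, List, Union

Labels = Dict[str, Union[int, List[int]]]


def ground_truth_sketch(events: List[dict]) -> List[dict]:
    """Attach the labels for 'truncate after this event' to every event but the last."""
    labeled = []
    for i, event in enumerate(events[:-1]):
        future = events[i + 1:]  # events after the truncation point (time-ordered)
        labels: Labels = {}
        next_clicks = [e["aid"] for e in future if e["type"] == "clicks"]
        if next_clicks:
            labels["clicks"] = next_clicks[0]  # only the next click is the target
        for event_type in ("carts", "orders"):
            # distinct aids in order of first occurrence
            aids = list(dict.fromkeys(e["aid"] for e in future if e["type"] == event_type))
            if aids:
                labels[event_type] = aids
        # this sketch keeps events even if their labels dict ends up empty
        labeled.append({**event, "labels": labels})
    return labeled
```

Applied to the nine events of session 42, this yields the eight labeled events shown above.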

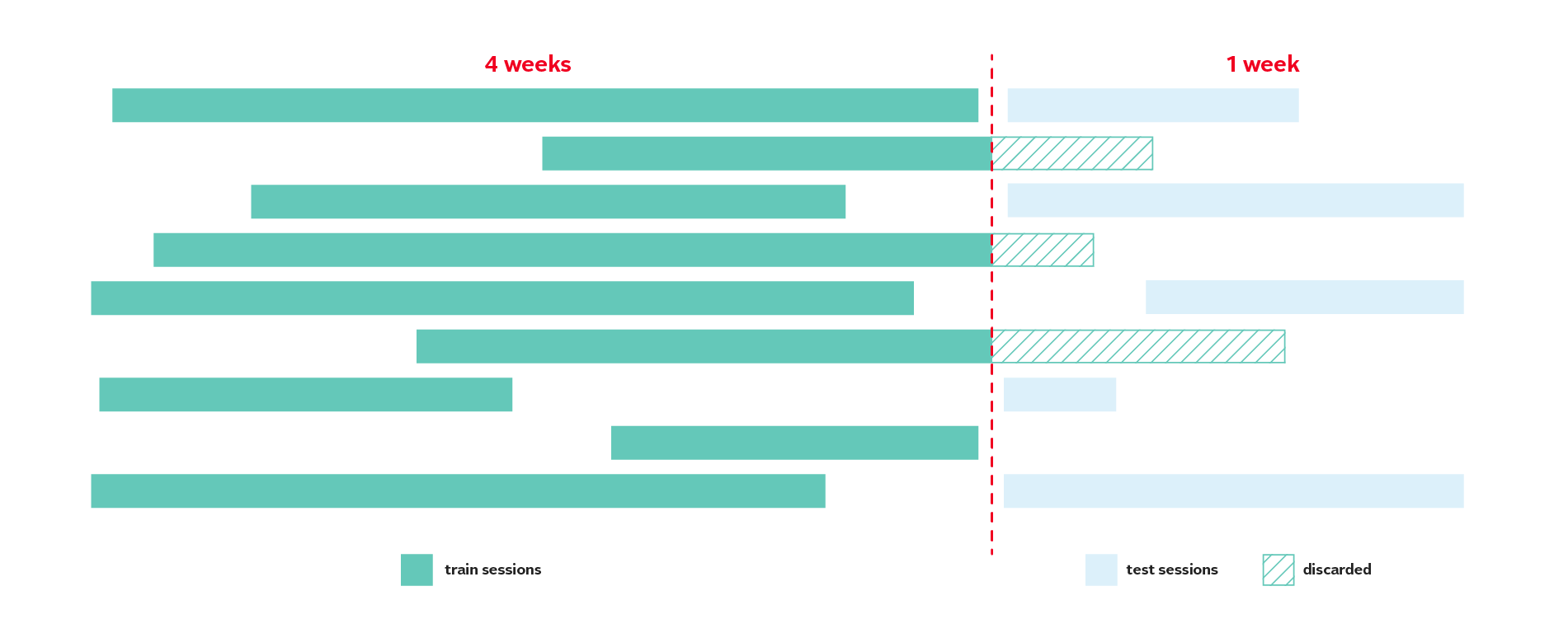

Since we want to evaluate a model's performance in the future, as would be the case when we deploy such a system in an actual webshop, we chose a time-based validation split. Our train set consists of observations from 4 weeks, while the test set contains user sessions from the following week. Furthermore, to prevent information leakage from the future, we trimmed train sessions overlapping with the test period, as depicted in the diagram above.
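A time-based split with trimming can be sketched as follows. This is an illustration under stated assumptions, not the procedure actually used to build the dataset: sessions that start before a hypothetical `cutoff_ts` go to the train set and lose every event at or after the cutoff, while sessions starting at or after the cutoff go to the test set. Events are assumed to be time-ordered and non-empty, and `time_split` is a hypothetical name.

```python
from typing import List, Tuple


def time_split(sessions: List[dict], cutoff_ts: int) -> Tuple[List[dict], List[dict]]:
    """Split sessions at cutoff_ts, trimming train sessions that cross the cutoff."""
    train: List[dict] = []
    test: List[dict] = []
    for session in sessions:
        events = session["events"]  # assumed time-ordered and non-empty
        if events[0]["ts"] >= cutoff_ts:
            test.append(session)  # session starts in the test period
            continue
        # keep only the part of the session that lies before the cutoff
        before = [e for e in events if e["ts"] < cutoff_ts]
        train.append({"session": session["session"], "events": before})
    return train, test
```

Trimming, rather than dropping, overlapping train sessions keeps their pre-cutoff history usable while guaranteeing that no train event is later than any test event.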

We will publish the final test set after the Kaggle competition is finalized. Until then, participants of the competition can create their own truncated test sets from the training sessions and use them to evaluate their models offline. For this purpose, we include a Python script called `testset.py`:

```sh
pipenv run python -m src.testset --train-set train.jsonl --days 2 --output-path 'out/' --seed 42
```

You can use the `evaluate.py` script to calculate the Recall@20 for each action type and the weighted average Recall@20 for your submission:

```sh
pipenv run python -m src.evaluate --test-labels test_labels.jsonl --predictions predictions.csv
```

- A session is all activity by a single user either in the train or the test set.

- Yes, for the scope of the competition, you may use all the data we provided.

- If you correctly predict 20 of the ground truth labels (when there are more than 20), you will score 1.0.

The OTTO dataset is released under the CC-BY 4.0 License, while the code is licensed under the MIT License.

BibTeX entry:

```bibtex
@online{normann2022ottodataset,
  author = {Philipp Normann and Sophie Baumeister and Timo Wilm},
  title = {OTTO Recommender Systems Dataset: A real-world dataset of anonymized e-commerce sessions for multi-objective recommendation research},
  date = {2022-11-01},
}
```