Deep MDMA combines Lucid and librosa to visualize the movement between the layers of a neural network, set to music. Lucid makes beautiful images, but training each image independently and then interpolating between them produces a disjointed animation. This happens because each independently trained CPPN settles into a local minimum that is far from the minima reached for the other samples. The trick is to reuse the initial coordinates from the previous model to train the next one, instead of starting from scratch. This gives continuity, so that one image is trained into the next.
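
To make the continuity argument concrete, here is a toy illustration in plain NumPy. It does not use Lucid or a CPPN, and none of the names come from this repository. Each hypothetical "model" is a parameter vector `w` fitted by gradient descent to the non-convex loss `sum((sin(w) - target)**2)`, which has a separate minimum in every period of `sin`, so where training ends depends on where it starts. Starting every target from a fresh random initialization lands in unrelated minima, while starting from the previous solution keeps consecutive solutions close together.

```python
import numpy as np


def train(target, init, steps=500, lr=0.1):
    """Plain gradient descent on the toy loss sum((sin(w) - target)**2)."""
    w = init.copy()
    for _ in range(steps):
        grad = 2.0 * (np.sin(w) - target) * np.cos(w)
        w -= lr * grad
    return w


def train_sequence(targets, dim=16, warm_start=True, seed=0):
    """Train one toy 'model' per target, optionally starting from the previous one."""
    rng = np.random.RandomState(seed)
    w = rng.uniform(-10.0, 10.0, size=dim)
    solutions = []
    for target in targets:
        if not warm_start:
            # Cold start: a fresh random initialization for every target.
            w = rng.uniform(-10.0, 10.0, size=dim)
        w = train(target, w)
        solutions.append(w)
    return solutions


def mean_jump(solutions):
    """Average parameter-space distance between consecutive solutions."""
    return float(np.mean([np.linalg.norm(b - a)
                          for a, b in zip(solutions, solutions[1:])]))


rng = np.random.RandomState(1)
targets = [rng.uniform(-0.9, 0.9, size=16) for _ in range(8)]
warm = mean_jump(train_sequence(targets, warm_start=True))
cold = mean_jump(train_sequence(targets, warm_start=False))
print("mean jump, warm start:", round(warm, 2), "cold start:", round(cold, 2))
```

Small jumps between consecutive solutions are what make interpolated frames look continuous. With a real CPPN the loss landscape is far more complex, but the same reasoning applies.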

Start with Python 3.6.2, then install TensorFlow 1.x and the rest of the requirements:

```bash
pip install -r requirements.txt
```

To use a GPU, replace the CPU build of TensorFlow with the GPU build:

```bash
pip uninstall tensorflow
pip install tensorflow-gpu==1.14.0
```
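
After installing, a quick check can confirm which TensorFlow build is active and whether it sees a GPU. This is a minimal sketch, not part of the repository; the function name is hypothetical, and it relies on `tf.test.is_gpu_available()`, which exists in TensorFlow 1.x (it is deprecated in 2.x, hence the fallback).

```python
import importlib


def tensorflow_status():
    """Return (version, gpu_available), or (None, False) if TensorFlow is missing."""
    try:
        tf = importlib.import_module("tensorflow")
    except ImportError:
        return None, False
    # tf.test.is_gpu_available() is available in TensorFlow 1.x.
    check = getattr(tf.test, "is_gpu_available", None)
    gpu = bool(check()) if check is not None else False
    return tf.__version__, gpu


if __name__ == "__main__":
    version, gpu = tensorflow_status()
    if version is None:
        print("TensorFlow is not installed")
    else:
        print("TensorFlow", version, "| GPU available:", gpu)
        if not version.startswith("1."):
            print("Warning: this project expects TensorFlow 1.x")
```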

Test a single-layer render with:

```bash
python render_activations.py mixed4a_3x3_pre_relu 25
```

This should produce an image like the one below.

If you omit both arguments (the layer name and the channel index), `render_activations.py` will try to build a model for every channel of every 3x3 mixed layer.
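
Rendering every layer and channel takes a long time, so it can be handy to render only a handful of channels. The sketch below is a hypothetical driver, not part of the repository: it only reuses the `python render_activations.py LAYER CHANNEL` form shown above, the channel range is an arbitrary example, and by default it prints the commands instead of running them. It assumes it is run from the repository root.

```python
import subprocess
import sys


def render_commands(layer, channels, python="python"):
    """Build one render_activations.py command per channel of a layer."""
    return [[python, "render_activations.py", layer, str(c)] for c in channels]


def run_all(commands, dry_run=True):
    """Print each command; actually run it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # Stop at the first render that fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Example only: channels 20-29 of the layer used above.
    cmds = render_commands("mixed4a_3x3_pre_relu", range(20, 30),
                           python=sys.executable)
    run_all(cmds, dry_run="--run" not in sys.argv)
```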

If all goes well, use `smooth_transfer.py` to generate the intermediate models, `sound/collect_beats.py` to measure the beat pattern of a target WAV file, and finally `matching_beats.py` to put it all together.
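
The audio side of the project uses librosa, whose `librosa.beat.beat_track` and `librosa.onset.onset_detect` functions are the usual tools for finding beats and onsets. To show what onset detection does without that dependency, here is a deliberately crude, energy-based onset detector in plain NumPy. It is a stand-in for illustration and not necessarily how `sound/collect_beats.py` works; the frame size, hop size, and threshold are assumptions.

```python
import numpy as np


def onset_envelope(y, frame=1024, hop=512):
    """Half-wave rectified frame-to-frame increase in RMS energy."""
    n_frames = 1 + (len(y) - frame) // hop
    rms = np.array([np.sqrt(np.mean(y[i * hop:i * hop + frame] ** 2))
                    for i in range(n_frames)])
    # Only rising energy counts as an onset; the first frame has no predecessor.
    return np.concatenate([[0.0], np.maximum(0.0, np.diff(rms))])


def detect_onsets(y, sr, frame=1024, hop=512, threshold=0.5):
    """Return onset times in seconds (frame centres of local envelope peaks)."""
    env = onset_envelope(y, frame, hop)
    if env.max() <= 0.0:
        return np.array([])
    env = env / env.max()
    peaks = [i for i in range(1, len(env) - 1)
             if env[i] >= threshold and env[i] >= env[i - 1] and env[i] > env[i + 1]]
    return (np.array(peaks) * hop + frame / 2.0) / sr
```

Real music needs something more robust, such as librosa's spectral-flux onset strength with adaptive peak picking, but the structure is the same: compute an onset envelope, then pick its peaks.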

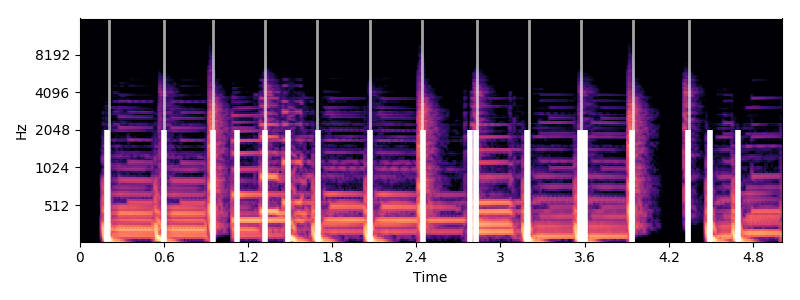

In the plot above, beats are marked by the large white lines, while the thick lines mark onsets (kicks or hi-hats). Images change between beats and pulse on onsets.
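
One way to turn beat and onset times into per-frame animation controls is sketched below. It is a hypothetical scheduler, not the logic of `matching_beats.py`: for each video frame it reports which pair of consecutive models to blend (`segment`), how far the blend has progressed from one beat to the next (`alpha`), and a pulse strength that peaks at each onset and fades out linearly. The frame rate and pulse length are assumptions.

```python
import bisect


def frame_schedule(beats, onsets, fps=30.0, duration=None, pulse_len=0.1):
    """For each video frame, return (segment, alpha, pulse).

    segment: index of the last beat passed (blend model segment into segment + 1).
    alpha:   fraction of the way from that beat to the next one (0 -> 1).
    pulse:   1.0 exactly at an onset, fading linearly to 0 over pulse_len seconds.
    """
    beats = sorted(beats)
    onsets = sorted(onsets)
    if duration is None:
        duration = beats[-1]
    schedule = []
    for f in range(int(duration * fps)):
        t = f / fps
        k = bisect.bisect_right(beats, t) - 1
        if 0 <= k < len(beats) - 1:
            alpha = (t - beats[k]) / (beats[k + 1] - beats[k])
        else:
            alpha = 0.0  # before the first beat or after the last: hold still
        j = bisect.bisect_right(onsets, t) - 1
        pulse = max(0.0, 1.0 - (t - onsets[j]) / pulse_len) if j >= 0 else 0.0
        schedule.append((max(k, 0), alpha, pulse))
    return schedule
```

For example, `frame_schedule([0.0, 0.5, 1.0], [0.25], fps=4.0)` blends halfway between the first two models at t = 0.25 s while pulsing at full strength, then starts the next transition at the beat at t = 0.5 s.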