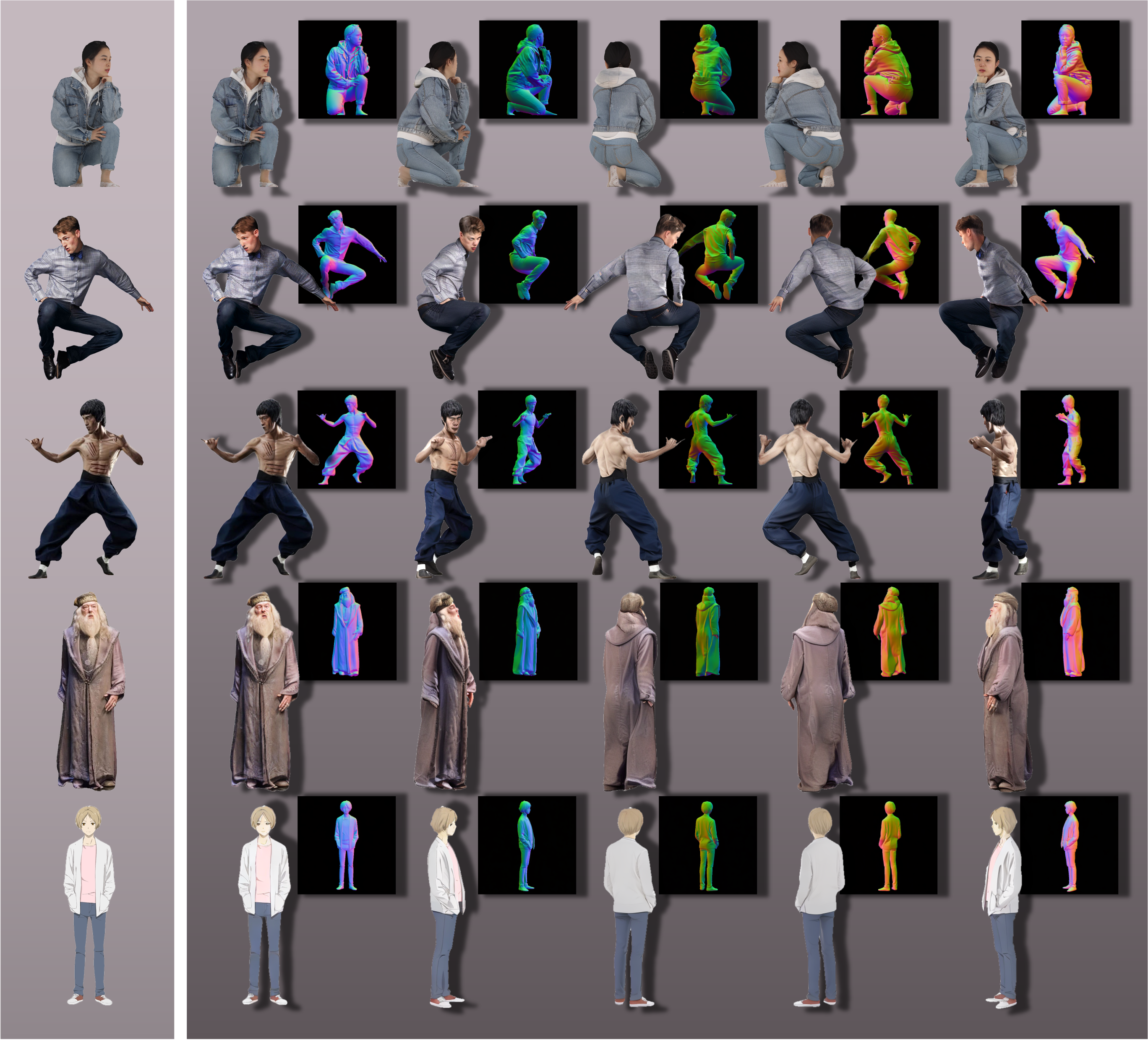

MagicMan: Generative Novel View Synthesis of Humans with 3D-Aware Diffusion and Iterative Refinement

Xu He1 · Xiaoyu Li2 · Di Kang2 · Jiangnan Ye1 · Chaopeng Zhang2 · Liyang Chen1 · Xiangjun Gao3 · Han Zhang4 · Zhiyong Wu1,5 · Haolin Zhuang1

1Tsinghua University 2Tencent AI Lab

3The Hong Kong University of Science and Technology

4Stanford University 5The Chinese University of Hong Kong

This repository will contain the official implementation of MagicMan. For more visual results, please check out our project page.

- [2024.09.16] Release inference code and pretrained weights!

- [2024.08.27] Release paper and project page!

- Release reconstruction code.

- Release training code.

| Model | Resolution | #Views | GPU Memory (w/ refinement) | #Training Scans | Datasets |
|---|---|---|---|---|---|
| magicman_base | 512x512 | 20 | 23.5GB | ~2500 | THuman2.1 |
| magicman_plus | 512x512 | 24 | 26.5GB | ~5500 | THuman2.1, CustomHumans, 2K2K, CityuHuman |

Currently, we provide two versions of models: a base model trained on ~2500 scans to generate 20 views and an enhanced model trained on ~5500 scans to generate 24 views.
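As a rough aid for choosing between the two versions, the sketch below filters them by the GPU memory figures reported in the table. The helper name `models_that_fit` is hypothetical and not part of this repository, and the figures are the reported values with refinement enabled, so actual usage on other hardware may differ.

```python
# Peak GPU memory with refinement, copied from the model table (in GB).
MODEL_MEMORY_GB = {"magicman_base": 23.5, "magicman_plus": 26.5}


def models_that_fit(available_gb):
    """Return the model versions whose reported memory fits in available_gb."""
    return [name for name, need in MODEL_MEMORY_GB.items() if need <= available_gb]


if __name__ == "__main__":
    # Example: list the versions that fit on a card with 24 GB of memory.
    print(models_that_fit(24.0))
```

Treat the result as a first filter only; leaving some headroom above the reported figure is a reasonable precaution.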

Models can be downloaded here. Download pretrained_weights and one version of magicman_{version}, then place them under ./ckpt as follows:

|--- ckpt/

| |--- pretrained_weights/

| |--- magic_base/ or magic_plus/
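Before running inference, it can help to confirm that the folders are in place. The following is a minimal sketch, not part of the repository: the helper `missing_ckpt_dirs` is hypothetical, and the folder name `magic_base` is taken from the tree above, so adjust it if your download uses a different name.

```python
from pathlib import Path


def missing_ckpt_dirs(ckpt_root, model_dir):
    """Return the expected sub-directories that are absent under ckpt_root."""
    root = Path(ckpt_root)
    expected = ["pretrained_weights", model_dir]
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    # "magic_base" mirrors the tree shown above; this name is an assumption.
    missing = missing_ckpt_dirs("./ckpt", "magic_base")
    if missing:
        print("Missing under ./ckpt:", ", ".join(missing))
    else:
        print("Checkpoint layout looks complete.")
```

The check only looks at directory names; it does not verify that the weight files inside them are complete.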

git clone https://github.com/thuhcsi/MagicMan.git

cd MagicMan

# Create conda environment

conda create -n magicman python=3.10

conda activate magicman

# Install PyTorch and other dependencies

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

pip install -r requirements.txt

# Install PyTorch3D

pip install "git+https://github.com/facebookresearch/pytorch3d.git"

# Install mmcv-full

pip install "mmcv-full>=1.3.17,<1.6.0" -f https://download.openmmlab.com/mmcv/dist/cu117/torch2.0.1/index.html

# Install mmhuman3d

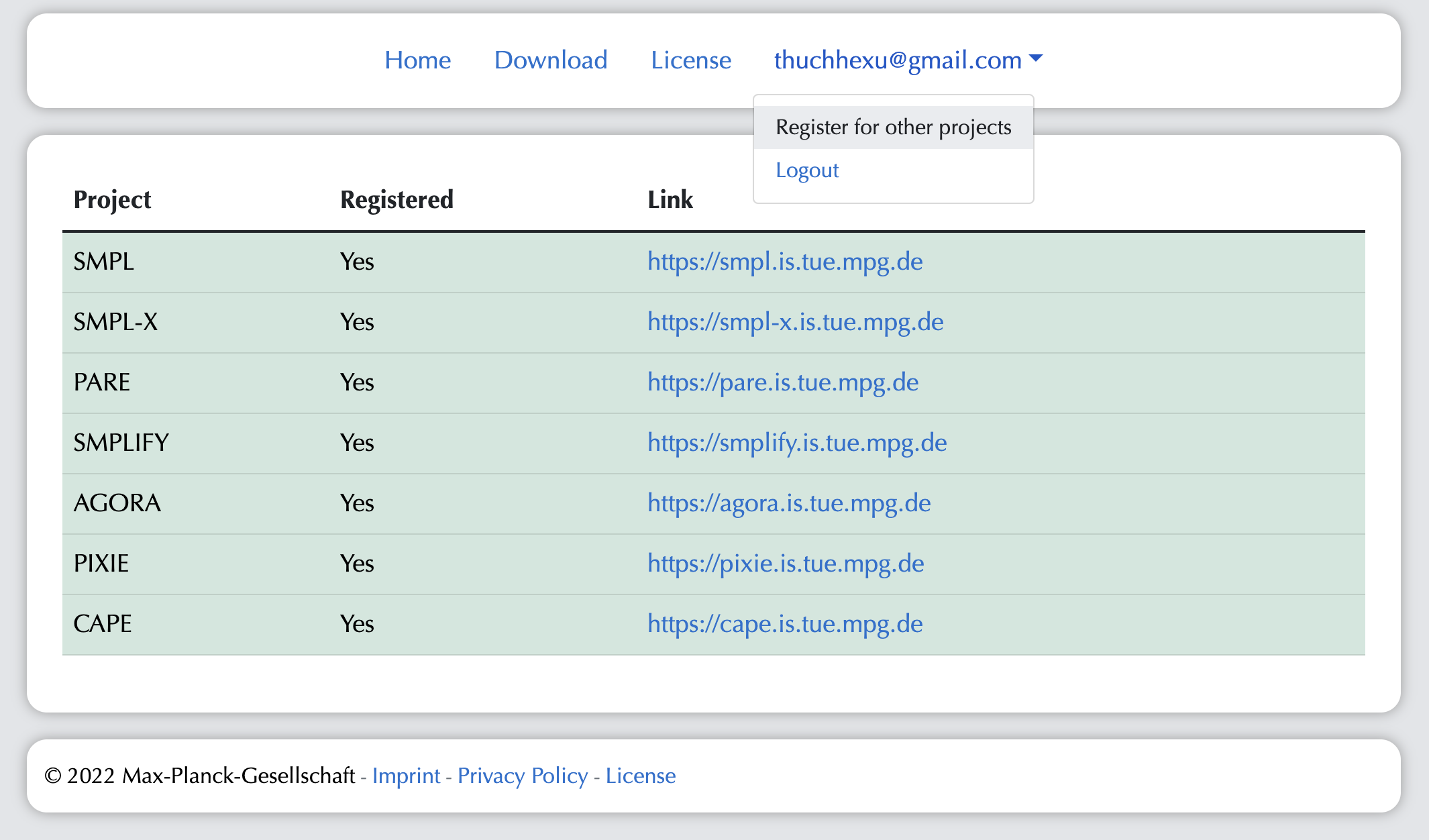

pip install "git+https://github.com/open-mmlab/mmhuman3d.git"Register at ICON's website.

Click Register now on all dependencies, then you can download them all using ONE account with:

cd ./thirdparties/econ

bash fetch_data.sh

Required models and extra data are from SMPL-X, PIXIE, PyMAF-X, and ECON.

Please consider citing these awesome works if they also help your project.

@inproceedings{SMPL-X:2019,

title = {Expressive Body Capture: 3D Hands, Face, and Body from a Single Image},

author = {Pavlakos, Georgios and Choutas, Vasileios and Ghorbani, Nima and Bolkart, Timo and Osman, Ahmed A. A. and Tzionas, Dimitrios and Black, Michael J.},

booktitle = {Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},

year = {2019}

}

@inproceedings{PIXIE:2021,

title={Collaborative Regression of Expressive Bodies using Moderation},

author={Yao Feng and Vasileios Choutas and Timo Bolkart and Dimitrios Tzionas and Michael J. Black},

booktitle={International Conference on 3D Vision (3DV)},

year={2021}

}

@article{pymafx2023,

title={PyMAF-X: Towards Well-aligned Full-body Model Regression from Monocular Images},

author={Zhang, Hongwen and Tian, Yating and Zhang, Yuxiang and Li, Mengcheng and An, Liang and Sun, Zhenan and Liu, Yebin},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2023}

}

@inproceedings{pymaf2021,

title={PyMAF: 3D Human Pose and Shape Regression with Pyramidal Mesh Alignment Feedback Loop},

author={Zhang, Hongwen and Tian, Yating and Zhou, Xinchi and Ouyang, Wanli and Liu, Yebin and Wang, Limin and Sun, Zhenan},

booktitle={Proceedings of the IEEE International Conference on Computer Vision},

year={2021}

}

@inproceedings{xiu2023econ,

title = {{ECON: Explicit Clothed humans Optimized via Normal integration}},

author = {Xiu, Yuliang and Yang, Jinlong and Cao, Xu and Tzionas, Dimitrios and Black, Michael J.},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

}

python inference.py --config configs/inference/inference-{version}.yaml --input_path {input_image_path} --output_path {output_dir_path} --seed 42 --device cuda:0

# e.g.,

python inference.py --config configs/inference/inference-base.yaml --input_path examples/001.jpg --output_path examples/001 --seed 42 --device cuda:0
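To process a folder of images, the command above can be wrapped in a small driver script. This is a sketch under stated assumptions, not a script shipped with the repository: `build_command` and `run_folder` are hypothetical helpers, the script is assumed to run from the repository root, and it assumes `inference.py` handles one input image per call, as in the example. Only the flags shown above are used.

```python
import subprocess
import sys
from pathlib import Path


def build_command(version, input_path, output_path, seed=42, device="cuda:0"):
    """Assemble the inference command using only the flags documented above."""
    return [
        sys.executable, "inference.py",
        "--config", f"configs/inference/inference-{version}.yaml",
        "--input_path", str(input_path),
        "--output_path", str(output_path),
        "--seed", str(seed),
        "--device", device,
    ]


def run_folder(version, image_dir, output_root):
    """Run inference once per .jpg in image_dir, one output folder per image."""
    for image in sorted(Path(image_dir).glob("*.jpg")):
        # Mirror the example: examples/001.jpg -> <output_root>/001
        out_dir = Path(output_root) / image.stem
        subprocess.run(build_command(version, image, out_dir), check=True)


if __name__ == "__main__":
    run_folder("base", "examples", "examples")
```

Because `check=True` is set, the loop stops at the first image whose run fails, which makes problems easier to spot than silently continuing.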

Our code follows several excellent repositories. We thank their authors for making their code publicly available.

- Moore-AnimateAnyone.

- ECON.

- HumanGaussian. Thanks to the authors of HumanGaussian for additional advice and help!

If you find our work useful, please consider citing:

@misc{he2024magicman,

title={MagicMan: Generative Novel View Synthesis of Humans with 3D-Aware Diffusion and Iterative Refinement},

author={Xu He and Xiaoyu Li and Di Kang and Jiangnan Ye and Chaopeng Zhang and Liyang Chen and Xiangjun Gao and Han Zhang and Zhiyong Wu and Haolin Zhuang},

year={2024},

eprint={2408.14211},

archivePrefix={arXiv},

primaryClass={cs.CV}

}